FreeRTOS Study Notes: Queues

Queue basics

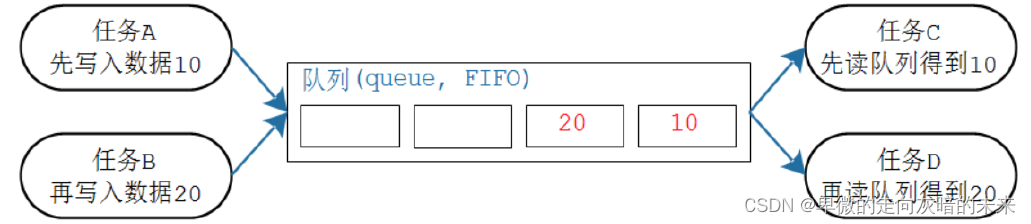

Queue characteristics

- 数据的操作采用先进先出的方法(FIFO)

- 每个数据大小固定,队列中有若干项(长度)

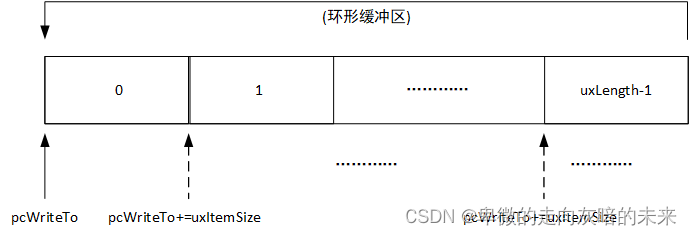

- 往头部写数据时并不会覆盖头部数据,只是向后挪动(环形缓冲区)

The queue control structure in the official FreeRTOS source (queue.c) is:

```c
typedef struct QueueDefinition /* The old naming convention is used to prevent breaking kernel aware debuggers. */
{
    int8_t * pcHead;    // start address of the queue storage buffer; never changes
    int8_t * pcWriteTo; // where the next item written to the back of the queue goes

    union
    {
        QueuePointers_t xQueue;     /*< Data required exclusively when this structure is used as a queue. */
        SemaphoreData_t xSemaphore; /*< Data required exclusively when this structure is used as a semaphore. */
    } u;

    List_t xTasksWaitingToSend;    // tasks blocked waiting to write because the queue is full
    List_t xTasksWaitingToReceive; // tasks blocked waiting to read because the queue is empty

    volatile UBaseType_t uxMessagesWaiting; // number of items currently in the queue
    UBaseType_t uxLength;                   // queue length: maximum number of items
    UBaseType_t uxItemSize;                 // size of each item in bytes

    volatile int8_t cRxLock; /*< Stores the number of items received from the queue (removed from the queue) while the queue was locked. Set to queueUNLOCKED when the queue is not locked. */
    volatile int8_t cTxLock; /*< Stores the number of items transmitted to the queue (added to the queue) while the queue was locked. Set to queueUNLOCKED when the queue is not locked. */

    #if ( ( configSUPPORT_STATIC_ALLOCATION == 1 ) && ( configSUPPORT_DYNAMIC_ALLOCATION == 1 ) )
        uint8_t ucStaticallyAllocated; /*< Set to pdTRUE if the memory used by the queue was statically allocated to ensure no attempt is made to free the memory. */
    #endif

    #if ( configUSE_QUEUE_SETS == 1 )
        struct QueueDefinition * pxQueueSetContainer;
    #endif

    #if ( configUSE_TRACE_FACILITY == 1 )
        UBaseType_t uxQueueNumber;
        uint8_t ucQueueType;
    #endif
} xQUEUE;
```

Data can be written to the back (tail) or to the front (head) of a queue. A third mode, queueOVERWRITE (used by xQueueOverwrite()), takes the same path as front writes. The official source is:

```c
static BaseType_t prvCopyDataToQueue( Queue_t * const pxQueue,
                                      const void * pvItemToQueue,
                                      const BaseType_t xPosition )
{
    BaseType_t xReturn = pdFALSE;
    UBaseType_t uxMessagesWaiting;

    /* This function is called from a critical section. */

    uxMessagesWaiting = pxQueue->uxMessagesWaiting;

    if( pxQueue->uxItemSize == ( UBaseType_t ) 0 )
    {
        #if ( configUSE_MUTEXES == 1 )
            {
                if( pxQueue->uxQueueType == queueQUEUE_IS_MUTEX )
                {
                    /* The mutex is no longer being held. */
                    xReturn = xTaskPriorityDisinherit( pxQueue->u.xSemaphore.xMutexHolder );
                    pxQueue->u.xSemaphore.xMutexHolder = NULL;
                }
                else
                {
                    mtCOVERAGE_TEST_MARKER();
                }
            }
        #endif /* configUSE_MUTEXES */
    }
    else if( xPosition == queueSEND_TO_BACK )
    {
        ( void ) memcpy( ( void * ) pxQueue->pcWriteTo, pvItemToQueue, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 !e418 !e9087 MISRA exception as the casts are only redundant for some ports, plus previous logic ensures a null pointer can only be passed to memcpy() if the copy size is 0. Cast to void required by function signature and safe as no alignment requirement and copy length specified in bytes. */
        pxQueue->pcWriteTo += pxQueue->uxItemSize; /*lint !e9016 Pointer arithmetic on char types ok, especially in this use case where it is the clearest way of conveying intent. */

        if( pxQueue->pcWriteTo >= pxQueue->u.xQueue.pcTail ) /*lint !e946 MISRA exception justified as comparison of pointers is the cleanest solution. */
        {
            pxQueue->pcWriteTo = pxQueue->pcHead;
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }
    else
    {
        ( void ) memcpy( ( void * ) pxQueue->u.xQueue.pcReadFrom, pvItemToQueue, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 !e9087 !e418 MISRA exception as the casts are only redundant for some ports. Cast to void required by function signature and safe as no alignment requirement and copy length specified in bytes. Assert checks null pointer only used when length is 0. */
        pxQueue->u.xQueue.pcReadFrom -= pxQueue->uxItemSize;

        if( pxQueue->u.xQueue.pcReadFrom < pxQueue->pcHead ) /*lint !e946 MISRA exception justified as comparison of pointers is the cleanest solution. */
        {
            pxQueue->u.xQueue.pcReadFrom = ( pxQueue->u.xQueue.pcTail - pxQueue->uxItemSize );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        if( xPosition == queueOVERWRITE )
        {
            if( uxMessagesWaiting > ( UBaseType_t ) 0 )
            {
                /* An item is not being added but overwritten, so subtract
                 * one from the recorded number of items in the queue so when
                 * one is added again below the number of recorded items remains
                 * correct. */
                --uxMessagesWaiting;
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }

    pxQueue->uxMessagesWaiting = uxMessagesWaiting + ( UBaseType_t ) 1;

    return xReturn;
}
```

Writing to the back of the queue proceeds as shown in the figure below:

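Each write to the back copies the data first and then advances pcWriteTo by uxItemSize. When pcWriteTo reaches pcTail, it wraps back to pcHead.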

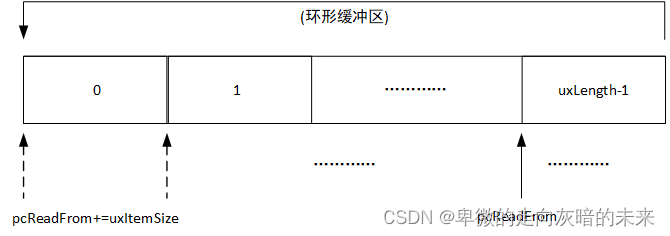

Writing to the front of the queue proceeds as shown in the figure below:

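Each write to the front first copies the data into the slot that pcReadFrom currently points to (the slot read last, which is free) and then moves pcReadFrom back by uxItemSize. If pcReadFrom falls below pcHead, it wraps to pcTail - uxItemSize. The next read advances pcReadFrom again and therefore returns the item just written.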

Reading data from the queue works as follows:

```c
typedef struct QueuePointers
{
    int8_t * pcTail;     // end of the queue storage buffer (one byte past the last slot)
    int8_t * pcReadFrom; // the slot that was read last
} QueuePointers_t;
```

xQueueReceive() (excerpt) and the helper it calls:

```c
BaseType_t xQueueReceive( QueueHandle_t xQueue,
                          void * const pvBuffer,
                          TickType_t xTicksToWait )
{
    /* ... */

    for( ; ; )
    {
        taskENTER_CRITICAL();
        {
            const UBaseType_t uxMessagesWaiting = pxQueue->uxMessagesWaiting;

            /* Is there data in the queue now? To be running the calling task
             * must be the highest priority task wanting to access the queue. */
            if( uxMessagesWaiting > ( UBaseType_t ) 0 )
            {
                /* Data available, remove one item. */
                prvCopyDataFromQueue( pxQueue, pvBuffer ); // copy the item out
                traceQUEUE_RECEIVE( pxQueue );
                pxQueue->uxMessagesWaiting = uxMessagesWaiting - ( UBaseType_t ) 1; // the item now counts as removed

                /* ... */
            }
            else
            {
                /* ... */
            }
        }
        taskEXIT_CRITICAL();

        /* ... */
    } /*lint -restore */
}

// Advances pcReadFrom, then copies the item out
static void prvCopyDataFromQueue( Queue_t * const pxQueue,
                                  void * const pvBuffer )
{
    if( pxQueue->uxItemSize != ( UBaseType_t ) 0 )
    {
        pxQueue->u.xQueue.pcReadFrom += pxQueue->uxItemSize; /*lint !e9016 Pointer arithmetic on char types ok, especially in this use case where it is the clearest way of conveying intent. */

        if( pxQueue->u.xQueue.pcReadFrom >= pxQueue->u.xQueue.pcTail ) /*lint !e946 MISRA exception justified as use of the relational operator is the cleanest solutions. */
        {
            pxQueue->u.xQueue.pcReadFrom = pxQueue->pcHead;
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        ( void ) memcpy( ( void * ) pvBuffer, ( void * ) pxQueue->u.xQueue.pcReadFrom, ( size_t ) pxQueue->uxItemSize ); /*lint !e961 !e418 !e9087 MISRA exception as the casts are only redundant for some ports. Also previous logic ensures a null pointer can only be passed to memcpy() when the count is 0. Cast to void required by function signature and safe as no alignment requirement and copy length specified in bytes. */
    }
}
```

Each read first advances pcReadFrom by uxItemSize, wrapping back to pcHead when it reaches pcTail, and then copies the item out.
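The pointer arithmetic above can be replayed on a host machine. The following is a minimal sketch, not kernel code. `ModelQueue`, `model_send_back()`, `model_send_front()` and `model_receive()` are hypothetical names. Byte offsets replace the pcHead/pcTail/pcWriteTo/pcReadFrom pointers, and locking, blocking and queueOVERWRITE are left out. The initial positions follow xQueueGenericReset(): pcWriteTo starts at pcHead and pcReadFrom at the last slot.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Host-side model of the FreeRTOS queue ring buffer (illustration only). */
#define MODEL_LEN  4             /* uxLength */
#define MODEL_SIZE sizeof(int)   /* uxItemSize */

typedef struct {
    unsigned char buf[MODEL_LEN * MODEL_SIZE]; /* storage from pcHead up to pcTail */
    size_t write_to;   /* offset standing in for pcWriteTo */
    size_t read_from;  /* offset standing in for pcReadFrom (slot read last) */
    size_t waiting;    /* uxMessagesWaiting */
} ModelQueue;

void model_init(ModelQueue *q)
{
    q->write_to = 0;                              /* pcWriteTo = pcHead */
    q->read_from = (MODEL_LEN - 1) * MODEL_SIZE;  /* pcReadFrom = last slot */
    q->waiting = 0;
}

/* queueSEND_TO_BACK: copy first, then advance, wrapping at pcTail. */
int model_send_back(ModelQueue *q, int item)
{
    if (q->waiting == MODEL_LEN) return 0;        /* full: a real task would block */
    memcpy(q->buf + q->write_to, &item, MODEL_SIZE);
    q->write_to += MODEL_SIZE;
    if (q->write_to >= sizeof q->buf) q->write_to = 0;
    q->waiting++;
    return 1;
}

/* queueSEND_TO_FRONT: copy into the slot read last, then step back one
 * slot, wrapping to pcTail - uxItemSize. */
int model_send_front(ModelQueue *q, int item)
{
    if (q->waiting == MODEL_LEN) return 0;
    memcpy(q->buf + q->read_from, &item, MODEL_SIZE);
    if (q->read_from == 0) q->read_from = sizeof q->buf - MODEL_SIZE;
    else q->read_from -= MODEL_SIZE;
    q->waiting++;
    return 1;
}

/* Receive: advance first (wrapping to pcHead), then copy out. */
int model_receive(ModelQueue *q, int *out)
{
    if (q->waiting == 0) return 0;                /* empty: a real task would block */
    q->read_from += MODEL_SIZE;
    if (q->read_from >= sizeof q->buf) q->read_from = 0;
    memcpy(out, q->buf + q->read_from, MODEL_SIZE);
    q->waiting--;
    return 1;
}
```

If 1 and 2 are sent to the back and then 0 is sent to the front, the next three receives return 0, 1, 2. The front write lands in the slot just before the oldest item, and no stored data moves.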

Summary:

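- Writing to the back uses pcWriteTo. Writing to the front and reading both use pcReadFrom.

- Both writes and reads copy the data by value. The data can itself be an address, such as a pointer to a string.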
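The difference between queuing data and queuing an address can be shown with plain memcpy(), which is what the kernel uses to move items in and out. This is a hypothetical one-slot sketch: `CopySlot`, `slot_send()` and `slot_receive()` are illustrative names, not FreeRTOS API. The sender changes its buffer after sending. The copied array keeps the old text, while the copied pointer shows the new text.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define SLOT_BYTES 16

typedef struct {
    unsigned char slot[SLOT_BYTES]; /* storage for one item */
    size_t item_size;               /* uxItemSize; must not exceed SLOT_BYTES */
} CopySlot;

/* Copies item_size bytes in, as prvCopyDataToQueue() does. */
void slot_send(CopySlot *s, const void *item)
{
    assert(s->item_size <= SLOT_BYTES);
    memcpy(s->slot, item, s->item_size);
}

/* Copies item_size bytes out, as prvCopyDataFromQueue() does. */
void slot_receive(const CopySlot *s, void *out)
{
    memcpy(out, s->slot, s->item_size);
}
```

If only an address is queued, the sender must keep the pointed-to data valid and unchanged until the receiver has finished with it.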

Blocking access to queues

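When a task reads from or writes to a queue and the operation cannot complete, the task can block, and a timeout can be specified. The task moves to the Ready state as soon as the operation becomes possible; otherwise it stays blocked until the timeout expires.

For example, when a task reads a queue that holds no data, it can enter the Blocked state for up to the specified time. If data arrives, the blocked task becomes Ready. If no data arrives, it also becomes Ready when the time is up, and xQueueReceive() returns pdFALSE. Writing works the same way: a task writing to a full queue blocks until space becomes available or the timeout expires, in which case xQueueSend() returns errQUEUE_FULL.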
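The timeout rule can be modelled on a host machine. The sketch below is an illustration under stated assumptions, not kernel code. `Tick`, `NEVER`, `MODEL_MAX_DELAY` and `model_blocking_receive()` are hypothetical stand-ins for TickType_t, portMAX_DELAY and a call to xQueueReceive() on an empty queue. It assumes a single writer and that data never arrives before the read starts.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t Tick;              /* hypothetical stand-in for TickType_t */
#define NEVER           UINT32_MAX  /* "this event never happens" */
#define MODEL_MAX_DELAY UINT32_MAX  /* stand-in for portMAX_DELAY */

typedef struct {
    int  received;  /* 1: an item was read (pdTRUE); 0: timed out (pdFALSE) */
    Tick ready_at;  /* tick at which the task leaves the Blocked state */
} ReceiveOutcome;

/* A task starts a blocking read at tick `start` on an empty queue;
 * another task writes one item at tick `data_at` (NEVER if it never does). */
ReceiveOutcome model_blocking_receive(Tick start, Tick timeout, Tick data_at)
{
    ReceiveOutcome r;
    /* portMAX_DELAY means "wait forever" when INCLUDE_vTaskSuspend == 1. */
    int forever = (timeout == MODEL_MAX_DELAY);

    if (data_at != NEVER && (forever || data_at - start <= timeout)) {
        r.received = 1;               /* the write unblocks the task */
        r.ready_at = data_at;
    } else if (forever) {
        r.received = 0;               /* stays blocked indefinitely */
        r.ready_at = NEVER;
    } else {
        r.received = 0;               /* the timeout unblocks the task */
        r.ready_at = start + timeout;
    }
    return r;
}
```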

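When several tasks are waiting for data from the same queue, the kernel picks which one moves from the Blocked to the Ready state as follows:

- the highest-priority waiting task;

- among tasks of equal priority, the one that has been waiting longest.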
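This wake-up order follows from how the kernel stores waiting tasks. Each blocked task's event list item is sorted by the value configMAX_PRIORITIES - uxPriority. vListInsert() places a new item after any existing items with the same value, and the task at the head of the list is the one that gets unblocked. The host-side sketch below mimics that rule with an array. `WaitList`, `waitlist_insert()`, `waitlist_pop()` and `MODEL_MAX_PRIORITIES` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_WAITERS 8
#define MODEL_MAX_PRIORITIES 5   /* stand-in for configMAX_PRIORITIES */

typedef struct { int task_id; unsigned priority; } Waiter;
typedef struct { Waiter items[MAX_WAITERS]; size_t count; } WaitList;

/* Sort key as in the kernel: higher priority gives a smaller key. */
static unsigned key_of(const Waiter *w) { return MODEL_MAX_PRIORITIES - w->priority; }

/* Like vListInsert(): go past every item whose key is <= the new key, so
 * tasks of equal priority stay in arrival (FIFO) order. */
void waitlist_insert(WaitList *l, Waiter w)
{
    assert(l->count < MAX_WAITERS);
    size_t pos = 0;
    while (pos < l->count && key_of(&l->items[pos]) <= key_of(&w)) pos++;
    for (size_t i = l->count; i > pos; i--) l->items[i] = l->items[i - 1];
    l->items[pos] = w;
    l->count++;
}

/* Like xTaskRemoveFromEventList(): the head entry is the task unblocked. */
int waitlist_pop(WaitList *l)
{
    assert(l->count > 0);
    int id = l->items[0].task_id;
    for (size_t i = 1; i < l->count; i++) l->items[i - 1] = l->items[i];
    l->count--;
    return id;
}
```

Suppose tasks 1 (priority 1), 2 (priority 3), 3 (priority 1) and 4 (priority 3) start waiting in that order. They are then released in the order 2, 4, 1, 3.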

Basic queue usage

A queue can be created dynamically or statically. The function prototypes are:

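```c
// Dynamic creation
QueueHandle_t xQueueCreate( UBaseType_t uxQueueLength, // queue length (number of items)
                            UBaseType_t uxItemSize );  // size of each item in bytes

// Static creation (requires configSUPPORT_STATIC_ALLOCATION == 1)
QueueHandle_t xQueueCreateStatic( const UBaseType_t uxQueueLength, // queue length (number of items)
                                  const UBaseType_t uxItemSize,    // size of each item in bytes
                                  uint8_t * pucQueueStorage,       // array of at least uxQueueLength * uxItemSize bytes
                                  StaticQueue_t * pxStaticQueue ); // must point to a StaticQueue_t that holds the queue's control structure
```

Example code:

```c
#define QUEUE_LENGTH 10
#define ITEM_SIZE    sizeof( uint8_t ) // choose according to the actual item type

/* Static storage must stay valid for as long as the queue exists. */
StaticQueue_t xQueueBuffer;                         // holds the queue control structure
uint8_t ucQueueStorage[ QUEUE_LENGTH * ITEM_SIZE ]; // the queue's storage buffer

/* Example function name: handles must be created at run time, not in
 * file-scope initializers. */
void vExampleCreateQueues( void )
{
    QueueHandle_t xQueue1 = xQueueCreateStatic( QUEUE_LENGTH,
                                                ITEM_SIZE,
                                                ucQueueStorage,
                                                &xQueueBuffer );

    QueueHandle_t xQueue2 = xQueueCreate( QUEUE_LENGTH,
                                          ITEM_SIZE ); // returns NULL if the heap is exhausted
}
```

The read and write functions are: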

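```c
// Writing
BaseType_t xQueueSend( QueueHandle_t xQueue,
                       const void * const pvItemToQueue,
                       TickType_t xTicksToWait );
/* Implemented as a macro:
 * #define xQueueSend( xQueue, pvItemToQueue, xTicksToWait ) \
 *     xQueueGenericSend( ( xQueue ), ( pvItemToQueue ), ( xTicksToWait ), queueSEND_TO_BACK )
 */

BaseType_t xQueueSendToBack( QueueHandle_t xQueue,
                             const void * const pvItemToQueue,
                             TickType_t xTicksToWait );
/* Implemented as a macro:
 * #define xQueueSendToBack( xQueue, pvItemToQueue, xTicksToWait ) \
 *     xQueueGenericSend( ( xQueue ), ( pvItemToQueue ), ( xTicksToWait ), queueSEND_TO_BACK )
 */

BaseType_t xQueueSendToFront( QueueHandle_t xQueue,
                              const void * const pvItemToQueue,
                              TickType_t xTicksToWait );
/* Implemented as a macro:
 * #define xQueueSendToFront( xQueue, pvItemToQueue, xTicksToWait ) \
 *     xQueueGenericSend( ( xQueue ), ( pvItemToQueue ), ( xTicksToWait ), queueSEND_TO_FRONT )
 */

// Reading
BaseType_t xQueueReceive( QueueHandle_t xQueue,
                          void * const pvBuffer,
                          TickType_t xTicksToWait );
```

xQueue is the queue to read from or write to. pvItemToQueue points to the item to copy into the queue, and pvBuffer points to the buffer the item is copied out to. xTicksToWait is the maximum time to block, measured in ticks: 0 returns immediately, and portMAX_DELAY waits indefinitely when INCLUDE_vTaskSuspend is 1. All of these functions return pdTRUE on success. On timeout, the send functions return errQUEUE_FULL and xQueueReceive() returns pdFALSE. xQueueSend() and xQueueSendToBack() are equivalent.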
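Because xTicksToWait counts ticks rather than milliseconds, FreeRTOS provides the pdMS_TO_TICKS() macro for the conversion. The host sketch below reproduces its integer arithmetic in a hypothetical `ms_to_ticks()` function, which makes the truncation easy to see. `TickModel` is an assumed stand-in for a 32-bit TickType_t.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t TickModel;   /* hypothetical stand-in for a 32-bit TickType_t */

/* Same integer arithmetic as pdMS_TO_TICKS():
 * ticks = ms * configTICK_RATE_HZ / 1000, truncated toward zero. */
TickModel ms_to_ticks(TickModel ms, TickModel tick_rate_hz)
{
    return (TickModel)((ms * tick_rate_hz) / 1000U);
}
```

At configTICK_RATE_HZ = 100, a 5 ms timeout truncates to 0 ticks, so the call does not block at all. Likewise, vTaskDelay(10) in the queue-set example below means 10 ticks, which equals 10 ms only at a 1000 Hz tick rate.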

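Using queues for synchronization and mutual exclusion

Basic principle: blocking access to queues.

Example code for synchronization using a queue: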

```c
#include <stdio.h>
#include "FreeRTOS.h"
#include "task.h"
#include "queue.h"
/* plus the board project's peripheral-library and serial-port headers */

/*
 * Configure the clocks, GPIO and other peripherals as required by the demo.
 */
static void prvSetupHardware( void );

/*-----------------------------------------------------------*/

void vmainTask1Function( void * param );
void vmainTask2Function( void * param );

/*-----------------------------------------------------------*/

/* Flags showing which task is currently running */
UBaseType_t task1Run = 0;
UBaseType_t task2Run = 0;
UBaseType_t taskidleRun = 0;

TaskHandle_t xTask1Handle, xTask2Handle;

int main( void )
{
    QueueHandle_t xQueueHandle;

#ifdef DEBUG
    debug();
#endif

    prvSetupHardware();
    printf( "Hello world!\r\n" );

    xQueueHandle = xQueueCreate( 1, sizeof( int ) );
    if( 0 == xQueueHandle ) {
        printf( "create queue failed!\r\n" );
        return -1; /* cannot continue without the queue */
    }

    xTaskCreate( vmainTask1Function, "task1", 100, ( void * ) xQueueHandle, 1, &xTask1Handle ); // create the task dynamically
    xTaskCreate( vmainTask2Function, "task2", 100, ( void * ) xQueueHandle, 1, &xTask2Handle ); // create the task dynamically

    /* Start the scheduler. */
    vTaskStartScheduler();

    /* Will only get here if there was not enough heap space to create the
       idle task. */
    return 0;
}

/*-----------------------------------------------------------*/

static void prvSetupHardware( void )
{
    /* Start with the clocks in their expected state. */
    RCC_DeInit();

    /* Enable HSE (high speed external clock). */
    RCC_HSEConfig( RCC_HSE_ON );

    /* Wait till HSE is ready. */
    while( RCC_GetFlagStatus( RCC_FLAG_HSERDY ) == RESET )
    {
    }

    /* 2 wait states required on the flash. */
    *( ( unsigned long * ) 0x40022000 ) = 0x02;

    /* HCLK = SYSCLK */
    RCC_HCLKConfig( RCC_SYSCLK_Div1 );

    /* PCLK2 = HCLK */
    RCC_PCLK2Config( RCC_HCLK_Div1 );

    /* PCLK1 = HCLK/2 */
    RCC_PCLK1Config( RCC_HCLK_Div2 );

    /* PLLCLK = 8MHz * 9 = 72 MHz. */
    RCC_PLLConfig( RCC_PLLSource_HSE_Div1, RCC_PLLMul_9 );

    /* Enable PLL. */
    RCC_PLLCmd( ENABLE );

    /* Wait till PLL is ready. */
    while( RCC_GetFlagStatus( RCC_FLAG_PLLRDY ) == RESET )
    {
    }

    /* Select PLL as system clock source. */
    RCC_SYSCLKConfig( RCC_SYSCLKSource_PLLCLK );

    /* Wait till PLL is used as system clock source. */
    while( RCC_GetSYSCLKSource() != 0x08 )
    {
    }

    /* Enable GPIOA, GPIOB, GPIOC, GPIOD, GPIOE and AFIO clocks */
    RCC_APB2PeriphClockCmd( RCC_APB2Periph_GPIOA | RCC_APB2Periph_GPIOB | RCC_APB2Periph_GPIOC
                            | RCC_APB2Periph_GPIOD | RCC_APB2Periph_GPIOE | RCC_APB2Periph_AFIO, ENABLE );

    /* SPI2 Periph clock enable */
    RCC_APB1PeriphClockCmd( RCC_APB1Periph_SPI2, ENABLE );

    /* Set the Vector Table base address at 0x08000000 */
    NVIC_SetVectorTable( NVIC_VectTab_FLASH, 0x0 );

    NVIC_PriorityGroupConfig( NVIC_PriorityGroup_4 );

    /* Configure HCLK clock as SysTick clock source. */
    SysTick_CLKSourceConfig( SysTick_CLKSource_HCLK );

    serialPortInit();
}

void vmainTask1Function( void * param )
{
    volatile int i;
    int sum = 0;

    while( 1 ) {
        /* Long computation */
        for( i = 0; i < 1000000; i++ ) {
            sum += 1;
            task1Run = 1;
            task2Run = 0;
            taskidleRun = 0;
        }
        /* Hand the result to task 2 (blocks while the queue is full) */
        xQueueSend( ( QueueHandle_t ) param, &sum, portMAX_DELAY );
    }
}

void vmainTask2Function( void * param )
{
    int readVal;

    while( 1 ) {
        task1Run = 0;
        task2Run = 1;
        taskidleRun = 0;
        /* Blocks until task 1 has sent a result */
        xQueueReceive( ( QueueHandle_t ) param, &readVal, portMAX_DELAY );
        printf( "sum = %d\r\n", readVal );
    }
}

void vApplicationIdleHook( void )
{
    task1Run = 0;
    task2Run = 0;
    taskidleRun = 1;
}
```

The example dynamically creates a queue. Task 1 computes a sum and, when it finishes, writes the value to the queue. Task 2 reads task 1's result from the queue. While task 1 is still computing, the queue is empty, so task 2's blocking read keeps it in the Blocked state. When task 1 finishes and data appears in the queue, task 2 moves from Blocked to Ready. (A task leaves the Blocked state once the event it is waiting for occurs; see the article FreeRTOS Study Notes: Task Management.) The next time task 2 is scheduled, it enters the Running state, copies the item out and removes it from the queue. The experimental result is shown in the figure below:

Example code for mutual exclusion using a queue:

```c
static void prvSetupHardware( void ); /* same as in the synchronization example */
void vmainTask3Function( void * param );
void vmainTask4Function( void * param );
QueueHandle_t initLock( void );
void getLock( QueueHandle_t handle );
void putLock( QueueHandle_t handle );

int main( void )
{
    QueueHandle_t xQueueLockHandle;

    prvSetupHardware();
    printf( "Hello world!\r\n" );

    xQueueLockHandle = initLock();
    if( NULL == xQueueLockHandle ) {
        return -1; /* cannot continue without the lock queue */
    }

    xTaskCreate( vmainTask3Function, "task3", 100, ( void * ) xQueueLockHandle, 1, NULL ); // create the task dynamically
    xTaskCreate( vmainTask4Function, "task4", 100, ( void * ) xQueueLockHandle, 1, NULL ); // create the task dynamically

    /* Start the scheduler. */
    vTaskStartScheduler();

    /* Will only get here if there was not enough heap space to create the
       idle task. */
    return 0;
}

/*-----------------------------------------------------------*/

/* prvSetupHardware() is identical to the one in the synchronization example. */

QueueHandle_t initLock( void )
{
    QueueHandle_t xQueueHandle;
    int val = 0; // the value is irrelevant: the item itself is the lock token

    xQueueHandle = xQueueCreate( 1, sizeof( int ) );
    if( 0 == xQueueHandle ) {
        printf( "create queue failed!\r\n" );
        return 0;
    }

    xQueueSend( xQueueHandle, &val, portMAX_DELAY ); // start with one token: the lock is free
    return xQueueHandle;
}

void getLock( QueueHandle_t handle )
{
    int val;
    xQueueReceive( handle, &val, portMAX_DELAY ); // after the read the queue is empty: the lock is held
}

void putLock( QueueHandle_t handle )
{
    int val = 0;
    xQueueSend( handle, &val, portMAX_DELAY ); // put the token back: the lock is free
}

void vmainTask3Function( void * param )
{
    while( 1 ) {
        getLock( ( QueueHandle_t ) param );
        printf( "3\r\n" );
        putLock( ( QueueHandle_t ) param );
        taskYIELD();
    }
}

void vmainTask4Function( void * param )
{
    while( 1 ) {
        getLock( ( QueueHandle_t ) param );
        printf( "4\r\n" );
        // Before the release, task 3 is blocked because the queue is empty
        putLock( ( QueueHandle_t ) param );
        // After the release, task 3 is Ready and task 4 is still Running
        taskYIELD();
    }
}
```

The principle is the same as for synchronization. Two points need attention. First, the queue length is 1, so its single item acts as the lock token. Second, each task calls taskYIELD() after releasing the lock to give up the CPU. Both tasks have the same priority, so when putLock() unblocks the other task, that task does not preempt the current one. Without the yield, the releasing task would usually loop around and take the lock again before the other task got to run. In the experiment below, tasks 3 and 4 take turns using the serial port.
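The token idea can also be tried outside FreeRTOS. The sketch below swaps in POSIX threads. A pthread mutex and condition variable stand in for the kernel's critical section and its list of blocked tasks, and a counter of 0 or 1 plays the part of uxMessagesWaiting in a queue of length 1. `TokenQueue`, `init_lock()`, `get_lock()`, `put_lock()` and `worker()` are hypothetical names mirroring initLock()/getLock()/putLock() above, and sched_yield() takes the place of taskYIELD(). Compile with -pthread where your toolchain requires it.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stddef.h>

typedef struct {
    pthread_mutex_t m;         /* stands in for the kernel critical section */
    pthread_cond_t  nonempty;  /* stands in for xTasksWaitingToReceive */
    int tokens;                /* uxMessagesWaiting of a length-1 queue: 0 or 1 */
} TokenQueue;

void init_lock(TokenQueue *q)   /* like initLock(): create, then send one item */
{
    pthread_mutex_init(&q->m, NULL);
    pthread_cond_init(&q->nonempty, NULL);
    q->tokens = 1;
}

void get_lock(TokenQueue *q)    /* like xQueueReceive(..., portMAX_DELAY) */
{
    pthread_mutex_lock(&q->m);
    while (q->tokens == 0)      /* queue empty: block until a token is sent */
        pthread_cond_wait(&q->nonempty, &q->m);
    q->tokens--;
    pthread_mutex_unlock(&q->m);
}

void put_lock(TokenQueue *q)    /* like xQueueSend(): only the holder sends */
{
    pthread_mutex_lock(&q->m);
    assert(q->tokens == 0);     /* length 1: the queue cannot already be full */
    q->tokens++;
    pthread_cond_signal(&q->nonempty);  /* unblock one waiting thread */
    pthread_mutex_unlock(&q->m);
}

typedef struct { TokenQueue *lock; long *counter; int rounds; } Worker;

void *worker(void *arg)         /* each thread plays task 3 or task 4 */
{
    Worker *w = arg;
    for (int i = 0; i < w->rounds; i++) {
        get_lock(w->lock);
        long v = *w->counter;   /* read-modify-write guarded by the token */
        *w->counter = v + 1;
        put_lock(w->lock);
        sched_yield();          /* plays the role of taskYIELD() */
    }
    return NULL;
}
```

Two threads running `worker()` 10000 times each should leave the counter at exactly 20000, with the token back in the queue.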

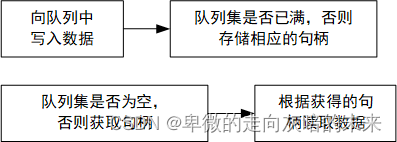

Queue sets

A queue set lets a task receive data from several queues without polling each queue in turn to find which one, if any, contains data.

When a queue set is used, reading and writing proceed as shown in the figure below:

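In short, writing to a member queue N times also writes to the queue set N times. It follows that the queue set stores the handles of the queues that contain data. For the same reason, the set's length should be the sum of the lengths of all its member queues, so that the set can never overflow.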
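This bookkeeping can be replayed with plain arrays. In the host-side sketch below, all names are hypothetical and not the FreeRTOS API. Every successful write to a member queue also appends that queue's index to the set, and `set_select()` plays the role of xQueueSelectFromSet(). The set's capacity is the sum of the member lengths, so appending to it can never fail.

```c
#include <assert.h>
#include <stddef.h>

#define Q_LEN    2                    /* each member: xQueueCreate(2, sizeof(int)) */
#define N_QUEUES 2
#define SET_LEN  (Q_LEN * N_QUEUES)   /* like xQueueCreateSet(4) */

typedef struct { int items[Q_LEN]; size_t head, count; } IntQueue;
typedef struct { int ids[SET_LEN]; size_t head, count; } QueueSetModel;

/* Generic circular FIFO helpers over an int array. */
static int fifo_push(int *buf, size_t cap, size_t *head, size_t *count, int v)
{
    if (*count == cap) return 0;
    buf[(*head + *count) % cap] = v;
    (*count)++;
    return 1;
}

static int fifo_pop(int *buf, size_t cap, size_t *head, size_t *count, int *out)
{
    if (*count == 0) return 0;
    *out = buf[*head];
    *head = (*head + 1) % cap;
    (*count)--;
    return 1;
}

/* Writing to a member queue also posts that queue's index to the set. */
int set_send(IntQueue qs[], QueueSetModel *set, int id, int value)
{
    assert(id >= 0 && id < N_QUEUES);
    if (!fifo_push(qs[id].items, Q_LEN, &qs[id].head, &qs[id].count, value))
        return 0;                     /* member queue full */
    int ok = fifo_push(set->ids, SET_LEN, &set->head, &set->count, id);
    assert(ok);                       /* cannot overflow: SET_LEN is the total capacity */
    (void)ok;
    return 1;
}

/* Like xQueueSelectFromSet(): index of a queue holding data, or -1 if none. */
int set_select(QueueSetModel *set)
{
    int id;
    return fifo_pop(set->ids, SET_LEN, &set->head, &set->count, &id) ? id : -1;
}

int queue_receive(IntQueue *q, int *out)
{
    return fifo_pop(q->items, Q_LEN, &q->head, &q->count, out);
}
```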

The example code follows. Before using queue sets, set configUSE_QUEUE_SETS to 1 in FreeRTOSConfig.h.

```c
#include <stdio.h>
#include "FreeRTOS.h"
#include "task.h"
#include "queue.h"
/* plus the board project's peripheral-library and serial-port headers */

/*
 * Configure the clocks, GPIO and other peripherals as required by the demo.
 */
static void prvSetupHardware( void );

/*-----------------------------------------------------------*/

void vmainTask1Function( void * param );
void vmainTask2Function( void * param );
void vmainTask3Function( void * param );

/*-----------------------------------------------------------*/

int main( void )
{
    QueueHandle_t queueHandle1, queueHandle2;
    QueueSetHandle_t queueSetHandle;

    prvSetupHardware();
    printf( "Hello world!\r\n" );

    /* 1. Create the queues */
    queueHandle1 = xQueueCreate( 2, sizeof( int ) );
    queueHandle2 = xQueueCreate( 2, sizeof( int ) );

    /* 2. Create the queue set; its length equals the sum of all member queue lengths */
    queueSetHandle = xQueueCreateSet( 4 );

    /* 3. Add the (still empty) queues to the queue set */
    xQueueAddToSet( queueHandle1, queueSetHandle );
    xQueueAddToSet( queueHandle2, queueSetHandle );

    xTaskCreate( vmainTask1Function, "task1", 100, ( void * ) queueHandle1, 1, NULL );   // create the task dynamically
    xTaskCreate( vmainTask2Function, "task2", 100, ( void * ) queueHandle2, 1, NULL );   // create the task dynamically
    xTaskCreate( vmainTask3Function, "task3", 100, ( void * ) queueSetHandle, 1, NULL ); // create the task dynamically

    /* Start the scheduler. */
    vTaskStartScheduler();

    /* Will only get here if there was not enough heap space to create the
       idle task. */
    return 0;
}

/*-----------------------------------------------------------*/

/* prvSetupHardware() is identical to the one in the synchronization example. */

/*-----------------------------------------------------------*/

void vmainTask1Function( void * param )
{
    int i = 1;

    while( 1 ) {
        xQueueSend( ( QueueHandle_t ) param, &i, portMAX_DELAY ); // writes 1, 2, 3, ...
        i++;
        vTaskDelay( 10 ); // 10 ticks
    }
}

void vmainTask2Function( void * param )
{
    int i = -1;

    while( 1 ) {
        xQueueSend( ( QueueHandle_t ) param, &i, portMAX_DELAY ); // writes -1, -2, -3, ...
        i--;
        vTaskDelay( 5 ); // 5 ticks
    }
}

void vmainTask3Function( void * param )
{
    int readVal;
    QueueHandle_t queueHandleRead;

    while( 1 ) {
        /* 1. Get a queue that holds data from the queue set; block if there is none */
        queueHandleRead = xQueueSelectFromSet( ( QueueSetHandle_t ) param, portMAX_DELAY );

        /* 2. Read from that queue; the set guarantees it holds data, so no block time is needed */
        xQueueReceive( queueHandleRead, &readVal, 0 );
        printf( "get data = %d\r\n", readVal );
    }
}
```