PyTorch Reinforcement Learning (1): DQN & SARSA

Published: December 17, 2023

Environment

python=3.10

torch=2.1.1

gym=0.26.2

gym[classic_control]

matplotlib=3.8.0

numpy=1.26.2

DQN code

First comes module.py, which defines the network model and the DQN model:

```python
import torch
import torch.nn as nn
import numpy as np


class Net(nn.Module):
    # A network with a single hidden layer
    def __init__(self, n_states, n_hidden, n_actions):
        super(Net, self).__init__()
        # [b, n_states] --> [b, n_hidden] --> [b, n_actions]
        self.network = nn.Sequential(
            torch.nn.Linear(n_states, n_hidden),
            torch.nn.ReLU(),
            torch.nn.Linear(n_hidden, n_actions)
        )

    # Forward pass
    def forward(self, x):  # x: [b, n_states]
        return self.network(x)  # Q-value of every action: [b, n_actions]


class DQN:
    def __init__(self, n_states, n_hidden, n_actions, lr, gamma, epsilon):
        # Store hyperparameters
        self.n_states = n_states    # number of state features
        self.n_hidden = n_hidden    # number of neurons in the hidden layer
        self.n_actions = n_actions  # number of actions
        self.lr = lr                # learning rate used for training
        self.gamma = gamma          # discount factor applied to the next state's value
        self.epsilon = epsilon      # probability of acting greedily; explores with probability 1 - epsilon
        # Iteration counter (not used below)
        self.count = 0
        # The Q-network being trained
        self.q_net = Net(self.n_states, self.n_hidden, self.n_actions)
        # Optimizer that updates the Q-network's parameters
        self.optimizer = torch.optim.Adam(self.q_net.parameters(), lr=lr)
        self.criterion = torch.nn.MSELoss()  # loss function

    def choose_action(self, gym_state):
        state = torch.as_tensor(gym_state, dtype=torch.float32)
        if np.random.random() < self.epsilon:
            # Greedy: q_net(state) predicts the value of each action in this state
            with torch.no_grad():
                action_values = self.q_net(state)
            action = action_values.argmax().item()
        else:
            # Explore: pick a random action
            action = np.random.randint(self.n_actions)
        return action

    def update(self, gym_state, action, reward, next_gym_state, done):
        state = torch.as_tensor(gym_state, dtype=torch.float32)
        next_state = torch.as_tensor(next_gym_state, dtype=torch.float32)
        q_value = self.q_net(state)[action]
        # The TD target is a fixed label, so no gradient may flow through it.
        # Never drop done: if the episode ends at the next step, the next state's Q-value must be 0.
        with torch.no_grad():
            q_target = reward + self.gamma * self.q_net(next_state).max() * (1 - float(done))
        self.optimizer.zero_grad()
        dqn_loss = self.criterion(q_value, q_target)
        dqn_loss.backward()
        self.optimizer.step()
```
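Note that `epsilon` in this code is the probability of acting greedily, the reverse of the more common convention where ε is the exploration rate. The minimal pure-Python sketch below reproduces the same selection rule with a plain list standing in for `q_net(state)`; the function name `epsilon_greedy` and its `rng` argument are hypothetical. With `epsilon = 0.8` and two actions, the greedy action should be picked about 0.8 + 0.2 × 1/2 = 90% of the time, because a random pick can also land on it.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Same convention as the article's choose_action (hypothetical helper):
    act greedily with probability epsilon, otherwise pick uniformly at random."""
    if rng.random() < epsilon:
        # Greedy branch: index of the largest Q-value
        return max(range(len(q_values)), key=lambda a: q_values[a])
    # Exploration branch: uniform over all actions (may still hit the greedy one)
    return rng.randrange(len(q_values))


# Estimate how often the greedy action (index 1) is chosen with epsilon = 0.8
rng = random.Random(0)
picks = [epsilon_greedy([0.1, 0.7], 0.8, rng) for _ in range(10_000)]
greedy_share = picks.count(1) / len(picks)
print(f"greedy action chosen {greedy_share:.1%} of the time")
```

If you prefer the usual reading of ε as the exploration rate, swap the comparison to `rng.random() < 1 - epsilon` and pass `epsilon = 0.2` for the same behavior.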

Next comes train.py, which uses the DQN model and the gym environment for training:

```python
import gym
import matplotlib.pyplot as plt
from module import DQN

lr = 1e-3        # learning rate
gamma = 0.95     # discount factor
epsilon = 0.8    # probability of taking the greedy action
n_hidden = 200   # number of neurons in the hidden layer

env = gym.make("CartPole-v1")
n_states = env.observation_space.shape[0]  # 4
n_actions = env.action_space.n             # 2 actions
dqn = DQN(n_states, n_hidden, n_actions, lr, gamma, epsilon)

if __name__ == '__main__':
    reward_list = []
    for i in range(500):
        state = env.reset()[0]  # reset() returns (observation, info); len(observation) = 4
        total_reward = 0
        while True:
            # Choose the action to take in the current state
            action = dqn.choose_action(state)
            # Step the environment; gym 0.26 returns (obs, reward, terminated, truncated, info)
            next_state, reward, terminated, truncated, _ = env.step(action)
            # Only a real terminal state zeroes the next Q-value; a time-limit cutoff does not
            dqn.update(state, action, reward, next_state, terminated)
            state = next_state
            total_reward += reward
            # CartPole-v1 truncates episodes at 500 steps, so stop on either flag
            if terminated or truncated:
                break
        print("Episode %d, total_reward=%f" % (i, total_reward))
        reward_list.append(total_reward)

    # Plot the return of each episode
    episodes_list = list(range(len(reward_list)))
    plt.plot(episodes_list, reward_list)
    plt.xlabel('Episodes')
    plt.ylabel('Returns')
    plt.title('DQN Returns')
    plt.show()
```
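In gym 0.26, `env.step` reports two separate end-of-episode flags, and `CartPole-v1` sets `truncated` once its 500-step limit is reached. If the loop breaks only on `terminated`, a perfect episode never ends; if `truncated` is fed into the TD mask, a time-limit cutoff is wrongly treated as a failure state. The sketch below isolates that loop logic with a hypothetical `ToyEnv` that only mimics the 0.26 `reset`/`step` return shapes, so it runs without gym or PyTorch; `run_episode` and its callbacks are likewise illustrative names, not part of the original code.

```python
class ToyEnv:
    """Hypothetical stand-in for a gym 0.26 environment:
    reset() -> (obs, info), step() -> (obs, reward, terminated, truncated, info)."""

    def __init__(self, fail_at=None, max_steps=5):
        self.fail_at = fail_at      # step at which the episode really ends (None = never)
        self.max_steps = max_steps  # time limit, playing the role of CartPole-v1's 500 steps

    def reset(self):
        self.t = 0
        return 0, {}

    def step(self, action):
        self.t += 1
        terminated = self.fail_at is not None and self.t >= self.fail_at
        truncated = self.t >= self.max_steps and not terminated
        return self.t, 1.0, terminated, truncated, {}


def run_episode(env, choose_action, update):
    """Same loop shape as train.py, with the two end-of-episode flags kept apart."""
    state, _ = env.reset()
    total_reward = 0.0
    while True:
        action = choose_action(state)
        next_state, reward, terminated, truncated, _ = env.step(action)
        # Only a true terminal state should zero the bootstrap term in the TD target
        update(state, action, reward, next_state, terminated)
        state = next_state
        total_reward += reward
        if terminated or truncated:  # stop on either flag
            break
    return total_reward


# Record the done flag that the update step would receive
flags = []
print(run_episode(ToyEnv(max_steps=5), lambda s: 0, lambda *t: flags.append(t[-1])))
print(flags)
```

Here the episode is cut off by the time limit, so it returns `5.0` while every flag passed to the update is `False`: the bootstrap term is never zeroed.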

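SARSA code

Again we start with module.py, which defines the network model and the SARSA model.

SARSA is essentially the same as DQN. The only difference is how the TD target for the Q-network update is built, and this is marked with a comment at the relevant place in the code.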
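Concretely, DQN bootstraps from the best action in the next state, while SARSA bootstraps from the action its own ε-greedy policy picks there. A minimal pure-Python sketch of the two targets, with a list standing in for `q_net(next_state)` (both function names are hypothetical):

```python
def dqn_target(reward, gamma, next_q_values, done):
    # Off-policy: bootstrap from the highest Q-value in the next state
    return reward + gamma * max(next_q_values) * (1.0 - float(done))


def sarsa_target(reward, gamma, next_q_values, next_action, done):
    # On-policy: bootstrap from the action the epsilon-greedy policy chose
    return reward + gamma * next_q_values[next_action] * (1.0 - float(done))


next_q = [0.5, 2.0]
print(dqn_target(1.0, 0.9, next_q, done=False))                   # uses Q = 2.0
print(sarsa_target(1.0, 0.9, next_q, next_action=0, done=False))  # uses Q = 0.5
```

When the policy happens to pick the greedy action, the two targets coincide; they differ only on exploratory steps, which is why SARSA learns the value of the exploring policy itself.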

```python
import torch
import torch.nn as nn
import numpy as np


class Net(nn.Module):
    # A network with a single hidden layer
    def __init__(self, n_states, n_hidden, n_actions):
        super(Net, self).__init__()
        # [b, n_states] --> [b, n_hidden] --> [b, n_actions]
        self.network = nn.Sequential(
            torch.nn.Linear(n_states, n_hidden),
            torch.nn.ReLU(),
            torch.nn.Linear(n_hidden, n_actions)
        )

    # Forward pass
    def forward(self, x):  # x: [b, n_states]
        return self.network(x)  # Q-value of every action: [b, n_actions]


class SARSA:
    def __init__(self, n_states, n_hidden, n_actions, lr, gamma, epsilon):
        # Store hyperparameters
        self.n_states = n_states    # number of state features
        self.n_hidden = n_hidden    # number of neurons in the hidden layer
        self.n_actions = n_actions  # number of actions
        self.lr = lr                # learning rate used for training
        self.gamma = gamma          # discount factor applied to the next state's value
        self.epsilon = epsilon      # probability of acting greedily; explores with probability 1 - epsilon
        # Iteration counter (not used below)
        self.count = 0
        # The Q-network being trained
        self.q_net = Net(self.n_states, self.n_hidden, self.n_actions)
        # Optimizer that updates the Q-network's parameters
        self.optimizer = torch.optim.Adam(self.q_net.parameters(), lr=lr)
        self.criterion = torch.nn.MSELoss()  # loss function

    def choose_action(self, gym_state):
        state = torch.as_tensor(gym_state, dtype=torch.float32)
        # Depending on epsilon, act greedily or take a random action
        if np.random.random() < self.epsilon:
            # q_net(state) predicts the value of each action in the current state
            with torch.no_grad():
                action_values = self.q_net(state)
            action = action_values.argmax().item()
        else:
            # Explore: pick a random action
            action = np.random.randint(self.n_actions)
        return action

    def update(self, gym_state, action, reward, next_gym_state, done):
        state = torch.as_tensor(gym_state, dtype=torch.float32)
        next_state = torch.as_tensor(next_gym_state, dtype=torch.float32)
        q_value = self.q_net(state)[action]
        '''
        When updating the network, SARSA bootstraps from q_net(next_state)[next_action],
        where next_action is chosen by the same epsilon-greedy policy.
        This is the only difference from DQN, which bootstraps from max(q_net(next_state)).
        '''
        next_action = self.choose_action(next_state)
        # Never drop done: if the episode ends at the next step, the next state's Q-value
        # must be 0 rather than whatever the Q-network outputs. The target carries no gradient.
        with torch.no_grad():
            q_target = reward + self.gamma * self.q_net(next_state)[next_action] * (1 - float(done))
        self.optimizer.zero_grad()
        sarsa_loss = self.criterion(q_value, q_target)
        sarsa_loss.backward()
        self.optimizer.step()
```

SARSA also has a train.py file. It does the same job as the DQN one and its content is almost identical (DQN is simply renamed to SARSA), so it is not repeated here.
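One subtlety: in the code above, `update` samples `next_action` internally, and the training loop then calls `choose_action` again for the step it actually executes, so the action in the target is not necessarily the one taken. Both are drawn from the same ε-greedy policy, so the target is still an on-policy sample, but textbook SARSA picks a′ once and then executes it. The minimal sketch below shows that loop shape under stated assumptions: it uses a tabular Q (a dict) and the update `q += alpha * (target - q)` in place of the network and Adam so it runs without PyTorch or gym, and `ChainEnv`, `choose_action`, and `train_sarsa` are hypothetical names.

```python
import random


class ChainEnv:
    """Hypothetical corridor with states 0..n-1, using gym 0.26-style return values.
    Action 1 moves right, action 0 moves left; reaching state n-1 terminates with reward 1."""

    def __init__(self, n=5, max_steps=100):
        self.n, self.max_steps = n, max_steps

    def reset(self):
        self.s, self.t = 0, 0
        return self.s, {}

    def step(self, action):
        self.t += 1
        self.s = min(self.s + 1, self.n - 1) if action == 1 else max(self.s - 1, 0)
        terminated = self.s == self.n - 1
        truncated = self.t >= self.max_steps and not terminated
        return self.s, (1.0 if terminated else 0.0), terminated, truncated, {}


def choose_action(q, s, epsilon, rng):
    # Article's convention: greedy with probability epsilon (ties broken randomly), random otherwise
    if rng.random() < epsilon:
        best = max(q[(s, a)] for a in (0, 1))
        return rng.choice([a for a in (0, 1) if q[(s, a)] == best])
    return rng.randrange(2)


def train_sarsa(episodes=200, alpha=0.1, gamma=0.9, epsilon=0.8, seed=0):
    rng = random.Random(seed)
    env = ChainEnv()
    q = {(s, a): 0.0 for s in range(env.n) for a in (0, 1)}  # tabular Q instead of a network
    for _ in range(episodes):
        s, _ = env.reset()
        a = choose_action(q, s, epsilon, rng)
        while True:
            s2, r, terminated, truncated, _ = env.step(a)
            a2 = choose_action(q, s2, epsilon, rng)  # next action, picked once...
            target = r + gamma * q[(s2, a2)] * (1.0 - float(terminated))
            q[(s, a)] += alpha * (target - q[(s, a)])
            s, a = s2, a2  # ...and actually executed on the next step
            if terminated or truncated:
                break
    return q


q = train_sarsa()
# Greedy action in each non-terminal state after training (1 = move right)
print({s: max((0, 1), key=lambda a: q[(s, a)]) for s in range(4)})
```

To apply the same idea to the network version, `update` would take `next_action` as an argument and the training loop would reuse it as the next step's action instead of calling `choose_action` twice.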

运行结果

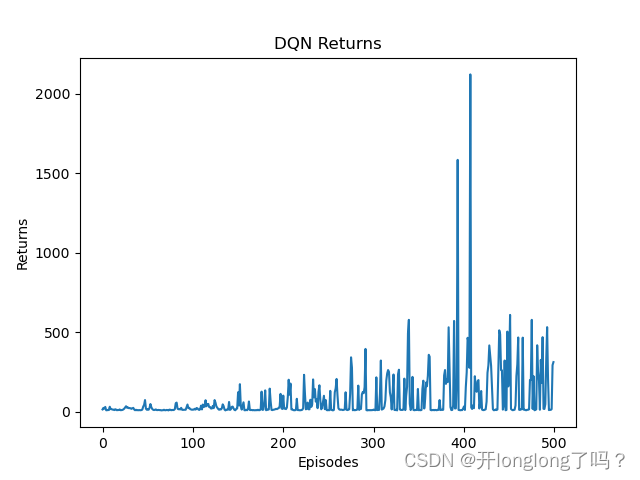

DQN的运行结果如下:

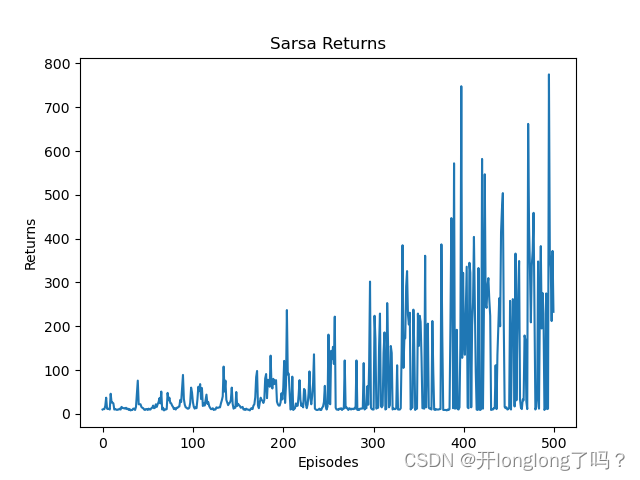

SARSA运行结果如下:

Source: https://blog.csdn.net/m0_52744273/article/details/134968432

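This article was submitted by an Internet user. The views expressed are solely the author's and do not represent the position of this site. This site only provides information storage space, does not own the rights, and bears no related legal responsibility. If any content infringes rights, violates laws or regulations, or is inaccurate, please contact 我的编程经验分享网 at chenni525@qq.com to file a complaint; once verified, it will be removed immediately.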

