Design Pattern: Two-Phase Predictions

As Machine Learning models grow more sophisticated, their complexity can become a double-edged sword. While they deliver superior accuracy, their computational demands pose a challenge when deploying them on resource-constrained edge devices. This is where the Two-Phase Predictions design pattern shines, offering a way to unleash the power of complex models on the edge while keeping things lightweight and efficient.

The Dilemma: Performance vs. Power

Imagine training a cutting-edge image recognition model to identify endangered species in real time on a wildlife drone. The model's accuracy is crucial, but running it directly on the drone's limited hardware is simply not feasible. This is where Two-Phase Predictions come to the rescue.

Dividing and Conquering: The Two-Phase Approach

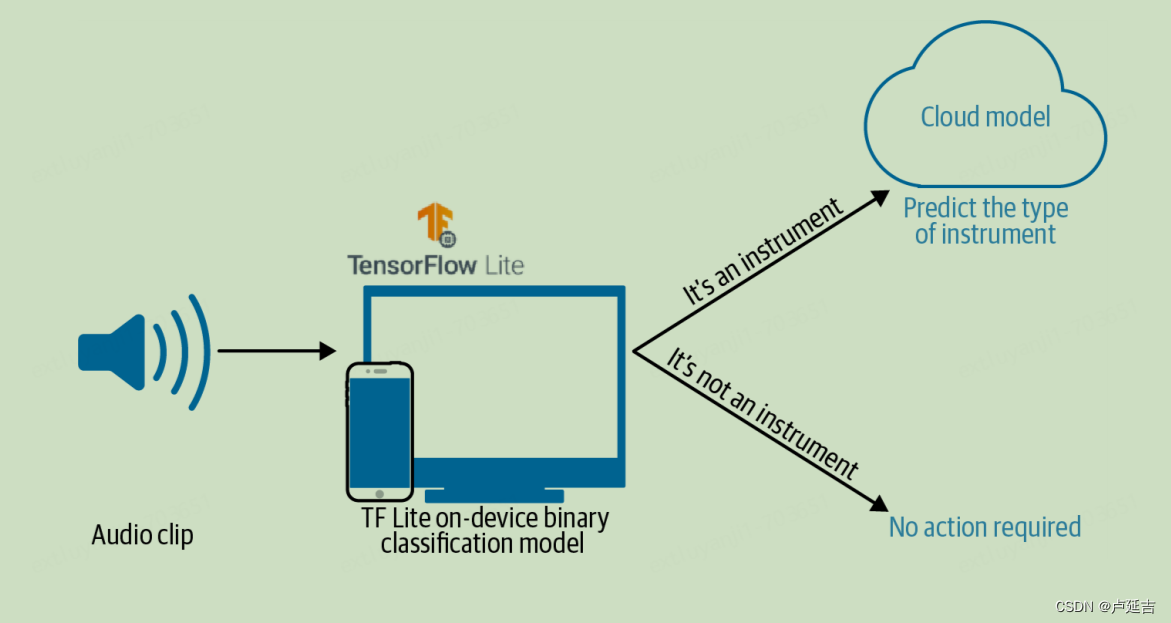

This design pattern cleverly splits the prediction process into two stages:

Phase 1: Local Filtering (Lightweight Model)

A smaller, less complex model runs directly on the edge device. This "local filter" performs a quick and efficient first-pass assessment, potentially filtering out the vast majority of irrelevant inputs. For example, the drone's model might first identify objects resembling animals before analyzing them further for specific endangered species.

Phase 2: Cloud Consultation (Complex Model)

The filtered and prioritized inputs are then sent to a more powerful model residing in the cloud. This "cloud consultant" leverages its full capabilities to deliver the final, highly accurate predictions. Because only a fraction of the inputs reaches the cloud, the network round trip and the cloud compute cost are paid only for that fraction, so the overall cost and average latency remain manageable.
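The two phases above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `two_phase_predict`, `toy_edge_score`, `toy_cloud_predict`, the `0.5` threshold, and the labels are all hypothetical stand-ins, not part of any particular library. A real system would replace the toy functions with an on-device model and a call to a cloud-hosted model.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Prediction:
    label: str
    source: str  # "edge" if the local filter decided, "cloud" otherwise


def two_phase_predict(
    x: Sequence[float],
    edge_score: Callable[[Sequence[float]], float],
    cloud_predict: Callable[[Sequence[float]], str],
    threshold: float = 0.5,  # hypothetical cut-off for "worth sending to the cloud"
) -> Prediction:
    """Run the cheap edge model first; call the cloud model only if the input passes."""
    # Phase 1: lightweight local filter.
    if edge_score(x) < threshold:
        return Prediction(label="irrelevant", source="edge")
    # Phase 2: the complex model sees only the inputs that passed the filter.
    return Prediction(label=cloud_predict(x), source="cloud")


# Toy stand-ins for the two models (assumptions for illustration only).
def toy_edge_score(x: Sequence[float]) -> float:
    # Pretend the mean feature value is an "animal-likeness" score.
    return sum(x) / len(x)


def toy_cloud_predict(x: Sequence[float]) -> str:
    # Pretend a strong peak feature indicates a specific species.
    return "snow_leopard" if max(x) > 0.9 else "other_animal"


if __name__ == "__main__":
    for sample in ([0.1, 0.2, 0.1], [0.95, 0.8, 0.7], [0.6, 0.6, 0.6]):
        print(sample, two_phase_predict(sample, toy_edge_score, toy_cloud_predict))
```

The threshold is the main tuning knob in this sketch: raising it sends fewer inputs to the cloud but risks discarding relevant ones at the edge.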

The Benefits of Two-Phase Predictions

This approach offers a multitude of advantages:

- Faster Edge Inference: The lightweight local model ensures quick first-pass assessments, minimizing processing time on the edge device.

- Reduced Cloud Load: By filtering out irrelevant inputs, the cloud model receives a smaller workload, leading to improved scalability and cost efficiency.

- Flexibility: Different models can be chosen for each phase, tailoring the solution to specific needs and resource constraints.

- Offline Functionality: Even in disconnected environments, the local model can still operate, providing basic predictions until reconnection is established.
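The offline behaviour in the last point could look roughly like the sketch below. It assumes the cloud call signals an unreachable network by raising Python's built-in `ConnectionError`. The function names (`predict_with_fallback`, `toy_edge`, `online_cloud`, `offline_cloud`) and the labels are hypothetical, chosen only to illustrate the idea.

```python
from typing import Callable, Sequence, Tuple


def predict_with_fallback(
    x: Sequence[float],
    edge_predict: Callable[[Sequence[float]], str],
    cloud_predict: Callable[[Sequence[float]], str],
) -> Tuple[str, str]:
    """Return (label, source); fall back to the coarse edge label when offline."""
    coarse = edge_predict(x)
    if coarse == "background":
        # Filtered out locally, so no cloud call is attempted.
        return coarse, "edge"
    try:
        return cloud_predict(x), "cloud"
    except ConnectionError:
        # Disconnected: keep the basic edge prediction until the link returns.
        return coarse, "edge-fallback"


# Toy stand-ins (assumptions for illustration only).
def toy_edge(x: Sequence[float]) -> str:
    return "animal" if sum(x) / len(x) >= 0.5 else "background"


def online_cloud(x: Sequence[float]) -> str:
    return "snow_leopard"


def offline_cloud(x: Sequence[float]) -> str:
    raise ConnectionError("no uplink")


if __name__ == "__main__":
    print(predict_with_fallback([0.9, 0.8], toy_edge, online_cloud))
    print(predict_with_fallback([0.9, 0.8], toy_edge, offline_cloud))
    print(predict_with_fallback([0.1, 0.1], toy_edge, offline_cloud))
```

Returning the source alongside the label lets downstream code treat an `"edge-fallback"` result as provisional, for example by queuing the input for a cloud check once connectivity is restored.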

Real-World Applications

Two-Phase Predictions find applications in various scenarios where edge devices need to leverage powerful models:

- IoT devices: Recognizing objects, detecting anomalies, and making crucial decisions at the edge, even with limited resources.

- Autonomous vehicles: Analyzing sensor data for real-time obstacle detection and path planning, while offloading complex processing to the cloud.

- Consumer electronics: Personalizing experiences on smart devices through on-device filtering and cloud-based fine-tuning of recommendations.

Conclusion: Unleashing the Power of Complexity

By cleverly dividing the prediction process, Two-Phase Predictions unlock the potential of complex models on resource-constrained devices. This design pattern empowers us to build smarter, faster, and more efficient intelligent systems at the edge, paving the way for a future where powerful AI seamlessly integrates into our everyday lives.
