SwrResample in FFmpeg


Team blog: Automotive Electronics Community (汽车电子社区)

1. Overview

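Audio applications sometimes have to convert between 44.1 kHz, 48 kHz, 32 kHz, 16 kHz and 8 kHz. This process is called SRC (sample rate conversion). Some products implement SRC in hardware inside the codec chip, others implement it in software.

The basic operations of sample rate conversion are decimation and interpolation. From a signal-processing point of view, audio resampling is filtering: once the window size of the filter and the interpolation function are fixed, the quality of the resampler is fixed as well.

Decimation can cause spectral aliasing, while interpolation produces image (mirror-frequency) components.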

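For this reason an anti-aliasing filter is usually applied before decimation, and an anti-imaging filter after interpolation. In speech recognition, the sample rate required for the speech signal is determined by the input features of the ASR (automatic speech recognition) acoustic model.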
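To make the aliasing point concrete, here is a minimal sketch (not FFmpeg code) that halves the rate of a tone at the Nyquist frequency (+1, -1, +1, ...). Keeping every second sample without filtering turns the tone into a constant DC value of 1, i.e. it aliases. Averaging each pair first, a crude boxcar (moving-average) low-pass used here purely as an assumption for illustration, removes the tone instead. Real resamplers such as libswresample use much sharper FIR filters.

```c
#include <assert.h>

/* Naive decimation: keep every m-th sample, no filtering (prone to aliasing). */
static int decimate_naive(const double *in, int n_in, int m, double *out)
{
    assert(m >= 1 && n_in >= 0);
    int n_out = 0;
    for (int i = 0; i < n_in; i += m)
        out[n_out++] = in[i];
    return n_out;
}

/* Decimation with a crude anti-aliasing filter: average each block of m
 * input samples (boxcar low-pass), then keep one value per block.
 * Trailing samples that do not fill a whole block are dropped. */
static int decimate_boxcar(const double *in, int n_in, int m, double *out)
{
    assert(m >= 1 && n_in >= 0);
    int n_out = 0;
    for (int i = 0; i + m <= n_in; i += m) {
        double sum = 0.0;
        for (int j = 0; j < m; j++)
            sum += in[i + j];
        out[n_out++] = sum / m;
    }
    return n_out;
}
```

With `in = {1, -1, 1, -1, ...}` and `m = 2`, `decimate_naive()` yields all 1.0 while `decimate_boxcar()` yields all 0.0.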

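FFmpeg provides its resampling functions in the libswresample module. Conceptually, resampling first reconstructs the original (continuous) audio signal from its samples and then samples it again at the new rate. Resampling is either upsampling, which requires interpolation, or downsampling, which requires decimation. Converting from a higher to a lower sample rate is lossy. FFmpeg offers several options and algorithms for resampling.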
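The simplest form of interpolation is linear interpolation between neighbouring samples. The sketch below is only meant to illustrate the idea of computing a fractional source position for each output sample; libswresample itself uses a polyphase windowed-sinc filter bank, not this. The function name `resample_linear()` is hypothetical, and no separate anti-imaging filter is applied.

```c
#include <assert.h>
#include <stdint.h>

/* Resample `in` (n_in samples at in_rate) to out_rate by linear
 * interpolation. Returns the number of samples written to `out`, which
 * must hold at least n_in * out_rate / in_rate samples. */
static int resample_linear(const double *in, int n_in, int in_rate,
                           int out_rate, double *out)
{
    assert(n_in > 0 && in_rate > 0 && out_rate > 0);
    int n_out = (int)((int64_t)n_in * out_rate / in_rate);
    for (int k = 0; k < n_out; k++) {
        /* Source position k * in_rate / out_rate, split into an integer
         * index and a fraction using exact integer arithmetic. */
        int64_t num = (int64_t)k * in_rate;
        int i = (int)(num / out_rate);
        double frac = (double)(num % out_rate) / out_rate;
        int next = (i + 1 < n_in) ? i + 1 : n_in - 1; /* clamp at the end */
        out[k] = in[i] * (1.0 - frac) + in[next] * frac;
    }
    return n_out;
}
```

Doubling the rate of `{0, 2, 4, 6}` produces `{0, 1, 2, 3, 4, 5, 6, 6}`; the last value repeats because there is no sample after the end to interpolate towards.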

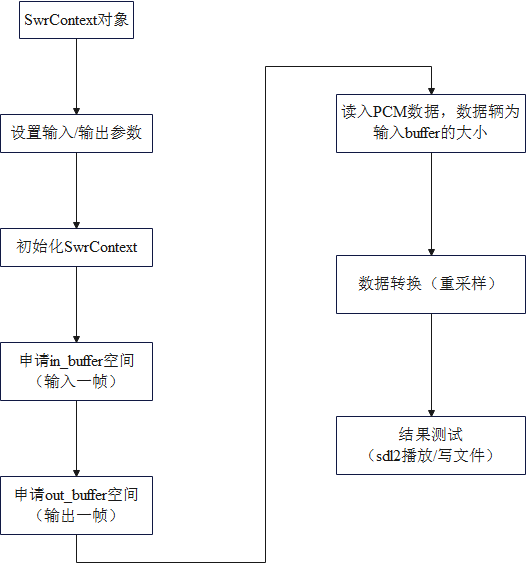

2. Resampling Workflow

3. Important Structures

3.1 SwrContext

```c
struct SwrContext {
    const AVClass *av_class;                        ///< AVClass used for AVOption and av_log()
    int log_level_offset;                           ///< logging level offset
    void *log_ctx;                                  ///< parent logging context
    enum AVSampleFormat in_sample_fmt;              ///< input sample format
    enum AVSampleFormat int_sample_fmt;             ///< internal sample format (AV_SAMPLE_FMT_FLTP or AV_SAMPLE_FMT_S16P)
    enum AVSampleFormat out_sample_fmt;             ///< output sample format
    AVChannelLayout used_ch_layout;                 ///< number of used input channels (mapped channel count if channel_map, otherwise in.ch_count)
    AVChannelLayout in_ch_layout;                   ///< input channel layout
    AVChannelLayout out_ch_layout;                  ///< output channel layout
    int in_sample_rate;                             ///< input sample rate
    int out_sample_rate;                            ///< output sample rate
    int flags;                                      ///< miscellaneous flags such as SWR_FLAG_RESAMPLE
    float slev;                                     ///< surround mixing level
    float clev;                                     ///< center mixing level
    float lfe_mix_level;                            ///< LFE mixing level
    float rematrix_volume;                          ///< rematrixing volume coefficient
    float rematrix_maxval;                          ///< maximum value for rematrixing output
    int matrix_encoding;                            /**< matrixed stereo encoding */
    const int *channel_map;                         ///< channel index (or -1 if muted channel) map
    int engine;

#if FF_API_OLD_CHANNEL_LAYOUT
    int user_used_ch_count;                         ///< User set used channel count
    int user_in_ch_count;                           ///< User set input channel count
    int user_out_ch_count;                          ///< User set output channel count
    int64_t user_in_ch_layout;                      ///< User set input channel layout
    int64_t user_out_ch_layout;                     ///< User set output channel layout
#endif
    AVChannelLayout user_used_chlayout;             ///< User set used channel layout
    AVChannelLayout user_in_chlayout;               ///< User set input channel layout
    AVChannelLayout user_out_chlayout;              ///< User set output channel layout
    enum AVSampleFormat user_int_sample_fmt;        ///< User set internal sample format
    int user_dither_method;                         ///< User set dither method

    struct DitherContext dither;

    int filter_size;                                /**< length of each FIR filter in the resampling filterbank relative to the cutoff frequency */
    int phase_shift;                                /**< log2 of the number of entries in the resampling polyphase filterbank */
    int linear_interp;                              /**< if 1 then the resampling FIR filter will be linearly interpolated */
    int exact_rational;                             /**< if 1 then enable non power of 2 phase_count */
    double cutoff;                                  /**< resampling cutoff frequency (swr: 6dB point; soxr: 0dB point). 1.0 corresponds to half the output sample rate */
    int filter_type;                                /**< swr resampling filter type */
    double kaiser_beta;                             /**< swr beta value for Kaiser window (only applicable if filter_type == AV_FILTER_TYPE_KAISER) */
    double precision;                               /**< soxr resampling precision (in bits) */
    int cheby;                                      /**< soxr: if 1 then passband rolloff will be none (Chebyshev) & irrational ratio approximation precision will be higher */

    float min_compensation;                         ///< swr minimum below which no compensation will happen
    float min_hard_compensation;                    ///< swr minimum below which no silence inject / sample drop will happen
    float soft_compensation_duration;               ///< swr duration over which soft compensation is applied
    float max_soft_compensation;                    ///< swr maximum soft compensation in seconds over soft_compensation_duration
    float async;                                    ///< swr simple 1 parameter async, similar to ffmpegs -async
    int64_t firstpts_in_samples;                    ///< swr first pts in samples

    int resample_first;                             ///< 1 if resampling must come first, 0 if rematrixing
    int rematrix;                                   ///< flag to indicate if rematrixing is needed (basically if input and output layouts mismatch)
    int rematrix_custom;                            ///< flag to indicate that a custom matrix has been defined

    AudioData in;                                   ///< input audio data
    AudioData postin;                               ///< post-input audio data: used for rematrix/resample
    AudioData midbuf;                               ///< intermediate audio data (postin/preout)
    AudioData preout;                               ///< pre-output audio data: used for rematrix/resample
    AudioData out;                                  ///< converted output audio data
    AudioData in_buffer;                            ///< cached audio data (convert and resample purpose)
    AudioData silence;                              ///< temporary with silence
    AudioData drop_temp;                            ///< temporary used to discard output
    int in_buffer_index;                            ///< cached buffer position
    int in_buffer_count;                            ///< cached buffer length
    int resample_in_constraint;                     ///< 1 if the input end was reach before the output end, 0 otherwise
    int flushed;                                    ///< 1 if data is to be flushed and no further input is expected
    int64_t outpts;                                 ///< output PTS
    int64_t firstpts;                               ///< first PTS
    int drop_output;                                ///< number of output samples to drop
    double delayed_samples_fixup;                   ///< soxr 0.1.1: needed to fixup delayed_samples after flush has been called.

    struct AudioConvert *in_convert;                ///< input conversion context
    struct AudioConvert *out_convert;               ///< output conversion context
    struct AudioConvert *full_convert;              ///< full conversion context (single conversion for input and output)
    struct ResampleContext *resample;               ///< resampling context
    struct Resampler const *resampler;              ///< resampler virtual function table

    double matrix[SWR_CH_MAX][SWR_CH_MAX];          ///< floating point rematrixing coefficients
    float matrix_flt[SWR_CH_MAX][SWR_CH_MAX];       ///< single precision floating point rematrixing coefficients
    uint8_t *native_matrix;
    uint8_t *native_one;
    uint8_t *native_simd_one;
    uint8_t *native_simd_matrix;
    int32_t matrix32[SWR_CH_MAX][SWR_CH_MAX];       ///< 17.15 fixed point rematrixing coefficients
    uint8_t matrix_ch[SWR_CH_MAX][SWR_CH_MAX+1];    ///< Lists of input channels per output channel that have non zero rematrixing coefficients
    mix_1_1_func_type *mix_1_1_f;
    mix_1_1_func_type *mix_1_1_simd;
    mix_2_1_func_type *mix_2_1_f;
    mix_2_1_func_type *mix_2_1_simd;
    mix_any_func_type *mix_any_f;

    /* TODO: callbacks for ASM optimizations */
};
```

3.2 ResampleContext

ResampleContext is the resampling context structure, declared in libswresample/resample.h:

```c
typedef struct ResampleContext {
    const AVClass *av_class;
    uint8_t *filter_bank;
    int filter_length;
    int filter_alloc;
    int ideal_dst_incr;
    int dst_incr;
    int dst_incr_div;
    int dst_incr_mod;
    int index;
    int frac;
    int src_incr;
    int compensation_distance;
    int phase_count;
    int linear;
    enum SwrFilterType filter_type;
    double kaiser_beta;
    double factor;
    enum AVSampleFormat format;
    int felem_size;
    int filter_shift;
    int phase_count_compensation; /* desired phase_count when compensation is enabled */

    struct {
        void (*resample_one)(void *dst, const void *src,
                             int n, int64_t index, int64_t incr);
        int (*resample_common)(struct ResampleContext *c, void *dst,
                               const void *src, int n, int update_ctx);
        int (*resample_linear)(struct ResampleContext *c, void *dst,
                               const void *src, int n, int update_ctx);
    } dsp;
} ResampleContext;
```

Here, SwrFilterType is the enumeration of resampling filter types:

```c
enum SwrFilterType {
    SWR_FILTER_TYPE_CUBIC,              // cubic interpolation
    SWR_FILTER_TYPE_BLACKMAN_NUTTALL,   // Blackman-Nuttall windowed sinc
    SWR_FILTER_TYPE_KAISER,             // Kaiser windowed sinc
};
```

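AVSampleFormat enumerates the audio sample formats, i.e. the sample type and its quantization precision (bit depth). The more bits per sample, the more precisely each value is represented and the more accurately the sound is reproduced. FFmpeg defines the following formats, each with a fixed number of bytes per sample: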

```c
enum AVSampleFormat {
    AV_SAMPLE_FMT_NONE = -1,
    AV_SAMPLE_FMT_U8,          ///< unsigned 8 bits
    AV_SAMPLE_FMT_S16,         ///< signed 16 bits
    AV_SAMPLE_FMT_S32,         ///< signed 32 bits
    AV_SAMPLE_FMT_FLT,         ///< float
    AV_SAMPLE_FMT_DBL,         ///< double

    AV_SAMPLE_FMT_U8P,         ///< unsigned 8 bits, planar
    AV_SAMPLE_FMT_S16P,        ///< signed 16 bits, planar
    AV_SAMPLE_FMT_S32P,        ///< signed 32 bits, planar
    AV_SAMPLE_FMT_FLTP,        ///< float, planar
    AV_SAMPLE_FMT_DBLP,        ///< double, planar
    AV_SAMPLE_FMT_S64,         ///< signed 64 bits
    AV_SAMPLE_FMT_S64P,        ///< signed 64 bits, planar

    AV_SAMPLE_FMT_NB           ///< Number of sample formats. DO NOT USE if linking dynamically
};
```
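The "P" suffix marks planar formats: each channel lives in its own buffer (`L L L ...` and `R R R ...`), whereas packed (interleaved) formats store all channels in one buffer (`L R L R ...`). The hypothetical helper below, which is not an FFmpeg function, shows where sample `i` of channel `ch` sits in each case. For a planar format the offset is inside plane `ch` (`data[ch]` in FFmpeg's terms); for a packed format it is inside the single plane `data[0]`.

```c
#include <assert.h>
#include <stddef.h>

/* Byte offset of sample `i` of channel `ch` within the buffer that holds it.
 * planar != 0: one buffer per channel, offset is inside plane `ch`.
 * planar == 0: one interleaved buffer for all channels. */
static size_t sample_offset(int planar, int channels, int bytes_per_sample,
                            int ch, int i)
{
    assert(channels > 0 && ch >= 0 && ch < channels && i >= 0);
    assert(bytes_per_sample > 0);
    if (planar)
        return (size_t)i * bytes_per_sample;
    return ((size_t)i * channels + ch) * bytes_per_sample;
}
```

For stereo 16-bit audio, sample 3 of the right channel is at byte 14 of the single buffer with AV_SAMPLE_FMT_S16, but at byte 6 of the right-channel plane with AV_SAMPLE_FMT_S16P.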

4. Important Functions

4.1 swr_alloc

```c
av_cold struct SwrContext *swr_alloc(void){
    SwrContext *s= av_mallocz(sizeof(SwrContext));
    if(s){
        s->av_class= &av_class;
        av_opt_set_defaults(s);
    }
    return s;
}

void av_opt_set_defaults(void *s)
{
    av_opt_set_defaults2(s, 0, 0);
}
```

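This function allocates a zero-initialized SwrContext and sets all of its options to their default values via av_opt_set_defaults().

4.2 swr_alloc_set_opts

swr_alloc_set_opts() sets the essential parameters while creating the SwrContext.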

```c
/**
 * Allocate SwrContext if needed and set/reset common parameters.
 *
 * This function does not require s to be allocated with swr_alloc(). On the
 * other hand, swr_alloc() can use swr_alloc_set_opts() to set the parameters
 * on the allocated context.
 *
 * @param s               existing Swr context if available, or NULL if not
 * @param out_ch_layout   output channel layout (AV_CH_LAYOUT_*)
 * @param out_sample_fmt  output sample format (AV_SAMPLE_FMT_*).
 * @param out_sample_rate output sample rate (frequency in Hz)
 * @param in_ch_layout    input channel layout (AV_CH_LAYOUT_*)
 * @param in_sample_fmt   input sample format (AV_SAMPLE_FMT_*).
 * @param in_sample_rate  input sample rate (frequency in Hz)
 * @param log_offset      logging level offset
 * @param log_ctx         parent logging context, can be NULL
 *
 * @see swr_init(), swr_free()
 * @return NULL on error, allocated context otherwise
 */
#if FF_API_OLD_CHANNEL_LAYOUT
FF_DISABLE_DEPRECATION_WARNINGS
struct SwrContext *swr_alloc_set_opts(struct SwrContext *s,
                                      int64_t out_ch_layout, enum AVSampleFormat out_sample_fmt, int out_sample_rate,
                                      int64_t  in_ch_layout, enum AVSampleFormat  in_sample_fmt, int  in_sample_rate,
                                      int log_offset, void *log_ctx){
    if(!s) s= swr_alloc();
    if(!s) return NULL;

    s->log_level_offset= log_offset;
    s->log_ctx= log_ctx;

    if (av_opt_set_int(s, "ocl", out_ch_layout,   0) < 0)
        goto fail;

    if (av_opt_set_int(s, "osf", out_sample_fmt,  0) < 0)
        goto fail;

    if (av_opt_set_int(s, "osr", out_sample_rate, 0) < 0)
        goto fail;

    if (av_opt_set_int(s, "icl", in_ch_layout,    0) < 0)
        goto fail;

    if (av_opt_set_int(s, "isf", in_sample_fmt,   0) < 0)
        goto fail;

    if (av_opt_set_int(s, "isr", in_sample_rate,  0) < 0)
        goto fail;

    if (av_opt_set_int(s, "ich", av_get_channel_layout_nb_channels(s-> user_in_ch_layout), 0) < 0)
        goto fail;

    if (av_opt_set_int(s, "och", av_get_channel_layout_nb_channels(s->user_out_ch_layout), 0) < 0)
        goto fail;

    av_opt_set_int(s, "uch", 0, 0);
    return s;
fail:
    av_log(s, AV_LOG_ERROR, "Failed to set option\n");
    swr_free(&s);
    return NULL;
}
FF_ENABLE_DEPRECATION_WARNINGS
#endif
```

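It returns a conversion context configured from the given channel layouts, sample formats and sample rates.

struct SwrContext *s: if NULL, a new SwrContext is allocated; otherwise the parameters are set on the existing SwrContext.

Usage: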

```c
SwrContext *swr = swr_alloc_set_opts(NULL,                  // we're allocating a new context
                                     AV_CH_LAYOUT_STEREO,   // out_ch_layout
                                     AV_SAMPLE_FMT_S16,     // out_sample_fmt
                                     44100,                 // out_sample_rate
                                     AV_CH_LAYOUT_5POINT1,  // in_ch_layout
                                     AV_SAMPLE_FMT_FLTP,    // in_sample_fmt
                                     48000,                 // in_sample_rate
                                     0,                     // log_offset
                                     NULL);                 // log_ctx
```

4.3 av_opt_set_*

If the SwrContext was allocated with swr_alloc(), its options must be set through the AVOptions API, as follows:

```c
SwrContext *swr = swr_alloc();
av_opt_set_channel_layout(swr, "in_channel_layout",  AV_CH_LAYOUT_5POINT1, 0);
av_opt_set_channel_layout(swr, "out_channel_layout", AV_CH_LAYOUT_STEREO,  0);
av_opt_set_int(swr, "in_sample_rate",     48000,                0);
av_opt_set_int(swr, "out_sample_rate",    44100,                0);
av_opt_set_sample_fmt(swr, "in_sample_fmt",  AV_SAMPLE_FMT_FLTP, 0);
av_opt_set_sample_fmt(swr, "out_sample_fmt", AV_SAMPLE_FMT_S16,  0);
```

4.4 swr_init

swr_init() initializes the context. Its source code:

```c
/**
 * Initialize context after user parameters have been set.
 * @note The context must be configured using the AVOption API.
 *
 * @see av_opt_set_int()
 * @see av_opt_set_dict()
 *
 * @param[in,out]   s Swr context to initialize
 * @return AVERROR error code in case of failure.
 */
av_cold int swr_init(struct SwrContext *s){
    int ret;
    char l1[1024], l2[1024];

    clear_context(s);

    if((unsigned) s-> in_sample_fmt >= AV_SAMPLE_FMT_NB){
        av_log(s, AV_LOG_ERROR, "Requested input sample format %d is invalid\n", s->in_sample_fmt);
        return AVERROR(EINVAL);
    }
    if((unsigned) s->out_sample_fmt >= AV_SAMPLE_FMT_NB){
        av_log(s, AV_LOG_ERROR, "Requested output sample format %d is invalid\n", s->out_sample_fmt);
        return AVERROR(EINVAL);
    }

    if(s-> in_sample_rate <= 0){
        av_log(s, AV_LOG_ERROR, "Requested input sample rate %d is invalid\n", s->in_sample_rate);
        return AVERROR(EINVAL);
    }
    if(s->out_sample_rate <= 0){
        av_log(s, AV_LOG_ERROR, "Requested output sample rate %d is invalid\n", s->out_sample_rate);
        return AVERROR(EINVAL);
    }
#if FF_API_OLD_CHANNEL_LAYOUT
    s->out.ch_count = s-> user_out_ch_count;
    s-> in.ch_count = s-> user_in_ch_count;

    // if the old/new fields are set inconsistently, prefer the old ones
    if (s->user_used_ch_count && s->user_used_ch_count != s->user_used_chlayout.nb_channels) {
        av_channel_layout_uninit(&s->used_ch_layout);
        s->used_ch_layout.order = AV_CHANNEL_ORDER_UNSPEC;
        s->used_ch_layout.nb_channels = s->user_used_ch_count;
    } else if (av_channel_layout_check(&s->user_used_chlayout)) {
        ret = av_channel_layout_copy(&s->used_ch_layout, &s->user_used_chlayout);
        if (ret < 0)
            return ret;
    }
    if ((s->user_in_ch_count && s->user_in_ch_count != s->user_in_chlayout.nb_channels) ||
        (s->user_in_ch_layout && (s->user_in_chlayout.order != AV_CHANNEL_ORDER_NATIVE ||
                                  s->user_in_chlayout.u.mask != s->user_in_ch_layout))) {
        av_channel_layout_uninit(&s->in_ch_layout);
        if (s->user_in_ch_layout)
            av_channel_layout_from_mask(&s->in_ch_layout, s->user_in_ch_layout);
        else {
            s->in_ch_layout.order = AV_CHANNEL_ORDER_UNSPEC;
            s->in_ch_layout.nb_channels = s->user_in_ch_count;
        }
    } else if (av_channel_layout_check(&s->user_in_chlayout))
        av_channel_layout_copy(&s->in_ch_layout, &s->user_in_chlayout);
    if ((s->user_out_ch_count && s->user_out_ch_count != s->user_out_chlayout.nb_channels) ||
        (s->user_out_ch_layout && (s->user_out_chlayout.order != AV_CHANNEL_ORDER_NATIVE ||
                                   s->user_out_chlayout.u.mask != s->user_out_ch_layout))) {
        av_channel_layout_uninit(&s->out_ch_layout);
        if (s->user_out_ch_layout)
            av_channel_layout_from_mask(&s->out_ch_layout, s->user_out_ch_layout);
        else {
            s->out_ch_layout.order = AV_CHANNEL_ORDER_UNSPEC;
            s->out_ch_layout.nb_channels = s->user_out_ch_count;
        }
    } else if (av_channel_layout_check(&s->user_out_chlayout))
        av_channel_layout_copy(&s->out_ch_layout, &s->user_out_chlayout);
    if (!s->out.ch_count)
        s->out.ch_count = s->out_ch_layout.nb_channels;
    if (!s-> in.ch_count)
        s-> in.ch_count = s->in_ch_layout.nb_channels;

    if (!(ret = av_channel_layout_check(&s->in_ch_layout)) || s->in_ch_layout.nb_channels > SWR_CH_MAX) {
        if (ret)
            av_channel_layout_describe(&s->in_ch_layout, l1, sizeof(l1));
        av_log(s, AV_LOG_WARNING, "Input channel layout \"%s\" is invalid or unsupported.\n", ret ? l1 : "");
        return AVERROR(EINVAL);
    }

    if (!(ret = av_channel_layout_check(&s->out_ch_layout)) || s->out_ch_layout.nb_channels > SWR_CH_MAX) {
        if (ret)
            av_channel_layout_describe(&s->out_ch_layout, l2, sizeof(l2));
        av_log(s, AV_LOG_WARNING, "Output channel layout \"%s\" is invalid or unsupported.\n", ret ? l2 : "");
        return AVERROR(EINVAL);
    }
#else
    s->out.ch_count = s-> user_out_chlayout.nb_channels;
    s-> in.ch_count = s-> user_in_chlayout.nb_channels;

    if (!(ret = av_channel_layout_check(&s->user_in_chlayout)) || s->user_in_chlayout.nb_channels > SWR_CH_MAX) {
        if (ret)
            av_channel_layout_describe(&s->user_in_chlayout, l1, sizeof(l1));
        av_log(s, AV_LOG_WARNING, "Input channel layout \"%s\" is invalid or unsupported.\n", ret ? l1 : "");
        return AVERROR(EINVAL);
    }

    if (!(ret = av_channel_layout_check(&s->user_out_chlayout)) || s->user_out_chlayout.nb_channels > SWR_CH_MAX) {
        if (ret)
            av_channel_layout_describe(&s->user_out_chlayout, l2, sizeof(l2));
        av_log(s, AV_LOG_WARNING, "Output channel layout \"%s\" is invalid or unsupported.\n", ret ? l2 : "");
        return AVERROR(EINVAL);
    }

    ret  = av_channel_layout_copy(&s->in_ch_layout, &s->user_in_chlayout);
    ret |= av_channel_layout_copy(&s->out_ch_layout, &s->user_out_chlayout);
    ret |= av_channel_layout_copy(&s->used_ch_layout, &s->user_used_chlayout);
    if (ret < 0)
        return ret;
#endif

    s->int_sample_fmt= s->user_int_sample_fmt;

    s->dither.method = s->user_dither_method;

    switch(s->engine){
#if CONFIG_LIBSOXR
        case SWR_ENGINE_SOXR: s->resampler = &swri_soxr_resampler; break;
#endif
        case SWR_ENGINE_SWR : s->resampler = &swri_resampler; break;
        default:
            av_log(s, AV_LOG_ERROR, "Requested resampling engine is unavailable\n");
            return AVERROR(EINVAL);
    }

    if (!av_channel_layout_check(&s->used_ch_layout))
        av_channel_layout_default(&s->used_ch_layout, s->in.ch_count);

    if (s->used_ch_layout.nb_channels != s->in_ch_layout.nb_channels)
        av_channel_layout_uninit(&s->in_ch_layout);

    if (s->used_ch_layout.order == AV_CHANNEL_ORDER_UNSPEC)
        av_channel_layout_default(&s->used_ch_layout, s->used_ch_layout.nb_channels);
    if (s->in_ch_layout.order == AV_CHANNEL_ORDER_UNSPEC) {
        ret = av_channel_layout_copy(&s->in_ch_layout, &s->used_ch_layout);
        if (ret < 0)
            return ret;
    }
    if (s->out_ch_layout.order == AV_CHANNEL_ORDER_UNSPEC)
        av_channel_layout_default(&s->out_ch_layout, s->out.ch_count);

    s->rematrix = av_channel_layout_compare(&s->out_ch_layout, &s->in_ch_layout) ||
                  s->rematrix_volume!=1.0 ||
                  s->rematrix_custom;

    if(s->int_sample_fmt == AV_SAMPLE_FMT_NONE){
        // 16bit or less to 16bit or less with the same sample rate
        if(   av_get_bytes_per_sample(s-> in_sample_fmt) <= 2
           && av_get_bytes_per_sample(s->out_sample_fmt) <= 2
           && s->out_sample_rate==s->in_sample_rate) {
            s->int_sample_fmt= AV_SAMPLE_FMT_S16P;
        // 8 -> 8, 16->8, 8->16bit
        } else if(   av_get_bytes_per_sample(s-> in_sample_fmt)
                    +av_get_bytes_per_sample(s->out_sample_fmt) <= 3 ) {
            s->int_sample_fmt= AV_SAMPLE_FMT_S16P;
        }else if(   av_get_bytes_per_sample(s-> in_sample_fmt) <= 2
                 && !s->rematrix
                 && s->out_sample_rate==s->in_sample_rate
                 && !(s->flags & SWR_FLAG_RESAMPLE)){
            s->int_sample_fmt= AV_SAMPLE_FMT_S16P;
        }else if(   av_get_planar_sample_fmt(s-> in_sample_fmt) == AV_SAMPLE_FMT_S32P
                 && av_get_planar_sample_fmt(s->out_sample_fmt) == AV_SAMPLE_FMT_S32P
                 && !s->rematrix
                 && s->out_sample_rate == s->in_sample_rate
                 && !(s->flags & SWR_FLAG_RESAMPLE)
                 && s->engine != SWR_ENGINE_SOXR){
            s->int_sample_fmt= AV_SAMPLE_FMT_S32P;
        }else if(av_get_bytes_per_sample(s->in_sample_fmt) <= 4){
            s->int_sample_fmt= AV_SAMPLE_FMT_FLTP;
        }else{
            s->int_sample_fmt= AV_SAMPLE_FMT_DBLP;
        }
    }
    av_log(s, AV_LOG_DEBUG, "Using %s internally between filters\n", av_get_sample_fmt_name(s->int_sample_fmt));

    if(   s->int_sample_fmt != AV_SAMPLE_FMT_S16P
        &&s->int_sample_fmt != AV_SAMPLE_FMT_S32P
        &&s->int_sample_fmt != AV_SAMPLE_FMT_S64P
        &&s->int_sample_fmt != AV_SAMPLE_FMT_FLTP
        &&s->int_sample_fmt != AV_SAMPLE_FMT_DBLP){
        av_log(s, AV_LOG_ERROR, "Requested sample format %s is not supported internally, s16p/s32p/s64p/fltp/dblp are supported\n", av_get_sample_fmt_name(s->int_sample_fmt));
        return AVERROR(EINVAL);
    }

    set_audiodata_fmt(&s-> in, s-> in_sample_fmt);
    set_audiodata_fmt(&s->out, s->out_sample_fmt);

    if (s->firstpts_in_samples != AV_NOPTS_VALUE) {
        if (!s->async && s->min_compensation >= FLT_MAX/2)
            s->async = 1;
        s->firstpts =
        s->outpts   = s->firstpts_in_samples * s->out_sample_rate;
    } else
        s->firstpts = AV_NOPTS_VALUE;

    if (s->async) {
        if (s->min_compensation >= FLT_MAX/2)
            s->min_compensation = 0.001;
        if (s->async > 1.0001) {
            s->max_soft_compensation = s->async / (double) s->in_sample_rate;
        }
    }

    if (s->out_sample_rate!=s->in_sample_rate || (s->flags & SWR_FLAG_RESAMPLE)){
        s->resample = s->resampler->init(s->resample, s->out_sample_rate, s->in_sample_rate, s->filter_size, s->phase_shift, s->linear_interp, s->cutoff, s->int_sample_fmt, s->filter_type, s->kaiser_beta, s->precision, s->cheby, s->exact_rational);
        if (!s->resample) {
            av_log(s, AV_LOG_ERROR, "Failed to initialize resampler\n");
            return AVERROR(ENOMEM);
        }
    }else
        s->resampler->free(&s->resample);
    if(    s->int_sample_fmt != AV_SAMPLE_FMT_S16P
        && s->int_sample_fmt != AV_SAMPLE_FMT_S32P
        && s->int_sample_fmt != AV_SAMPLE_FMT_FLTP
        && s->int_sample_fmt != AV_SAMPLE_FMT_DBLP
        && s->resample){
        av_log(s, AV_LOG_ERROR, "Resampling only supported with internal s16p/s32p/fltp/dblp\n");
        ret = AVERROR(EINVAL);
        goto fail;
    }

#define RSC 1 //FIXME finetune
    if(!s-> in.ch_count)
        s-> in.ch_count = s->in_ch_layout.nb_channels;
    if (!av_channel_layout_check(&s->used_ch_layout))
        av_channel_layout_default(&s->used_ch_layout, s->in.ch_count);
    if(!s->out.ch_count)
        s->out.ch_count = s->out_ch_layout.nb_channels;

    if(!s-> in.ch_count){
        av_assert0(s->in_ch_layout.order == AV_CHANNEL_ORDER_UNSPEC);
        av_log(s, AV_LOG_ERROR, "Input channel count and layout are unset\n");
        ret = AVERROR(EINVAL);
        goto fail;
    }

    av_channel_layout_describe(&s->out_ch_layout, l2, sizeof(l2));
#if FF_API_OLD_CHANNEL_LAYOUT
    if (s->out_ch_layout.order != AV_CHANNEL_ORDER_UNSPEC && s->out.ch_count != s->out_ch_layout.nb_channels) {
        av_log(s, AV_LOG_ERROR, "Output channel layout %s mismatches specified channel count %d\n", l2, s->out.ch_count);
        ret = AVERROR(EINVAL);
        goto fail;
    }
#endif
    av_channel_layout_describe(&s->in_ch_layout, l1, sizeof(l1));
    if (s->in_ch_layout.order != AV_CHANNEL_ORDER_UNSPEC && s->used_ch_layout.nb_channels != s->in_ch_layout.nb_channels) {
        av_log(s, AV_LOG_ERROR, "Input channel layout %s mismatches specified channel count %d\n", l1, s->used_ch_layout.nb_channels);
        ret = AVERROR(EINVAL);
        goto fail;
    }

    if ((   s->out_ch_layout.order == AV_CHANNEL_ORDER_UNSPEC
         || s-> in_ch_layout.order == AV_CHANNEL_ORDER_UNSPEC) && s->used_ch_layout.nb_channels != s->out.ch_count && !s->rematrix_custom) {
        av_log(s, AV_LOG_ERROR, "Rematrix is needed between %s and %s "
               "but there is not enough information to do it\n", l1, l2);
        ret = AVERROR(EINVAL);
        goto fail;
    }

    av_assert0(s->used_ch_layout.nb_channels);
    av_assert0(s->out.ch_count);
    s->resample_first= RSC*s->out.ch_count/s->used_ch_layout.nb_channels - RSC < s->out_sample_rate/(float)s-> in_sample_rate - 1.0;

    s->in_buffer= s->in;
    s->silence  = s->in;
    s->drop_temp= s->out;

    if ((ret = swri_dither_init(s, s->out_sample_fmt, s->int_sample_fmt)) < 0)
        goto fail;

    if(!s->resample && !s->rematrix && !s->channel_map && !s->dither.method){
        s->full_convert = swri_audio_convert_alloc(s->out_sample_fmt,
                                                   s-> in_sample_fmt, s-> in.ch_count, NULL, 0);
        return 0;
    }

    s->in_convert = swri_audio_convert_alloc(s->int_sample_fmt,
                                             s-> in_sample_fmt, s->used_ch_layout.nb_channels, s->channel_map, 0);
    s->out_convert= swri_audio_convert_alloc(s->out_sample_fmt,
                                             s->int_sample_fmt, s->out.ch_count, NULL, 0);

    if (!s->in_convert || !s->out_convert) {
        ret = AVERROR(ENOMEM);
        goto fail;
    }

    s->postin= s->in;
    s->preout= s->out;
    s->midbuf= s->in;

    if(s->channel_map){
        s->postin.ch_count=
        s->midbuf.ch_count= s->used_ch_layout.nb_channels;
        if(s->resample)
            s->in_buffer.ch_count= s->used_ch_layout.nb_channels;
    }
    if(!s->resample_first){
        s->midbuf.ch_count= s->out.ch_count;
        if(s->resample)
            s->in_buffer.ch_count = s->out.ch_count;
    }

    set_audiodata_fmt(&s->postin, s->int_sample_fmt);
    set_audiodata_fmt(&s->midbuf, s->int_sample_fmt);
    set_audiodata_fmt(&s->preout, s->int_sample_fmt);

    if(s->resample){
        set_audiodata_fmt(&s->in_buffer, s->int_sample_fmt);
    }

    av_assert0(!s->preout.count);
    s->dither.noise = s->preout;
    s->dither.temp  = s->preout;
    if (s->dither.method > SWR_DITHER_NS) {
        s->dither.noise.bps = 4;
        s->dither.noise.fmt = AV_SAMPLE_FMT_FLTP;
        s->dither.noise_scale = 1;
    }

    if(s->rematrix || s->dither.method) {
        ret = swri_rematrix_init(s);
        if (ret < 0)
            goto fail;
    }

    return 0;
fail:
    swr_close(s);
    return ret;

}
```

After the parameters have been set, swr_init() must be called to initialize the SwrContext. To change the conversion parameters later, set them again and call swr_init() again.
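The choice of internal sample format in swr_init() (the branch taken while int_sample_fmt is still AV_SAMPLE_FMT_NONE) is easy to lose in the listing above. The sketch below restates those rules as a standalone function. The `InternalFmt` enum, the function name and its boolean parameters are hypothetical stand-ins for the corresponding SwrContext fields and FFmpeg calls; this is a reading aid, not part of FFmpeg.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { INT_S16P, INT_S32P, INT_FLTP, INT_DBLP } InternalFmt;

/* in_bytes/out_bytes: bytes per sample of the input/output formats.
 * in_is_s32/out_is_s32: format is S32 or S32P.
 * same_rate: input and output sample rates are equal.
 * rematrix: channel layouts differ (or volume/custom matrix set).
 * force_resample: SWR_FLAG_RESAMPLE is set. soxr: the soxr engine is used. */
static InternalFmt pick_internal_fmt(int in_bytes, int out_bytes,
                                     bool in_is_s32, bool out_is_s32,
                                     bool same_rate, bool rematrix,
                                     bool force_resample, bool soxr)
{
    assert(in_bytes > 0 && out_bytes > 0);
    if (in_bytes <= 2 && out_bytes <= 2 && same_rate)
        return INT_S16P;              /* <=16 bit to <=16 bit, same rate */
    if (in_bytes + out_bytes <= 3)
        return INT_S16P;              /* 8->8, 16->8, 8->16 bit */
    if (in_bytes <= 2 && !rematrix && same_rate && !force_resample)
        return INT_S16P;
    if (in_is_s32 && out_is_s32 && !rematrix && same_rate
        && !force_resample && !soxr)
        return INT_S32P;
    if (in_bytes <= 4)
        return INT_FLTP;
    return INT_DBLP;
}
```

For instance, S16 stereo to S16 stereo with a rate change (44100 Hz to 48000 Hz) falls through every integer rule and ends up with float planar processing, while the same conversion without a rate change stays in S16P.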

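4.5 av_samples_alloc_array_and_samples

Allocates the array of data pointers (one entry per plane: one per channel for planar formats, a single one for packed formats) and then a sample buffer of the size required by the given sample format, via av_samples_alloc().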

```c
int av_samples_alloc_array_and_samples(uint8_t ***audio_data, int *linesize, int nb_channels,
                                       int nb_samples, enum AVSampleFormat sample_fmt, int align)
{
    int ret, nb_planes = av_sample_fmt_is_planar(sample_fmt) ? nb_channels : 1;

    *audio_data = av_calloc(nb_planes, sizeof(**audio_data));
    if (!*audio_data)
        return AVERROR(ENOMEM);
    ret = av_samples_alloc(*audio_data, linesize, nb_channels,
                           nb_samples, sample_fmt, align);
    if (ret < 0)
        av_freep(audio_data);
    return ret;
}
```

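4.6 av_samples_alloc

Allocates a sample buffer of the size required by the audio format and fills the per-plane data pointers. During conversion it is used to re-allocate the output buffer when more output space is needed.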

```c
/**
 * Allocate a samples buffer for nb_samples samples, and fill data pointers and
 * linesize accordingly.
 * The allocated samples buffer can be freed by using av_freep(&audio_data[0])
 * Allocated data will be initialized to silence.
 *
 * @see enum AVSampleFormat
 * The documentation for AVSampleFormat describes the data layout.
 *
 * @param[out] audio_data  array to be filled with the pointer for each channel
 * @param[out] linesize    aligned size for audio buffer(s), may be NULL
 * @param nb_channels      number of audio channels
 * @param nb_samples       number of samples per channel
 * @param sample_fmt       the sample format
 * @param align            buffer size alignment (0 = default, 1 = no alignment)
 * @return                 >=0 on success or a negative error code on failure
 * @todo return the size of the allocated buffer in case of success at the next bump
 * @see av_samples_fill_arrays()
 * @see av_samples_alloc_array_and_samples()
 */
int av_samples_alloc(uint8_t **audio_data, int *linesize, int nb_channels,
                     int nb_samples, enum AVSampleFormat sample_fmt, int align)
{
    uint8_t *buf;
    int size = av_samples_get_buffer_size(NULL, nb_channels, nb_samples,
                                          sample_fmt, align);
    if (size < 0)
        return size;

    buf = av_malloc(size);
    if (!buf)
        return AVERROR(ENOMEM);

    size = av_samples_fill_arrays(audio_data, linesize, buf, nb_channels,
                                  nb_samples, sample_fmt, align);
    if (size < 0) {
        av_free(buf);
        return size;
    }

    av_samples_set_silence(audio_data, 0, nb_samples, nb_channels, sample_fmt);

    return size;
}
```
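The size that av_samples_alloc() requests comes from av_samples_get_buffer_size(). The sketch below shows the underlying arithmetic in simplified form: one plane per channel for planar formats (otherwise a single plane), each line rounded up to the alignment. `SampleBufLayout` and `sample_buf_layout()` are hypothetical names, and FFmpeg's special handling of `align == 0` is deliberately not reproduced (here `align` must be at least 1).

```c
#include <assert.h>

typedef struct {
    int planes;   /* number of data pointers that get filled */
    int linesize; /* aligned size of one plane in bytes */
    int total;    /* total bytes to allocate */
} SampleBufLayout;

static SampleBufLayout sample_buf_layout(int planar, int channels,
                                         int nb_samples, int bytes_per_sample,
                                         int align)
{
    SampleBufLayout l;
    assert(channels > 0 && nb_samples >= 0 && bytes_per_sample > 0 && align >= 1);
    l.planes = planar ? channels : 1;
    /* Planar: a line holds one channel; packed: a line holds all channels. */
    int line = nb_samples * bytes_per_sample * (planar ? 1 : channels);
    l.linesize = (line + align - 1) / align * align;   /* round up to align */
    l.total = l.linesize * l.planes;
    return l;
}
```

For 1115 stereo S16 samples, the packed layout needs a single 4460-byte plane, whereas S16P with 32-byte alignment needs two planes of 2240 bytes each.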

4.7 swr_convert

Processes the audio frame by frame: each call resamples (and, if needed, converts and rematrixes) one chunk of audio.

```c
/** Convert audio.
 *
 * in and in_count can be set to 0 to flush the last few samples out at the
 * end.
 *
 * If more input is provided than output space, then the input will be buffered.
 * You can avoid this buffering by using swr_get_out_samples() to retrieve an
 * upper bound on the required number of output samples for the given number of
 * input samples. Conversion will run directly without copying whenever possible.
 *
 * @param s         allocated Swr context, with parameters set
 * @param out       output buffers, only the first one need be set in case of packed audio
 * @param out_count amount of space available for output in samples per channel
 * @param in        input buffers, only the first one need to be set in case of packed audio
 * @param in_count  number of input samples available in one channel
 *
 * @return number of samples output per channel, negative value on error
 */
int attribute_align_arg swr_convert(struct SwrContext *s,
                                    uint8_t **out_arg, int out_count,
                                    const uint8_t **in_arg, int in_count)
{
    AudioData * in= &s->in;
    AudioData *out= &s->out;
    int av_unused max_output;

    if (!swr_is_initialized(s)) {
        av_log(s, AV_LOG_ERROR, "Context has not been initialized\n");
        return AVERROR(EINVAL);
    }
#if defined(ASSERT_LEVEL) && ASSERT_LEVEL >1
    max_output = swr_get_out_samples(s, in_count);
#endif

    while(s->drop_output > 0){
        int ret;
        uint8_t *tmp_arg[SWR_CH_MAX];
#define MAX_DROP_STEP 16384
        if((ret=swri_realloc_audio(&s->drop_temp, FFMIN(s->drop_output, MAX_DROP_STEP)))<0)
            return ret;

        reversefill_audiodata(&s->drop_temp, tmp_arg);
        s->drop_output *= -1; //FIXME find a less hackish solution
        ret = swr_convert(s, tmp_arg, FFMIN(-s->drop_output, MAX_DROP_STEP), in_arg, in_count); //FIXME optimize but this is as good as never called so maybe it doesn't matter
        s->drop_output *= -1;
        in_count = 0;
        if(ret>0) {
            s->drop_output -= ret;
            if (!s->drop_output && !out_arg)
                return 0;
            continue;
        }

        av_assert0(s->drop_output);
        return 0;
    }

    if(!in_arg){
        if(s->resample){
            if (!s->flushed)
                s->resampler->flush(s);
            s->resample_in_constraint = 0;
            s->flushed = 1;
        }else if(!s->in_buffer_count){
            return 0;
        }
    }else
        fill_audiodata(in , (void*)in_arg);

    fill_audiodata(out, out_arg);

    if(s->resample){
        int ret = swr_convert_internal(s, out, out_count, in, in_count);
        if(ret>0 && !s->drop_output)
            s->outpts += ret * (int64_t)s->in_sample_rate;

        av_assert2(max_output < 0 || ret <= max_output);

        return ret;
    }else{
        AudioData tmp= *in;
        int ret2=0;
        int ret, size;
        size = FFMIN(out_count, s->in_buffer_count);
        if(size){
            buf_set(&tmp, &s->in_buffer, s->in_buffer_index);
            ret= swr_convert_internal(s, out, size, &tmp, size);
            if(ret<0)
                return ret;
            ret2= ret;
            s->in_buffer_count -= ret;
            s->in_buffer_index += ret;
            buf_set(out, out, ret);
            out_count -= ret;
            if(!s->in_buffer_count)
                s->in_buffer_index = 0;
        }

        if(in_count){
            size= s->in_buffer_index + s->in_buffer_count + in_count - out_count;

            if(in_count > out_count) { //FIXME move after swr_convert_internal
                if(   size > s->in_buffer.count
                   && s->in_buffer_count + in_count - out_count <= s->in_buffer_index){
                    buf_set(&tmp, &s->in_buffer, s->in_buffer_index);
                    copy(&s->in_buffer, &tmp, s->in_buffer_count);
                    s->in_buffer_index=0;
                }else
                    if((ret=swri_realloc_audio(&s->in_buffer, size)) < 0)
                        return ret;
            }

            if(out_count){
                size = FFMIN(in_count, out_count);
                ret= swr_convert_internal(s, out, size, in, size);
                if(ret<0)
                    return ret;
                buf_set(in, in, ret);
                in_count -= ret;
                ret2 += ret;
            }
            if(in_count){
                buf_set(&tmp, &s->in_buffer, s->in_buffer_index + s->in_buffer_count);
                copy(&tmp, in, in_count);
                s->in_buffer_count += in_count;
            }
        }
        if(ret2>0 && !s->drop_output)
            s->outpts += ret2 * (int64_t)s->in_sample_rate;
        av_assert2(max_output < 0 || ret2 < 0 || ret2 <= max_output);
        return ret2;
    }
}
```

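Notes:

1. If the output buffer does not provide enough space, the remaining samples are cached inside swr. To provide enough space, call swr_get_out_samples() to obtain an upper bound on the number of output samples the next swr_convert() call will produce for a given number of input samples.

2. When the sample rate is converted, some samples may remain cached in swr after a call, because the resampler expects more input.

3. If you have no more input to supply but want the data cached in swr, call swr_convert() with in_count set to 0.

4. When the conversion is finished, flush the buffers of the SwrContext by calling swr_convert() with in set to NULL and in_count set to 0.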
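The buffering described in these notes can be seen most easily in the non-resampling branch of swr_convert(): first drain previously cached input, then convert whatever fits directly, then cache the rest. The sketch below mimics that branch for a hypothetical mono int16 pass-through converter (no rate change). `ToyConverter`, `toy_convert()` and the fixed cache capacity are illustrative assumptions, not libswresample API.

```c
#include <assert.h>
#include <string.h>

#define TOY_CACHE_CAP 4096

typedef struct {
    short buf[TOY_CACHE_CAP]; /* input that did not fit into the output */
    int index;                /* position of the first cached sample */
    int count;                /* number of cached samples */
} ToyConverter;

/* Returns the number of samples written to out, or -1 if the cache would
 * overflow. Passing in == NULL (or in_count == 0) only drains the cache. */
static int toy_convert(ToyConverter *c, short *out, int out_count,
                       const short *in, int in_count)
{
    assert(c != NULL && out != NULL && out_count >= 0 && in_count >= 0);
    int written = 0;

    /* 1. Drain previously cached samples first. */
    int n = c->count < out_count ? c->count : out_count;
    memcpy(out, c->buf + c->index, n * sizeof(short));
    c->index += n;
    c->count -= n;
    if (c->count == 0)
        c->index = 0;
    written += n;
    out += n;
    out_count -= n;

    if (in == NULL || in_count == 0)
        return written;

    /* 2. Convert as much new input as fits directly into the output. */
    n = in_count < out_count ? in_count : out_count;
    memcpy(out, in, n * sizeof(short));
    written += n;
    in += n;
    in_count -= n;

    /* 3. Cache the remainder for a later call (compact the cache first). */
    if (in_count > 0) {
        memmove(c->buf, c->buf + c->index, c->count * sizeof(short));
        c->index = 0;
        if (c->count + in_count > TOY_CACHE_CAP)
            return -1;
        memcpy(c->buf + c->count, in, in_count * sizeof(short));
        c->count += in_count;
    }
    return written;
}
```

Feeding 10 samples with room for only 4 returns 4 and leaves 6 samples cached; a following call with `in == NULL` and enough output space returns those 6 samples, which corresponds to notes 1 and 4.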

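4.8 swr_get_delay

swr_get_delay() returns how long the next input sample will be delayed before it comes out of the resampler, i.e. the duration of the data still queued inside swr. The result is expressed in units of 1/base seconds: base = 1000 gives milliseconds, base = the input sample rate gives input samples, and base = the output sample rate gives output samples. Keeping this backlog small on each call helps keep audio latency low.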

```c
int64_t swr_get_delay(struct SwrContext *s, int64_t base){
    if (s->resampler && s->resample){
        return s->resampler->get_delay(s, base);
    }else{
        return (s->in_buffer_count*base + (s->in_sample_rate>>1))/ s->in_sample_rate;
    }
}
```
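When no resampler is active, the delay is simply the number of cached input samples converted to the requested time base, rounded to the nearest integer (adding half the sample rate before dividing). The sketch below isolates that formula; `buffered_delay()` is a hypothetical name, and the resampling branch (`resampler->get_delay`) is not modelled.

```c
#include <assert.h>
#include <stdint.h>

/* Cached input samples expressed in units of 1/base seconds,
 * rounded to nearest, as in the non-resampling branch of swr_get_delay(). */
static int64_t buffered_delay(int64_t in_buffer_count, int64_t base,
                              int in_sample_rate)
{
    assert(in_sample_rate > 0 && base > 0 && in_buffer_count >= 0);
    return (in_buffer_count * base + (in_sample_rate >> 1)) / in_sample_rate;
}
```

With 1000 cached samples at 48000 Hz, base = 1000 gives 21 ms (20.83 rounded), and base = 44100 gives 919 output-rate samples.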

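4.9 av_rescale_rnd

This function takes the quantities discussed below (the number of input samples, the output sample rate and the input sample rate) and computes a * b / c, rounded according to rnd, without intermediate overflow.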

```c
int64_t av_rescale_rnd(int64_t a, int64_t b, int64_t c, enum AVRounding rnd)
{
    int64_t r = 0;
    av_assert2(c > 0);
    av_assert2(b >=0);
    av_assert2((unsigned)(rnd&~AV_ROUND_PASS_MINMAX)<=5 && (rnd&~AV_ROUND_PASS_MINMAX)!=4);

    if (c <= 0 || b < 0 || !((unsigned)(rnd&~AV_ROUND_PASS_MINMAX)<=5 && (rnd&~AV_ROUND_PASS_MINMAX)!=4))
        return INT64_MIN;

    if (rnd & AV_ROUND_PASS_MINMAX) {
        if (a == INT64_MIN || a == INT64_MAX)
            return a;
        rnd -= AV_ROUND_PASS_MINMAX;
    }

    if (a < 0)
        return -(uint64_t)av_rescale_rnd(-FFMAX(a, -INT64_MAX), b, c, rnd ^ ((rnd >> 1) & 1));

    if (rnd == AV_ROUND_NEAR_INF)
        r = c / 2;
    else if (rnd & 1)
        r = c - 1;

    if (b <= INT_MAX && c <= INT_MAX) {
        if (a <= INT_MAX)
            return (a * b + r) / c;
        else {
            int64_t ad = a / c;
            int64_t a2 = (a % c * b + r) / c;
            if (ad >= INT32_MAX && b && ad > (INT64_MAX - a2) / b)
                return INT64_MIN;
            return ad * b + a2;
        }
    } else {
#if 1
        uint64_t a0  = a & 0xFFFFFFFF;
        uint64_t a1  = a >> 32;
        uint64_t b0  = b & 0xFFFFFFFF;
        uint64_t b1  = b >> 32;
        uint64_t t1  = a0 * b1 + a1 * b0;
        uint64_t t1a = t1 << 32;
        int i;

        a0  = a0 * b0 + t1a;
        a1  = a1 * b1 + (t1 >> 32) + (a0 < t1a);
        a0 += r;
        a1 += a0 < r;

        for (i = 63; i >= 0; i--) {
            a1 += a1 + ((a0 >> i) & 1);
            t1 += t1;
            if (c <= a1) {
                a1 -= c;
                t1++;
            }
        }
        if (t1 > INT64_MAX)
            return INT64_MIN;
        return t1;
#else
        /* reference code doing (a*b + r) / c, requires libavutil/integer.h */
        AVInteger ai;
        ai = av_mul_i(av_int2i(a), av_int2i(b));
        ai = av_add_i(ai, av_int2i(r));

        return av_i2int(av_div_i(ai, av_int2i(c)));
#endif
    }
}
```

4.9.1 How it works

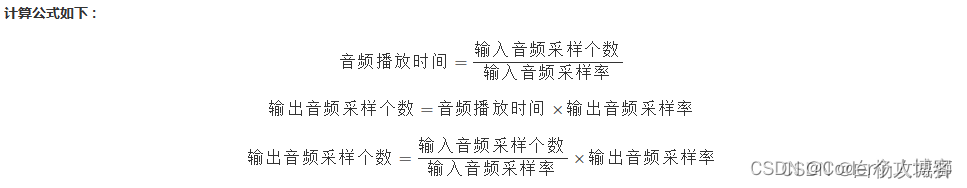

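Resampling requires specifying the number of output samples. What is known is the number of input samples $N_{in}$, the input sample rate $f_{in}$ and the output sample rate $f_{out}$; from these we compute the number of output samples $N_{out}$.

Playback duration: $t = \dfrac{N_{in}}{f_{in}}$

Number of output samples: $N_{out} = t \times f_{out}$

Therefore: $N_{out} = \dfrac{N_{in}}{f_{in}} \times f_{out}$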

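The intermediate product of a sample rate and a sample count can be large: a 44100 Hz rate multiplied by 100,000 samples gives 4,410,000,000, which already exceeds the range of a 32-bit int. To avoid such overflow, FFmpeg provides the dedicated function av_rescale_rnd() for this computation; when even the 64-bit product would not fit, it splits the multiplication into 32-bit halves, as shown in the code above.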
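For the common case, the output sample count is $\lceil N_{in} \cdot f_{out} / f_{in} \rceil$, i.e. av_rescale_rnd(N_in, f_out, f_in, AV_ROUND_UP). FFmpeg's own resampling example also adds the queued samples first, as in av_rescale_rnd(swr_get_delay(swr_ctx, src_rate) + src_nb_samples, dst_rate, src_rate, AV_ROUND_UP). The sketch below is a simplified stand-in named `rescale_up()` (a hypothetical helper): it only handles non-negative values whose product fits in int64_t, which covers realistic sample counts and rates, and it does not implement the other rounding modes.

```c
#include <assert.h>
#include <stdint.h>

/* ceil(a * b / c) for a >= 0, b > 0, c > 0, assuming a*b + c - 1 fits in
 * int64_t. Simplified version of av_rescale_rnd(a, b, c, AV_ROUND_UP). */
static int64_t rescale_up(int64_t a, int64_t b, int64_t c)
{
    assert(a >= 0 && b > 0 && c > 0);
    assert(a <= (INT64_MAX - (c - 1)) / b); /* guard against overflow */
    return (a * b + c - 1) / c;
}
```

For 1024 input samples at 44100 Hz converted to 48000 Hz, this gives 1115 output samples (1114.56 rounded up); with 21 samples already queued it gives 1138. The 100,000-sample case from the text, rescaled from 48000 Hz to 44100 Hz, gives exactly 91875.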

5. Example

```cpp
typedef struct {
    const char *filename;
    int sampleRate;
    AVSampleFormat sampleFmt;
    uint64_t chLayout;   // channel layout bit mask, e.g. AV_CH_LAYOUT_STEREO
} ResampleAudioSpec;

#ifdef Q_OS_WIN
#define IN_PCM_FILEPATH "G:/Resource/record_to_pcm.pcm"
#define OUT_PCM_FILEPATH "G:/Resource/record_to_pcm2.pcm"
#else
#define IN_PCM_FILEPATH "/Users/liliguang/Desktop/record_to_pcm.pcm"
#define OUT_PCM_FILEPATH "/Users/liliguang/Desktop/record_to_pcm2.pcm"
#endif

// Declared here because run() calls it before its definition below.
void audio_resampleS(ResampleAudioSpec inSpec, ResampleAudioSpec outSpec);

void ResampleThread::run() {
    // Source file ==> input buffer ==> output buffer ==> output file

    // Input audio parameters
    ResampleAudioSpec inSpec;
    inSpec.filename = IN_PCM_FILEPATH;
    inSpec.sampleFmt = AV_SAMPLE_FMT_S16;
    inSpec.sampleRate = 44100;
    inSpec.chLayout = AV_CH_LAYOUT_STEREO;

    // Output audio parameters
    ResampleAudioSpec outSpec;
    outSpec.filename = OUT_PCM_FILEPATH;
    outSpec.sampleFmt = AV_SAMPLE_FMT_S16;
    outSpec.sampleRate = 48000;
    outSpec.chLayout = AV_CH_LAYOUT_STEREO;

    audio_resampleS(inSpec, outSpec);
}
```

void audio_resampleS (ResampleAudioSpec inSpec, ResampleAudioSpec outSpec) {

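// Declare variables up front: CHECK_IF_ERROR_BUF_END (defined elsewhere, presumably logging and then doing goto end) jumps to the end: label, and C++ does not allow a goto to jump past an initialized variable declaration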

// return value of swr_init()

int initRet = 0;

// buffer sizes in bytes, as returned by av_samples_alloc_array_and_samples()

int inBufferSize = 0;

int outBufferSize = 0;

// input and output files

QFile inFile(inSpec.filename);

QFile outFile(outSpec.filename);

int inOpenFileRet = 0;

int outOpenFileRet = 0;

// number of bytes read from the input file in each iteration

int inDataLen = 0;

// bytes per sample frame across all channels: channels × bytes per sample

int inBytesPerSample = ( av_get_channel_layout_nb_channels(inSpec.chLayout) * av_get_bytes_per_sample(inSpec.sampleFmt) ) ;

int outBytesPerSample = (av_get_channel_layout_nb_channels(outSpec.chLayout) * av_get_bytes_per_sample(outSpec.sampleFmt)) ;

// return value of swr_convert()

int swrConvertRet = 0;

// resampling target: 44100 Hz ==> 48000 Hz

/*

inSampleRate (44100)      in_nb_samples (input buffer size)

------------------------ = ------------------------

outSampleRate (48000)     out_nb_samples (output buffer size)

Output buffer size: out_nb_samples = outSampleRate * in_nb_samples / inSampleRate

Input buffer size:  in_nb_samples = inSampleRate * out_nb_samples / outSampleRate

*/

// input buffer

uint8_t **inData = nullptr;

int inlinesize = 0;

int in_nb_channels = av_get_channel_layout_nb_channels(inSpec.chLayout);

int in_nb_samples = 1024 ;

enum AVSampleFormat in_sample_fmt = inSpec.sampleFmt;

int in_align = 0;

// output buffer

uint8_t **outData = nullptr;

int outlinesize = 0;

int out_nb_channels = av_get_channel_layout_nb_channels(outSpec.chLayout);

int out_nb_samples = av_rescale_rnd(outSpec.sampleRate, in_nb_samples, inSpec.sampleRate, AV_ROUND_UP);

enum AVSampleFormat out_sample_fmt = outSpec.sampleFmt;

int out_align = 0;

qDebug() << "input buffer" << inSpec.sampleRate << in_nb_samples;

qDebug() << "output buffer" << outSpec.sampleRate << out_nb_samples;

//===================================================================

//===================================================================

//=========================== Main steps ===========================

//===================================================================

//===================================================================

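// 1. Create the context

// Note: swr_alloc_set_opts() and av_get_channel_layout_nb_channels() belong to the old int64_t channel-layout API, deprecated since FFmpeg 5.1 (in favor of swr_alloc_set_opts2() with AVChannelLayout) and removed in FFmpeg 7.0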

SwrContext *swrCtx = swr_alloc_set_opts(nullptr,

outSpec.chLayout,

outSpec.sampleFmt,

outSpec.sampleRate,

inSpec.chLayout,

inSpec.sampleFmt,

inSpec.sampleRate,

0,       // log_offset

nullptr  // log_ctx

);

CHECK_IF_ERROR_BUF_END(!swrCtx, "swr_alloc_set_opts");

// 2. Initialize the context

initRet = swr_init(swrCtx);

CHECK_IF_ERROR_BUF_END(initRet, "swr_init");

// 3. Allocate the input buffer

qDebug() << "input buffer params" << inData << inlinesize << in_nb_channels << in_nb_samples << in_sample_fmt;

inBufferSize = av_samples_alloc_array_and_samples (&inData,

&inlinesize,

in_nb_channels,

in_nb_samples,

in_sample_fmt,

in_align);

CHECK_IF_ERROR_BUF_END(inBufferSize < 0, "av_samples_alloc_array_and_samples inBufferSize");

// 4. Allocate the output buffer

qDebug() << "output buffer params" << outData << outlinesize << out_nb_channels << out_nb_samples << out_sample_fmt;

outBufferSize = av_samples_alloc_array_and_samples ( &outData,

&outlinesize,

out_nb_channels,

out_nb_samples,

out_sample_fmt,

out_align);

CHECK_IF_ERROR_BUF_END(outBufferSize < 0, "av_samples_alloc_array_and_samples outBufferSize");

// open the files

inOpenFileRet = !inFile.open(QFile::ReadOnly);

outOpenFileRet = !outFile.open(QFile::WriteOnly);

CHECK_IF_ERROR_BUF_END(inOpenFileRet, "inFile.open inSpec.filename");

CHECK_IF_ERROR_BUF_END(outOpenFileRet, "outFile.open outSpec.filename");

qDebug() << "av_get_channel_layout_nb_channels(outSpec.chLayout)" << av_get_channel_layout_nb_channels(outSpec.chLayout) ;

qDebug() << "av_get_bytes_per_sample(outSpec.sampleFmt)" << av_get_bytes_per_sample(outSpec.sampleFmt);

// 5. Resample

while ((inDataLen = inFile.read((char *) inData[0], inlinesize)) > 0) {

// number of sample frames actually read

qDebug() << "in_nb_samples" << in_nb_samples << "out_nb_samples" << out_nb_samples ;

qDebug() << "inDataLen" << (inDataLen / inBytesPerSample) ;

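// Pass the number of frames actually read (inDataLen / inBytesPerSample) as in_count. It equals in_nb_samples only for full reads. The last read is usually shorter, and passing in_nb_samples there would also resample stale data left over in inData.

// swrConvertRet: number of samples output per channel; negative on error

swrConvertRet = swr_convert(swrCtx,

outData,

out_nb_samples,

(const uint8_t **) inData,

inDataLen / inBytesPerSample);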

// qDebug() << "swrConvertRet" << outDataLen << swrConvertRet << out_nb_samples << in_nb_samples;

// A return value of 0 is not an error: the resampler may buffer input without producing output yet

CHECK_IF_ERROR_BUF_END(swrConvertRet < 0, "swr_convert");

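// Write to the file: swrConvertRet is the sample count per channel, so the bytes to write are swrConvertRet * outBytesPerSample (packed format, so all channels are interleaved in outData[0])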

outFile.write((char *) outData[0], swrConvertRet * outBytesPerSample);

}

// Flush: calling swr_convert() with no input (nullptr, 0) drains samples still buffered inside the resampler

while ((swrConvertRet = swr_convert(swrCtx, outData, out_nb_samples, nullptr, 0)) > 0) {

outFile.write((char *) outData[0], swrConvertRet * outBytesPerSample );

}

end:

// close the files and release resources

inFile.close();

outFile.close();

// free the input buffer

if (inData) {

av_freep(&inData[0]);

}

av_freep(&inData);

// free the output buffer

if (outData) {

av_freep(&outData[0]);

}

av_freep(&outData);

// free the resampling context

swr_free(&swrCtx);

}
