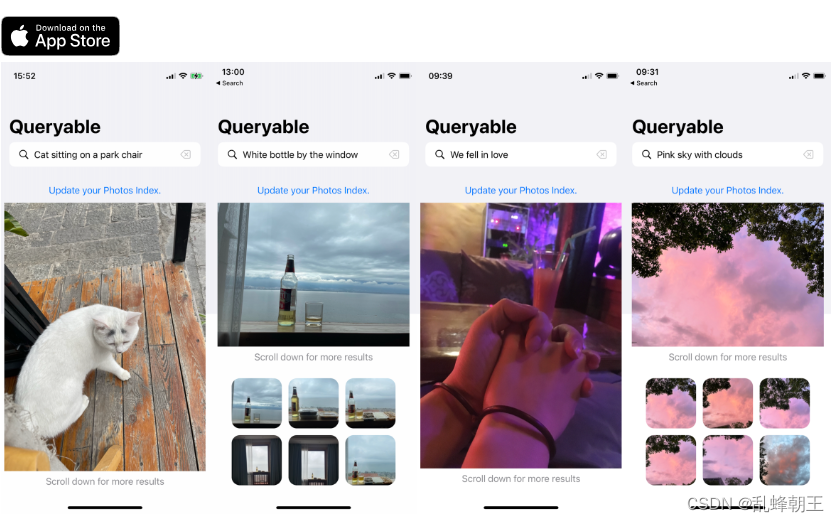

Describe the picture you want in a single sentence, and it will find the matching photos in your photo library.

Introduction

Repository: https://github.com/mazzzystar/Queryable

This is the open-source code of Queryable, an iOS app that uses OpenAI's CLIP model to search the Photos album offline. Unlike the category-based search built into the iOS Photos app, Queryable lets you search your album with natural-language statements such as "a brown dog sitting on a bench". Because everything runs offline, the privacy of your album is not exposed to any company, including Apple or Google.

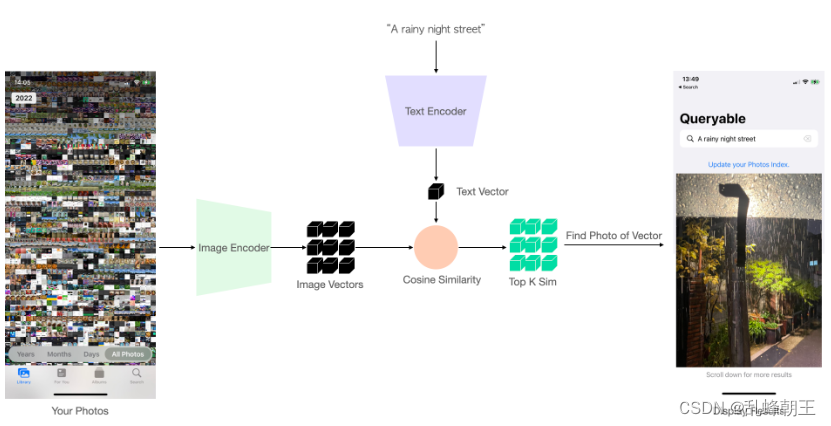

How does it work?

- Encode all album photos using the CLIP Image Encoder, compute image vectors, and save them.

- For each new text query, compute the corresponding text vector using the Text Encoder.

- Compare the similarity between this text vector and each image vector.

- Rank and return the top K most similar results.
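The four steps above can be illustrated with a small Python sketch. It is not Queryable's Swift/Core ML code: the CLIP encoders are replaced by hand-written toy vectors, and it assumes cosine similarity (the usual choice for CLIP embeddings) as the similarity measure. The helper names `normalize`, `build_index`, and `search_top_k` are hypothetical.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def normalize(v: Vector) -> List[float]:
    """Scale a vector to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def build_index(image_vectors: Dict[str, Vector]) -> Dict[str, List[float]]:
    """Step 1 (storage part): keep one normalized vector per photo ID.

    In the app these vectors would come from the CLIP image encoder;
    in this sketch they are passed in directly (assumption).
    """
    return {photo_id: normalize(v) for photo_id, v in image_vectors.items()}


def search_top_k(
    index: Dict[str, List[float]], text_vector: Vector, k: int
) -> List[Tuple[str, float]]:
    """Steps 3 and 4: score every stored image against the query, return the K best."""
    query = normalize(text_vector)
    scores = (
        (photo_id, sum(q * x for q, x in zip(query, vec)))
        for photo_id, vec in index.items()
    )
    # nlargest returns at most k items, sorted from highest to lowest score.
    return heapq.nlargest(k, scores, key=lambda item: item[1])


if __name__ == "__main__":
    # Toy 3-dimensional vectors standing in for CLIP image embeddings (hypothetical values).
    index = build_index({
        "IMG_0001": [0.9, 0.1, 0.0],
        "IMG_0002": [0.1, 0.9, 0.2],
        "IMG_0003": [0.7, 0.3, 0.1],
    })
    # Step 2 stand-in: a hypothetical text-encoder output for "a brown dog sitting on a bench".
    query_vector = [1.0, 0.2, 0.0]
    for photo_id, score in search_top_k(index, query_vector, k=2):
        print(f"{photo_id}: {score:.3f}")
```

Normalizing the image vectors once at indexing time means each query only costs one dot product per photo, which matches the design of encoding the album up front and reusing the saved vectors for every new text query.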

The process is as follows:

For more details, please refer to my blog post: Run CLIP on iPhone to Search Photos.

Run on Xcode

Download ImageEncoder_float32.mlmodelc and TextEncoder_float32.mlmodelc from Google Drive. Clone this repo, put the downloaded models under the CoreMLModels/ path, and run the project in Xcode; it should work.
