State Consistency and Flink SQL

Published: December 19, 2023

State consistency

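Consistency is really about the correctness of the results. Exactly-once means that a record may be processed more than once (for example, replayed after a failure), but it affects the result only once.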

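Three levels:

- At-most-once: each record takes effect once or not at all, so data may be lost.
- At-least-once: each record takes effect once or several times, because records are retried after a failure.
  - This only requires a replayable source: after a failure, it can resend the same data.
- Exactly-once: each record affects the result exactly once, even if it is replayed. On the output side there are three ways to achieve this:
  - Idempotent writes: repeating the same write does not change the result. During a replay, a value may temporarily fall back to an earlier value and then gradually catch up again.
  - Write-ahead log (WAL):
    - First store the result data as log entries in state.
    - When a checkpoint is taken, persist these results together with it.
    - When the notification that the checkpoint has completed arrives, write all buffered results to the external system in one go.
    - Drawback: this write-then-confirm approach can still produce duplicates. If the write succeeds but the acknowledgement is lost, the same results are written again.
  - Two-phase commit (2PC): writing the data and committing it are two separate phases. The transaction is committed only if the write phase completed without errors.
    - The sink operator opens a transaction when it receives the first record. Everything written before the next checkpoint is only pre-written and not yet visible. Once that checkpoint completes successfully, the transaction is finally committed.
    - This places requirements on the external system. It must support transactions and recognize transaction IDs. Committing the same transaction twice must have no effect. Uncommitted transactions must also stay open until the checkpoint completes.
    - In short: when the barrier arrives, the sink pre-commits the current transaction. When the checkpoint completes, it commits that transaction. If the checkpoint fails, the transaction is rolled back.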
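The 2PC steps above can be illustrated with a toy, single-threaded simulation in plain Java. Everything in it is hypothetical: `TwoPhaseCommitDemo`, `ToySink`, and the in-memory list standing in for the external system. The method names only loosely mirror Flink's checkpoint hooks (`snapshotState`, `notifyCheckpointComplete`).

Recovery is deliberately simplified to "abort everything not yet committed". In real Flink, transactions that were pre-committed for a checkpoint that did complete are re-committed on recovery.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class TwoPhaseCommitDemo {

    // Hypothetical transactional sink writing into an in-memory "external system".
    static class ToySink {
        private final List<String> store;                                     // committed data, visible to readers
        private List<String> currentTxn = new ArrayList<>();                  // open transaction
        private final Map<Long, List<String>> pending = new LinkedHashMap<>(); // pre-committed, keyed by checkpoint ID

        ToySink(List<String> store) {
            this.store = store;
        }

        // Records go into the open transaction and are not yet visible.
        void invoke(String record) {
            currentTxn.add(record);
        }

        // Barrier of checkpoint `id` arrives: pre-commit the open transaction and start a new one.
        void snapshotState(long id) {
            pending.put(id, currentTxn);
            currentTxn = new ArrayList<>();
        }

        // Checkpoint `id` completed: commit all pre-committed transactions up to it.
        // A repeated notification finds nothing left to commit, so committing is idempotent.
        void notifyCheckpointComplete(long id) {
            Iterator<Map.Entry<Long, List<String>>> it = pending.entrySet().iterator();
            while (it.hasNext()) {
                Map.Entry<Long, List<String>> e = it.next();
                if (e.getKey() <= id) {
                    store.addAll(e.getValue());
                    it.remove();
                }
            }
        }

        // Failure (simplified): roll back everything that is not committed yet.
        void abortAll() {
            pending.clear();
            currentTxn = new ArrayList<>();
        }
    }

    public static void main(String[] args) {
        List<String> store = new ArrayList<>();
        ToySink sink = new ToySink(store);

        sink.invoke("a");
        sink.invoke("b");
        sink.snapshotState(1);
        sink.notifyCheckpointComplete(1);   // a and b become visible

        sink.invoke("c");                    // written after checkpoint 1 ...
        sink.abortAll();                     // ... but the job fails before checkpoint 2

        sink.invoke("c");                    // after the restart the source replays c
        sink.snapshotState(2);
        sink.notifyCheckpointComplete(2);
        sink.notifyCheckpointComplete(2);    // a duplicate notification has no effect

        System.out.println(store);           // prints [a, b, c]: nothing lost, nothing duplicated
    }
}
```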

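Exactly-once guarantees between Flink and Kafka

- Enable checkpointing.
- Set the consumer transaction isolation level to read committed (`isolation.level=read_committed`), so that records from uncommitted or aborted transactions are not read.
- Use `DeliveryGuarantee.EXACTLY_ONCE` on the sink and set the Kafka transaction timeout to about 10 minutes. It must not exceed the broker's `transaction.max.timeout.ms`, which is 15 minutes by default.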

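```java
import org.apache.commons.lang3.RandomUtils;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.connector.base.DeliveryGuarantee;
import org.apache.flink.connector.kafka.sink.KafkaRecordSerializationSchema;
import org.apache.flink.connector.kafka.sink.KafkaSink;
import org.apache.flink.connector.kafka.source.KafkaSource;
import org.apache.flink.connector.kafka.source.enumerator.initializer.OffsetsInitializer;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.kafka.clients.consumer.OffsetResetStrategy;
import org.apache.kafka.clients.producer.ProducerConfig;

public class Flink02_KafkaToFlink {
    public static void main(String[] args) {
        // 1. Create the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // When run locally, the default parallelism is the number of CPU cores; use 1 for this demo
        env.setParallelism(1);
        // Enable checkpointing every second (required for exactly-once)
        env.enableCheckpointing(1000L);

        // Kafka source
        KafkaSource<String> kafkaSource = KafkaSource.<String>builder()
                .setBootstrapServers("hadoop102:9092,hadoop103:9092")
                .setGroupId("flinkb")
                .setTopics("topicA")
                // Prefer the offsets committed by the consumer group; if none exist, reset with the given strategy
                .setStartingOffsets(OffsetsInitializer.committedOffsets(OffsetResetStrategy.LATEST))
                .setValueOnlyDeserializer(new SimpleStringSchema())
                // Any other Kafka client setting goes through the generic setProperty method;
                // read_committed: only read records from committed transactions
                .setProperty("isolation.level", "read_committed")
                .build();

        DataStreamSource<String> ds = env.fromSource(kafkaSource, WatermarkStrategy.noWatermarks(), "kafkasource");

        // Processing logic goes here

        // Kafka sink
        KafkaSink<String> kafkaSink = KafkaSink.<String>builder()
                .setBootstrapServers("hadoop102:9092,hadoop103:9092")
                .setRecordSerializer(
                        KafkaRecordSerializationSchema.<String>builder()
                                .setTopic("first")
                                .setValueSerializationSchema(new SimpleStringSchema())
                                .build()
                )
                // Delivery guarantee:
                // AT_LEAST_ONCE: records may be duplicated, so downstream has to deduplicate
                // EXACTLY_ONCE: records are written in Kafka transactions that are committed on checkpoint completion
                // .setDeliveryGuarantee(DeliveryGuarantee.AT_LEAST_ONCE)
                .setDeliveryGuarantee(DeliveryGuarantee.EXACTLY_ONCE)
                .setTransactionalIdPrefix("flink" + RandomUtils.nextInt(0, 100000))
                // .setProperty(ProducerConfig.RETRIES_CONFIG, "10")
                // Without this, EXACTLY_ONCE fails with "kafka transaction timeout is larger than broker":
                // the sink's transaction timeout defaults to 1 h, but the broker allows at most 15 min.
                // The value must be a plain number of milliseconds: 10 minutes = 600000 ms
                .setProperty(ProducerConfig.TRANSACTION_TIMEOUT_CONFIG, String.valueOf(10 * 60 * 1000))
                .build();

        // Write to Kafka (the sink acts as a producer). Attach this sink only once: two sink
        // operators sharing one transactional ID prefix would generate clashing transactional IDs.
        ds.sinkTo(kafkaSink);

        try {
            env.execute();
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }
}
```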
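The timeout rule behind the 10-minute setting can be written down as a small check. This is a hypothetical helper (`KafkaTxnTimeoutCheck` is not part of Flink or Kafka) that encodes two constraints.

- The producer's transaction timeout must not exceed the broker's `transaction.max.timeout.ms`, otherwise the broker rejects it.
- As a rule of thumb, the timeout should also exceed the checkpoint interval plus the time a failed job needs to recover. Otherwise Kafka may abort a transaction before Flink gets to commit it, and that data is lost.

The recovery-time bound `maxDowntimeMs` is an assumed input.

```java
public class KafkaTxnTimeoutCheck {

    // Kafka broker default for transaction.max.timeout.ms: 15 minutes
    static final long BROKER_MAX_DEFAULT_MS = 15 * 60 * 1000L;

    // Hypothetical helper: returns null if the timeout looks safe, otherwise a description of the problem.
    // maxDowntimeMs is an assumed upper bound on how long a failed job takes to come back.
    static String check(long txnTimeoutMs, long checkpointIntervalMs, long maxDowntimeMs, long brokerMaxMs) {
        if (txnTimeoutMs > brokerMaxMs) {
            return "rejected by broker: timeout exceeds transaction.max.timeout.ms";
        }
        if (txnTimeoutMs <= checkpointIntervalMs + maxDowntimeMs) {
            return "risky: Kafka may abort the transaction before Flink commits it";
        }
        return null;
    }

    public static void main(String[] args) {
        long checkpointMs = 1000L;            // env.enableCheckpointing(1000L)
        long downtimeMs = 5 * 60 * 1000L;     // assumption: the job recovers within 5 minutes
        long[] candidates = {60 * 60 * 1000L, 10 * 60 * 1000L};  // 1 h default vs. 10 min
        for (long t : candidates) {
            String problem = check(t, checkpointMs, downtimeMs, BROKER_MAX_DEFAULT_MS);
            System.out.println(t / 60000 + " min -> " + (problem == null ? "ok" : problem));
        }
    }
}
```

With the default broker limit, the 1-hour timeout is rejected and 10 minutes passes both checks.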

Flink SQL 1.17

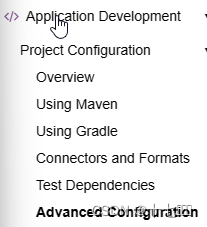

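The Flink SQL interfaces still change between versions, so check the official documentation for your version when something differs.

The location on the official website shown above contains the official description of Flink's dependencies.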

Table API dependencies

Three dependencies:

1. flink-table-api-java-uber-1.17.2.jar (all Java APIs)
2. flink-table-runtime-1.17.2.jar (the Table runtime)
3. flink-table-planner-loader-1.17.2.jar (the query planner, i.e. the SQL parser and optimizer)

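Static import: write `import static` and end the import with `*` to import all static members of a class. This mainly makes the `$` method convenient to use. Otherwise every `$` call needs the `Expressions.` class-name prefix.

```java
import static org.apache.flink.table.api.Expressions.*;

// `table` is a Table with the columns id, vc and ts
table.where($("vc").isGreaterOrEqual(100))
        .select($("id"), $("vc"), $("ts"))
        .execute()
        .print();
```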

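Program structure

- Prepare the environment
  - Stream table environment (`StreamTableEnvironment`): a table environment created on top of a stream execution environment
  - Table environment (`TableEnvironment`): independent of streams at the programming level, but still executed as streams underneath
- Create tables
  - From a stream: convert a DataStream into a table
  - Connector tables: tables defined with `CREATE TABLE ... WITH ('connector' = ...)`
- Transform
  - Use the Table API on `Table` objects
  - Write SQL directly
- Output
  - Write results through a `Table` object or a connector table
  - Convert the table back into a stream and output it with the DataStream API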

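Tables in stream processing

- The data being processed
  - Relational: a bounded set of tuples
  - Stream processing: an unbounded sequence of tuples
- Access to the data
  - Relational: the complete input is available to the query
  - Stream processing: the data keeps arriving, so it is dynamic

Therefore the tables in stream processing are dynamic tables. Queries over them must be continuous queries, which never terminate and keep updating their results as new rows arrive.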
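To make "continuous query" concrete, here is a minimal plain-Java sketch, not Flink code. `ContinuousQueryDemo` is a hypothetical name.

The sketch maintains the result table of `SELECT user, COUNT(*) AS cnt FROM clicks GROUP BY user` and updates it every time a row arrives. It never computes the result over a finished input.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class ContinuousQueryDemo {

    // Current contents of the dynamic result table of
    // SELECT user, COUNT(*) AS cnt FROM clicks GROUP BY user
    private final Map<String, Long> result = new LinkedHashMap<>();

    // The query never "finishes": every arriving row updates the result table.
    // Returns a snapshot of the result table after this row.
    Map<String, Long> onRow(String user) {
        result.merge(user, 1L, Long::sum);
        return new LinkedHashMap<>(result);
    }

    public static void main(String[] args) {
        ContinuousQueryDemo query = new ContinuousQueryDemo();
        for (String user : new String[] {"zhangsan", "lisi", "zhangsan"}) {
            System.out.println(user + " arrives -> " + query.onRow(user));
        }
        // zhangsan arrives -> {zhangsan=1}
        // lisi arrives -> {zhangsan=1, lisi=1}
        // zhangsan arrives -> {zhangsan=2, lisi=1}
    }
}
```

The third row changes a result that was already emitted (`zhangsan=1` becomes `zhangsan=2`). That is exactly what makes this an update query rather than an append query.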

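Converting between streams and tables

Continuous queries

- Append query: results are only appended to the end of the result table and never change once emitted (e.g. filters, projections, window aggregations). Convert with `toDataStream()`.
- Update query: new input rows modify previously emitted results (e.g. a non-windowed `GROUP BY` aggregation). Convert with `toChangelogStream()`.
- If you are not sure which kind a query is, use `toChangelogStream()`, which handles both.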

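```java
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.Table;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
import org.apache.flink.types.Row;

public class Flink04_TableToStreamQQ {

    // Minimal POJO assumed for this example (the original project defines its own Event class)
    public static class Event {
        public String user;
        public String url;
        public Long ts;

        public Event() {
        }

        public Event(String user, String url, Long ts) {
            this.user = user;
            this.url = url;
            this.ts = ts;
        }
    }

    public static void main(String[] args) {
        // 1. Create the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // When run locally, the default parallelism is the number of CPU cores; use 1 for this demo
        env.setParallelism(1);
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

        // Each socket line looks like: user,url,ts
        SingleOutputStreamOperator<Event> ds = env.socketTextStream("hadoop102", 8888)
                .map(line -> {
                    String[] fields = line.split(",");
                    return new Event(fields[0].trim(), fields[1].trim(), Long.valueOf(fields[2].trim()));
                });

        Table table = tableEnv.fromDataStream(ds);
        tableEnv.createTemporaryView("t1", table);

        // SQL (`user` is a reserved keyword in Flink SQL, so it is quoted with backticks)
        // Append query: each input row produces at most one new result row
        String appendSQL = "select `user`, url, ts from t1 where `user` <> 'zhangsan'";
        // Update query: each new row for a user updates that user's previously emitted count
        String updateSQL = "select `user`, count(*) cnt from t1 group by `user`";
        Table resultTable = tableEnv.sqlQuery(updateSQL);

        // Convert the table to a stream.
        // toDataStream() fails on update queries:
        // doesn't support consuming update changes which is produced by node GroupAggregate(groupBy=[user], select=[user, COUNT(*) AS cnt])
        // DataStream<Row> rowDs = tableEnv.toDataStream(resultTable);

        // When the query updates results, use toChangelogStream(); it supports both append and update queries
        DataStream<Row> rowDs = tableEnv.toChangelogStream(resultTable);
        rowDs.print();

        try {
            env.execute();
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }
}
```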

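Converting dynamic tables to streams

- Append-only stream: used when the query only ever appends results, so every change is an insert.
- Retract stream:
  - Contains two kinds of messages: add messages and retract messages.
  - An update is encoded as a retract message for the old row followed by an add message for the new row. Downstream operators must handle both kinds.
- Upsert stream:
  - Contains two kinds of messages: upsert messages (carrying a unique key) and delete messages.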
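The difference between the retract and upsert encodings is easiest to see on the same input. The following plain-Java sketch uses hypothetical names (`ChangelogEncodingDemo`, and the labels `add`, `retract`, `upsert`). It encodes the changes of the per-user click count from `Flink04_TableToStreamQQ` both ways.

Flink's `toChangelogStream()` reports the retract-style changes with the row kinds `+I`, `-U`, `+U` (and `-D` for deletes). For the same input, `Flink04_TableToStreamQQ` should therefore print something like `+I[zhangsan, 1]`, `+I[lisi, 1]`, `-U[zhangsan, 1]`, `+U[zhangsan, 2]`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class ChangelogEncodingDemo {

    // Retract stream: an update is sent as "retract the old row" followed by "add the new row".
    static List<String> retractStream(String... users) {
        Map<String, Long> counts = new HashMap<>();
        List<String> out = new ArrayList<>();
        for (String user : users) {
            Long old = counts.get(user);
            long updated = (old == null) ? 1L : old + 1;
            if (old != null) {
                out.add("retract(" + user + ", " + old + ")");
            }
            out.add("add(" + user + ", " + updated + ")");
            counts.put(user, updated);
        }
        return out;
    }

    // Upsert stream: the key (user) identifies the row, so every change is a single upsert message.
    static List<String> upsertStream(String... users) {
        Map<String, Long> counts = new HashMap<>();
        List<String> out = new ArrayList<>();
        for (String user : users) {
            long updated = counts.merge(user, 1L, Long::sum);
            out.add("upsert(" + user + ", " + updated + ")");
        }
        return out;
    }

    public static void main(String[] args) {
        String[] clicks = {"zhangsan", "lisi", "zhangsan"};
        System.out.println(retractStream(clicks));
        // [add(zhangsan, 1), add(lisi, 1), retract(zhangsan, 1), add(zhangsan, 2)]
        System.out.println(upsertStream(clicks));
        // [upsert(zhangsan, 1), upsert(lisi, 1), upsert(zhangsan, 2)]
    }
}
```

The upsert encoding needs fewer messages, but only works when the result has a unique key that downstream systems can upsert on.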

Connectors

- DataGen and Print connectors

```java
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class Flink01_DataGenPrint {
    public static void main(String[] args) {
        // Alternative: a pure table environment, not tied to a DataStream environment
        // TableEnvironment tableEnv = TableEnvironment.create(EnvironmentSettings.newInstance().build());

        // 1. Prepare the table environment: create it from a stream execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

        // DataGen source: one row per second; random 6-character id, random vc between 100 and 1000,
        // sequential ts from 1000000 to 100000000
        String createTable =
                " create table t1 ( " +
                " id STRING , " +
                " vc INT ," +
                " ts BIGINT " +
                " ) WITH (" +
                " 'connector' = 'datagen' ," +
                " 'rows-per-second' = '1' ," +
                " 'fields.id.kind' = 'random' , " +
                " 'fields.id.length' = '6' ," +
                " 'fields.vc.kind' = 'random' , " +
                " 'fields.vc.min' = '100' , " +
                " 'fields.vc.max' = '1000' ," +
                " 'fields.ts.kind' = 'sequence' , " +
                " 'fields.ts.start' = '1000000' , " +
                " 'fields.ts.end' = '100000000' " +
                " )";
        tableEnv.executeSql(createTable);

        // Query directly and print to the console:
        // tableEnv.sqlQuery("select * from t1 where vc >= 200").execute().print();

        // Print sink: writes every row to stdout, prefixed with 'print>'
        String sinkTable =
                "create table t2(" +
                "id string," +
                "vc int," +
                "ts bigint" +
                ") with (" +
                " 'connector' = 'print', " +
                " 'print-identifier' = 'print>' " +
                ")";
        tableEnv.executeSql(sinkTable);

        // Continuously insert the filtered rows into the print table
        tableEnv.executeSql("insert into t2 select id, vc, ts from t1 where vc >= 200");
    }
}
```

- File connector

```java
import org.apache.flink.table.api.EnvironmentSettings;
import org.apache.flink.table.api.TableEnvironment;

public class Flink02_FileConnector {
    public static void main(String[] args) {
        TableEnvironment tableEnvironment = TableEnvironment.create(EnvironmentSettings.newInstance().build());

        // File source: reads input/ws.txt as CSV; `file.size` is a metadata column (file size in bytes)
        String sourceTable =
                " create table t1 ( " +
                " id STRING , " +
                " vc INT ," +
                " ts BIGINT," +
                // " `file.name` string not null METADATA," +  // disabled: on Windows the colon after the drive letter could not be handled
                " `file.size` bigint not null METADATA" +
                " ) WITH (" +
                " 'connector' = 'filesystem' ," +
                " 'path' = 'input/ws.txt' ," +
                " 'format' = 'csv' " +
                " )";
        tableEnvironment.executeSql(sourceTable);

        // tableEnvironment.sqlQuery(" select * from t1 ").execute().print();

        // Transformations go here...

        // File sink: writes JSON files into the output directory
        String sinkTable =
                " create table t2 ( " +
                " id STRING , " +
                " vc INT ," +
                " ts BIGINT," +
                // " `file.name` string not null METADATA," +  // disabled: on Windows the colon after the drive letter could not be handled
                " file_size bigint" +
                " ) WITH (" +
                " 'connector' = 'filesystem' ," +
                " 'path' = 'output' ," +
                " 'format' = 'json' " +
                " )";
        tableEnvironment.executeSql(sinkTable);

        tableEnvironment.executeSql("insert into t2 " +
                "select id, vc, ts, `file.size` from t1");
    }
}
```

- Kafka connector

```java
import org.apache.flink.table.api.EnvironmentSettings;
import org.apache.flink.table.api.TableEnvironment;

public class Flink03_KafkaConnector {
    public static void main(String[] args) {
        TableEnvironment tableEnvironment = TableEnvironment.create(EnvironmentSettings.newInstance().build());

        // Kafka source; topic, partition and offset are metadata columns of each record
        String sourceTable =
                " create table t1 ( " +
                " id STRING , " +
                " vc INT ," +
                " ts BIGINT," +
                " `topic` string not null METADATA," +
                " `partition` int not null METADATA," +
                " `offset` bigint not null METADATA" +
                " ) WITH (" +
                " 'connector' = 'kafka' ," +
                " 'properties.bootstrap.servers' = 'hadoop102:9092,hadoop103:9092' ," +
                " 'topic' = 'topicA', " +
                " 'properties.group.id' = 'flinksql', " +
                " 'value.format' = 'csv', " +
                // Start from the group's committed offsets; if there are none, fall back to auto.offset.reset
                " 'scan.startup.mode' = 'group-offsets'," +
                " 'properties.auto.offset.reset' = 'latest' " +
                " )";

        // Create the table
        tableEnvironment.executeSql(sourceTable);

        // Print the query results:
        // tableEnvironment.sqlQuery(" select * from t1 ").execute().print();

        // Transformations go here...

        // Kafka sink
        String sinkTable =
                " create table t2 ( " +
                " id STRING , " +
                " vc INT ," +
                " ts BIGINT," +
                " `topic` string " +
                " ) WITH (" +
                " 'connector' = 'kafka' ," +
                " 'properties.bootstrap.servers' = 'hadoop102:9092,hadoop103:9092' ," +
                " 'topic' = 'topicB', " +
                " 'sink.delivery-guarantee' = 'at-least-once', " +
                // For 'exactly-once', a transactional ID prefix is required, and usually also
                // a transaction timeout within the broker's limit:
                // " 'properties.transaction.timeout.ms' = '', " +
                // " 'sink.transactional-id-prefix' = 'xf', " +
                " 'value.format' = 'json' " +
                // Source-only options, not needed by the sink:
                // " 'properties.group.id' = 'flinksql', " +
                // " 'scan.startup.mode' = 'group-offsets'," +
                // " 'properties.auto.offset.reset' = 'latest' " +
                " )";
        tableEnvironment.executeSql(sinkTable);

        tableEnvironment.executeSql("insert into t2 " +
                "select id, vc, ts, `topic` from t1");
    }
}
```
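The source above combines `'scan.startup.mode' = 'group-offsets'` with `'properties.auto.offset.reset' = 'latest'`. The DataStream example earlier uses `OffsetsInitializer.committedOffsets(OffsetResetStrategy.LATEST)` for the same purpose.

The per-partition decision can be sketched as a small function. `GroupOffsetsStartupDemo` and `startOffset` are hypothetical names. The case where Kafka also resets because a committed offset is out of range is deliberately left out.

```java
import java.util.OptionalLong;

public class GroupOffsetsStartupDemo {

    // Values of 'properties.auto.offset.reset' considered here
    enum Reset { EARLIEST, LATEST }

    // Hypothetical helper: start offset of one partition for 'scan.startup.mode' = 'group-offsets'.
    // Simplification: an out-of-range committed offset (which Kafka would also reset) is not modeled.
    static long startOffset(OptionalLong committed, Reset reset, long earliest, long latest) {
        if (committed.isPresent()) {
            return committed.getAsLong();                     // resume where the consumer group left off
        }
        return reset == Reset.EARLIEST ? earliest : latest;   // no committed offset: apply the reset strategy
    }

    public static void main(String[] args) {
        // A partition holding offsets 0..99: earliest = 0, latest (next offset to be written) = 100
        System.out.println(startOffset(OptionalLong.of(42), Reset.LATEST, 0, 100));    // 42
        System.out.println(startOffset(OptionalLong.empty(), Reset.LATEST, 0, 100));   // 100: only new records
        System.out.println(startOffset(OptionalLong.empty(), Reset.EARLIEST, 0, 100)); // 0: the whole partition
    }
}
```

With `latest`, a brand-new consumer group therefore only sees records produced after the job starts. Use `earliest` if existing data should be read as well.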

- JDBC connector

Source: https://blog.csdn.net/qq_44273739/article/details/135008552

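This article was contributed by an internet user and represents only the author's views, not the position of this site. This site only provides information storage space, does not own the rights, and bears no related legal responsibility. If any content infringes rights, violates laws, or is factually incorrect, please send a complaint to the 我的编程经验分享网 mailbox chenni525@qq.com; once verified, it will be removed immediately.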

