KPU Feature Recognition

Published: January 8, 2024

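The earlier color recognition and QR code recognition experiments used only simple image-processing functions. More advanced machine vision needs the KPU. It can be loosely compared to a computer's GPU (graphics card): in essence, it performs high-speed computation on image data.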

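Here is a brief introduction to the K210's KPU. The KPU is a neural network processor built into the K210. It runs convolutional neural network computation at low power, reports the size, coordinates, and class of detected targets in real time, and can detect and classify faces or objects. The KPU has the following characteristics:

- Supports fixed-point models trained with mainstream training frameworks under specific restriction rules
- No direct limit on the number of network layers; the parameters of each convolutional layer can be configured separately, including the number of input and output channels and the input and output row width and column height
- Supports two convolution kernel sizes, 1x1 and 3x3
- Supports any form of activation function
- In real-time operation, the maximum supported neural network parameter size is 5.5 MiB to 5.9 MiB
- In non-real-time operation, the maximum supported network parameter size is (Flash capacity - software size)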
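As a rough illustration of the two size limits above, here is a minimal sketch in plain Python. It treats the kmodel file size as a stand-in for the parameter size, which is an assumption, and uses the conservative 5.5 MiB end of the quoted range. The byte counts come from the `load_kmodel(0x300000, size)` comments in the scripts later in this article; the helper names and the 16 MiB flash / 3 MiB firmware figures are hypothetical.

```python
MIB = 1024 * 1024

# Conservative end of the 5.5-5.9 MiB real-time range quoted above.
REALTIME_LIMIT_BYTES = int(5.5 * MIB)


def fits_realtime(model_bytes):
    """Return True if the model fits the real-time parameter budget.

    Assumption: the kmodel file size approximates the parameter size.
    """
    return model_bytes <= REALTIME_LIMIT_BYTES


def non_realtime_limit(flash_bytes, software_bytes):
    """Non-real-time budget: Flash capacity minus software size."""
    return flash_bytes - software_bytes


# Sizes in bytes, from the load_kmodel(0x300000, size) comments in this article.
model_sizes = {
    "yolo_face_detect.kmodel": 278440,
    "hand_detect.kmodel": 1438888,
    "detect_5.kmodel": 555176,
}

for name, size in model_sizes.items():
    print(name, round(size / MIB, 2), "MiB, fits real-time:", fits_realtime(size))

# Hypothetical board: 16 MiB of flash, 3 MiB of it used by firmware.
print(non_realtime_limit(16 * MIB, 3 * MIB) // MIB, "MiB available")
```

All three models are well under the real-time limit, which fits with them being run frame by frame in the experiments below.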

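Put simply, the KPU can load and run different models to implement all kinds of machine vision functions. At present, the KPU on the K210 mostly runs object detection algorithms based on the YOLO (You Only Look Once) framework. The MicroPython wrapper lets us use it very conveniently without worrying about the underlying implementation. Let's look at how the KPU object is used to run YOLO: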

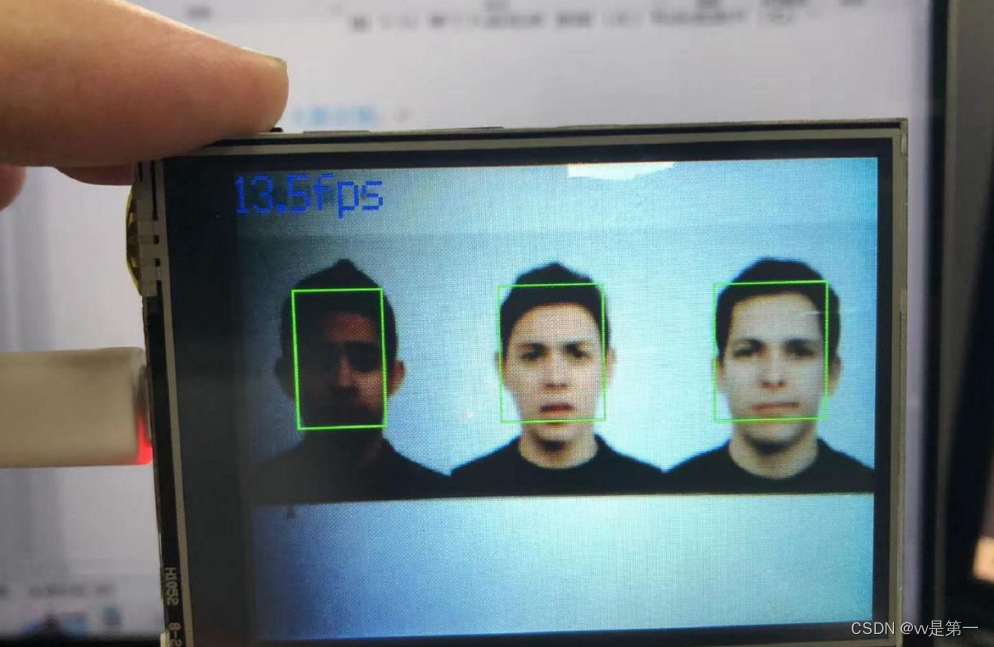

1: Face Detection

Objective:

Draw a rectangle around every face in the camera frame.

Explanation:

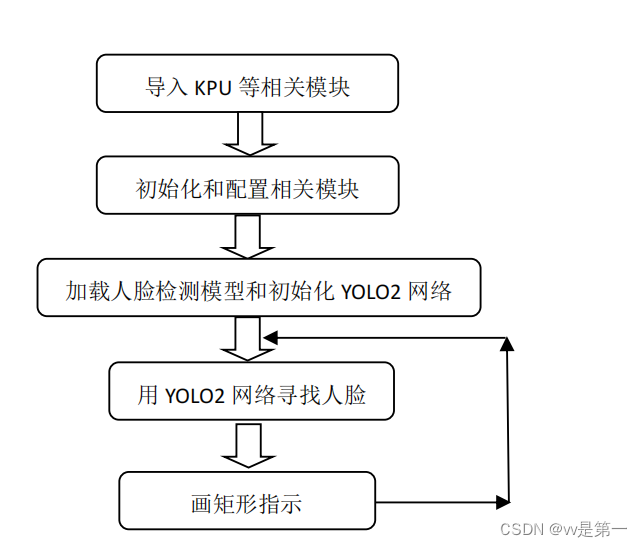

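As the table above shows, the KPU module loads the YOLO2 network directly and combines it with a face detection model to detect faces. The programming approach is as follows: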

    # Experiment: face detection
    # Translation and comments: 01Studio

    # Import the required modules
    import sensor, image, time, lcd, gc
    from maix import KPU

    # Initialize the LCD
    lcd.init()

    # Initialize the camera
    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QVGA)
    sensor.set_vflip(True)  # camera mounted rear-facing
    sensor.skip_frames(time = 1000)
    clock = time.clock()

    # Buffer that matches the network input size (320x256)
    od_img = image.Image(size=(320,256))

    # Create the KPU object
    kpu = KPU()
    kpu.load_kmodel("/sd/yolo_face_detect.kmodel")  # load the KPU model from the SD card root
    #kpu.load_kmodel(0x300000, 278440)  # alternative: flash the kmodel to 0x300000 (3 MB offset) with the firmware download tool

    anchor = (0.893, 1.463, 0.245, 0.389, 1.55, 2.58, 0.375, 0.594, 3.099, 5.038, 0.057, 0.090, 0.567, 0.904, 0.101, 0.160, 0.159, 0.255)
    kpu.init_yolo2(anchor, anchor_num=9, img_w=320, img_h=240, net_w=320 , net_h=256 ,layer_w=10 ,layer_h=8, threshold=0.7, nms_value=0.3, classes=1)

    while True:
        #print("mem free:",gc.mem_free())  # check free memory
        clock.tick()
        img = sensor.snapshot()
        a = od_img.draw_image(img, 0,0)
        od_img.pix_to_ai()

        # Feed the camera image to the KPU and run the YOLO model
        kpu.run_with_output(od_img)
        dect = kpu.regionlayer_yolo2()
        fps = clock.fps()

        if len(dect) > 0:  # at least one face detected
            print("dect:",dect)
            for l in dect:  # draw a rectangle around each face
                a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(0, 255, 0))

        a = img.draw_string(0, 0, "%2.1ffps" %(fps), color=(0, 60, 128), scale=2.0)  # show the FPS on the LCD
        lcd.display(img)
        gc.collect()  # reclaim memory

    kpu.deinit()

The face positions are also printed to the serial terminal, so this can be used for face tracking.
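To make the tracking idea concrete, here is a minimal sketch in plain Python of how a printed detection could be turned into a steering error. It assumes each detection starts with `x, y, w, h`, which matches how the script passes `l[0]..l[3]` to `draw_rectangle`. The helper names and the sample detections are made up for illustration, and driving a servo or motor from the offset is left out.

```python
FRAME_W, FRAME_H = 320, 240  # QVGA, as set by sensor.set_framesize(sensor.QVGA)


def box_center(det):
    """Center of a detection whose first four fields are x, y, w, h."""
    x, y, w, h = det[0], det[1], det[2], det[3]
    return x + w // 2, y + h // 2


def largest(dets):
    """Pick the detection with the largest box area (likely the nearest face)."""
    return max(dets, key=lambda d: d[2] * d[3])


def tracking_offset(det, frame_w=FRAME_W, frame_h=FRAME_H):
    """Pixel offset of the box center from the frame center.

    Positive dx means the face is right of center, positive dy means below.
    """
    cx, cy = box_center(det)
    return cx - frame_w // 2, cy - frame_h // 2


# Made-up sample in the assumed layout (x, y, w, h, class, probability).
dect = [[100, 60, 40, 50, 0, 0.91], [200, 100, 80, 90, 0, 0.85]]

target = largest(dect)
dx, dy = tracking_offset(target)
print("target:", target, "offset:", (dx, dy))
```

A pan-tilt controller would try to drive `dx` and `dy` toward zero on every frame.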

2: Hand Detection

    # Experiment: hand detection
    # Translation and comments: 01Studio

    # Import the required modules
    import sensor, image, time, lcd
    from maix import KPU
    import gc

    lcd.init()
    sensor.reset()                       # Reset and initialize the sensor. It will
                                         # run automatically, call sensor.run(0) to stop
    sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
    sensor.set_vflip(True)               # camera mounted rear-facing
    sensor.skip_frames(time = 1000)      # Wait for the settings to take effect.
    clock = time.clock()                 # Create a clock object to track the FPS.

    # Buffer that matches the network input size (320x256)
    od_img = image.Image(size=(320,256))

    # Create the KPU object
    anchor = (0.8125, 0.4556, 1.1328, 1.2667, 1.8594, 1.4889, 1.4844, 2.2000, 2.6484, 2.9333)
    kpu = KPU()
    print("ready load model")

    # Load the KPU model from the SD card root
    kpu.load_kmodel("/sd/hand_detect.kmodel")
    # Alternative: flash the kmodel to 0x300000 (3 MB offset) with the firmware download tool
    #kpu.load_kmodel(0x300000, 1438888)
    kpu.init_yolo2(anchor, anchor_num=5, img_w=320, img_h=240, net_w=320 , net_h=256 ,layer_w=10 ,layer_h=8, threshold=0.7, nms_value=0.3, classes=1)

    while True:
        gc.collect()
        clock.tick()  # Update the FPS clock.
        img = sensor.snapshot()
        a = od_img.draw_image(img, 0,0)
        od_img.pix_to_ai()

        # Feed the camera image to the KPU and run the YOLO model
        kpu.run_with_output(od_img)
        dect = kpu.regionlayer_yolo2()
        fps = clock.fps()

        if len(dect) > 0:  # at least one hand detected
            print("dect:",dect)
            for l in dect:  # draw a rectangle around each hand
                a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(0, 255, 0))

        a = img.draw_string(0, 0, "%2.1ffps" %(fps), color=(0, 60, 128), scale=2.0)
        lcd.display(img)

    kpu.deinit()
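The face and hand scripts pass different `anchor` tuples and `anchor_num` values to `init_yolo2`. A small sketch in plain Python shows how those numbers relate. It assumes the flat tuple holds (width, height) pairs, which is the usual YOLOv2 convention, and that the network downsamples by a factor of 32, which is consistent with `net_w=320, net_h=256` giving `layer_w=10, layer_h=8`. The helper names are hypothetical.

```python
def anchor_pairs(flat):
    """Group a flat anchor tuple into pairs (assumed to be width, height)."""
    if len(flat) % 2:
        raise ValueError("anchor tuple must hold an even number of values")
    return [(flat[i], flat[i + 1]) for i in range(0, len(flat), 2)]


def grid_size(net_w, net_h, stride=32):
    """Output grid for a network that downsamples by `stride` (assumed 32)."""
    return net_w // stride, net_h // stride


# Anchor tuples copied from the face and hand detection scripts above.
face_anchor = (0.893, 1.463, 0.245, 0.389, 1.55, 2.58, 0.375, 0.594, 3.099,
               5.038, 0.057, 0.090, 0.567, 0.904, 0.101, 0.160, 0.159, 0.255)
hand_anchor = (0.8125, 0.4556, 1.1328, 1.2667, 1.8594, 1.4889, 1.4844,
               2.2000, 2.6484, 2.9333)

print("face anchor_num:", len(anchor_pairs(face_anchor)))
print("hand anchor_num:", len(anchor_pairs(hand_anchor)))
print("layer_w, layer_h:", grid_size(320, 256))
```

When swapping in a different model, checking that `anchor_num` equals half the tuple length is a cheap sanity test before calling `init_yolo2`.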


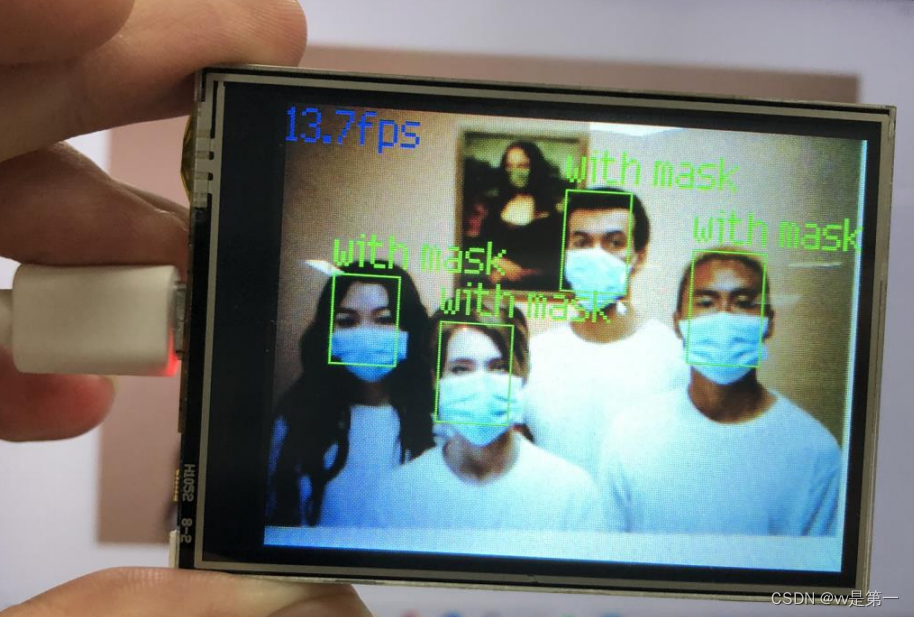

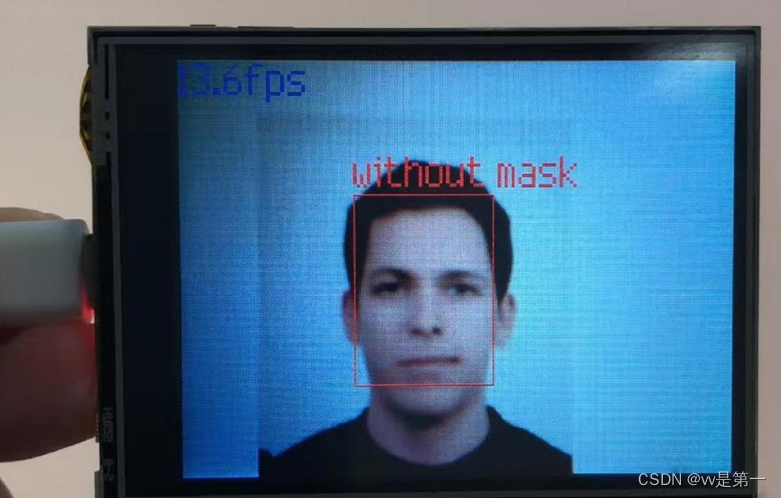

3: Mask Detection

    # Experiment: mask detection
    # Translation and comments: 01Studio

    # Import the required modules
    import sensor, image, time, lcd
    from maix import KPU
    import gc

    lcd.init()
    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QVGA)
    sensor.set_vflip(True)  # camera mounted rear-facing
    sensor.skip_frames(time = 1000)
    clock = time.clock()

    # Buffer that matches the network input size (320x256)
    od_img = image.Image(size=(320,256), copy_to_fb=False)

    # Create the KPU object
    anchor = (0.156250, 0.222548, 0.361328, 0.489583, 0.781250, 0.983133, 1.621094, 1.964286, 3.574219, 3.94000)
    kpu = KPU()
    print("ready load model")

    # Load the KPU model from the SD card root
    kpu.load_kmodel("/sd/detect_5.kmodel")
    # Alternative: flash the kmodel to 0x300000 (3 MB offset) with the firmware download tool
    #kpu.load_kmodel(0x300000, 555176)
    kpu.init_yolo2(anchor, anchor_num=5, img_w=320, img_h=240, net_w=320 , net_h=256 ,layer_w=10 ,layer_h=8, threshold=0.7, nms_value=0.4, classes=2)

    while True:
        #print("mem free:",gc.mem_free())
        clock.tick()  # Update the FPS clock.
        img = sensor.snapshot()
        a = od_img.draw_image(img, 0,0)
        od_img.pix_to_ai()

        # Feed the camera image to the KPU and run the YOLO model
        kpu.run_with_output(od_img)
        dect = kpu.regionlayer_yolo2()
        fps = clock.fps()

        if len(dect) > 0:  # at least one face detected
            print("dect:",dect)
            for l in dect:
                if l[4]:  # class 1: wearing a mask
                    a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(0, 255, 0))
                    a = img.draw_string(l[0],l[1]-24, "with mask", color=(0, 255, 0), scale=2)
                else:  # class 0: not wearing a mask
                    a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(255, 0, 0))
                    a = img.draw_string(l[0],l[1]-24, "without mask", color=(255, 0, 0), scale=2)

        a = img.draw_string(0, 0, "%2.1ffps" %(fps), color=(0, 60, 128), scale=2.0)
        lcd.display(img)
        gc.collect()

    kpu.deinit()
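The scripts so far pass `nms_value` (0.3 or 0.4) to `init_yolo2` without explaining it. In YOLO-style detectors this kind of value is normally the overlap limit for non-maximum suppression (NMS), and the sketch below works under that assumption. It is a plain-Python illustration of greedy, class-agnostic NMS, not the firmware's implementation. The `(x, y, w, h, class, probability)` record layout is also an assumption (the scripts only confirm that index 4 is the class), and the sample boxes are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h, ...)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union else 0.0


def nms(dets, iou_limit):
    """Greedy NMS: keep the most confident box, drop boxes overlapping it."""
    kept = []
    for det in sorted(dets, key=lambda d: d[5], reverse=True):
        if all(iou(det, k) <= iou_limit for k in kept):
            kept.append(det)
    return kept


# Made-up detections: the first two overlap heavily, the third stands alone.
sample = [
    (100, 100, 50, 50, 0, 0.90),
    (105, 105, 50, 50, 0, 0.80),
    (200, 50, 40, 40, 1, 0.75),
]

print("IoU of first two boxes:", round(iou(sample[0], sample[1]), 3))
print("kept after NMS at 0.4:", nms(sample, 0.4))
```

Under this reading, a lower `nms_value` merges near-duplicate boxes more aggressively, while `threshold` decides how confident a box must be to be reported at all.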


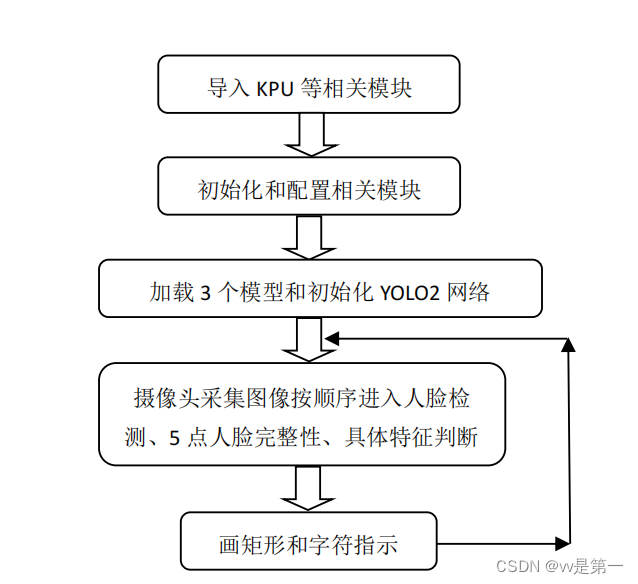

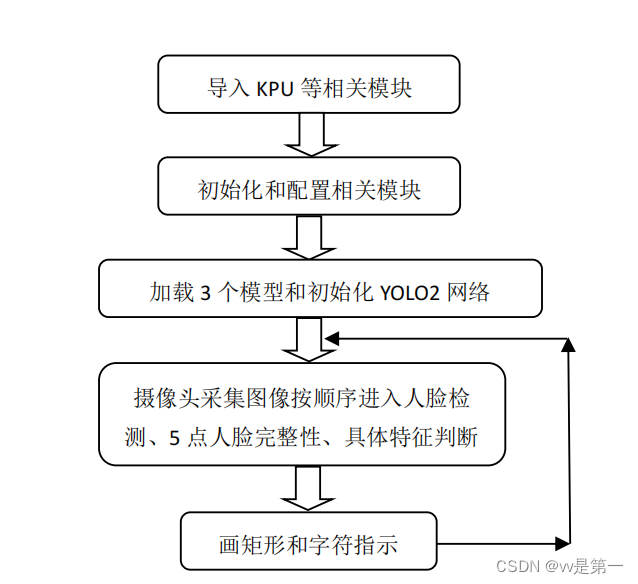

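4: Face Attributes

Explanation:

This experiment again uses the KPU + YOLO2 network. The difference from the previous experiments is that it uses three models in total: a face detection model, a 5-point facial landmark model (to confirm that the face is complete), and a face attribute model. The models run in sequence, each building on the output of the previous one.

The programming approach is as follows: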

    # Experiment: face attributes
    # Translation and comments: 01Studio

    # Import the required modules
    import sensor, image, time, lcd
    from maix import KPU
    import gc

    lcd.init()
    sensor.reset()                       # Reset and initialize the sensor. It will
                                         # run automatically, call sensor.run(0) to stop
    sensor.set_pixformat(sensor.RGB565)  # Set pixel format to RGB565 (or GRAYSCALE)
    sensor.set_framesize(sensor.QVGA)    # Set frame size to QVGA (320x240)
    sensor.skip_frames(time = 1000)      # Wait for the settings to take effect.
    sensor.set_vflip(1)                  # camera mounted rear-facing
    clock = time.clock()                 # Create a clock object to track the FPS.

    # Create the KPU objects and configure the models
    # Three models are loaded, all from the SD card root
    anchor = (0.1075, 0.126875, 0.126875, 0.175, 0.1465625, 0.2246875, 0.1953125, 0.25375, 0.2440625, 0.351875, 0.341875, 0.4721875, 0.5078125, 0.6696875, 0.8984375, 1.099687, 2.129062, 2.425937)

    # Model 1: face detection
    kpu = KPU()
    kpu.load_kmodel("/sd/face_detect_320x240.kmodel")
    kpu.init_yolo2(anchor, anchor_num=9, img_w=320, img_h=240, net_w=320 , net_h=240 ,layer_w=10 ,layer_h=8, threshold=0.5, nms_value=0.2, classes=1)

    # Model 2: 5-point facial landmarks
    ld5_kpu = KPU()
    print("ready load model")
    ld5_kpu.load_kmodel("/sd/ld5.kmodel")

    # Model 3: face attributes
    fac_kpu = KPU()
    print("ready load model")
    fac_kpu.load_kmodel("/sd/fac.kmodel")

    # Four face attributes: gender, mouth open, smiling, wearing glasses
    pos_face_attr = ["Male ", "Mouth Open ", "Smiling ", "Glasses"]
    neg_face_attr = ["Female ", "Mouth Closed", "No Smile", "No Glasses"]

    # Standard face key point positions, scaled from a 112x112 template
    FACE_PIC_SIZE = 128
    dst_point = [(int(38.2946 * FACE_PIC_SIZE / 112), int(51.6963 * FACE_PIC_SIZE / 112)),
                 (int(73.5318 * FACE_PIC_SIZE / 112), int(51.5014 * FACE_PIC_SIZE / 112)),
                 (int(56.0252 * FACE_PIC_SIZE / 112), int(71.7366 * FACE_PIC_SIZE / 112)),
                 (int(41.5493 * FACE_PIC_SIZE / 112), int(92.3655 * FACE_PIC_SIZE / 112)),
                 (int(70.7299 * FACE_PIC_SIZE / 112), int(92.2041 * FACE_PIC_SIZE / 112))]

    RATIO = 0.08

    def extend_box(x, y, w, h, scale):
        # Grow the box by `scale` on every side, clamped to the 320x240 frame
        x1_t = x - scale*w
        x2_t = x + w + scale*w
        y1_t = y - scale*h
        y2_t = y + h + scale*h
        x1 = int(x1_t) if x1_t>1 else 1
        x2 = int(x2_t) if x2_t<320 else 319
        y1 = int(y1_t) if y1_t>1 else 1
        y2 = int(y2_t) if y2_t<240 else 239
        cut_img_w = x2-x1+1
        cut_img_h = y2-y1+1
        return x1, y1, cut_img_w, cut_img_h

    while 1:
        gc.collect()
        #print("mem free:",gc.mem_free())
        clock.tick()  # Update the FPS clock.
        img = sensor.snapshot()
        kpu.run_with_output(img)
        dect = kpu.regionlayer_yolo2()
        fps = clock.fps()

        # At least one face detected
        if len(dect) > 0:
            print("dect:",dect)
            for l in dect:
                x1, y1, cut_img_w, cut_img_h = extend_box(l[0], l[1], l[2], l[3], scale=RATIO)  # enlarge the face box
                face_cut = img.cut(x1, y1, cut_img_w, cut_img_h)
                a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(0, 255, 0))
                face_cut_128 = face_cut.resize(128, 128)
                face_cut_128.pix_to_ai()

                # 5-point landmark detection confirms that the face is complete
                out = ld5_kpu.run_with_output(face_cut_128, getlist=True)
                #print("out:",len(out))
                face_key_point = []
                for j in range(5):
                    x = int(KPU.sigmoid(out[2 * j])*cut_img_w + x1)
                    y = int(KPU.sigmoid(out[2 * j + 1])*cut_img_h + y1)
                    a = img.draw_cross(x, y, size=5, color=(0, 0, 255))
                    face_key_point.append((x,y))

                # Align the face to the standard key point positions
                T = image.get_affine_transform(face_key_point, dst_point)
                a = image.warp_affine_ai(img, face_cut_128, T)
                # face_cut_128.ai_to_pix()
                # img.draw_image(face_cut_128, 0,0)
                out2 = fac_kpu.run_with_output(face_cut_128, getlist=True)
                del face_key_point

                # Evaluate the attribute scores
                if out2 is not None:
                    for i in range(4):
                        th = KPU.sigmoid(out2[i])
                        if th >= 0.7:
                            a = img.draw_string(l[0]+l[2], l[1]+i*16, "%s" %(pos_face_attr[i]), color=(255, 0, 0), scale=1.5)
                        else:
                            a = img.draw_string(l[0]+l[2], l[1]+i*16, "%s" %(neg_face_attr[i]), color=(0, 0, 255), scale=1.5)
                del (face_cut_128)
                del (face_cut)

        a = img.draw_string(0, 0, "%2.1ffps" %(fps), color=(0, 60, 255), scale=2.0)
        lcd.display(img)

    kpu.deinit()
    ld5_kpu.deinit()
    fac_kpu.deinit()
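The attribute step at the end of the loop is easy to try off-device. The sketch below reproduces it in plain Python, assuming `KPU.sigmoid` is the standard logistic function. The raw scores are made up, and `decode_attributes` is a hypothetical helper name.

```python
import math

# Labels copied from the script above (trailing padding spaces removed).
POS_FACE_ATTR = ["Male", "Mouth Open", "Smiling", "Glasses"]
NEG_FACE_ATTR = ["Female", "Mouth Closed", "No Smile", "No Glasses"]


def sigmoid(x):
    """Standard logistic function (assumed to match KPU.sigmoid)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_attributes(raw_scores, threshold=0.7):
    """Map four raw model outputs to labels using the script's 0.7 cutoff."""
    labels = []
    for i in range(4):
        if sigmoid(raw_scores[i]) >= threshold:
            labels.append(POS_FACE_ATTR[i])
        else:
            labels.append(NEG_FACE_ATTR[i])
    return labels


# Made-up raw outputs standing in for out2 from fac_kpu.run_with_output().
out2 = [2.0, -1.0, 0.5, 3.0]
print(decode_attributes(out2))
```

Note that the 0.7 cutoff is asymmetric: a raw score of 0.5 maps to a probability of about 0.62, so it still falls on the negative side ("No Smile" here).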

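Summary:

This section covered face attribute recognition. The CanMV K210 handles it easily with the KPU, YOLO2, and the models above, and the detection accuracy is high. With MicroPython programming, the whole experiment is easy to complete.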

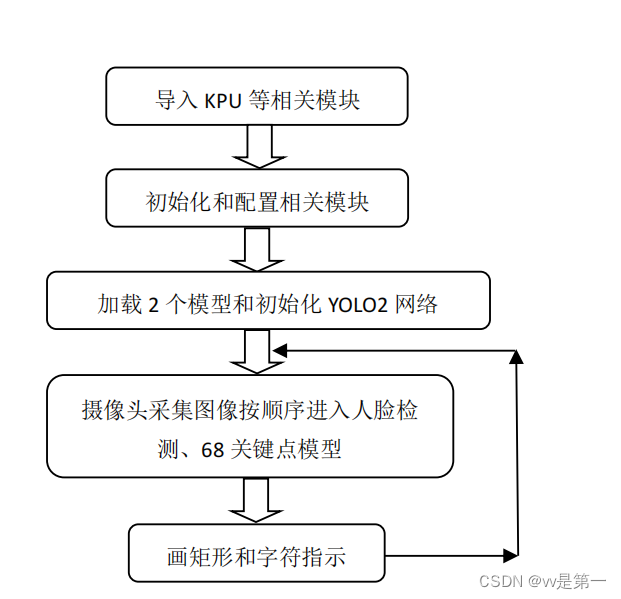

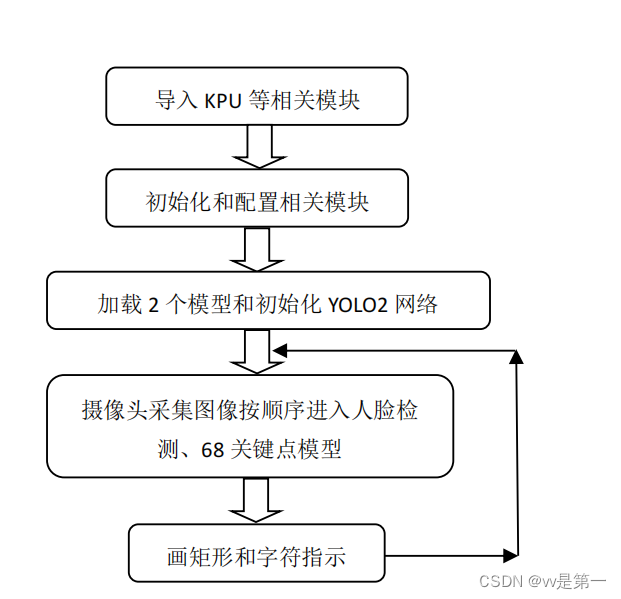

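5: 68 Facial Landmarks

Explanation:

This experiment again uses the KPU + YOLO2 network, this time with two models: a face detection model and a 68-point facial landmark model. They run in sequence, with the landmark model working on each detected face. The programming approach is as follows: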

    # Experiment: face detection with 68 landmarks
    # Translation and comments: 01Studio

    # Import the required modules
    import sensor, image, time, lcd
    from maix import KPU
    import gc

    lcd.init()
    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QVGA)
    sensor.set_vflip(True)  # camera mounted rear-facing
    sensor.skip_frames(time = 500)
    clock = time.clock()

    # Buffer that matches the network input size (320x256)
    od_img = image.Image(size=(320,256), copy_to_fb=False)

    # Create the KPU objects
    # Two models are needed: face detection and 68-point landmark detection
    anchor = (0.893, 1.463, 0.245, 0.389, 1.55, 2.58, 0.375, 0.594, 3.099, 5.038, 0.057, 0.090, 0.567, 0.904, 0.101, 0.160, 0.159, 0.255)
    kpu = KPU()
    print("ready load model")
    kpu.load_kmodel("/sd/face_detect.kmodel")
    kpu.init_yolo2(anchor, anchor_num=9, img_w=320, img_h=240, net_w=320 , net_h=256 ,layer_w=10 ,layer_h=8, threshold=0.7, nms_value=0.2, classes=1)

    lm68_kpu = KPU()
    print("ready load model")
    lm68_kpu.load_kmodel("/sd/landmark68.kmodel")

    RATIO = 0.08

    while 1:
        gc.collect()
        #print("mem free:",gc.mem_free())
        clock.tick()  # Update the FPS clock.
        img = sensor.snapshot()
        a = od_img.draw_image(img, 0,0)
        od_img.pix_to_ai()
        kpu.run_with_output(od_img)
        dect = kpu.regionlayer_yolo2()
        fps = clock.fps()

        # At least one face detected
        if len(dect) > 0:
            print("dect:",dect)
            for l in dect:
                a = img.draw_rectangle(l[0],l[1],l[2],l[3], color=(0, 255, 0))

                # Enlarge the face box by RATIO on every side
                x1_t = l[0] - RATIO*l[2]
                x2_t = l[0]+l[2] + RATIO*l[2]
                y1_t = l[1] - RATIO*l[3]
                y2_t = l[1]+l[3] + RATIO*l[3]

                # Clamp to the 320x240 frame that img.cut() operates on
                x1 = int(x1_t) if x1_t>1 else 1
                x2 = int(x2_t) if x2_t<320 else 319
                y1 = int(y1_t) if y1_t>1 else 1
                y2 = int(y2_t) if y2_t<240 else 239
                cut_img_w = x2-x1+1
                cut_img_h = y2-y1+1
                #print("cut img ",x1, y1, cut_img_w, cut_img_h)

                face_cut = img.cut(x1,y1,cut_img_w,cut_img_h)
                face_cut_128 = face_cut.resize(128, 128)
                face_cut_128.pix_to_ai()

                # Detect the 68 landmarks
                out = lm68_kpu.run_with_output(face_cut_128, getlist=True)
                if out is not None:
                    #print("out:",len(out))
                    for j in range(68):
                        x = int(KPU.sigmoid(out[2 * j])*cut_img_w + x1)
                        y = int(KPU.sigmoid(out[2 * j + 1])*cut_img_h + y1)
                        #a = img.draw_cross(x, y, size=1, color=(0, 0, 255))
                        a = img.draw_circle(x, y, 2, color=(0, 0, 255), fill=True)
                del (face_cut_128)
                del (face_cut)

        a = img.draw_string(0, 0, "%2.1ffps" %(fps), color=(0, 60, 255), scale=2.0)
        lcd.display(img)
        gc.collect()

    kpu.deinit()
    lm68_kpu.deinit()
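The inner `for j in range(68)` loop maps the landmark model's flat output back into frame coordinates. Here is that mapping as a plain-Python sketch, assuming `KPU.sigmoid` is the standard logistic function and that the output list interleaves x and y values, as the `out[2 * j]` and `out[2 * j + 1]` indexing suggests. The helper name, the all-zero output, and the crop box are made up for illustration.

```python
import math


def sigmoid(x):
    """Standard logistic function (assumed to match KPU.sigmoid)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_points(out, x1, y1, crop_w, crop_h, count=68):
    """Turn a flat [x0, y0, x1, y1, ...] output into frame coordinates.

    Each value is squashed into 0..1 by the sigmoid, scaled by the crop
    size, then shifted by the crop's top-left corner (x1, y1).
    """
    points = []
    for j in range(count):
        x = int(sigmoid(out[2 * j]) * crop_w + x1)
        y = int(sigmoid(out[2 * j + 1]) * crop_h + y1)
        points.append((x, y))
    return points


# Made-up output: all zeros, so every point lands at the crop center.
out = [0.0] * (2 * 68)
points = decode_points(out, x1=100, y1=60, crop_w=80, crop_h=90)
print(len(points), "points, first:", points[0])
```

The same function covers the 5-point model from the previous experiment by passing `count=5`.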

That wraps up this first introduction to machine vision on the K210. Bye!

Source: https://blog.csdn.net/hvbkbb/article/details/135447515

