Nodes, Blocks, Clusters, and Nutanix Services

Nodes, Blocks, and Clusters

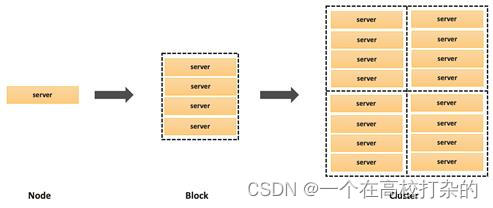

A node is an x86 server with compute and storage resources. A single cluster can have a maximum of 32 nodes for an AHV cluster and 48 nodes for an ESXi cluster. Different hardware platforms are available to address varying workload needs for compute and storage.

In a typical Nutanix cluster, a block is a chassis that holds one to four nodes, and contains power, cooling, and the backplane for the nodes. The number of nodes and drives depends on the hardware chosen for the solution.

- A Nutanix cluster is a logical grouping of physical and logical components.

- The nodes in a block can belong to the same or different clusters.

- Joining multiple nodes in a cluster allows for the pooling of resources.

- Acropolis presents storage as a single pool via the Controller VM (CVM).

- As part of the cluster creation process, all storage hardware (SSDs and HDDs) is presented as a single storage pool.
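The relationship between nodes, blocks, clusters, and the pooled storage can be sketched as a small data model. This is an illustration only: the class names, node names, and capacity figures are hypothetical, and the pool size shown is raw capacity before any replication overhead.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    ssd_tb: float  # raw SSD capacity in TB (illustrative value)
    hdd_tb: float  # raw HDD capacity in TB (illustrative value)


@dataclass
class Block:
    name: str
    nodes: list = field(default_factory=list)

    def __post_init__(self):
        # The text describes a typical block as a chassis with one to four nodes.
        if not 1 <= len(self.nodes) <= 4:
            raise ValueError("a block holds one to four nodes")


@dataclass
class Cluster:
    name: str
    nodes: list = field(default_factory=list)

    def storage_pool_tb(self) -> float:
        # All SSDs and HDDs of the member nodes are presented as one pool.
        return sum(n.ssd_tb + n.hdd_tb for n in self.nodes)


a, b, c, d = (Node(f"node-{i}", ssd_tb=2.0, hdd_tb=8.0) for i in "ABCD")
block = Block("block-1", [a, b, c, d])

# Nodes from the same block may belong to different clusters.
prod = Cluster("prod", [a, b, c])
test = Cluster("test", [d])

print(prod.storage_pool_tb())  # 30.0
print(test.storage_pool_tb())  # 10.0
```

Note that the block is purely a physical grouping here, while the cluster is the unit that owns the storage pool.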

Nutanix Cluster Components

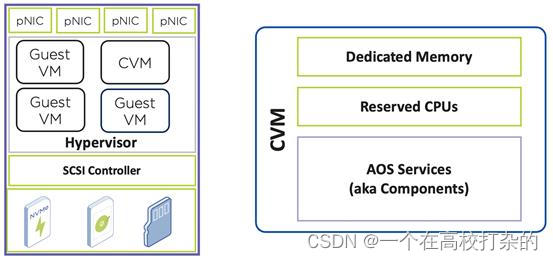

When Nutanix is installed on a server, a Controller VM (CVM) is deployed. Every CVM has dedicated memory and reserved CPUs so that it can run the services the cluster requires.

The Nutanix cluster has a distributed architecture, which means that each node in the cluster shares in the management of cluster resources and responsibilities. Within each node, software components (also known as AOS services) perform specific tasks during cluster operation.

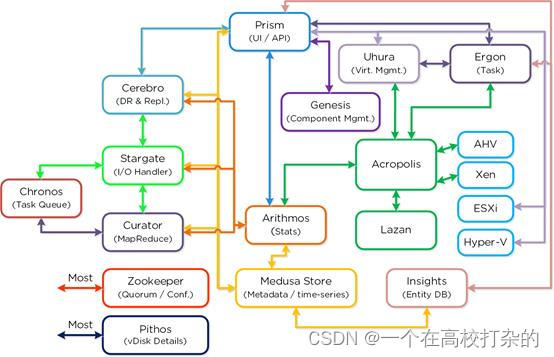

All components run on multiple nodes in the cluster and depend on connectivity with their peers, that is, the other instances of the same component. Most components also depend on other components for information.

Acropolis

An Acropolis follower runs on every CVM, and one instance is elected Acropolis leader. The Acropolis follower is responsible for stat collection and publishing, and provides VNC proxy capabilities. The Acropolis leader is responsible for stat collection and publishing, task scheduling and execution, VM placement and scheduling, the network controller, and the VNC proxy.

Genesis

Genesis is a process that runs on each node and is responsible for service interactions (start, stop, and so on) as well as for the initial configuration. It runs independently of the cluster and does not require the cluster to be configured or running. The only requirement for Genesis to run is that Zookeeper is up and running.

Zookeeper

Zookeeper stores information about all cluster components (both hardware and software), including their IP addresses, capacities, and data replication rules, in the cluster configuration.

Zookeeper is active on either three or five nodes, depending on the redundancy factor (number of data block copies) applied to the cluster. Zookeeper uses multiple nodes to prevent stale data from being returned to other components. An odd number provides a method for breaking ties if two nodes have different information.
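The arithmetic behind the odd ensemble size can be sketched as follows. The mapping of redundancy factor 2 to three nodes and redundancy factor 3 to five nodes is an assumption added for illustration (the text only says "three or five"), and the function names are hypothetical.

```python
def zookeeper_nodes(redundancy_factor: int) -> int:
    # Assumed mapping for illustration: RF2 -> 3 nodes, RF3 -> 5 nodes.
    return {2: 3, 3: 5}[redundancy_factor]


def majority(ensemble_size: int) -> int:
    # Smallest number of nodes that forms a strict majority.
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    # Nodes that can fail while a majority is still reachable.
    return ensemble_size - majority(ensemble_size)


for rf in (2, 3):
    n = zookeeper_nodes(rf)
    print(f"RF{rf}: {n} nodes, majority {majority(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")

# An even ensemble adds a node without adding fault tolerance:
print(tolerated_failures(3), tolerated_failures(4))  # 1 1
```

With an odd number of nodes, any disagreement splits into unequal groups, so one side always holds the majority and the tie is broken.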

Of these nodes, Zookeeper elects one node as the leader. The leader receives all requests for information and confers with its follower nodes. If the leader stops responding, a new leader is elected automatically.
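Automatic leader replacement can be illustrated with a deliberately simplified rule: the responsive node with the lowest name becomes leader, but only if a majority of the ensemble is still reachable. This rule and the node names are assumptions made for the sketch; the election protocol Zookeeper actually uses is more involved.

```python
def elect_leader(ensemble, responsive):
    """Return the leader under a simplified lowest-name rule, or None without a majority."""
    alive = sorted(set(ensemble) & set(responsive))
    if len(alive) < len(ensemble) // 2 + 1:
        return None  # no majority, so no leader can be elected
    return alive[0]


ensemble = ["cvm-1", "cvm-2", "cvm-3"]

print(elect_leader(ensemble, responsive=ensemble))            # cvm-1
print(elect_leader(ensemble, responsive=["cvm-2", "cvm-3"]))  # cvm-2
print(elect_leader(ensemble, responsive=["cvm-3"]))           # None
```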

Zookeeper has no dependencies, meaning that it can start without any other cluster components running.

Zeus

Zeus is an interface to access the information stored within Zookeeper and is the Nutanix library that all other components use to access the cluster configuration.

A key element of a distributed system is a method for all nodes to store and update the cluster's configuration. This configuration includes details about the physical components in the cluster, such as hosts and disks, and logical components, like storage containers.

Medusa

Distributed systems that store data for other systems (for example, a hypervisor that hosts virtual machines) must have a way to keep track of where that data is. In the case of a Nutanix cluster, it is also important to track where the replicas of that data are stored.

Medusa is a Nutanix abstraction layer that sits in front of the database that holds metadata. The database is distributed in a ring topology across multiple nodes in the cluster for resiliency, using a modified form of Apache Cassandra.
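A ring topology of this kind is commonly built on token-based placement: each node owns a position on a ring, a key is hashed onto the ring, and the next few nodes clockwise hold its replicas. The sketch below shows that general technique, not Nutanix's actual implementation; the class name, the MD5-based token, the node names, and the replica count of three are all assumptions.

```python
import bisect
import hashlib


def token(value: str) -> int:
    # Stable hash so that placement is identical on every run.
    return int(hashlib.md5(value.encode()).hexdigest(), 16)


class MetadataRing:
    def __init__(self, nodes, replicas=3):
        if replicas > len(nodes):
            raise ValueError("need at least as many nodes as replicas")
        self.replicas = replicas
        # Sort nodes by token to form the ring.
        self.ring = sorted((token(n), n) for n in nodes)
        self.tokens = [t for t, _ in self.ring]

    def owners(self, key: str):
        # First node clockwise from the key's token, wrapping around the ring.
        start = bisect.bisect_right(self.tokens, token(key)) % len(self.ring)
        return [self.ring[(start + i) % len(self.ring)][1]
                for i in range(self.replicas)]


ring = MetadataRing(["cvm-1", "cvm-2", "cvm-3", "cvm-4"], replicas=3)
print(ring.owners("vdisk-42/extent-7"))  # three distinct neighbouring nodes
```

Because replicas land on neighbouring nodes of the ring, losing one node leaves the remaining copies of each key available on its neighbours.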

Cassandra

Nutanix's implementation of Cassandra uses a version of Apache Cassandra that has been modified for high performance and automatic, on-demand scaling. Cassandra stores all metadata about the guest VM data in a Nutanix storage container.

Cassandra runs on all nodes of the cluster. The Cassandra monitor Level-2 (L2) periodically sends heartbeats to the daemon; these include information about the load, schema, and health of all the nodes in the ring. The Cassandra monitor L2 depends on Zeus and Zookeeper for this information.

Stargate

A distributed system that presents storage to other systems (such as a hypervisor) needs a unified component for receiving and processing the data sent to it. The Nutanix cluster has a software component called Stargate that manages this responsibility.

All read and write requests are sent across an internal vSwitch to the Stargate process running on the node where the request originates.

Stargate depends on Medusa to gather metadata and Zeus to gather cluster configuration data.

From the perspective of the hypervisor, Stargate is the main point of contact for the Nutanix cluster.

Curator

A Curator leader node periodically scans the metadata database and identifies cleanup and optimization tasks that Stargate should perform. Curator shares analyzed metadata across other Curator nodes.

Curator depends on Zeus to learn which nodes are available, and Medusa to gather metadata. Based on that analysis, it sends commands to Stargate.
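The dependencies described in this article can be collected into a graph and sorted so that every component appears after the components it relies on. This is a reading of the text, not an official start order: in particular, the edges "Cassandra depends on Zeus" and "Medusa depends on Cassandra" are interpretations of the descriptions above, and the variable names are hypothetical.

```python
from graphlib import TopologicalSorter

# component -> components it depends on, as read from the descriptions above
DEPENDS_ON = {
    "Zookeeper": set(),               # no dependencies
    "Genesis": {"Zookeeper"},         # only needs Zookeeper to be running
    "Zeus": {"Zookeeper"},            # interface to data stored in Zookeeper
    "Cassandra": {"Zeus"},            # monitor relies on Zeus/Zookeeper
    "Medusa": {"Cassandra"},          # abstraction layer in front of the database
    "Stargate": {"Medusa", "Zeus"},   # metadata and cluster configuration
    "Curator": {"Medusa", "Zeus"},    # metadata and node availability
}

order = list(TopologicalSorter(DEPENDS_ON).static_order())
print(order)  # Zookeeper comes first; Stargate and Curator come after Medusa and Zeus
```

`TopologicalSorter` raises `CycleError` if the graph contains a cycle, which makes it a convenient check that a dependency description is consistent.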
