Autogen4j: the Java version of Microsoft AutoGen

https://github.com/HamaWhiteGG/autogen4j

Java version of Microsoft AutoGen: Enable Next-Gen Large Language Model Applications.

1. What is AutoGen

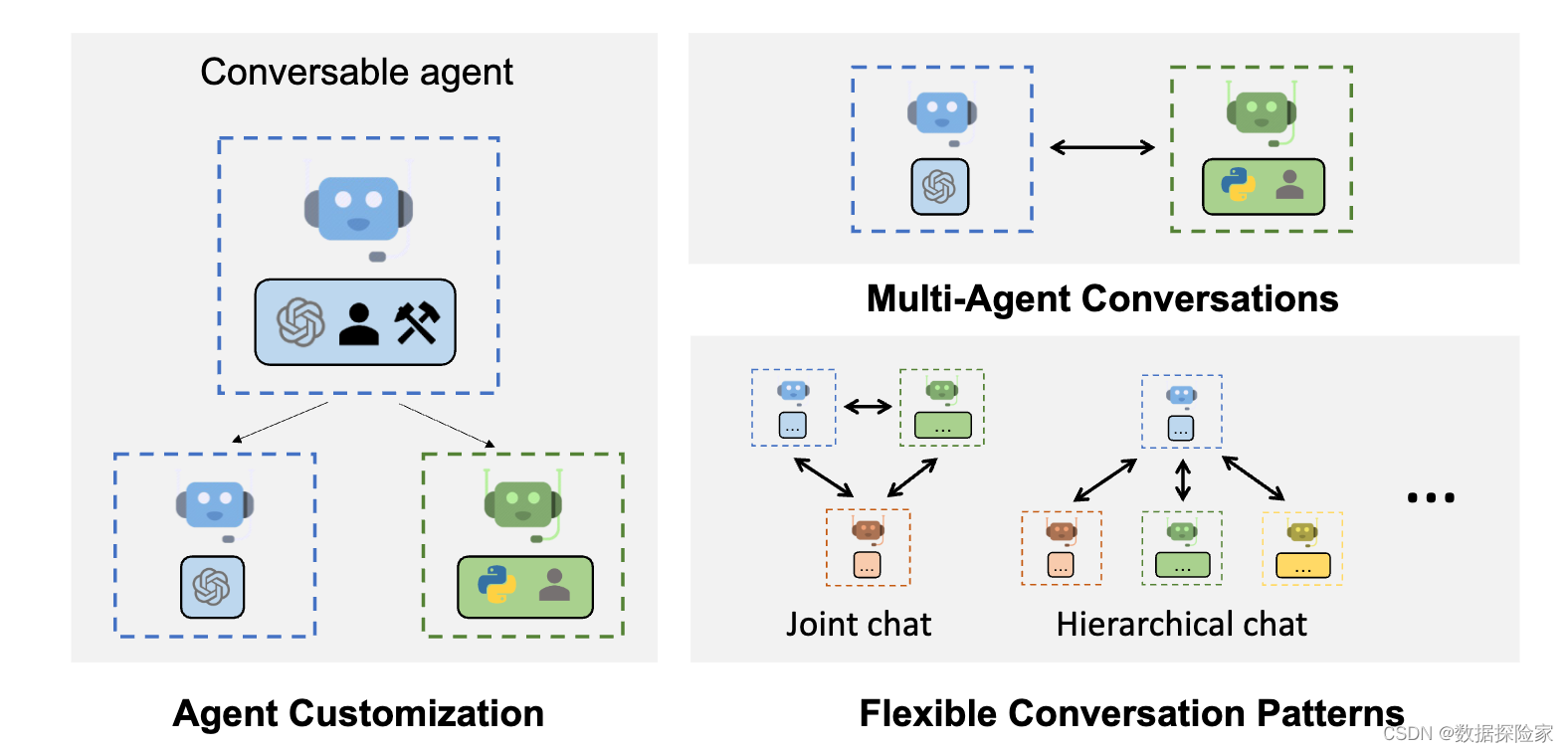

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

The examples below can be found in the autogen4j-example module.

2. Quickstart

2.1 Maven Repository

Prerequisites for building:

- Java 17 or later

- Unix-like environment (we use Linux and Mac OS X)

- Maven (we recommend version 3.8.6 and require at least 3.5.4)

Add the following dependency to your `pom.xml`:

```xml
<dependency>
    <groupId>io.github.hamawhitegg</groupId>
    <artifactId>autogen4j-core</artifactId>
    <version>0.1.0</version>
</dependency>
```

2.2 Environment Setup

Autogen4j uses OpenAI's APIs, so you need to set the `OPENAI_API_KEY` environment variable:

```shell
export OPENAI_API_KEY=xxx
```
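If the variable is missing, the problem usually surfaces only at the first API call. A minimal fail-fast check in plain Java, run before building any agents, might look like the sketch below. `ApiKeyCheck`, `readEnv`, and `requireEnv` are hypothetical names and are not part of Autogen4j.

```java
import java.util.Optional;

public class ApiKeyCheck {

    // Returns the variable's value if it is set and non-blank, otherwise empty.
    static Optional<String> readEnv(String name) {
        String value = System.getenv(name);
        return (value == null || value.isBlank()) ? Optional.empty() : Optional.of(value);
    }

    // Throws with an actionable message instead of letting a later API call fail opaquely.
    static String requireEnv(String name) {
        return readEnv(name).orElseThrow(() -> new IllegalStateException(
                name + " is not set; run: export " + name + "=..."));
    }

    public static void main(String[] args) {
        // Report only whether the key exists; never print the key itself.
        System.out.println(readEnv("OPENAI_API_KEY").isPresent()
                ? "OPENAI_API_KEY is set"
                : "OPENAI_API_KEY is missing; run: export OPENAI_API_KEY=...");
    }
}
```

In an application you would call `requireEnv("OPENAI_API_KEY")` once at startup, before the first `AssistantAgent.builder()` call.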

3. Multi-Agent Conversation Framework

AutoGen enables next-gen LLM applications with a generic multi-agent conversation framework. It offers customizable and conversable agents that integrate LLMs, tools, and humans.

By automating chat among multiple capable agents, one can easily make them collectively perform tasks autonomously or with human feedback, including tasks that require using tools via code.

Features of this use case include:

- Multi-agent conversations: AutoGen agents can communicate with each other to solve tasks. This allows for more complex and sophisticated applications than would be possible with a single LLM.

- Customization: AutoGen agents can be customized to meet the specific needs of an application. This includes the ability to choose the LLMs to use, the types of human input to allow, and the tools to employ.

- Human participation: AutoGen seamlessly allows human participation. This means that humans can provide input and feedback to the agents as needed.
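The examples in sections 3.1 and 3.2 use two human input modes, `NEVER` and `TERMINATE`. In Python AutoGen, `human_input_mode` has three settings: `ALWAYS` asks a human on every received message, `TERMINATE` asks only when a termination message arrives or the consecutive auto-reply limit is reached, and `NEVER` never asks. The sketch below encodes that decision, assuming Autogen4j's `HumanInputMode` follows the same semantics. `HumanInputPolicy` and `shouldAskHuman` are hypothetical names.

```java
public class HumanInputPolicy {

    // Assumed to mirror the three modes of Python AutoGen's human_input_mode.
    enum HumanInputMode { ALWAYS, TERMINATE, NEVER }

    /**
     * Decides whether the agent should pause and ask a human before replying.
     *
     * @param mode           the configured human input mode
     * @param isTermination  true if the received message satisfies the termination check
     * @param autoReplies    consecutive automatic replies sent so far
     * @param maxAutoReplies cap on consecutive automatic replies
     */
    static boolean shouldAskHuman(HumanInputMode mode, boolean isTermination,
                                  int autoReplies, int maxAutoReplies) {
        return switch (mode) {
            case ALWAYS -> true;
            case TERMINATE -> isTermination || autoReplies >= maxAutoReplies;
            case NEVER -> false; // the chat simply ends instead of asking anyone
        };
    }

    public static void main(String[] args) {
        System.out.println(shouldAskHuman(HumanInputMode.TERMINATE, false, 3, 10)); // false
        System.out.println(shouldAskHuman(HumanInputMode.TERMINATE, true, 3, 10));  // true
        System.out.println(shouldAskHuman(HumanInputMode.NEVER, true, 10, 10));     // false
    }
}
```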

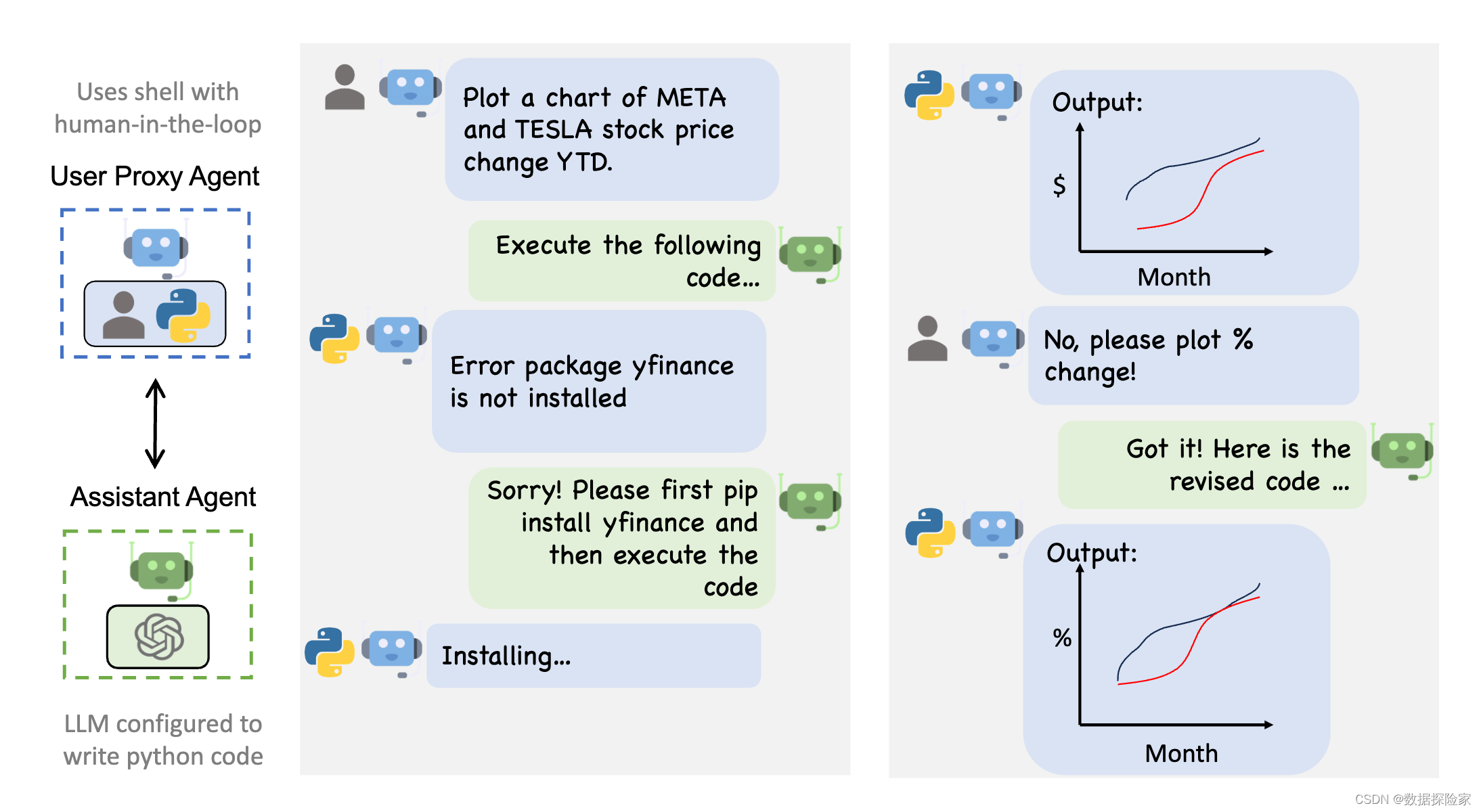

3.1 Auto Feedback From Code Execution Example


```java
// create an AssistantAgent named "assistant"
var assistant = AssistantAgent.builder()
        .name("assistant")
        .build();

// working directory in which user_proxy saves and runs the code it receives
var codeExecutionConfig = CodeExecutionConfig.builder()
        .workDir("data/coding")
        .build();

// create a UserProxyAgent instance named "user_proxy"
var userProxy = UserProxyAgent.builder()
        .name("user_proxy")
        .humanInputMode(NEVER)
        .maxConsecutiveAutoReply(10)
        .isTerminationMsg(e -> e.getContent().strip().endsWith("TERMINATE"))
        .codeExecutionConfig(codeExecutionConfig)
        .build();

// the assistant receives a message from the user_proxy, which contains the task description
userProxy.initiateChat(assistant,
        "What date is today? Compare the year-to-date gain for META and TESLA.");

// follow-up to the previous question
userProxy.send(assistant,
        "Plot a chart of their stock price change YTD and save to stock_price_ytd.png.");
```

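Two settings in the example above decide when this exchange stops: `isTerminationMsg` ends the chat once a reply ends with `TERMINATE`, and `maxConsecutiveAutoReply(10)` caps how many automatic replies `user_proxy` sends in a row. The sketch below simulates that loop with a scripted assistant instead of an LLM and a placeholder string instead of real code execution. `AutoReplyLoop`, `Message`, and `run` are hypothetical; they only mimic the builder options, assuming these behave like their Python AutoGen counterparts.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Predicate;

public class AutoReplyLoop {

    // Hypothetical minimal message type; getContent() mirrors the accessor used in the example.
    record Message(String sender, String content) {
        String getContent() {
            return content;
        }
    }

    /**
     * Simulates the user_proxy side with humanInputMode NEVER: after each assistant reply,
     * stop if the termination check matches or the auto-reply budget is spent; otherwise
     * send back an automatic reply (a placeholder for real code-execution output).
     */
    static List<Message> run(Iterator<String> assistantReplies,
                             Predicate<Message> isTerminationMsg,
                             int maxConsecutiveAutoReply) {
        List<Message> history = new ArrayList<>();
        int autoReplies = 0;
        while (assistantReplies.hasNext()) {
            Message reply = new Message("assistant", assistantReplies.next());
            history.add(reply);
            if (isTerminationMsg.test(reply) || autoReplies >= maxConsecutiveAutoReply) {
                break;
            }
            history.add(new Message("user_proxy", "exitcode: 0 (simulated execution result)"));
            autoReplies++;
        }
        return history;
    }

    public static void main(String[] args) {
        Predicate<Message> endsWithTerminate = m -> m.getContent().strip().endsWith("TERMINATE");
        List<String> script = List.of(
                "Here is code to fetch the prices.",
                "Here is code to plot the chart.",
                "The chart is saved. TERMINATE");

        // Budget of 10: the chat ends on the TERMINATE message (3 assistant + 2 proxy messages).
        run(script.iterator(), endsWithTerminate, 10)
                .forEach(m -> System.out.println(m.sender() + ": " + m.getContent()));

        // Budget of 1: the proxy stops after one automatic reply, before TERMINATE arrives.
        System.out.println(run(script.iterator(), endsWithTerminate, 1).size()); // 3
    }
}
```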
The figure below shows an example conversation flow with Autogen4j.

After running, you can check the file coding_output.log for the output logs.

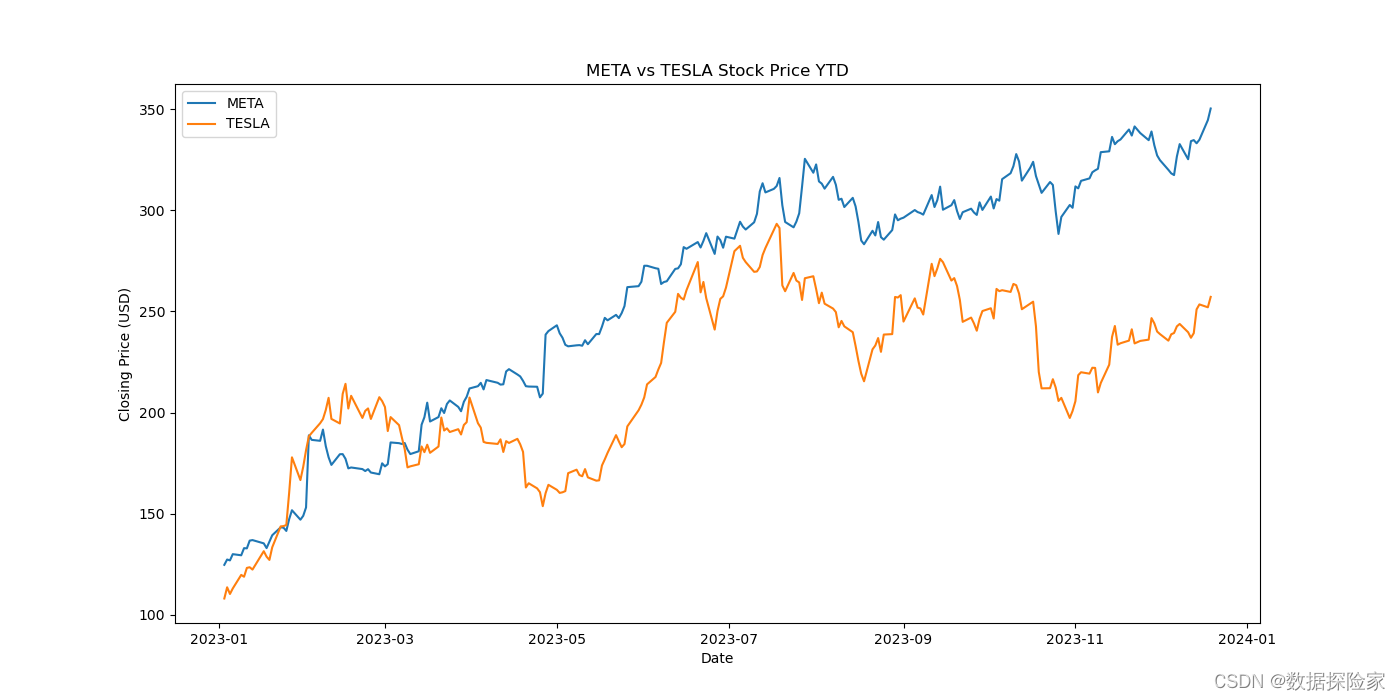

The final output is shown in the following picture.

3.2 Group Chat Example

```java
var codeExecutionConfig = CodeExecutionConfig.builder()
        .workDir("data/group_chat")
        .lastMessagesNumber(2)
        .build();

// create a UserProxyAgent instance named "user_proxy"
var userProxy = UserProxyAgent.builder()
        .name("user_proxy")
        .systemMessage("A human admin.")
        .humanInputMode(TERMINATE)
        .codeExecutionConfig(codeExecutionConfig)
        .build();

// create an AssistantAgent named "coder"
var coder = AssistantAgent.builder()
        .name("coder")
        .build();

// create an AssistantAgent named "product_manager"
var pm = AssistantAgent.builder()
        .name("product_manager")
        .systemMessage("Creative in software product ideas.")
        .build();

// put the three agents in one group chat, capped at 12 rounds
var groupChat = GroupChat.builder()
        .agents(List.of(userProxy, coder, pm))
        .maxRound(12)
        .build();

// create a GroupChatManager that coordinates the group chat
var manager = GroupChatManager.builder()
        .groupChat(groupChat)
        .build();

userProxy.initiateChat(manager,
        "Find a latest paper about gpt-4 on arxiv and find its potential applications in software.");
```

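In the example above, `GroupChat` limits the conversation with `maxRound(12)` and `GroupChatManager` decides who speaks next. Python AutoGen's manager normally asks the LLM to pick the next speaker; the sketch below swaps in plain round-robin selection so the control flow is visible without a model. It is an illustration under that simplification, not Autogen4j's actual selection logic. Treating `maxRound` as a cap on transcript length including the opening task is also an assumption, and `RoundRobinGroupChat`, `Agent`, and `run` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

public class RoundRobinGroupChat {

    // Hypothetical agent stand-in: a name plus a function from the transcript so far to its next message.
    record Agent(String name, Function<List<String>, String> replier) {}

    /**
     * Posts the task as the initiator, then lets the agents speak in a fixed cycle
     * (starting with the agent after the initiator) until maxRound messages exist
     * or a message contains TERMINATE.
     */
    static List<String> run(List<Agent> agents, String initiator, String task, int maxRound) {
        List<String> transcript = new ArrayList<>();
        transcript.add(initiator + ": " + task);

        // Position of the initiator; -1 (not found) makes the first agent speak next.
        int index = -1;
        for (int i = 0; i < agents.size(); i++) {
            if (agents.get(i).name().equals(initiator)) {
                index = i;
            }
        }

        while (transcript.size() < maxRound) {
            index = (index + 1) % agents.size();
            Agent speaker = agents.get(index);
            String message = speaker.replier().apply(transcript);
            transcript.add(speaker.name() + ": " + message);
            if (message.contains("TERMINATE")) {
                break;
            }
        }
        return transcript;
    }

    public static void main(String[] args) {
        List<Agent> agents = List.of(
                new Agent("user_proxy", t -> "Looks good, continue."),
                new Agent("coder", t -> "Here is code that queries arxiv."),
                new Agent("product_manager", t -> "Possible applications: code review, test generation."));
        run(agents, "user_proxy", "Find a paper about gpt-4 on arxiv.", 5).forEach(System.out::println);
    }
}
```

Replacing the modulo step with a call that asks a model to choose among `agents` would turn this into LLM-driven selection without changing the rest of the loop.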
After running, you can check the file group_chat_output.log for the output logs.
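The group chat's `CodeExecutionConfig` sets `lastMessagesNumber(2)`. In Python AutoGen, the analogous `last_n_messages` option tells the code-executing agent how many of the most recent messages to scan for code blocks, which matters in a group chat because the coder's message may no longer be the newest one. Assuming Autogen4j's option works the same way, the look-back can be sketched as follows; `RecentCodeFinder`, its methods, and the simplified fence regex are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class RecentCodeFinder {

    // Built with repeat() so the snippet itself contains no literal triple backticks.
    private static final String FENCE = "`".repeat(3);

    // FENCE, optional language tag, newline, then the shortest body up to the next FENCE.
    // DOTALL lets '.' match newlines; the reluctant '*?' stops at the first closing fence.
    private static final Pattern CODE_BLOCK =
            Pattern.compile(FENCE + "(\\w*)\\n(.*?)" + FENCE, Pattern.DOTALL);

    record CodeBlock(String language, String code) {}

    static List<CodeBlock> extract(String message) {
        List<CodeBlock> blocks = new ArrayList<>();
        Matcher m = CODE_BLOCK.matcher(message);
        while (m.find()) {
            blocks.add(new CodeBlock(m.group(1), m.group(2)));
        }
        return blocks;
    }

    /**
     * Scans at most the last lastN messages, newest first, and returns the code blocks
     * of the first message that contains any; an empty list means there is nothing to run.
     */
    static List<CodeBlock> findCodeToRun(List<String> history, int lastN) {
        int stop = Math.max(0, history.size() - lastN);
        for (int i = history.size() - 1; i >= stop; i--) {
            List<CodeBlock> blocks = extract(history.get(i));
            if (!blocks.isEmpty()) {
                return blocks;
            }
        }
        return List.of();
    }

    public static void main(String[] args) {
        List<String> history = List.of(
                "user_proxy: (task description)",
                "coder: Run this:\n" + FENCE + "python\nprint('searching arxiv')\n" + FENCE,
                "product_manager: Nice, let's also list applications.");
        System.out.println(findCodeToRun(history, 1).size()); // 0: the newest message has no code
        System.out.println(findCodeToRun(history, 2).size()); // 1: the coder's block is within reach
    }
}
```

With a look-back of 1 the product manager's reply hides the coder's code; with 2 the code block is found and would be executed.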

4. Run Test Cases from Source

```shell
git clone https://github.com/HamaWhiteGG/autogen4j.git
cd autogen4j

# export JAVA_HOME=JDK17_INSTALL_HOME && mvn clean test
mvn clean test
```

This project uses Spotless to format the code.

If you make any modifications, please remember to format the code using the following command.

```shell
# export JAVA_HOME=JDK17_INSTALL_HOME && mvn spotless:apply
mvn spotless:apply
```

5. Support

Don’t hesitate to ask!

Open an issue if you find a bug or need any help.
