Training Neural Networks on a GPU

Published: January 12, 2024

Method 1

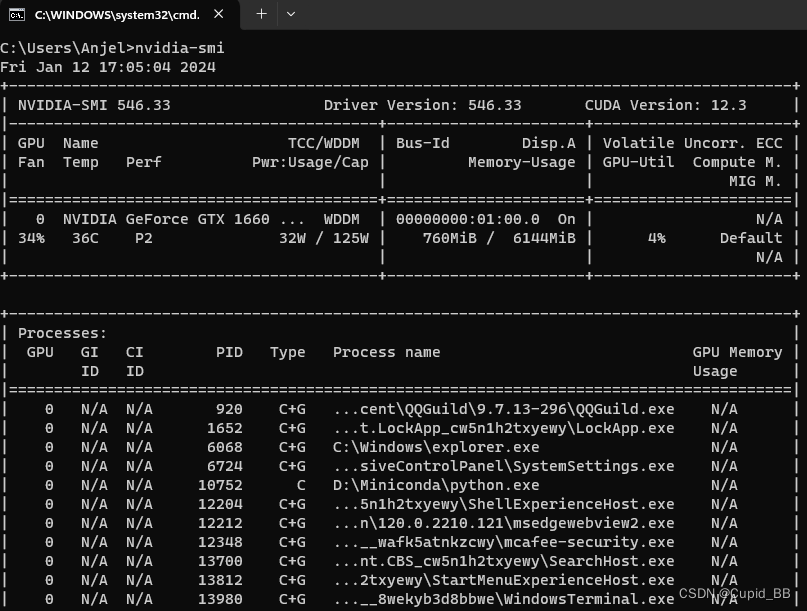

First, check the GPU status:

nvidia-smi
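The same check can be made from inside Python. This is a minimal sketch, assuming PyTorch is installed; `describe_gpus` is a hypothetical helper name that does not appear in the original post.

```python
import torch


def describe_gpus():
    """Return a list of (index, name) pairs for each CUDA device PyTorch can see."""
    if not torch.cuda.is_available():
        return []
    return [
        (i, torch.cuda.get_device_name(i))
        for i in range(torch.cuda.device_count())
    ]


gpus = describe_gpus()
if gpus:
    for index, name in gpus:
        print(f"GPU {index}: {name}")
else:
    print("No CUDA device visible to PyTorch; training will run on the CPU.")
```

An empty list here usually means either no NVIDIA GPU is present or the installed PyTorch build has no CUDA support.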

Training on the GPU is indeed much faster.

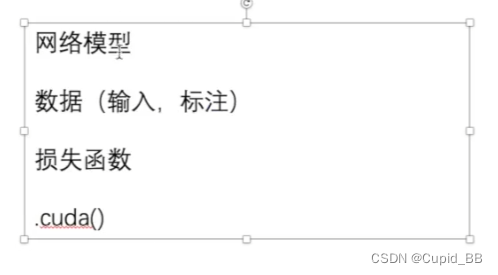

Call the cuda() method on the network model

tudui = Tudui()

tudui = tudui.cuda()

Loss function

loss_fn = nn.CrossEntropyLoss()

loss_fn = loss_fn.cuda()

Training

imgs, targets = data

imgs = imgs.cuda()

targets = targets.cuda()

Testing

imgs, targets = data

imgs = imgs.cuda()

targets = targets.cuda()

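If you are not sure whether a GPU is available, the following approach is better.

Check first, and call cuda() only when a GPU is present. That way the code runs on either the CPU or the GPU, and it prefers the GPU.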

tudui = Tudui()

if torch.cuda.is_available():
    tudui = tudui.cuda()
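The same guard applies to the loss function and to every batch. Below is a minimal sketch of the full pattern. The `nn.Sequential` model is only a stand-in for `Tudui` (its layer sizes are assumptions), and the random tensors stand in for a batch from a DataLoader.

```python
import torch
from torch import nn

# Stand-in for the post's Tudui network (hypothetical layer sizes).
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
loss_fn = nn.CrossEntropyLoss()

use_cuda = torch.cuda.is_available()
if use_cuda:
    model = model.cuda()
    loss_fn = loss_fn.cuda()

# Fake batch standing in for `imgs, targets = data`.
imgs = torch.randn(4, 3, 32, 32)
targets = torch.randint(0, 10, (4,))
if use_cuda:
    imgs = imgs.cuda()
    targets = targets.cuda()

outputs = model(imgs)
loss = loss_fn(outputs, targets)
print(outputs.shape, loss.item())
```

Every object that takes part in the forward pass has to sit on the same device; guarding only the model would fail on a GPU machine as soon as a CPU batch reached it.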

Record the elapsed time for comparison

import time

start_time = time.time()

end_time = time.time()

print(end_time - start_time)
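One caveat when timing GPU code: CUDA kernels are launched asynchronously, so the clock can be read before the GPU has finished its work. The sketch below calls `torch.cuda.synchronize()` before each clock read. The helper name `timed` and the matrix-multiply workload are illustrative assumptions, and `time.perf_counter()` is swapped in for `time.time()` because it is better suited to measuring short intervals.

```python
import time

import torch


def timed(fn, device):
    """Run fn() once and return (result, elapsed seconds)."""
    if device.type == "cuda":
        torch.cuda.synchronize()  # finish pending GPU work before starting the clock
    start_time = time.perf_counter()
    result = fn()
    if device.type == "cuda":
        torch.cuda.synchronize()  # wait for fn's kernels to finish
    end_time = time.perf_counter()
    return result, end_time - start_time


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
a = torch.randn(512, 512, device=device)
product, elapsed = timed(lambda: a @ a, device)
print(f"{device.type}: {elapsed:.6f} s")
```

The first CUDA call in a process also pays one-time initialization costs, so a warm-up run before measuring gives a fairer comparison.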

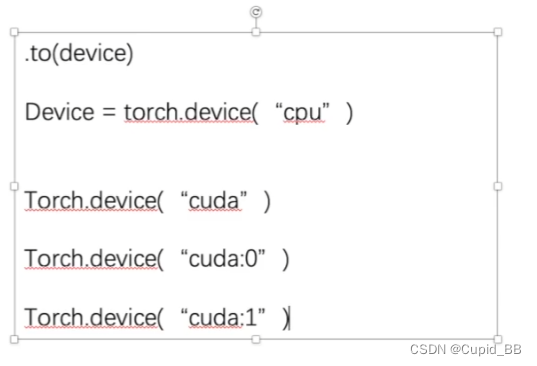

Method 2

Define the training device

device = torch.device("cpu")

#device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

Modify the relevant parts of the code

tudui = Tudui()

tudui = tudui.to(device)

loss_fn = loss_fn.to(device)

# Training and testing

imgs = imgs.to(device)

targets = targets.to(device)
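Put together, the device-based version of one training step and one test step might look like the sketch below. The model is a stand-in for `Tudui` with assumed layer sizes, the batch is random data, and the SGD optimizer and learning rate are illustrative choices rather than values from the original post.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Stand-in for the post's Tudui network (hypothetical layer sizes).
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10)).to(device)
loss_fn = nn.CrossEntropyLoss().to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# Fake batch standing in for `imgs, targets = data`.
imgs = torch.randn(8, 3, 32, 32).to(device)
targets = torch.randint(0, 10, (8,)).to(device)

# Training step
model.train()
optimizer.zero_grad()
loss = loss_fn(model(imgs), targets)
loss.backward()
optimizer.step()

# Test step: gradients are not needed
model.eval()
with torch.no_grad():
    outputs = model(imgs)
    accuracy = (outputs.argmax(1) == targets).float().mean().item()

print(f"loss={loss.item():.4f} accuracy={accuracy:.2f} device={device}")
```

Because only the `device` line mentions the hardware, switching between CPU and GPU means changing a single line.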

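In fact, the model and the loss function do not need to be reassigned, because calling to() on an nn.Module moves its parameters in place. The tensors in the training and testing code do need reassignment, because calling to() on a tensor returns a new tensor instead of modifying the original.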

tudui = Tudui()

tudui.to(device)

loss_fn.to(device)

# Training and testing

imgs = imgs.to(device)

targets = targets.to(device)
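The difference between the two `to()` calls can be checked directly. This is a small sketch; the `nn.Linear` layer and the zero tensor are arbitrary examples, not part of the original code.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# nn.Module.to() moves the parameters in place and returns the same object.
layer = nn.Linear(4, 2)
returned = layer.to(device)
print(returned is layer)

# Tensor.to() does not modify the tensor it is called on.
x = torch.zeros(3, 4)
x.to(device)            # result discarded, so x stays where it was
moved = x.to(device)    # keep the returned tensor instead
print(x.device.type, moved.device.type)
```

On a CPU-only machine both tensors report `cpu`, since no move is needed; on a GPU machine `x` stays on the CPU and only `moved` is on the GPU.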

Source: https://blog.csdn.net/weixin_51788042/article/details/135557633

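This article was submitted by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage services, holds no ownership of the content, and assumes no related legal liability. If the content involves infringement, violations of laws or regulations, or factual inaccuracies, please send a complaint to the 我的编程经验分享网 mailbox at chenni525@qq.com; once verified, the content will be removed immediately.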
