C# Open Vocabulary Object Detection: Deploying Open-Domain Object Detection


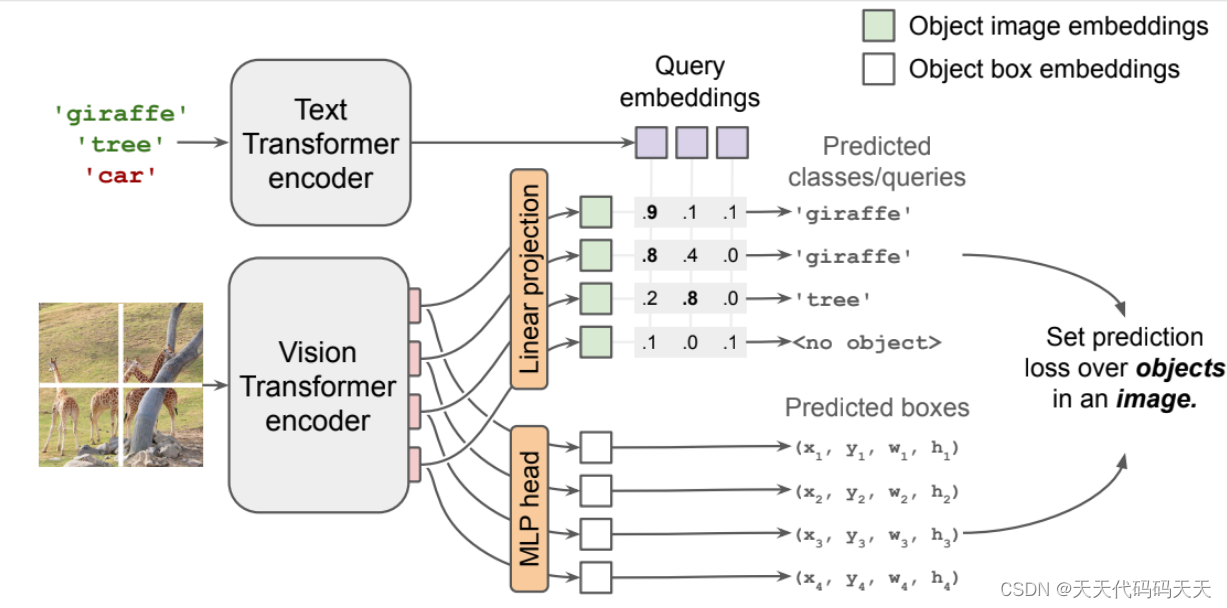

Introduction

Training source code: https://github.com/google-research/scenic/tree/main/scenic/projects/owl_vit

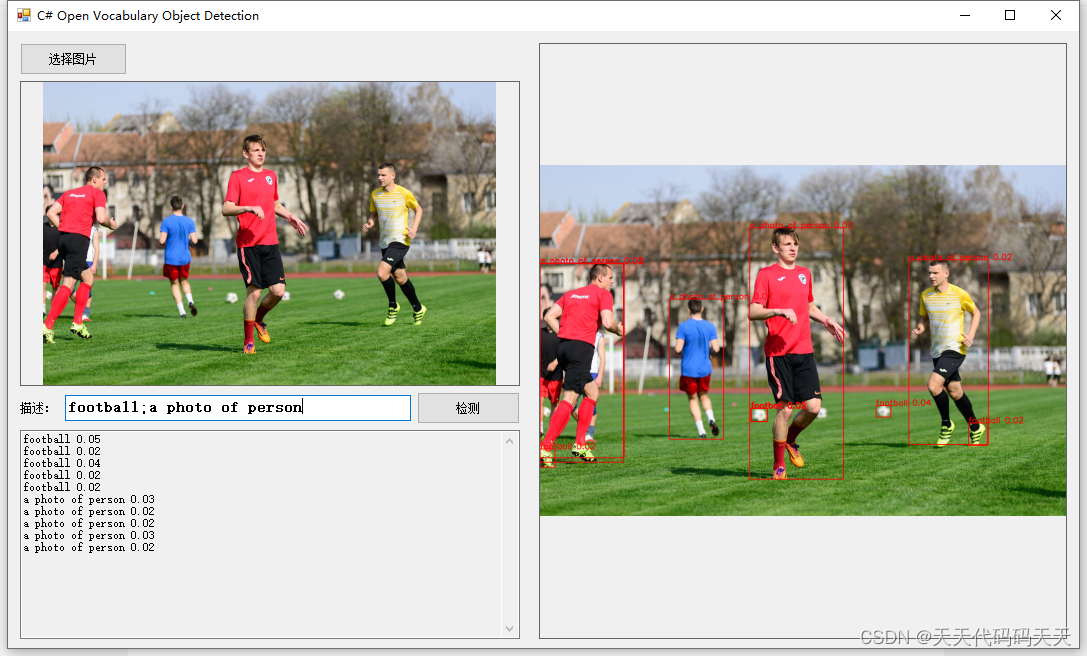

Results

Model information

owlvit-image.onnx

| Direction | Name | Type and shape |
|---|---|---|
| Input | pixel_values | Float[1, 3, 768, 768] |
| Output | image_embeds | Float[1, 24, 24, 768] |
| Output | pred_boxes | Float[1, 576, 4] |
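`pixel_values` expects a planar RGB float tensor. In the project this comes from `Cv2.Resize` to 768 × 768, `normalize_` (BGR to RGB, then `ConvertTo` with scale `1 / (255 * std[c])` and offset `-mean[c] / std[c]`) and `CvDnn.BlobFromImage` (HWC to NCHW). The dependency-free sketch below, a hypothetical `Preprocess` class that is not part of the project, spells out that arithmetic, `(x / 255 - mean[c]) / std[c]`, for an image that is assumed to be already resized:

```csharp
using System;

// Hypothetical helper, not part of the original project. It mirrors what
// normalize_ + CvDnn.BlobFromImage produce for an already-resized image:
// interleaved 8-bit BGR (HWC) in, planar normalized RGB floats (CHW) out.
public static class Preprocess
{
    // Same CLIP constants as OWLVIT, in RGB order
    static readonly float[] Mean = { 0.48145466f, 0.4578275f, 0.40821073f };
    static readonly float[] Std = { 0.26862954f, 0.26130258f, 0.27577711f };

    public static float[] BgrHwcToNormalizedRgbChw(byte[] bgr, int height, int width)
    {
        if (bgr.Length != height * width * 3)
            throw new ArgumentException("expected height * width * 3 bytes");

        int plane = height * width;
        var chw = new float[3 * plane];
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                int src = (y * width + x) * 3;
                for (int c = 0; c < 3; c++)          // c: 0 = R, 1 = G, 2 = B
                {
                    byte v = bgr[src + (2 - c)];     // BGR -> RGB channel swap
                    chw[c * plane + y * width + x] = (v / 255f - Mean[c]) / Std[c];
                }
            }
        }
        return chw;
    }
}
```

For a single pure-red pixel, `Preprocess.BgrHwcToNormalizedRgbChw(new byte[] { 0, 0, 255 }, 1, 1)` gives roughly `1.930` for R and negative values for G and B. Folding the division into `ConvertTo`'s scale and offset, as the original does, computes the same affine map in one pass per channel.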

owlvit-post.onnx

| Direction | Name | Type and shape |
|---|---|---|
| Input | image_embeds | Float[1, 24, 24, 768] |
| Input | /owlvit/Div_output_0 | Float[1, 512] |
| Input | input_ids | Int64[1, 16] |
| Output | logits | Float[-1, 576, 1] (first dimension is dynamic) |
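`owlvit-post.onnx` returns one logit per predicted box for a single text query, so logit `j` scores row `j` of `pred_boxes` (576 = 24 × 24, matching the `image_embeds` grid). Each row is a normalized `(cx, cy, w, h)` box. The project converts it in two steps: to 768 × 768 pixels in `encode_image`, then by `ratiow`/`ratioh` in `detect`. Mathematically the 768 factors cancel, so a hypothetical helper like the one below (not part of the project) can map a row straight to clamped pixel corners of the original image; float rounding may differ from the two-step version by a pixel.

```csharp
using System;

// Hypothetical helper, not part of the original project: converts one row of
// pred_boxes (normalized cx, cy, w, h) into pixel corners of the original image,
// with the same out-of-bounds clamping as OWLVIT.detect().
public static class BoxDecode
{
    public static (int xmin, int ymin, int xmax, int ymax) ToPixelCorners(
        float cx, float cy, float w, float h, int imgWidth, int imgHeight)
    {
        int xmin = (int)((cx - 0.5f * w) * imgWidth);
        int ymin = (int)((cy - 0.5f * h) * imgHeight);
        int xmax = (int)((cx + 0.5f * w) * imgWidth);
        int ymax = (int)((cy + 0.5f * h) * imgHeight);

        // Keep every corner inside [0, size - 1]
        xmin = Math.Max(Math.Min(xmin, imgWidth - 1), 0);
        ymin = Math.Max(Math.Min(ymin, imgHeight - 1), 0);
        xmax = Math.Max(Math.Min(xmax, imgWidth - 1), 0);
        ymax = Math.Max(Math.Min(ymax, imgHeight - 1), 0);
        return (xmin, ymin, xmax, ymax);
    }
}
```

For example, a centred box covering half the width and height of a 1280 × 720 image, `BoxDecode.ToPixelCorners(0.5f, 0.5f, 0.5f, 0.5f, 1280, 720)`, returns `(320, 180, 960, 540)`.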

owlvit-text.onnx

| Direction | Name | Type and shape |
|---|---|---|
| Input | input_ids | Int64[1, 16] |
| Input | attention_mask | Int64[1, 16] |
| Output | text_embeds | Float[1, 1, 512] |
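Both text-encoder inputs have a fixed length of 16. In `encode_texts`, the token ids of each query are copied into a 16-element array, zero-padded, and `attention_mask` marks real tokens with 1 and padding with 0; anything past 16 ids is silently dropped. The sketch below isolates that step as a hypothetical `TextInputs.PadTokens` helper (not part of the project); the token ids themselves are assumed to come from the project's `TokenizerClip`, which the article does not show.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the original project: builds the fixed-length
// input_ids / attention_mask pair that owlvit-text.onnx expects.
public static class TextInputs
{
    public const int MaxLen = 16;

    public static (long[] inputIds, long[] attentionMask) PadTokens(IReadOnlyList<int> tokens)
    {
        var ids = new long[MaxLen];   // zero-initialised, so padding ids are 0
        var mask = new long[MaxLen];  // zero-initialised, so padding is masked out
        int n = Math.Min(tokens.Count, MaxLen);   // truncate long queries
        for (int j = 0; j < n; j++)
        {
            ids[j] = tokens[j];
            mask[j] = 1;
        }
        return (ids, mask);
    }
}
```

If the tokenizer appends an end-of-text token, plain truncation like this (and like the original loop) cuts it off for long queries, so short queries such as "a cat" are the safer input.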

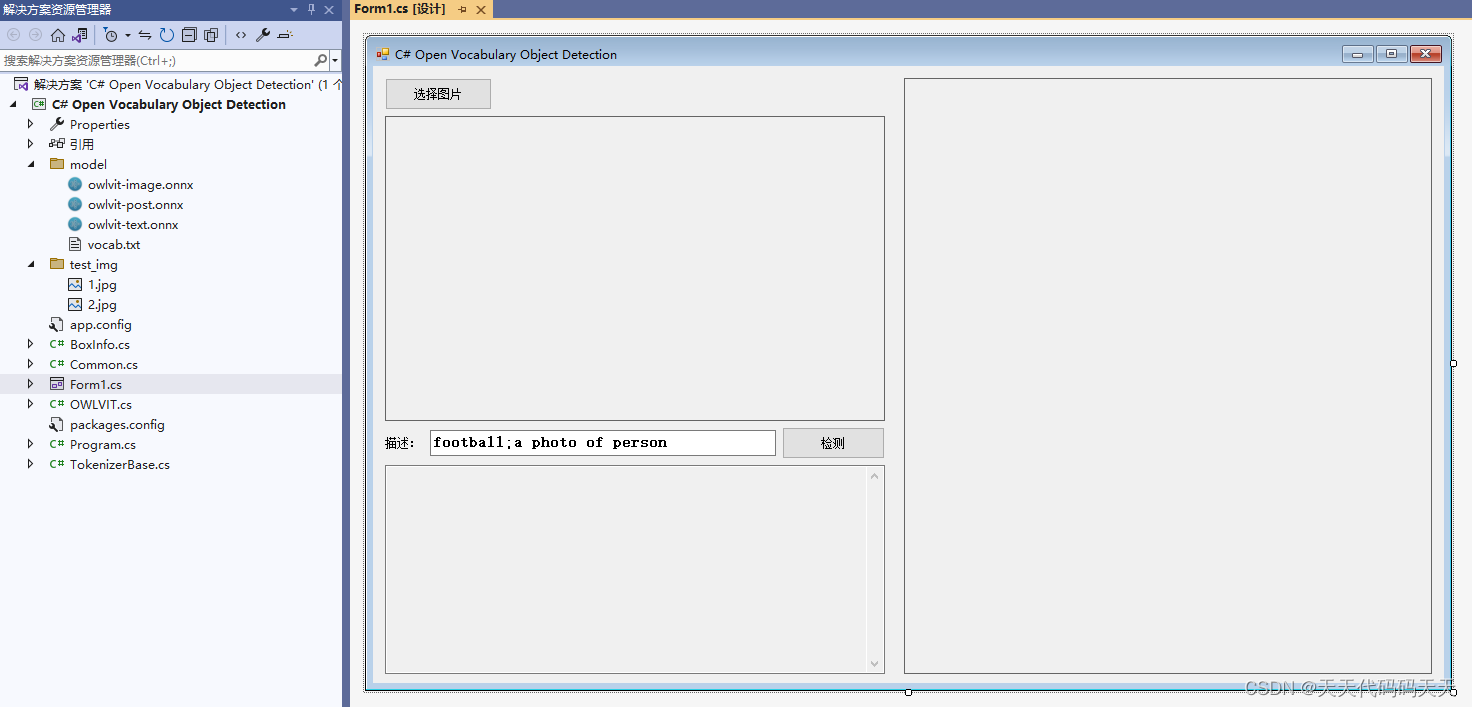

Project

Code

Form1.cs


```csharp
using OpenCvSharp;
using System;
using System.Collections.Generic;
using System.Drawing;
using System.Linq;
using System.Text;
using System.Windows.Forms;

namespace Onnx_Demo
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        OWLVIT owlvit = new OWLVIT("model/owlvit-image.onnx", "model/owlvit-text.onnx", "model/owlvit-post.onnx", "model/vocab.txt");

        string image_path = "";
        string fileFilter = "*.*|*.bmp;*.jpg;*.jpeg;*.tif;*.tiff;*.png";
        StringBuilder sb = new StringBuilder();
        Mat image;
        Mat result_image;

        // "Open image" button: load a new image and encode it
        private void button2_Click(object sender, EventArgs e)
        {
            OpenFileDialog ofd = new OpenFileDialog();
            ofd.Filter = fileFilter;
            if (ofd.ShowDialog() != DialogResult.OK) return;

            pictureBox1.Image = null;
            pictureBox2.Image = null;
            txtInfo.Text = "";

            image_path = ofd.FileName;
            pictureBox2.Image = new Bitmap(image_path);
            image = new Mat(image_path);
            // Fixed: re-encode the new image; otherwise detect() would reuse
            // the features and boxes of the previously loaded image
            owlvit.encode_image(image);
        }

        // "Detect" button: encode the ';'-separated queries and run detection
        private void button3_Click(object sender, EventArgs e)
        {
            if (image_path == "") return;
            if (String.IsNullOrEmpty(txt_input_text.Text)) return;

            txtInfo.Text = "Detecting, please wait...";
            button3.Enabled = false;
            // Fixed: dispose the previous result bitmap before clearing it
            // (the original set the image to null first, so Dispose never ran)
            if (pictureBox1.Image != null)
            {
                pictureBox1.Image.Dispose();
                pictureBox1.Image = null;
            }
            Application.DoEvents();

            List<string> texts = txt_input_text.Text.Split(new char[] { ';' }, StringSplitOptions.RemoveEmptyEntries).ToList();
            owlvit.encode_texts(texts);
            List<BoxInfo> objects = owlvit.detect(image, texts);

            // Draw each box with its query text and score
            result_image = image.Clone();
            sb.Clear();
            for (int i = 0; i < objects.Count; i++)
            {
                string label = objects[i].text + " " + objects[i].prob.ToString("F2");
                Cv2.Rectangle(result_image, objects[i].box, new Scalar(0, 0, 255), 2);
                Cv2.PutText(result_image, label, new OpenCvSharp.Point(objects[i].box.X, objects[i].box.Y),
                    HersheyFonts.HersheySimplex, 1, new Scalar(0, 0, 255), 2);
                sb.AppendLine(label);
            }

            pictureBox1.Image = new Bitmap(result_image.ToMemoryStream());
            button3.Enabled = true;
            txtInfo.Text = sb.ToString();
        }

        // Load and encode a default test image at startup
        private void Form1_Load(object sender, EventArgs e)
        {
            image_path = "test_img/2.jpg";
            pictureBox2.Image = new Bitmap(image_path);
            image = new Mat(image_path);
            owlvit.encode_image(image);
        }
    }
}
```
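`button3_Click` splits the query box on `;` with `RemoveEmptyEntries`, but it does not trim, so an input like `cat; dog` yields the label `" dog"`, and a repeated query is decoded twice. A hypothetical parser such as the one below (not part of the project) could replace that one-liner; trimming and case-insensitive de-duplication are assumptions about what users of the demo would want.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the original project: splits the query text
// on ';', trims each entry, drops empty entries and case-insensitive duplicates,
// and keeps the first-seen order.
public static class QueryParser
{
    public static List<string> Parse(string input)
    {
        var result = new List<string>();
        if (input == null) return result;

        var seen = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
        foreach (string part in input.Split(';'))
        {
            string q = part.Trim();
            if (q.Length > 0 && seen.Add(q))   // Add returns false for duplicates
                result.Add(q);
        }
        return result;
    }
}
```

With this helper, `QueryParser.Parse(" cat; dog ;;Cat; ")` returns `["cat", "dog"]`, so each distinct query costs exactly one run of `owlvit-post.onnx`.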

OWLVIT.cs

```csharp
using Microsoft.ML.OnnxRuntime;
using Microsoft.ML.OnnxRuntime.Tensors;
using OpenCvSharp;
using OpenCvSharp.Dnn;
using System;
using System.Collections.Generic;
using System.Linq;

namespace Onnx_Demo
{
    public class OWLVIT
    {
        float bbox_threshold = 0.02f;   // minimum sigmoid score for a candidate box
        float nmsThreshold = 0.5f;      // IoU threshold for non-maximum suppression
        int inpWidth = 768;
        int inpHeight = 768;

        // CLIP normalization constants, in RGB order
        float[] mean = new float[] { 0.48145466f, 0.4578275f, 0.40821073f };
        float[] std = new float[] { 0.26862954f, 0.26130258f, 0.27577711f };

        Net net;                                    // owlvit-image.onnx, run with OpenCV DNN
        SessionOptions options;
        InferenceSession onnx_session;              // owlvit-text.onnx, run with ONNX Runtime
        SessionOptions options_transformer;
        InferenceSession onnx_session_transformer;  // owlvit-post.onnx, run with ONNX Runtime
        List<NamedOnnxValue> input_container = new List<NamedOnnxValue>();
        TokenizerBase tokenizer;

        float[] image_features;                                // image_embeds of the current image
        List<Rect2f> pred_boxes = new List<Rect2f>();          // 576 boxes in 768x768 pixels
        List<long[]> input_ids = new List<long[]>();           // one [16] id array per query
        List<float[]> text_features = new List<float[]>();     // one [512] embedding per query
        long[] attention_mask;

        int len_image_feature = 24 * 24 * 768;
        int cnt_pred_boxes = 576;
        int len_text_token = 16;
        int[] image_features_shape = { 1, 24, 24, 768 };
        int[] text_features_shape = { 1, 512 };

        public OWLVIT(string image_modelpath, string text_modelpath, string decoder_model_path, string vocab_path)
        {
            net = CvDnn.ReadNetFromOnnx(image_modelpath);

            options = new SessionOptions();
            options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;
            options.AppendExecutionProvider_CPU(0);
            onnx_session = new InferenceSession(text_modelpath, options);

            options_transformer = new SessionOptions();
            options_transformer.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;
            options_transformer.AppendExecutionProvider_CPU(0);
            // Fixed: the original passed `options` here instead of `options_transformer`
            onnx_session_transformer = new InferenceSession(decoder_model_path, options_transformer);

            load_tokenizer(vocab_path);
        }

        void load_tokenizer(string vocab_path)
        {
            tokenizer = new TokenizerClip();
            tokenizer.load_tokenize(vocab_path);
        }

        // In place: BGR -> RGB, then per channel (x / 255 - mean) / std as float32
        Mat normalize_(Mat src)
        {
            Cv2.CvtColor(src, src, ColorConversionCodes.BGR2RGB);
            Mat[] rgb = src.Split();
            for (int i = 0; i < rgb.Length; ++i)
            {
                rgb[i].ConvertTo(rgb[i], MatType.CV_32FC1, 1.0 / (255.0 * std[i]), (0.0 - mean[i]) / std[i]);
            }
            Cv2.Merge(rgb, src);
            foreach (Mat channel in rgb)
            {
                channel.Dispose();
            }
            return src;
        }

        float sigmoid(float x)
        {
            return (float)(1.0f / (1.0f + Math.Exp(-x)));
        }

        // Runs the image encoder once per image and caches image_embeds and pred_boxes
        public unsafe void encode_image(Mat srcimg)
        {
            pred_boxes.Clear();
            Mat temp_image = new Mat();
            Cv2.Resize(srcimg, temp_image, new Size(inpWidth, inpHeight));
            Mat normalized_mat = normalize_(temp_image);
            Mat blob = CvDnn.BlobFromImage(normalized_mat);   // HWC -> NCHW [1, 3, 768, 768]
            net.SetInput(blob);

            // Run inference. Assumes the output names come back in the order
            // image_embeds [1, 24, 24, 768], pred_boxes [1, 576, 4]
            Mat[] outs = new Mat[2] { new Mat(), new Mat() };
            string[] outBlobNames = net.GetUnconnectedOutLayersNames().ToArray();
            net.Forward(outs, outBlobNames);

            float* ptr_feat = (float*)outs[0].Data;
            image_features = new float[len_image_feature];
            for (int i = 0; i < len_image_feature; i++)
            {
                image_features[i] = ptr_feat[i];
            }

            // pred_boxes are normalized (cx, cy, w, h); convert to (x, y, w, h) in 768x768 pixels
            float* ptr_box = (float*)outs[1].Data;
            for (int i = 0; i < cnt_pred_boxes; i++)
            {
                float xc = ptr_box[i * 4 + 0] * inpWidth;
                float yc = ptr_box[i * 4 + 1] * inpHeight;
                Rect2f temp = new Rect2f();
                temp.Width = ptr_box[i * 4 + 2] * inpWidth;
                temp.Height = ptr_box[i * 4 + 3] * inpHeight;
                temp.X = xc - temp.Width * 0.5f;
                temp.Y = yc - temp.Height * 0.5f;
                pred_boxes.Add(temp);
            }

            // Release native buffers (normalized_mat is the same object as temp_image)
            temp_image.Dispose();
            blob.Dispose();
            foreach (Mat m in outs)
            {
                m.Dispose();
            }
        }

        // Tokenizes each query, pads it to 16 ids, and runs the text encoder
        public void encode_texts(List<string> texts)
        {
            text_features.Clear();
            input_ids.Clear();
            for (int i = 0; i < texts.Count; i++)
            {
                List<int> text_token = new List<int>();
                tokenizer.encode_text(texts[i], text_token);
                int len_ids = text_token.Count;

                long[] temp_ids = new long[len_text_token];
                attention_mask = new long[len_text_token];
                for (int j = 0; j < len_text_token; j++)
                {
                    bool real = j < len_ids;              // padding positions stay 0
                    temp_ids[j] = real ? text_token[j] : 0;
                    attention_mask[j] = real ? 1 : 0;
                }
                input_ids.Add(temp_ids);

                Tensor<long> input_tensor = new DenseTensor<long>(temp_ids, new[] { 1, len_text_token });
                Tensor<long> input_tensor_mask = new DenseTensor<long>(attention_mask, new[] { 1, len_text_token });
                input_container.Clear();
                input_container.Add(NamedOnnxValue.CreateFromTensor("input_ids", input_tensor));
                // Fixed: the original fed input_tensor (the ids) as attention_mask
                input_container.Add(NamedOnnxValue.CreateFromTensor("attention_mask", input_tensor_mask));

                using (var result = onnx_session.Run(input_container))
                {
                    // text_embeds: [1, 1, 512]
                    text_features.Add(result.First().AsTensor<float>().ToArray());
                }
            }
        }

        // Runs owlvit-post.onnx for one query; returns 576 logits, one per predicted box
        List<float> decode(float[] input_image_feature, float[] input_text_feature, long[] input_id)
        {
            // Inputs: image_embeds Float[1, 24, 24, 768],
            //         /owlvit/Div_output_0 Float[1, 512], input_ids Int64[1, 16]
            Tensor<float> input_tensor_image_embeds = new DenseTensor<float>(input_image_feature, image_features_shape);
            Tensor<float> input_tensor_Div_output_0 = new DenseTensor<float>(input_text_feature, text_features_shape);
            Tensor<long> input_ids_tensor = new DenseTensor<long>(input_id, new[] { 1, len_text_token });

            input_container.Clear();
            input_container.Add(NamedOnnxValue.CreateFromTensor("image_embeds", input_tensor_image_embeds));
            input_container.Add(NamedOnnxValue.CreateFromTensor("/owlvit/Div_output_0", input_tensor_Div_output_0));
            input_container.Add(NamedOnnxValue.CreateFromTensor("input_ids", input_ids_tensor));

            using (var result = onnx_session_transformer.Run(input_container))
            {
                return result.First().AsTensor<float>().ToList();
            }
        }

        public List<BoxInfo> detect(Mat srcimg, List<string> texts)
        {
            float ratioh = 1.0f * srcimg.Rows / inpHeight;
            float ratiow = 1.0f * srcimg.Cols / inpWidth;
            List<float> confidences = new List<float>();
            List<Rect> boxes = new List<Rect>();
            List<string> className = new List<string>();

            for (int i = 0; i < input_ids.Count; i++)
            {
                List<float> logits = decode(image_features, text_features[i], input_ids[i]);
                for (int j = 0; j < logits.Count; j++)
                {
                    float score = sigmoid(logits[j]);
                    if (score < bbox_threshold) continue;

                    // Map back to the original image
                    int xmin = (int)(pred_boxes[j].X * ratiow);
                    int ymin = (int)(pred_boxes[j].Y * ratioh);
                    int xmax = (int)((pred_boxes[j].X + pred_boxes[j].Width) * ratiow);
                    int ymax = (int)((pred_boxes[j].Y + pred_boxes[j].Height) * ratioh);
                    // Out-of-bounds protection
                    xmin = Math.Max(Math.Min(xmin, srcimg.Cols - 1), 0);
                    ymin = Math.Max(Math.Min(ymin, srcimg.Rows - 1), 0);
                    xmax = Math.Max(Math.Min(xmax, srcimg.Cols - 1), 0);
                    ymax = Math.Max(Math.Min(ymax, srcimg.Rows - 1), 0);

                    boxes.Add(new Rect(xmin, ymin, xmax - xmin, ymax - ymin));
                    confidences.Add(score);
                    className.Add(texts[i]);
                }
            }

            // Class-agnostic NMS: boxes of different queries can suppress each other
            int[] indices;
            CvDnn.NMSBoxes(boxes, confidences, bbox_threshold, nmsThreshold, out indices);

            List<BoxInfo> objects = new List<BoxInfo>();
            foreach (int idx in indices)
            {
                // Fixed: look up the kept candidates through indices[i],
                // not the loop counter as in the original
                BoxInfo temp = new BoxInfo();
                temp.text = className[idx];
                temp.prob = confidences[idx];
                temp.box = boxes[idx];
                objects.Add(temp);
            }
            return objects;
        }
    }
}
```
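`detect` turns each logit into a score with a sigmoid, keeps scores of at least `bbox_threshold`, and runs `CvDnn.NMSBoxes`, which returns the indices of the surviving candidates. Those indices must then be used to read the labels, scores and boxes. The dependency-free sketch below, a hypothetical `PostProcess` class that is not part of the project, shows the same idea with a plain greedy IoU suppression. It is a stand-in for illustration only; OpenCV's implementation may differ in details such as tie-breaking.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not part of the original project: sigmoid scoring plus a
// greedy, class-agnostic NMS over (x, y, width, height) pixel boxes.
public static class PostProcess
{
    public static float Sigmoid(float x) => (float)(1.0 / (1.0 + Math.Exp(-x)));

    public static float IoU((int x, int y, int w, int h) a, (int x, int y, int w, int h) b)
    {
        int ix1 = Math.Max(a.x, b.x), iy1 = Math.Max(a.y, b.y);
        int ix2 = Math.Min(a.x + a.w, b.x + b.w), iy2 = Math.Min(a.y + a.h, b.y + b.h);
        int inter = Math.Max(0, ix2 - ix1) * Math.Max(0, iy2 - iy1);
        int union = a.w * a.h + b.w * b.h - inter;
        return union > 0 ? (float)inter / union : 0f;
    }

    // Returns indices into boxes/scores of the kept candidates, highest score first
    public static List<int> Nms(IList<(int x, int y, int w, int h)> boxes, IList<float> scores,
                                float scoreThreshold, float iouThreshold)
    {
        var order = Enumerable.Range(0, boxes.Count)
                              .Where(i => scores[i] >= scoreThreshold)
                              .OrderByDescending(i => scores[i])   // stable for ties
                              .ToList();
        var keep = new List<int>();
        foreach (int i in order)
        {
            // Keep a box only if it does not overlap any already-kept box too much
            if (keep.All(k => IoU(boxes[k], boxes[i]) <= iouThreshold))
                keep.Add(i);
        }
        return keep;
    }
}
```

With boxes `(0,0,10,10)`, `(1,1,10,10)`, `(50,50,10,10)` and scores `0.9, 0.8, 0.7`, `Nms(boxes, scores, 0.02f, 0.5f)` keeps indices `0` and `2`, because the first two boxes overlap with an IoU of about 0.68. The caller then reads `className[k]`, `confidences[k]` and `boxes[k]` for each kept `k`, which is exactly what the loop over `indices` in `detect` must do.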
