Single-node ELK setup on CentOS 7: a hands-on guide

Single-node ELK setup on CentOS 7

Server configuration

Basic information

CentOS 7, 8 CPU cores, 32 GB RAM, 200 GB disk

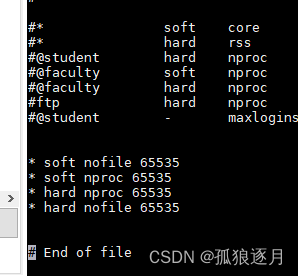

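Raise the server's limits on open files and processes (check the current open-file limit with ulimit -n).

Open the configuration file with the command below, scroll to the bottom, and add the following lines: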

vi /etc/security/limits.conf

* soft nofile 65535

* soft nproc 65535

* hard nproc 65535

* hard nofile 65535

Reboot the server so the new limits take effect:

reboot
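If you would rather script this step than edit limits.conf in vi, the sketch below appends the same four lines idempotently. `ensure_line` and `apply_limits` are hypothetical helper names made up for this example. A line is only written when an identical one is not already in the file, so re-running the script does not create duplicates:

```shell
# ensure_line LINE FILE: append LINE to FILE unless an identical line exists.
ensure_line() {
  # -q: quiet, -x: whole-line match, -F: fixed string (so "*" is literal)
  grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# apply_limits FILE: add the four limits used in this guide to FILE.
apply_limits() {
  local l
  for l in '* soft nofile 65535' '* soft nproc 65535' \
           '* hard nproc 65535' '* hard nofile 65535'; do
    ensure_line "$l" "$1"
  done
}
```

Run `apply_limits /etc/security/limits.conf` as root before rebooting. Check the result as one of the service users, for example `su - es -c 'ulimit -n'` once the es user from the next section exists. pam_limits does not apply `*` entries to root, so `ulimit -n` in a root shell may still show the old value.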

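Preparing the tar packages

Create a directory on the server with mkdir /home/elk and put the tar packages in it.

Tar package downloads (uploaded in two parts because the files are large):

ELK bundle, part 1

ELK bundle, part 2

Elasticsearch, Kibana, and Logstash are all version 8.6.2.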

es

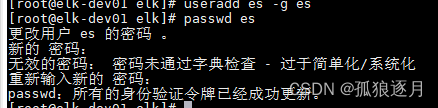

Since Elasticsearch 5.0 it cannot be started as root, so create an es user and set its password:

groupadd es

useradd es -g es

passwd es

Go to /home/elk and extract the tar package:

tar -zxvf elasticsearch-8.6.2-linux-x86_64.tar.gz

Move the extracted directory and create a data directory:

mv elasticsearch-8.6.2 /home/es/

cd /home/es/elasticsearch-8.6.2/

mkdir data

Back up the configuration file, then edit it:

cd config/

cp elasticsearch.yml elasticsearch.ymlbak001

vi elasticsearch.yml

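Settings to change. Each one already appears in the file as a comment, so you can simply uncomment and edit it, or add it at the bottom of the file: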

node.name: node-es

path.data: /home/es/elasticsearch-8.6.2/data

path.logs: /home/es/elasticsearch-8.6.2/logs

network.host: 0.0.0.0

http.port: 9200
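The same edits can be scripted instead of uncommenting each setting in vi. This is only a sketch: `set_yml_key` is a hypothetical helper that assumes the flat `key: value` layout of the default elasticsearch.yml. It replaces the first active or commented-out (`#key:`) line for the key and appends the setting when no such line exists:

```shell
# set_yml_key FILE KEY VALUE: set "KEY: VALUE" in a flat YAML file.
# The first line that starts with "KEY:" (optionally commented out with "#")
# is replaced in place; if there is no such line, the setting is appended.
set_yml_key() {
  local file="$1" key="$2" value="$3" tmp rc
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub(/^#[ \t]*/, "", line)               # look past a leading "#"
      if (!done && index(line, k ":") == 1) {  # first "KEY:" line
        print k ": " v
        done = 1
        next
      }
      print
    }
    END { if (!done) print k ": " v }         # not found: append
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps owner and mode
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For example, `set_yml_key /home/es/elasticsearch-8.6.2/config/elasticsearch.yml network.host 0.0.0.0`. Writing the result back with `cat` rather than `mv` keeps the file's owner and permissions. Nested YAML blocks are not handled by this helper.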

Give the es user ownership of the directory, since Elasticsearch cannot run as root:

chown -R es:es /home/es/elasticsearch-8.6.2/

Switch to the es user and start Elasticsearch in the foreground (use ./elasticsearch -d to run it in the background instead):

cd ../bin/

su es

./elasticsearch

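Open https://172.27.6.44:9200/ in a browser. It must be https; plain http does not work at this stage.

Accept the self-signed certificate warning and log in as elastic with the password printed in the console during the first startup. A JSON response with the node name and version information means Elasticsearch started successfully.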
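Elasticsearch can take a while to come up. As a convenience, a hypothetical `wait_for_port` helper can poll the HTTP port through bash's built-in `/dev/tcp` redirection before you open the browser or call the API:

```shell
# wait_for_port HOST PORT [TIMEOUT]: succeed once HOST:PORT accepts TCP
# connections, fail after TIMEOUT attempts one second apart (default 60).
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-60}" i=0
  while [ "$i" -lt "$timeout" ]; do
    # Opening /dev/tcp/HOST/PORT (a bash feature) only works if a server listens.
    if bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

Usage: `wait_for_port 172.27.6.44 9200 120 && curl -k -u elastic https://172.27.6.44:9200/`. Here `-k` accepts the self-signed certificate and `-u elastic` prompts for the password. If a firewall silently drops packets, each attempt can hang until the TCP connect timeout instead of failing immediately.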

Optional: disable the automatic GeoIP database download in the configuration:

cd /home/es/elasticsearch-8.6.2/config

vi elasticsearch.yml

# Disable the GeoIP database downloader

ingest.geoip.downloader.enabled: false

- To change the elastic user's password, run the following from the Elasticsearch bin directory while Elasticsearch is running:

./elasticsearch-reset-password --username elastic -i

kibana (open a new shell window)

Extract the package, create a kibana user, and move the files into place:

cd /home/elk/

tar -zxvf kibana-8.6.2-linux-x86_64.tar.gz

groupadd kibana

useradd -g kibana kibana

passwd kibana

mv kibana-8.6.2 /home/kibana/

Edit the configuration file:

cd /home/kibana/kibana-8.6.2/config

cp kibana.yml kibana.ymlbak001

vi kibana.yml

server.port: 5601

server.host: "0.0.0.0"

server.name: kibana

elasticsearch.hosts: ["http://172.27.6.44:9200/"]

# Display the Kibana UI in Chinese

i18n.locale: "zh-CN"

Fix ownership and start Kibana:

chown -R kibana:kibana /home/kibana/

su kibana

cd /home/kibana/kibana-8.6.2/bin

./kibana

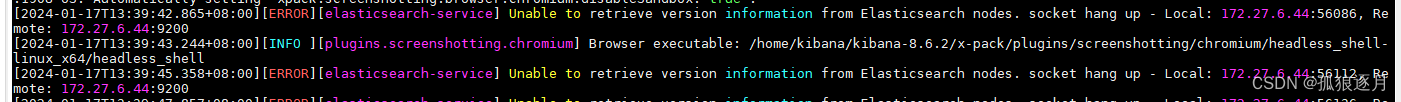

Error (1)

Unable to retrieve version information from Elasticsearch nodes. socket hang up

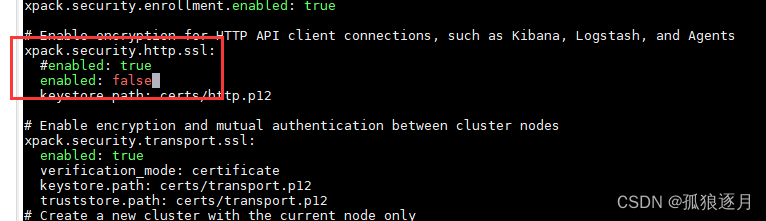

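Fix: go back to the Elasticsearch window, set xpack.security.http.ssl.enabled to false in the configuration file, and restart Elasticsearch. It is then served over plain http at http://172.27.6.44:9200/, which matches elasticsearch.hosts in kibana.yml: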

vi /home/es/elasticsearch-8.6.2/config/elasticsearch.yml
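To make this change from the command line instead, the sketch below assumes the security auto-configuration block that Elasticsearch 8 appends to elasticsearch.yml. In that block the setting is typically written as a nested `xpack.security.http.ssl:` section with an indented `enabled: true` line. `disable_http_ssl` is a hypothetical helper and relies on GNU sed (`-i`), as shipped with CentOS 7:

```shell
# disable_http_ssl FILE: turn off TLS on the HTTP layer in elasticsearch.yml.
# Handles the nested form written by the ES 8 security auto-configuration
#   xpack.security.http.ssl:
#     enabled: true
# as well as the flat form "xpack.security.http.ssl.enabled: true".
disable_http_ssl() {
  # Range: from "xpack.security.http.ssl:" to the next non-indented line;
  # inside it, only an indented "enabled: true" is changed to false.
  sed -i \
    -e '/^xpack\.security\.http\.ssl:/,/^[^[:space:]]/ s/^\([[:space:]]\{1,\}enabled:[[:space:]]*\)true/\1false/' \
    -e 's/^xpack\.security\.http\.ssl\.enabled:[[:space:]]*true/xpack.security.http.ssl.enabled: false/' \
    "$1"
}
```

Only the HTTP layer changes. The `xpack.security.transport.ssl` block further down is left untouched.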

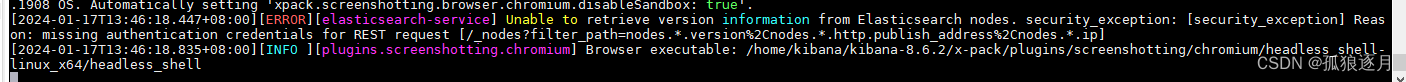

Error (2)

Unable to retrieve version information from Elasticsearch nodes. security_exception: [security_exception] Reason: missing authentication credentials for REST request [/_nodes?filter_path=nodes..version%2Cnodes..http.publish_address%2Cnodes.*.ip]

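Fix: set a password for the built-in kibana user in Elasticsearch. In 8.x this user is deprecated in favor of kibana_system, which can be set up the same way.

With Elasticsearch running, open a new shell window, switch to the es user, and go to the bin directory: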

su es

cd /home/es/elasticsearch-8.6.2/bin/

./elasticsearch-reset-password --username kibana -i

After setting the password, add the credentials to kibana.yml:

vi /home/kibana/kibana-8.6.2/config/kibana.yml

elasticsearch.username: "kibana"

elasticsearch.password: "the password you set"

Restart Kibana, then open http://172.27.6.44:5601/app/discover

Log in with the elastic username and password.

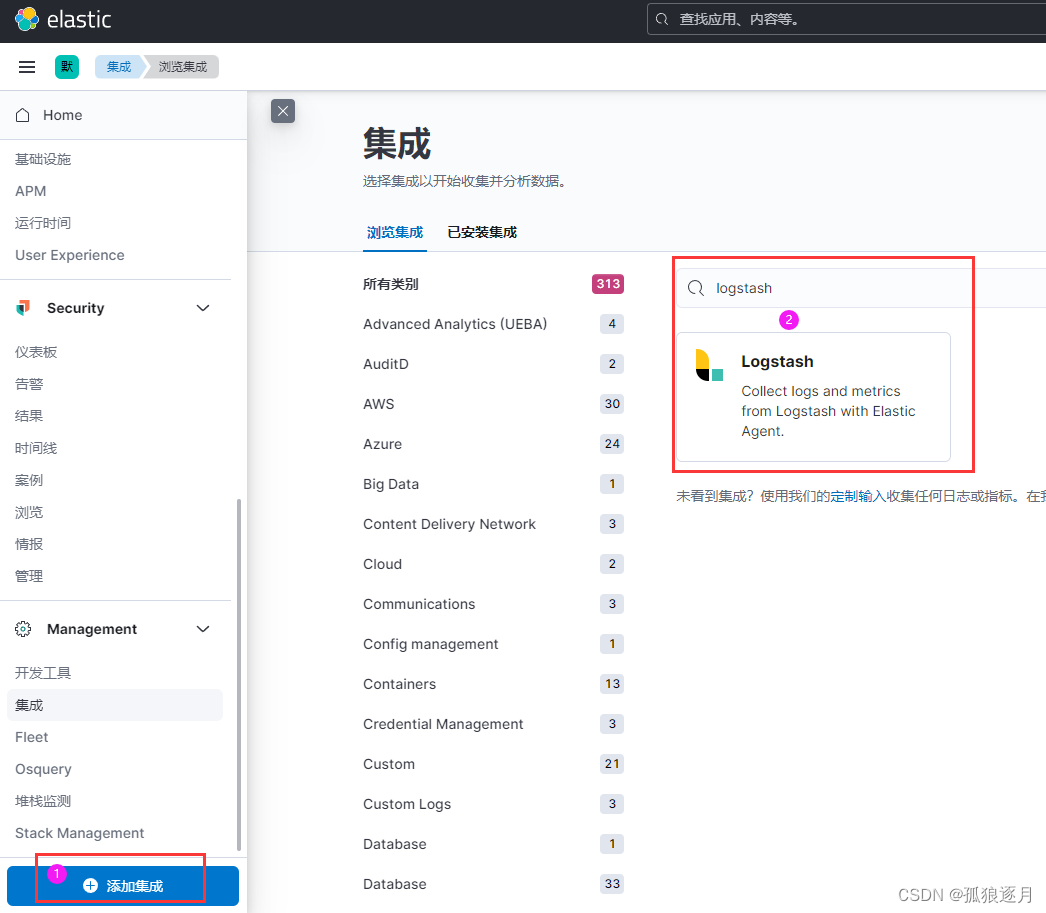

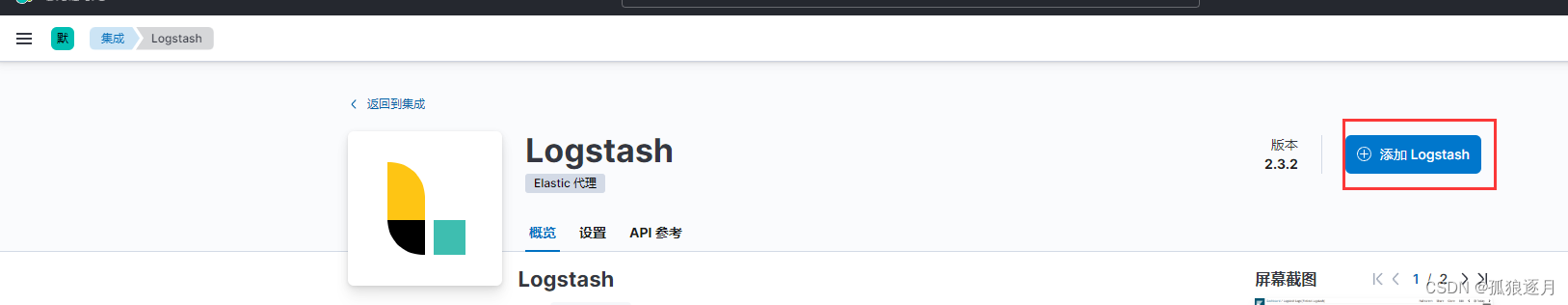

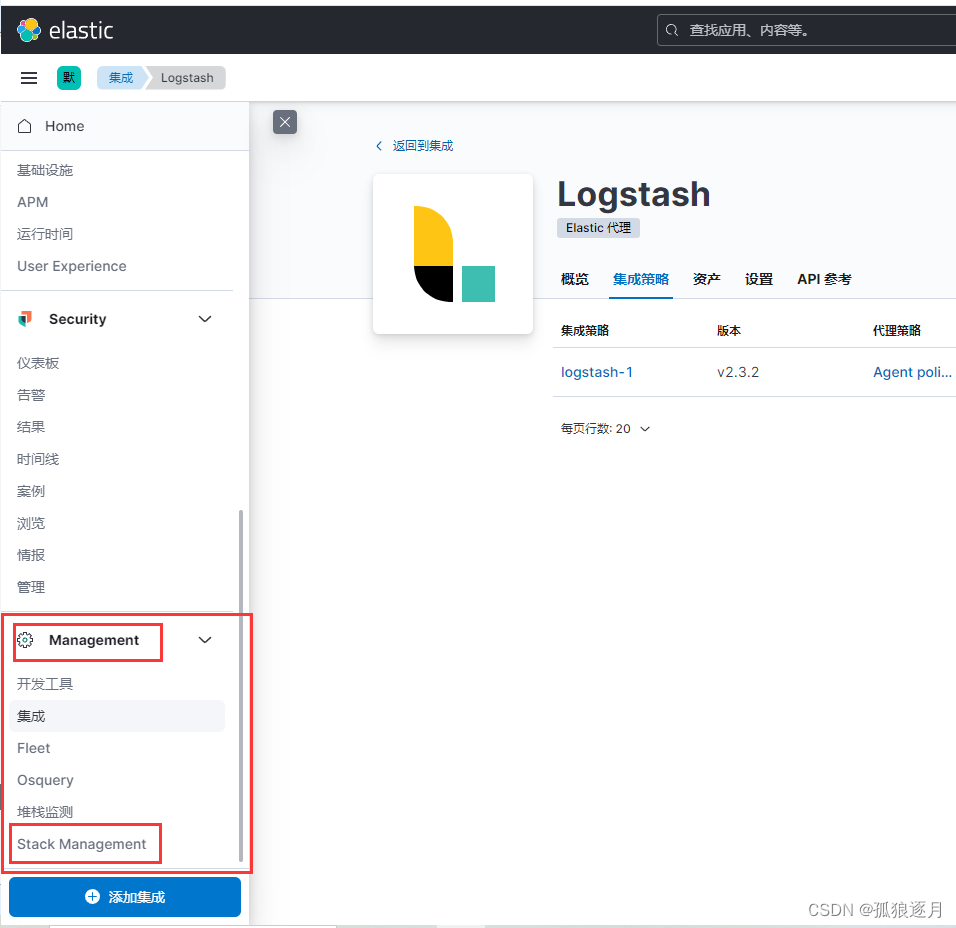

Adding Logstash

logstash

Extract the package, create a logstash user, and move the files into place:

cd /home/elk/

tar -zxvf logstash-8.6.2-linux-x86_64.tar.gz

groupadd logstash

useradd -g logstash logstash

passwd logstash

mv logstash-8.6.2 /home/logstash/

cd /home/logstash/logstash-8.6.2

Write a pipeline configuration file:

cd config

vi filetoes.conf

input {

file{

path => "/home/testFile.txt"

type => "log-file"

}

}

output {

elasticsearch {

hosts => ["http://172.27.6.44:9200"]

index => "log-file-%{+YYYY.MM.dd}"

user => "elastic"

password => "the password you set"

}

}
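The `%{+YYYY.MM.dd}` suffix is taken from the event's `@timestamp`, which Logstash formats in UTC. A small hypothetical helper (GNU `date` assumed) reproduces the index name for a given Unix time, which makes the effect easy to see:

```shell
# daily_index PREFIX [EPOCH]: print the index name Logstash would build from
# "PREFIX-%{+YYYY.MM.dd}" for an event at EPOCH seconds (default: now).
# The date is formatted in UTC, as Logstash does with @timestamp.
daily_index() {
  local prefix="$1" epoch="${2:-$(date +%s)}"
  printf '%s-%s\n' "$prefix" "$(date -u -d "@$epoch" +%Y.%m.%d)"
}
```

For example, 1700000000 is 2023-11-14 22:13:20 UTC, already 06:13 on November 15 in Beijing, yet `daily_index log-file 1700000000` gives log-file-2023.11.14. On a server in UTC+8 the daily index therefore rolls over at 08:00 local time.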

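Give the logstash user ownership of the directory, switch to it, and start Logstash with this configuration file: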

chown -R logstash:logstash /home/logstash/

su logstash

/home/logstash/logstash-8.6.2/bin/logstash -f /home/logstash/logstash-8.6.2/config/filetoes.conf

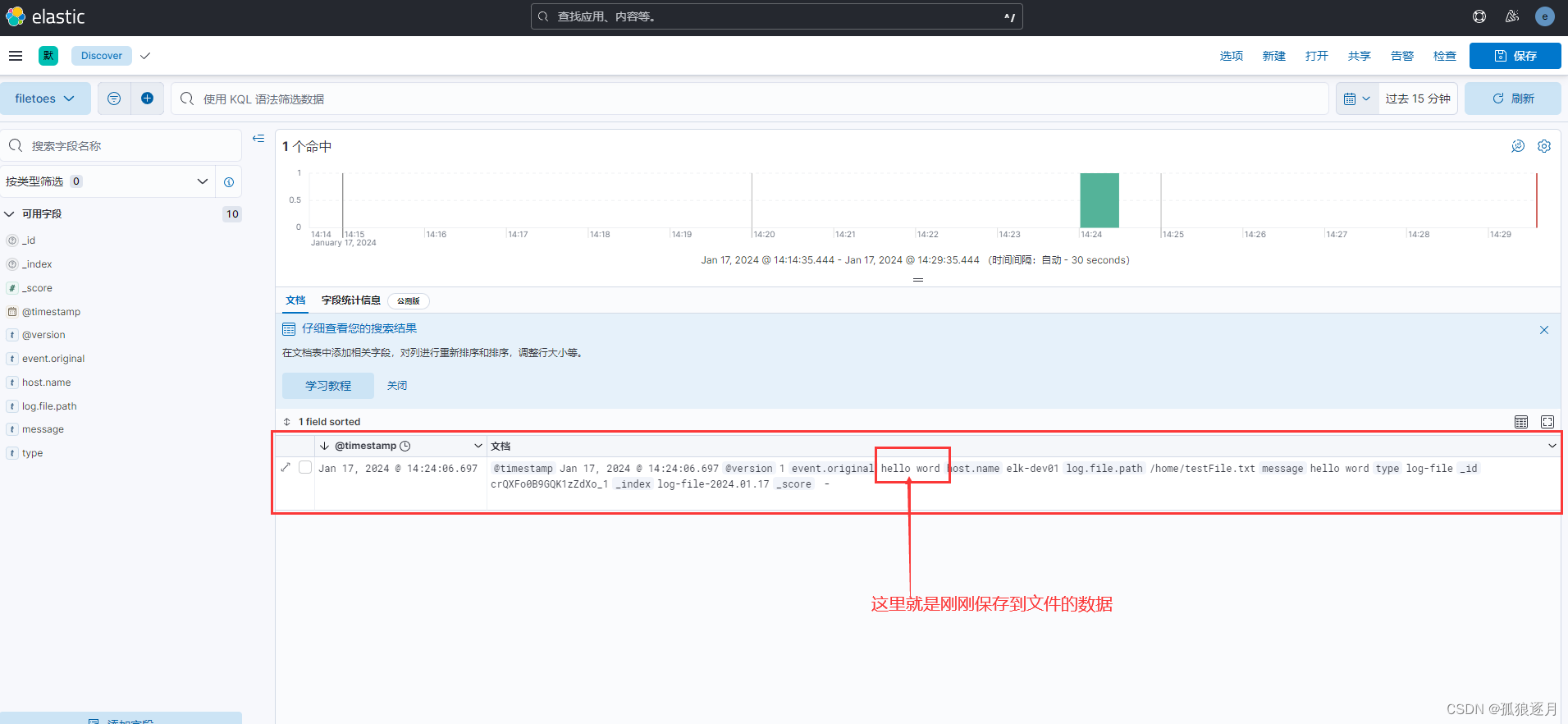

Append test content to check that the file is being watched

Open a new shell window:

cd /home

touch testFile.txt

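Append a line with echo while Logstash is running (by default the file input only reads lines added after it starts watching the file):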

echo "hello word" >> testFile.txt

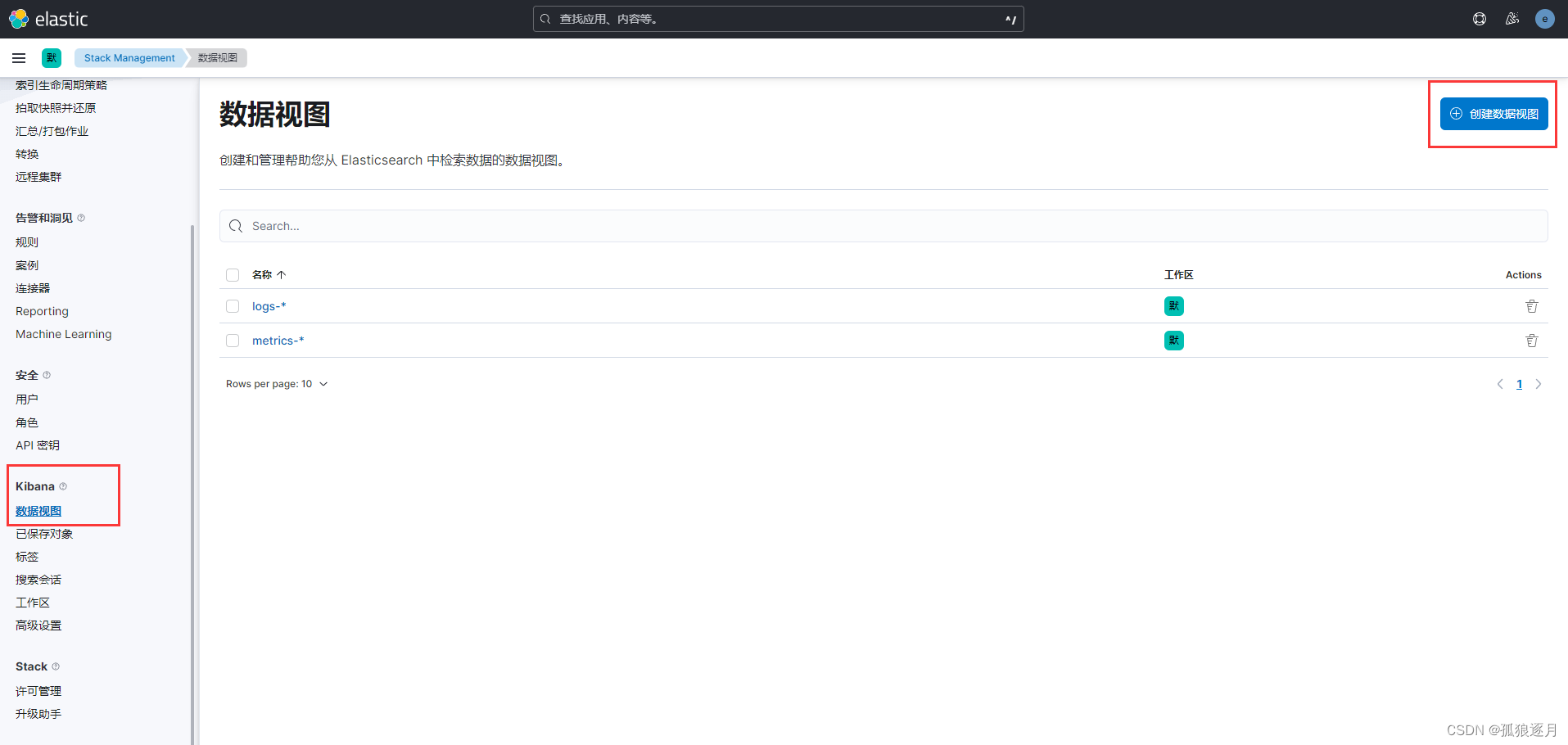

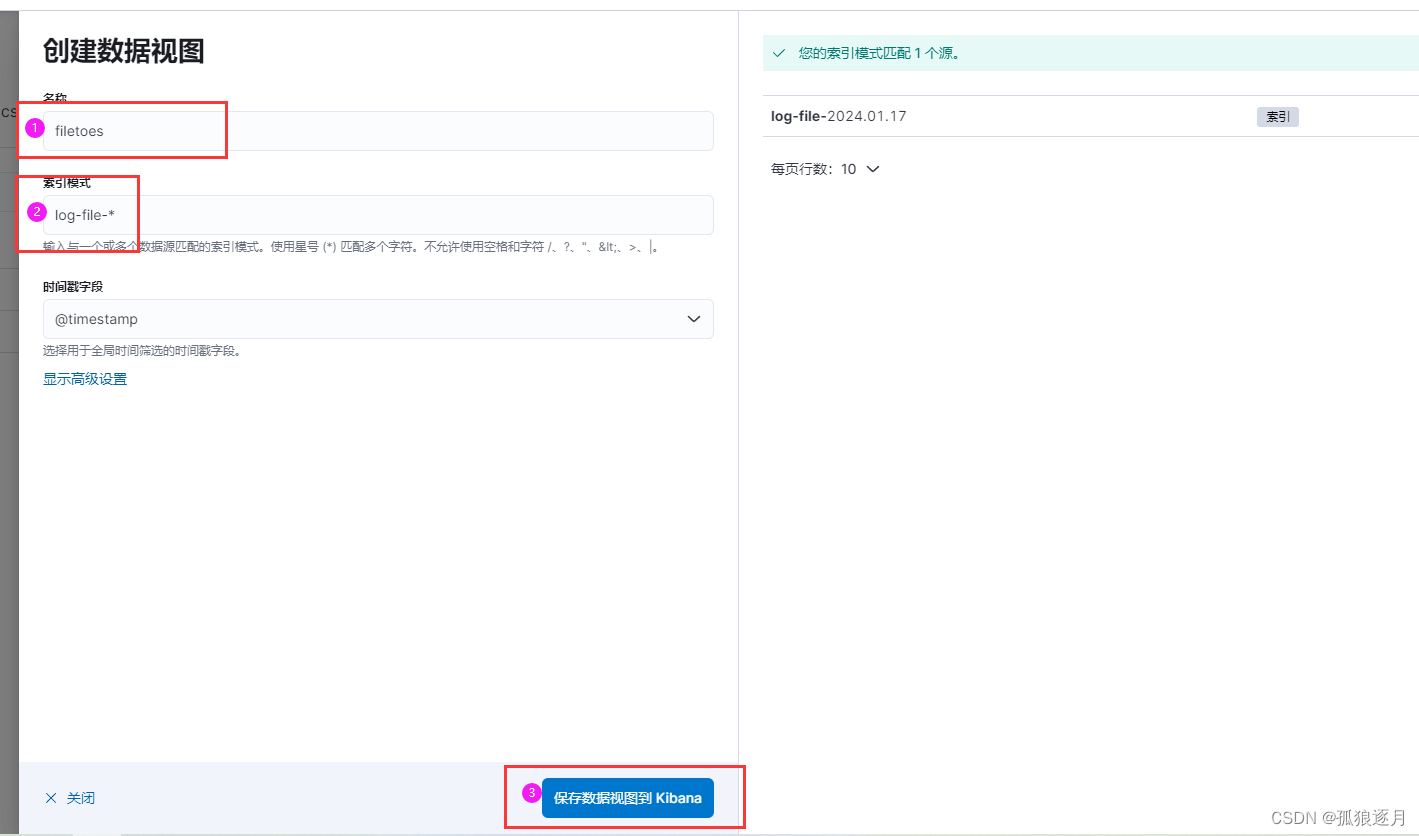

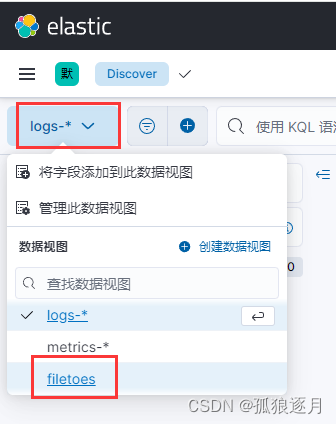

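View the log data

Log in to http://172.27.6.44:5601/app/discover and create a data view for the log-file-* indices.

At this point Elasticsearch, Kibana, and Logstash are connected. You can use the stack as is (just configure other data sources in the Logstash configuration), or put Kafka in between as a buffer for the data.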

Zookeeper

Set up the JDK and its environment variables (ZooKeeper and Kafka need Java)

cd /home/elk

tar -zxvf jdk-8u331-linux-x64.tar.gz

mkdir /home/java

mv jdk1.8.0_331 /home/java/

vi /etc/profile

export JAVA_HOME=/home/java/jdk1.8.0_331

export JRE_HOME=$JAVA_HOME/jre

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

source /etc/profile
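To confirm the JDK is picked up after `source /etc/profile`, check the version string. `java_major` is a hypothetical helper that reduces the first line of `java -version` output to the major version number, handling both the old `1.8.0_331` scheme and the newer `17.0.2` style:

```shell
# java_major LINE: print the Java major version from a "java -version" line,
# e.g. 'java version "1.8.0_331"' -> 8, 'openjdk version "17.0.2"' -> 17.
java_major() {
  local v
  # Take the text between the quotes after "version".
  v=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  case "$v" in
    1.*) v=${v#1.} ;;        # legacy "1.x" numbering used up to Java 8
  esac
  printf '%s\n' "${v%%[._]*}" # drop everything from the first "." or "_"
}
```

Usage: `java_major "$(java -version 2>&1 | head -n 1)"` should print 8 for the JDK installed above (`java -version` writes to stderr, hence the `2>&1`).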

Extract ZooKeeper and edit its configuration file

cd /home/elk

tar -zxvf apache-zookeeper-3.8.3-bin.tar.gz

mkdir /home/zookeeper

mv apache-zookeeper-3.8.3-bin /home/zookeeper/

cd /home/zookeeper/apache-zookeeper-3.8.3-bin/

mkdir data logs

cd conf/

cp zoo_sample.cfg zoo.cfg

vi zoo.cfg

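Change dataDir to /home/zookeeper/apache-zookeeper-3.8.3-bin/data, then add the transaction log directory and the server address:

# Change the dataDir path

dataDir=/home/zookeeper/apache-zookeeper-3.8.3-bin/data

# Add the transaction log directory

dataLogDir=/home/zookeeper/apache-zookeeper-3.8.3-bin/logs

# Add the server address

server.1=172.27.6.44:2888:3888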
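For reference, a standalone zoo.cfg like this can also be written in one go. This is a sketch: `write_zoo_cfg` is a hypothetical helper, tickTime, initLimit, syncLimit and clientPort are the values from zoo_sample.cfg, and the server.1 line is left out because a single standalone node does not need it. Note the capital L in `dataLogDir`:

```shell
# write_zoo_cfg ZK_HOME: create conf/, data/ and logs/ under ZK_HOME and write
# a minimal standalone conf/zoo.cfg (overwrites any existing zoo.cfg).
write_zoo_cfg() {
  local zk_home="$1"
  mkdir -p "$zk_home/conf" "$zk_home/data" "$zk_home/logs" || return 1
  cat > "$zk_home/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
clientPort=2181
dataDir=$zk_home/data
dataLogDir=$zk_home/logs
EOF
}
```

Usage: `write_zoo_cfg /home/zookeeper/apache-zookeeper-3.8.3-bin`.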

Go to the bin directory to manage ZooKeeper:

./zkServer.sh start    # start ZooKeeper

./zkServer.sh stop     # stop ZooKeeper

./zkServer.sh status   # show ZooKeeper status

kafka

Extract Kafka and edit its configuration file

cd /home/elk

tar -zxvf kafka_2.13-3.6.0.tgz

mkdir /home/kafka

mv kafka_2.13-3.6.0 /home/kafka/

cd /home/kafka/kafka_2.13-3.6.0/

mkdir logs

cd config/

cp server.properties server.propertiesbak001

vi server.properties

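Add or modify the following settings. Change them where they already appear in the file instead of just appending them at the end, and add any that are missing: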

listeners=PLAINTEXT://172.27.6.44:9092

advertised.listeners=PLAINTEXT://172.27.6.44:9092

auto.create.topics.enable=true

log.dirs=/home/kafka/kafka_2.13-3.6.0/logs

zookeeper.connect=172.27.6.44:2181
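Since most of these keys already exist in server.properties (some commented out), the in-place edit can be scripted. `set_property` is a hypothetical helper. It rewrites the first active or `#`-commented line that starts with `key=` and appends the key only when it is missing, as is typically the case for `auto.create.topics.enable` in the default file:

```shell
# set_property FILE KEY VALUE: set KEY=VALUE in a Java .properties file.
# The first line starting with "KEY=" (optionally commented out with "#")
# is replaced in place; if there is no such line, KEY=VALUE is appended.
set_property() {
  local file="$1" key="$2" value="$3" tmp rc
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub(/^#[ \t]*/, "", line)               # look past a leading "#"
      if (!done && index(line, k "=") == 1) {  # first "KEY=" line
        print k "=" v
        done = 1
        next
      }
      print
    }
    END { if (!done) print k "=" v }          # not found: append
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps owner and mode
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For example, from the config directory: `set_property server.properties listeners PLAINTEXT://172.27.6.44:9092`. Explanatory comment lines such as `#   listeners = listener_name://host_name:port` are not touched, because they do not start with `listeners=`.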

Make sure ZooKeeper is running, then go to Kafka's bin directory and start the broker:

cd ../bin/

./kafka-server-start.sh -daemon ../config/server.properties

Note: in this version the command-line tools take --bootstrap-server. The --zookeeper option of kafka-topics.sh was removed in Kafka 3.0.

List topics

# Old command (no longer supported)

./kafka-topics.sh --list --zookeeper 172.27.6.44:2181

# Command for the current version

./kafka-topics.sh --list --bootstrap-server 172.27.6.44:9092

Create a topic

./kafka-topics.sh --create --bootstrap-server 172.27.6.44:9092 --replication-factor 1 --partitions 1 --topic test

Producer

./kafka-console-producer.sh --bootstrap-server 172.27.6.44:9092 --topic test

Consumer

./kafka-console-consumer.sh --bootstrap-server 172.27.6.44:9092 --topic test

Logstash pipeline from Kafka to Elasticsearch

Open a new shell window:

su logstash

cd /home/logstash/logstash-8.6.2/config

vi kafkatoes.conf

input {

kafka {

bootstrap_servers => "172.27.6.44:9092"

topics => ["test"]

client_id => "kafka-test"

group_id => "kafka-test-group"

consumer_threads => 2

decorate_events => true

type => "log-kafka-test"

codec => json {

charset => "UTF-8"

}

}

}

output {

elasticsearch {

hosts => ["http://172.27.6.44:9200"]

index => "log-kafka-test-%{+YYYY.MM.dd}"

user => "elastic"

password => "the password you set"

}

}

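Because the Kafka input uses codec => json, every message written to the topic must be JSON. A message that fails to parse is still indexed, but only as raw text in the message field with a _jsonparsefailure tag.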
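Since non-JSON input does not parse, it can help to validate a message before producing it. The sketch assumes `python3` is available on the server and uses a hypothetical `is_json` helper:

```shell
# is_json TEXT: succeed if TEXT is valid JSON (assumes python3 is installed).
# The raw bytes are passed to json.loads, so UTF-8 content such as Chinese
# names is decoded regardless of the shell locale.
is_json() {
  printf '%s' "$1" |
    python3 -c 'import json, sys; json.loads(sys.stdin.buffer.read())' 2>/dev/null
}
```

Usage: `msg='{"username":"张三","age":18}'; is_json "$msg" && printf '%s\n' "$msg" | ./kafka-console-producer.sh --bootstrap-server 172.27.6.44:9092 --topic test`. The console producer sends each line it reads from stdin as one message.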

Start Logstash:

/home/logstash/logstash-8.6.2/bin/logstash -f /home/logstash/logstash-8.6.2/config/kafkatoes.conf

Start a Kafka console producer and enter a JSON message:

{"username":"张三","age":18}

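Open http://172.27.6.44:5601/app/discover, create a data view for the log-kafka-test-* indices, and view the data.

Once the message shows up, the single-node ELK integration with Kafka is complete.

Startup scripts and starting on boot

The services to start on boot are Elasticsearch, Kibana, Logstash, ZooKeeper, and Kafka.

Register ZooKeeper as a service and start it on boot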

cd /etc/init.d

vi zookeeper

#!/bin/bash

#chkconfig:2345 20 90

#description:zookeeper

#processname:zookeeper

export JAVA_HOME=/home/java/jdk1.8.0_331

case $1 in

start) su root /home/zookeeper/apache-zookeeper-3.8.3-bin/bin/zkServer.sh start;;

stop) su root /home/zookeeper/apache-zookeeper-3.8.3-bin/bin/zkServer.sh stop;;

status) su root /home/zookeeper/apache-zookeeper-3.8.3-bin/bin/zkServer.sh status;;

restart) su root /home/zookeeper/apache-zookeeper-3.8.3-bin/bin/zkServer.sh restart;;

*) echo "require start|stop|status|restart" ;;

esac

Make the script executable:

chmod +x zookeeper

Run the script to verify it:

service zookeeper status

service zookeeper stop

service zookeeper start

Add it to the boot startup list:

# Register the service with chkconfig

chkconfig --add zookeeper

# Enable it at boot

chkconfig zookeeper on

Register Kafka as a service and start it on boot

cd /etc/init.d

vi kafka

#!/bin/bash

# chkconfig: 2345 25 80

# start after zookeeper (start 20) and stop before it (stop 90)

# description: kafkaService ....

export JRE_HOME=/home/java/jdk1.8.0_331/jre

export JAVA_HOME=/home/java/jdk1.8.0_331

export PATH=$JAVA_HOME/bin:$PATH

case "$1" in

start)

/home/kafka/kafka_2.13-3.6.0/bin/kafka-server-start.sh -daemon /home/kafka/kafka_2.13-3.6.0/config/server.properties

;;

stop)

/home/kafka/kafka_2.13-3.6.0/bin/kafka-server-stop.sh

;;

restart)

/home/kafka/kafka_2.13-3.6.0/bin/kafka-server-stop.sh

sleep 3

/home/kafka/kafka_2.13-3.6.0/bin/kafka-server-start.sh -daemon /home/kafka/kafka_2.13-3.6.0/config/server.properties

;;

*)

echo $"Usage: $0 {start|stop|restart}"

exit 1

esac

Make the script executable:

chmod +x kafka

Run the script to verify it:

service kafka stop

service kafka start

Add it to the boot startup list:

# Register the service with chkconfig

chkconfig --add kafka

# Enable it at boot

chkconfig kafka on
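Kafka depends on ZooKeeper, so at boot its start priority (the second field of the `# chkconfig:` header; lower numbers run earlier) must be larger than ZooKeeper's. A hypothetical pair of helpers to check two init scripts:

```shell
# start_priority SCRIPT: print the start priority from the "# chkconfig:"
# header, e.g. "# chkconfig: 2345 20 90" -> 20.
start_priority() {
  awk '/^#[ \t]*chkconfig:/ {
         sub(/^#[ \t]*chkconfig:[ \t]*/, "")   # leave "RUNLEVELS START STOP"
         print $2
         exit
       }' "$1"
}

# starts_before A B: succeed if init script A starts before init script B.
starts_before() {
  [ "$(start_priority "$1")" -lt "$(start_priority "$2")" ]
}
```

Usage: `starts_before /etc/init.d/zookeeper /etc/init.d/kafka || echo "kafka would start before zookeeper"`. After changing a header, run `chkconfig --del kafka` and `chkconfig --add kafka` again so the rc.d links are regenerated with the new numbers.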

Register Elasticsearch as a service and start it on boot

cd /etc/init.d

vi elasticsearch

#!/bin/sh

# chkconfig: - 85 15

#description: elasticsearch

export ES_HOME=/home/es/elasticsearch-8.6.2

case "$1" in

start)

su es<<!

cd $ES_HOME

./bin/elasticsearch -d -p pid

!

echo "elasticsearch startup"

;;

stop)

kill -9 `cat $ES_HOME/pid`

echo "elasticsearch stopped"

;;

restart)

kill -9 `cat $ES_HOME/pid`

echo "elasticsearch stopped"

su es<<!

cd $ES_HOME

./bin/elasticsearch -d -p pid

!

echo "elasticsearch startup"

;;

*)

echo "start|stop|restart"

;;

esac

exit $?

Make the script executable:

chmod +x elasticsearch

Run the script to verify it:

service elasticsearch start

service elasticsearch stop

Add it to the boot startup list:

# Register the service with chkconfig

chkconfig --add elasticsearch

# Enable it at boot

chkconfig elasticsearch on
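The stop and restart branches above use `kill -9`, which gives Elasticsearch no chance to shut down cleanly. A gentler alternative, sketched as a hypothetical `stop_by_pidfile` helper, sends SIGTERM first and only falls back to SIGKILL after a timeout:

```shell
# stop_by_pidfile PIDFILE [TIMEOUT]: stop the process in PIDFILE gracefully.
# SIGTERM is sent first; SIGKILL is used only if the process is still alive
# after TIMEOUT seconds (default 30). The pid file is removed afterwards.
stop_by_pidfile() {
  local pidfile="$1" timeout="${2:-30}" pid i=0
  [ -f "$pidfile" ] || return 0                 # no pid file: nothing to do
  pid=$(cat "$pidfile")
  if kill "$pid" 2>/dev/null; then              # ask for a clean shutdown
    while kill -0 "$pid" 2>/dev/null; do        # still running?
      if [ "$i" -ge "$timeout" ]; then
        kill -9 "$pid" 2>/dev/null              # last resort
        break
      fi
      sleep 1
      i=$((i + 1))
    done
  fi
  rm -f "$pidfile"
}
```

With the function defined near the top of /etc/init.d/elasticsearch, the stop branch could call `stop_by_pidfile "$ES_HOME/pid" 60`, and restart could do the same before starting again. Waiting matters for restart because a new node cannot start while the old one still holds the lock on its data directory.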

Register Kibana as a service and start it on boot

Configure a pid file in kibana.yml:

pid.file: /home/kibana/kibana-8.6.2/pid

cd /etc/init.d

vi kibana

#!/bin/bash

#chkconfig: 2345 55 27

#description: kibana

#processname: kibana

KIBANA_HOME=/home/kibana/kibana-8.6.2

start(){

su - kibana -c "nohup $KIBANA_HOME/bin/kibana >>/home/kibana/kibana-8.6.2/logs/kibana.log 2>&1 &"

echo "kibana is started"

}

stop(){

kill -9 `cat $KIBANA_HOME/pid`

echo "kibana is stopped"

}

status(){

ps aux | grep $KIBANA_HOME

}

restart(){

stop

sleep 1

start

}

case "$1" in

"start")

start

;;

"stop")

stop

;;

"status")

status

;;

"restart")

restart

;;

*)

echo "Usage: $0 start|stop|restart|status"

;;

esac

Make the script executable:

chmod +x kibana

Run the script to verify it:

service kibana start

service kibana stop

Add it to the boot startup list:

# Register the service with chkconfig

chkconfig --add kibana

# Enable it at boot

chkconfig kibana on
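The `status` function above runs `ps aux | grep $KIBANA_HOME`, which always prints at least one line: the grep process itself. Because kibana.yml now writes a pid file, a hypothetical `is_running` helper can check that PID directly with `kill -0`:

```shell
# is_running PIDFILE: succeed if the process whose PID is in PIDFILE exists.
# "kill -0" sends no signal; it only checks that the PID can be signalled.
is_running() {
  local pidfile="$1" pid
  [ -f "$pidfile" ] || return 1
  pid=$(cat "$pidfile")
  [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null
}
```

A status function could then read `if is_running "$KIBANA_HOME/pid"; then echo "kibana is running"; else echo "kibana is not running"; fi`, and the Logstash script can use it the same way. Run it as root, as init scripts are: for an ordinary user, `kill -0` fails on processes owned by other users.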

Register Logstash as a service and start it on boot

cd /etc/init.d

vi logstash

#!/bin/bash

#chkconfig: 2345 60 35

#description: logstash

#processname: logstash

LOGSTASH_HOME=/home/logstash/logstash-8.6.2

start(){

su - logstash -c "nohup $LOGSTASH_HOME/bin/logstash -f $LOGSTASH_HOME/config/kafkatoes.conf >> /dev/null 2>&1 & echo \$! > $LOGSTASH_HOME/pid"

echo "logstash is started"

}

stop(){

kill -9 `cat $LOGSTASH_HOME/pid`

echo "logstash is stopped"

}

status(){

ps aux | grep $LOGSTASH_HOME

}

restart(){

stop

sleep 1

start

}

case "$1" in

"start")

start

;;

"stop")

stop

;;

"status")

status

;;

"restart")

restart

;;

*)

echo "Usage: $0 start|stop|restart|status"

;;

esac

Make the script executable:

chmod +x logstash

Run the script to verify it:

service logstash start

service logstash stop

Add it to the boot startup list:

# Register the service with chkconfig

chkconfig --add logstash

# Enable it at boot

chkconfig logstash on

