Dropout (code implementation in PyTorch)

Published: January 15, 2024

Image source: [PyTorch] Two uses of torch.nn.Dropout(): preventing overfitting & data augmentation (CSDN blog)

Note:

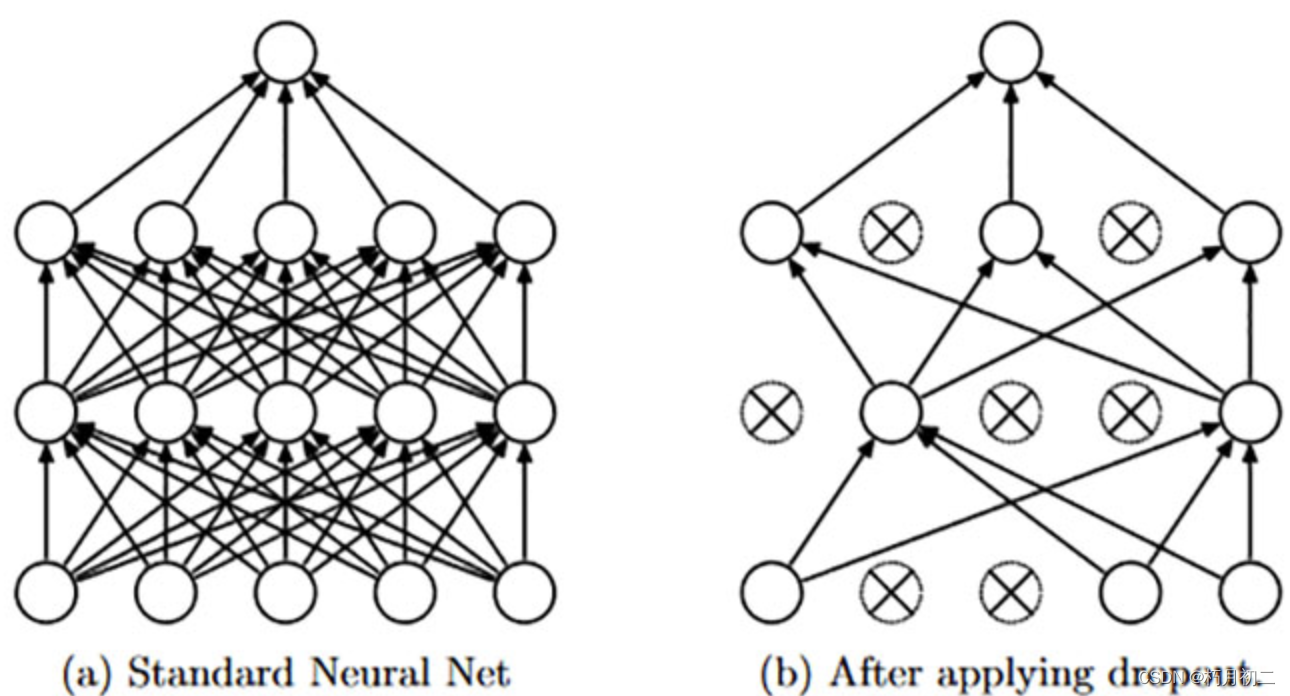

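Dropout can be used either to prevent overfitting or as a form of data augmentation.

Dropout is typically placed after a fully connected layer, where it deactivates a random subset of neurons. Why does the expected value of the output nevertheless stay unchanged?

Because during training, nn.Dropout() not only sets each input element (a neuron's activation, not a layer weight) to 0 with probability p, it also rescales the elements that survive by a factor of 1/(1-p). Each output element therefore has expectation (1-p) * x/(1-p) + p * 0 = x, the same as the input.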
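The rescaling can be checked numerically. The following is a minimal sketch, assuming an all-ones input of 100,000 elements and a fixed seed (both chosen here for illustration, not taken from the original post): the surviving elements should all equal 1/(1-p), roughly a fraction p of the elements should be zero, and the mean should stay close to 1.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)           # fixed seed so the run is repeatable (illustrative choice)

p = 0.5
drop = nn.Dropout(p=p)         # a freshly constructed module starts in training mode

x = torch.ones(100_000)        # all-ones input, so each survivor shows the scale factor
y = drop(x)

kept = y[y != 0]                               # elements that were not dropped
zero_fraction = (y == 0).float().mean().item() # share of elements set to 0

print(kept.unique())           # the only surviving value is 1 / (1 - p)
print(zero_fraction)           # close to p
print(y.mean().item())         # close to the input mean of 1.0
```

With p = 0.5 the scale factor is 2, which matches the doubled values in the printed outputs later in this post.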

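The input to nn.Dropout() can have any shape, and the output has the same shape as the input.

Dropout is meant for training only. In PyTorch, an nn.Dropout() layer is active only in training mode (model.train()); in evaluation mode (model.eval()) it is disabled automatically and passes its input through unchanged.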
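Both points (shape preservation and the train/eval switch) can be seen in a small sketch. The two-layer model below is a hypothetical example that is not part of the original post, and its layer sizes are arbitrary.

```python
import torch
import torch.nn as nn

# Hypothetical toy model; the layer sizes are arbitrary.
model = nn.Sequential(nn.Linear(8, 8), nn.Dropout(p=0.5))
x = torch.randn(4, 8)

model.train()                        # dropout active: each call draws a new random mask
train_out = model(x)

model.eval()                         # dropout disabled for every submodule
with torch.no_grad():
    eval_a = model(x)
    eval_b = model(x)
    passthrough = model[1](x)        # the Dropout layer alone, in eval mode

print(train_out.shape)               # same shape as the Linear output
print(model[1].training)             # False after model.eval()
print(torch.equal(eval_a, eval_b))   # repeated eval passes agree exactly
print(torch.equal(passthrough, x))   # in eval mode dropout returns its input unchanged
```

Calling model.train() again re-enables the random masking, so forgetting model.eval() before inference is a common source of noisy predictions.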

Code (the original data is not replaced, i.e. inplace=False, which is the default):

import torch
import torch.nn as nn

m = nn.Dropout(p=0.5)    # zeroes individual elements
n = nn.Dropout2d(p=0.5)  # zeroes entire channels, i.e. along dim=1 of an (N, C, H, W) input

# torch.autograd.Variable is deprecated; a plain tensor behaves the same here.
x = torch.randn(1, 2, 6, 3)
print(x)
print('****************************************************')
print(m(x))
print('****************************************************')
print(n(x))

Output:

tensor([[[[ 0.2964, -0.6547, -0.2419],
          [-0.2579,  0.2458, -2.2578],
          [ 1.0282, -0.5122,  0.3879],
          [ 0.0062, -1.1403,  0.6484],
          [-0.4762,  0.5283,  1.0279],
          [-0.2974,  1.2620, -0.9349]],

         [[ 1.4709, -0.2073, -0.0272],
          [ 0.1338,  0.4217, -0.0054],
          [ 0.9678,  2.6442,  1.1054],
          [-1.2418,  0.3227,  0.6570],
          [ 0.0729,  0.1963,  1.5051],
          [-0.2248, -1.7239,  0.1560]]]])
****************************************************
tensor([[[[ 0.5929, -0.0000, -0.4839],
          [-0.5159,  0.4917, -0.0000],
          [ 0.0000, -1.0245,  0.0000],
          [ 0.0000, -0.0000,  1.2969],
          [-0.0000,  1.0566,  2.0557],
          [-0.5949,  0.0000, -0.0000]],

         [[ 0.0000, -0.0000, -0.0545],
          [ 0.2676,  0.0000, -0.0108],
          [ 0.0000,  0.0000,  2.2108],
          [-2.4836,  0.6454,  0.0000],
          [ 0.1458,  0.3925,  0.0000],
          [-0.0000, -3.4478,  0.0000]]]])
****************************************************
tensor([[[[ 0.5929, -1.3094, -0.4839],
          [-0.5159,  0.4917, -4.5156],
          [ 2.0564, -1.0245,  0.7759],
          [ 0.0124, -2.2806,  1.2969],
          [-0.9523,  1.0566,  2.0557],
          [-0.5949,  2.5240, -1.8699]],

         [[ 0.0000, -0.0000, -0.0000],
          [ 0.0000,  0.0000, -0.0000],
          [ 0.0000,  0.0000,  0.0000],
          [-0.0000,  0.0000,  0.0000],
          [ 0.0000,  0.0000,  0.0000],
          [-0.0000, -0.0000,  0.0000]]]])

Code (the original data is replaced in place, i.e. inplace=True, which must be set explicitly because it is not the default):

import torch
import torch.nn as nn

m = nn.Dropout(p=0.5, inplace=True)
n = nn.Dropout2d(p=0.5, inplace=True)

# torch.autograd.Variable is deprecated; a plain tensor behaves the same here.
x = torch.randn(1, 2, 6, 3)
print(x)
print('****************************************************')
print(m(x))  # overwrites x in place
print('****************************************************')
print(n(x))  # operates on the already-modified x
print('****************************************************')
print(x)     # x now holds the result of both dropout layers

Output:

tensor([[[[ 0.2302, -0.5433,  0.0764],
          [ 0.1808,  0.0388,  0.7478],
          [-0.5275,  0.0267,  0.1992],
          [-0.8679, -0.4964,  1.0551],
          [-0.0639, -0.7051,  0.2459],
          [ 0.8896, -0.1499, -0.3867]],

         [[-0.9636,  0.5765, -1.6191],
          [-0.8224,  0.5951,  0.7729],
          [ 1.4425, -0.9612, -0.2738],
          [ 0.9648,  0.7734,  1.4985],
          [ 0.0044, -1.0005, -1.3330],
          [-2.4997, -1.5649, -0.6146]]]])
****************************************************
tensor([[[[ 0.0000, -1.0867,  0.1528],
          [ 0.0000,  0.0000,  1.4956],
          [-1.0549,  0.0000,  0.0000],
          [-1.7358, -0.0000,  2.1101],
          [-0.0000, -1.4102,  0.0000],
          [ 0.0000, -0.0000, -0.0000]],

         [[-0.0000,  1.1530, -0.0000],
          [-1.6448,  1.1901,  0.0000],
          [ 0.0000, -0.0000, -0.0000],
          [ 0.0000,  1.5467,  2.9970],
          [ 0.0089, -0.0000, -0.0000],
          [-0.0000, -3.1298, -1.2292]]]])
****************************************************
tensor([[[[ 0.0000, -2.1734,  0.3055],
          [ 0.0000,  0.0000,  2.9912],
          [-2.1099,  0.0000,  0.0000],
          [-3.4716, -0.0000,  4.2202],
          [-0.0000, -2.8204,  0.0000],
          [ 0.0000, -0.0000, -0.0000]],

         [[-0.0000,  0.0000, -0.0000],
          [-0.0000,  0.0000,  0.0000],
          [ 0.0000, -0.0000, -0.0000],
          [ 0.0000,  0.0000,  0.0000],
          [ 0.0000, -0.0000, -0.0000],
          [-0.0000, -0.0000, -0.0000]]]])
****************************************************
tensor([[[[ 0.0000, -2.1734,  0.3055],
          [ 0.0000,  0.0000,  2.9912],
          [-2.1099,  0.0000,  0.0000],
          [-3.4716, -0.0000,  4.2202],
          [-0.0000, -2.8204,  0.0000],
          [ 0.0000, -0.0000, -0.0000]],

         [[-0.0000,  0.0000, -0.0000],
          [-0.0000,  0.0000,  0.0000],
          [ 0.0000, -0.0000, -0.0000],
          [ 0.0000,  0.0000,  0.0000],
          [ 0.0000, -0.0000, -0.0000],
          [-0.0000, -0.0000, -0.0000]]]])
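Two things in the outputs above are worth checking directly: nn.Dropout2d zeroes whole channels rather than single elements, and with inplace=True the input tensor itself is overwritten, so a second dropout call acts on values that were already scaled (which is why the surviving entries in the last two tensors are 4 times the original values). A minimal sketch, assuming all-ones inputs and a fixed seed chosen here for illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)   # illustrative seed, not from the original post
p = 0.5

# 1) Dropout2d: every (sample, channel) slice is either all zeros
#    or uniformly scaled by 1 / (1 - p).
x = torch.ones(8, 16, 6, 3)
y = nn.Dropout2d(p=p)(x)
per_channel = y.flatten(start_dim=2)                       # shape (8, 16, 18)
channel_is_uniform = (per_channel == per_channel[..., :1]).all(dim=-1)
print(channel_is_uniform.all().item())

# 2) inplace=True: the returned tensor shares memory with the input,
#    so the input itself now holds the dropped-out values.
z = torch.ones(1000)
drop = nn.Dropout(p=p, inplace=True)
out = drop(z)
shares_memory = out.data_ptr() == z.data_ptr()
print(shares_memory)
print(torch.equal(out, z))

# 3) A second in-place call rescales the survivors again: 1 -> 2 -> 4.
drop(z)
values_after_two_calls = set(z.unique().tolist())
print(values_after_two_calls)
```

If the original tensor is still needed after the forward pass, keeping the default inplace=False (or cloning the tensor first) avoids this overwrite.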

Source: https://blog.csdn.net/qq_46012097/article/details/135606843

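This article was submitted by an internet user. The views expressed are the author's own and do not represent this site's position. This site only provides information storage services, holds no ownership, and assumes no related legal liability. If any content infringes rights, violates laws or regulations, or is factually inaccurate, please contact the 我的编程经验分享网 mailbox at chenni525@qq.com to file a complaint; once verified, the content will be removed immediately.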
