Model-based value iteration and policy iteration pseudocode

发布时间:2023年12月19日

Note that the symbols used in the?pseudocode below have the following meanings:

MDP: Markov Decision Process;V(s): Value function, the avg reture of one state;π(s): Policy, in the sense that?for a given states,π(s)represents the action that the agent will take in that state according to the policy, ?usually can be divided into a?random manner or a?deterministic manner;R(s,a): Immediate reward when taking actionain states;P(s'|s,a): Transition probability from statesto states'under an actiona;γ: Discount factor for future reward.

Value iteration:

function ValueIteration(MDP):

? ? // MDP is a Markov Decision Process

? ? V(s) = 0 for all states s ?// Initialization

? ? repeat until convergence:

? ? ? ? delta = 0

? ? ? ? for each state s:

? ? ? ? ? ? v = V(s)

? ? ? ? ? ? V(s) = max over all actions a of [ R(s, a) + γ * Σ P(s' | s, a) * V(s') ]

? ? ? ? ? ? delta = max(delta, |v - V(s)|)

? ? return V ?// Optimal value function

function ExtractOptimalPolicy(MDP, V):

? ? // MDP is a Markov Decision Process, V is the optimal value function

? ? for each state s:

? ? ? ? π(s) = argmax over all actions a of [ R(s, a) + γ * Σ P(s' | s, a) * V(s') ]

? ? return π ?// Optimal policyPolicy iteration:

function PolicyIteration(MDP):

// MDP is a Markov Decision Process

Initialize a policy π arbitrarily

repeat until policy converges:

// Policy Evaluation

V = EvaluatePolicy(MDP, π)

// Policy Improvement

π' = GreedyPolicyImprovement(MDP, V)

if π' = π:

break // Policy has converged

π = π'

return π // Optimal policy

function EvaluatePolicy(MDP, π):

// MDP is a Markov Decision Process, π is a policy

V(s) = 0 for all states s // Initialization

repeat until convergence:

delta = 0

for each state s:

v = V(s)

V(s) = Σ P(s' | s, π(s)) * [ R(s, π(s)) + γ * V(s') ]

delta = max(delta, |v - V(s)|)

return V // Value function under the given policy

function GreedyPolicyImprovement(MDP, V):

// MDP is a Markov Decision Process, V is a value function

for each state s:

π(s) = argmax over all actions a of [ R(s, a) + γ * Σ P(s' | s, a) * V(s') ]

return π // Improved policy

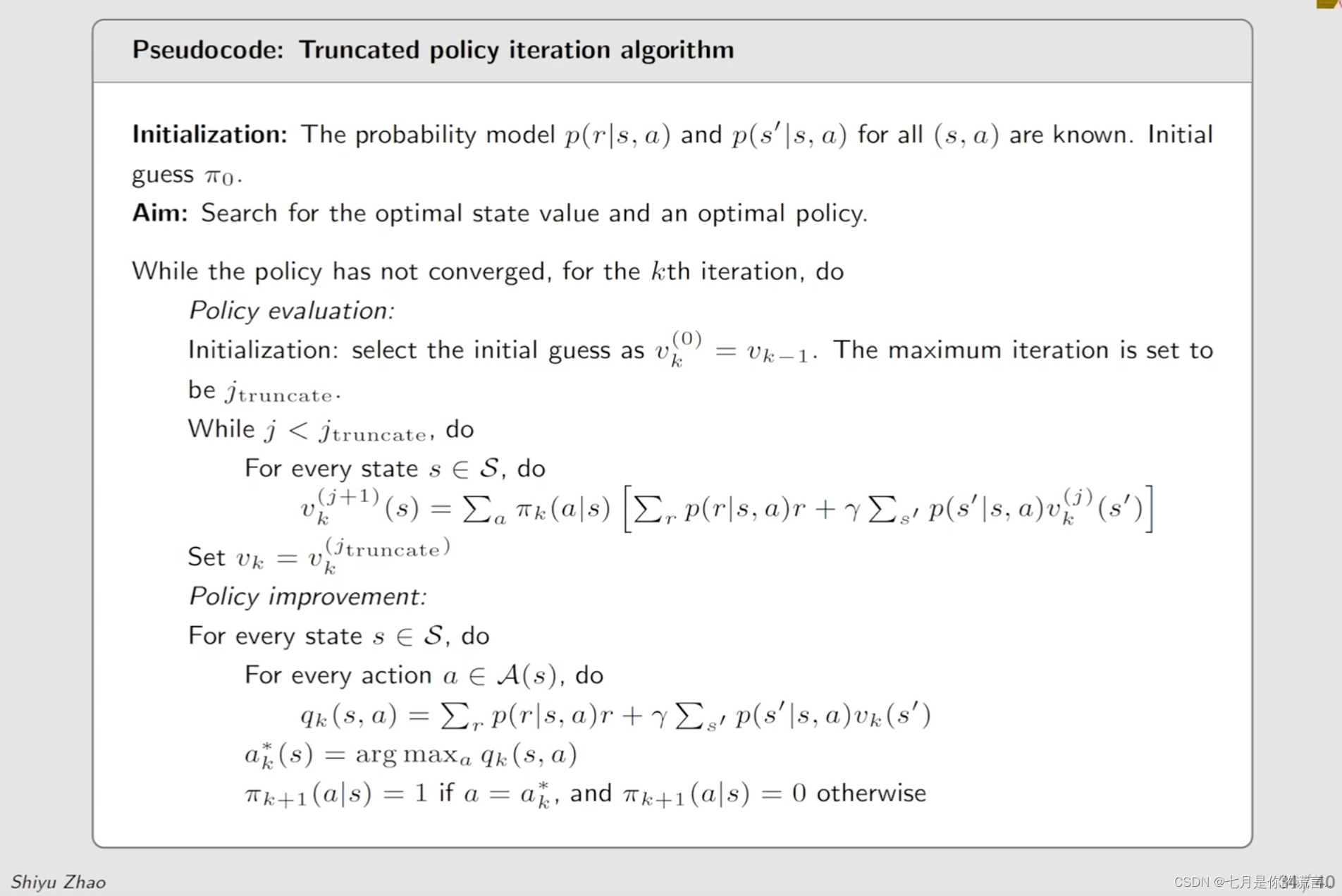

given the?shiyu Zhao's course [1] ppt :

References:

文章来源:https://blog.csdn.net/ambitiousssssss/article/details/135094307

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- P1029 [NOIP2001 普及组] 最大公约数和最小公倍数问题

- 定位Linux下写句柄错误问题

- 华为OD机试 - 字符串拼接(Java & JS & Python & C)

- 随记-nginx docker + SSL 配置 - 配置等资源挂宿主机

- Webpack打包简单的js文件

- 一文读懂「Transformer」

- Linux Zabbix企业级监控平台本地部署并实现远程访问

- 海外云手机助力企业拓展海外市场

- 向华为学习:基于BLM模型的战略规划研讨会实操的详细说明,含研讨表单(五)

- 德思特干货丨如何使用SBench6软件对数字化仪采集信号进行处理?(一)——波形算术运算与直方图功能