ML Design Pattern: Hyperparameter Tuning

Published: December 25, 2023

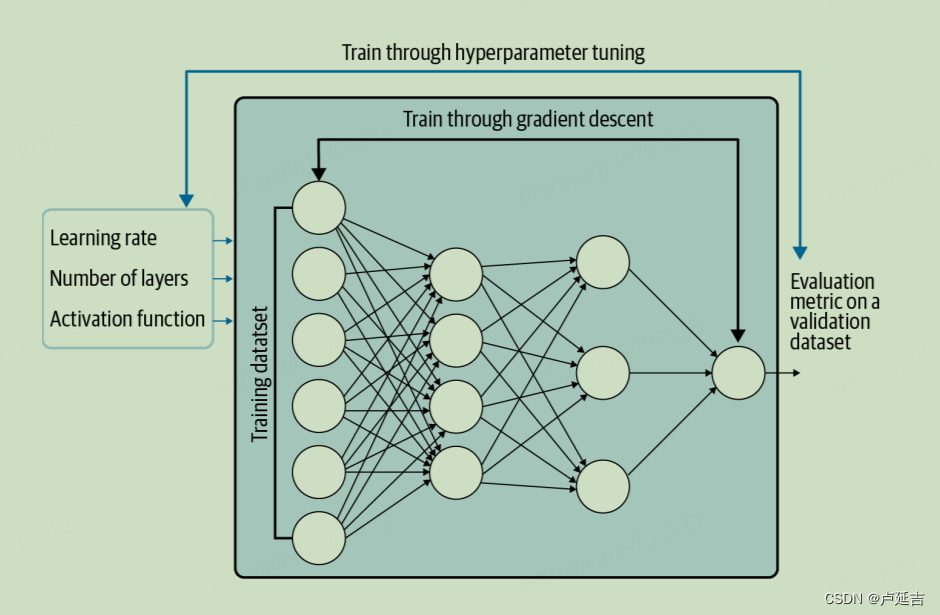

Hyperparameter tuning is the process of searching for the set of hyperparameters that gives a machine learning model its best validation performance. Hyperparameters are settings that control the learning process but are not themselves learned from the training data. They govern aspects such as model complexity, how quickly the model learns, and how sensitive it is to outliers.

Key concepts:

- Hyperparameters: Settings like learning rate, number of neurons in a neural network, or tree depth in a decision tree.

- Model performance: Measured using metrics like accuracy, precision, recall, or F1-score on a validation set held out from the training data.

- Search space: The range of possible hyperparameter values.

- Search strategy: The method used to explore the search space (e.g., grid search, random search, Bayesian optimization).
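To make the "model performance" metrics concrete, here is a minimal sketch that computes accuracy, precision, recall, and F1 for a binary classifier. The function name `classification_metrics` and the small validation arrays are made up for illustration; they are not from the original article.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical validation labels and model predictions.
val_true = [1, 0, 1, 1, 0, 0, 1, 0]
val_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(val_true, val_pred)
print(metrics)
```

During tuning, one of these numbers (computed on the validation set, never on the training set) is typically the score that the search strategy tries to maximize.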


Common hyperparameter tuning techniques:

- Grid search: Exhaustively evaluates every combination of hyperparameters within a specified grid.

- Random search: Randomly samples combinations of hyperparameters from the search space.

- Bayesian optimization: Uses a probabilistic model to guide the search, focusing on more promising areas.
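The first two strategies can be sketched in a few lines of plain Python. This is an illustrative sketch, not a production tuner: `validation_score` is a hypothetical stand-in for "train a model with these hyperparameters and score it on the validation set", and the search space values are invented. Bayesian optimization is not shown because it needs a surrogate model, which is beyond a short example.

```python
import itertools
import random


def validation_score(learning_rate, max_depth):
    """Hypothetical stand-in for training a model and scoring it on
    validation data. By construction it peaks at learning_rate=0.1,
    max_depth=5."""
    return 1.0 - 10 * (learning_rate - 0.1) ** 2 - 0.01 * (max_depth - 5) ** 2


# The search space: candidate values for each hyperparameter.
search_space = {
    "learning_rate": [0.001, 0.01, 0.1, 0.5],
    "max_depth": [3, 5, 7],
}


def grid_search(space, score_fn):
    """Evaluate every combination in the grid and keep the best one."""
    names = list(space)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def random_search(space, score_fn, n_trials, seed=0):
    """Evaluate n_trials randomly sampled combinations and keep the best."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


grid_best, grid_score = grid_search(search_space, validation_score)
rand_best, rand_score = random_search(search_space, validation_score, n_trials=5)
print("grid:  ", grid_best, grid_score)
print("random:", rand_best, rand_score)
```

Grid search here costs 12 evaluations (4 x 3), while random search uses a fixed budget of 5. That trade-off between coverage and cost is the main reason to prefer random search when the grid grows large.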

Importance of hyperparameter tuning:

- Can significantly affect model performance

- Helps the model generalize to unseen data, because candidates are compared on held-out validation data

- Can be computationally expensive, but is often worth the effort

Additional considerations:

- Early stopping: Monitor validation performance during training and stop when it starts to degrade, which limits overfitting.

- Regularization: Techniques that reduce model complexity to limit overfitting; their strength is usually set by hyperparameters (for example, an L2 penalty coefficient or a dropout rate).
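Early stopping is often implemented with a "patience" counter. The sketch below assumes that rule; the function name `early_stopping_epoch`, the `patience` parameter, and the loss values are all illustrative rather than taken from any particular library.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; best_epoch is the epoch whose weights one would keep.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Never triggered: training ran for all available epochs.
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses: improving at first, then degrading.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print(f"stopped at epoch {stop_epoch}, best epoch was {best_epoch}")
```

Note that `patience` is itself a hyperparameter: too small and training stops on noise, too large and the model trains well into the overfitting regime.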

Breakpoints in Decision Trees:

- Definition: Breakpoints are the specific values of a feature that partition the data into different branches of a decision tree.

- Function: They determine the decision-making rules at each node of the tree.

- Visualization: Imagine a tree with branches for different outcomes based on feature values (e.g., "age > 30" leads to one branch, "age <= 30" to another).

- Key points:

  - Chosen during training to maximize information gain or purity in each branch; they are learned parameters, whereas the maximum tree depth is a hyperparameter.

  - Their location significantly impacts model complexity and accuracy.
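As a rough illustration of how a breakpoint is chosen, the sketch below scans candidate thresholds on a single numeric feature and keeps the one with the highest information gain (entropy reduction). The helper names `entropy` and `best_breakpoint` and the toy "age" data are assumptions made for this example; real tree libraries use more efficient variants of the same idea.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((count / total) * math.log2(count / total)
                for count in Counter(labels).values())


def best_breakpoint(values, labels):
    """Return (threshold, information_gain) for the best split of the
    form 'value <= threshold' on one numeric feature."""
    parent_entropy = entropy(labels)
    distinct = sorted(set(values))
    best_threshold, best_gain = None, 0.0
    # Candidate thresholds: midpoints between consecutive distinct values.
    for low, high in zip(distinct, distinct[1:]):
        threshold = (low + high) / 2
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        weighted = (len(left) * entropy(left)
                    + len(right) * entropy(right)) / len(labels)
        gain = parent_entropy - weighted
        if gain > best_gain:
            best_threshold, best_gain = threshold, gain
    return best_threshold, best_gain


# Toy data: age versus whether a purchase was made.
ages = [22, 25, 28, 31, 35, 42, 50]
bought = ["no", "no", "no", "yes", "yes", "yes", "yes"]
threshold, gain = best_breakpoint(ages, bought)
print(f"split at age <= {threshold}, information gain = {gain:.3f}")
```

On this toy data the chosen breakpoint separates the two classes perfectly, so the gain equals the entropy of the unsplit labels.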

Weights in Neural Networks:

- Definition: Numerical values associated with connections between neurons, representing the strength and importance of each connection.

- Function: Determine how much influence one neuron's output has on another's activation.

- Visualization: Picture a network of interconnected nodes with varying strengths of connections (like thicker or thinner wires).

- Key points:

  - Learned during training to minimize error and optimize model performance.

  - Encode the model's knowledge, capturing patterns in the data.

  - Adjusting weights is the core of neural network learning.
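The difference between a learned weight and a hyperparameter shows up even in the smallest possible network. The sketch below trains a single linear neuron with gradient descent on mean squared error; `train_neuron` and the toy data (generated from y = 2x + 1) are illustrative assumptions. The weight and bias are learned, while `learning_rate` and `epochs` are hyperparameters fixed before training.

```python
def train_neuron(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ weight * x + bias by gradient descent on mean squared error."""
    weight, bias = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to weight and bias.
        errors = [weight * x + bias - y for x, y in zip(xs, ys)]
        grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(2 * e for e in errors) / n
        # Step against the gradient, scaled by the learning rate.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
weight, bias = train_neuron(xs, ys)
print(f"weight = {weight:.4f}, bias = {bias:.4f}")
```

A learning rate that is too large would make these updates diverge and one that is too small would need far more epochs, which is exactly the kind of setting hyperparameter tuning is meant to find.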

Support Vectors in SVMs:

- Definition: Data points that lie closest to the decision boundary in SVMs, crucial for defining the margin that separates classes.

- Function: Determine the optimal hyperplane that best separates classes in high-dimensional space.

- Visualization: Imagine points near a dividing line acting as "fence posts" to define the boundary.

- Key points:

  - Usually only a subset of the training points become support vectors, so the trained model needs to store only those points.

  - Removing non-support vectors from the training set doesn't change the decision boundary.

  - Predictions depend only on the support vectors, which makes them highly influential.
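The "fence post" idea can be shown in a deliberately simplified setting: one feature, two linearly separable classes, and a hard margin. Under those assumptions the maximum-margin boundary is the midpoint between the two closest points of opposite classes, and those two points are the support vectors. The function `max_margin_1d` is a hypothetical helper for this special case, not a general SVM solver.

```python
def max_margin_1d(points):
    """Hard-margin boundary for separable 1-D data.

    points: list of (x, label) pairs with label in {-1, +1}, where every
    -1 point lies to the left of every +1 point.
    Returns (boundary, support_vectors).
    """
    negatives = [x for x, label in points if label == -1]
    positives = [x for x, label in points if label == +1]
    left_sv, right_sv = max(negatives), min(positives)
    if left_sv >= right_sv:
        raise ValueError("classes are not separable in this simplified setting")
    # The boundary sits halfway between the two closest opposite-class points.
    boundary = (left_sv + right_sv) / 2
    return boundary, [(left_sv, -1), (right_sv, +1)]


data = [(1.0, -1), (2.0, -1), (3.5, -1), (6.5, +1), (8.0, +1), (9.0, +1)]
boundary, support_vectors = max_margin_1d(data)

# Refit using only the support vectors: the boundary does not move.
boundary_sv_only, _ = max_margin_1d(support_vectors)
print(boundary, support_vectors, boundary_sv_only)
```

Refitting on the two support vectors alone gives the same boundary as fitting on all six points, which mirrors the claim that non-support vectors can be removed without changing the result.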

KerasTuner

KerasTuner is a library that automates hyperparameter tuning for Keras models, making it easier to find optimal configurations.

Source: https://blog.csdn.net/weixin_38233104/article/details/135184350

