k8s external services: Ingress

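A Service plays two roles:

- Inside the cluster: it continuously tracks pod changes and updates the pod entries in its Endpoints object. Because pod IP addresses keep changing, this works as a service discovery mechanism.

- Outside the cluster: it acts like a load balancer that forwards traffic by IP + port to the pods. It does not route by URL (HTTP/HTTPS).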

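A Service has four types:

ClusterIP: the default type when a Service is created. It is reachable only from inside the cluster.

NodePort: the chain is container port > Service port > nodePort. Once a nodePort is set, that port is opened on every node. Default port range: 30000-32767. Access: node IP + a port in 30000-32767, with load balancing across the pods.

LoadBalancer: a Service type for cloud platforms. The cloud platform provides the IP address of the load balancer.

ExternalName: maps the Service to a domain name.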
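To make two of these types concrete, here is a minimal sketch of a NodePort Service and an ExternalName Service. The names demo-nodeport and demo-external, the label app: demo, the port 30080, and the domain db.example.com are placeholders chosen for illustration; none of them come from the experiments in this article.

```yaml
# Sketch only: all names, labels, and the domain are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: demo-nodeport
spec:
  type: NodePort
  selector:
    app: demo              # assumed label on the backend pods
  ports:
    - protocol: TCP
      port: 80             # Service port inside the cluster
      targetPort: 80       # container port
      nodePort: 30080      # opened on every node; must fall in 30000-32767 by default
---
apiVersion: v1
kind: Service
metadata:
  name: demo-external
spec:
  type: ExternalName
  externalName: db.example.com   # placeholder domain the Service name resolves to
```

If nodePort is omitted, Kubernetes picks a free port from the range on its own.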

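What is Ingress?

Ingress is an API object, configured through a YAML file.

Its role is to define the rules for how requests are forwarded to Services. It is effectively a configuration template.

Ingress exposes Services inside the cluster over HTTP and HTTPS.

Ingress gives Services an externally reachable URL (domain name), load balancing, and SSL/TLS (encrypted HTTPS) support, which amounts to domain-based load balancing.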
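The TLS capability mentioned above is configured in the Ingress itself. The following is a minimal sketch, assuming a Secret of type kubernetes.io/tls named demo-tls-secret already exists in the same namespace; the Ingress name, host, Secret name, and Service name are all hypothetical.

```yaml
# Sketch only: demo-tls-ingress, www.example.com, demo-tls-secret and demo-svc are placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-tls-ingress
spec:
  tls:
    - hosts:
        - www.example.com
      secretName: demo-tls-secret   # assumed to hold tls.crt and tls.key
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: demo-svc
                port:
                  number: 80
```

With this in place the controller terminates HTTPS for the listed host and forwards plain HTTP to the backend Service.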

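ingress-controller

The ingress-controller is the program that actually implements the reverse proxy and load balancing. It parses the rules defined in Ingress objects and forwards requests according to them.

The ingress-controller runs in the cluster as a pod.

The ingress-controller is not a built-in k8s component. It is a generic term: any component that can act as a reverse proxy and load balancer, parse Ingress objects, and forward requests according to their rules is an ingress-controller.

nginx-ingress-controller and traefik are both open-source ingress-controllers.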

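What an Ingress resource defines

1. The routing rules for external traffic.

2. How the service is exposed: host name, access path, and other options.

3. Load balancing. This part is carried out by the ingress-controller.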

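Ways to expose services with Ingress

1. Deployment + LoadBalancer mode: Ingress is deployed on a public cloud, for example Huawei Cloud, Alibaba Cloud, or Tencent Cloud.

The ingress configuration file contains a Service with a type field, set as a key-value pair: type: LoadBalancer

The public cloud platform creates a load balancer for the LoadBalancer Service and binds a public IP address to it. Pointing a domain name at that public address exposes the cluster externally.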
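A minimal sketch of such a Service is shown here. It assumes the controller pods live in the ingress-nginx namespace and carry the same two labels that the service-nodeport.yaml file later in this article selects on; on a real cloud platform, provider-specific annotations may also be needed and are not shown.

```yaml
# Sketch only: a LoadBalancer Service in front of the ingress-controller pods.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
spec:
  type: LoadBalancer       # the cloud platform provisions the external load balancer
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
```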

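2. DaemonSet + hostNetwork + nodeSelector mode:

The DaemonSet creates one pod on each node.

hostNetwork means the pod shares the network namespace of the node host. Containers in the pod can use the node's IP + ports directly and can access network resources on the host.

nodeSelector picks, by label, the nodes on which nginx-ingress-controller is deployed.

Drawback: it uses the node host's network and ports directly, so only one ingress-controller pod can be deployed per node.

The DaemonSet + hostNetwork + nodeSelector mode performs best and is well suited to high-concurrency production environments.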

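Ingress data flow:

- The client requests a domain name, and the request first goes to DNS.

- DNS resolves the domain name to the node where the ingress-controller runs.

- The ingress-controller runs on that node as a pod. With hostNetwork it shares the node host's network.

- The Ingress configuration defines the URL rules (host and path).

- Based on the matching Ingress rule, the request is directed to a Service.

- The Service's Endpoints identify the pods that can receive the request.

- In the end it is the ingress-controller that forwards the HTTP/HTTPS request to the different pods, which achieves load balancing.

Here the Service and Endpoints serve for discovery and monitoring.

The actual load balancing is done by the ingress-controller.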

How is the DaemonSet + hostNetwork + nodeSelector mode implemented?

Experiment setup:

master01 20.0.0.32

node01 20.0.0.34

node02 20.0.0.35

master01--

Edit the ingress YAML file

vim mandatory.yaml

191 #kind: Deployment

192 kind: DaemonSet

200 # replicas: 1

215 hostNetwork: true

220 test1: "true"
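For orientation, the edited part of mandatory.yaml should end up looking roughly like the skeleton below. This is a trimmed sketch, not the full file: the metadata, labels, and image reference are assumptions based on the 0.30.0 manifest, and the args, env, ports, and service account of the real file are omitted.

```yaml
# Trimmed sketch of the controller workload after the edits; not a drop-in replacement.
apiVersion: apps/v1
kind: DaemonSet                      # was: kind: Deployment
metadata:
  name: nginx-ingress-controller     # assumed name
  namespace: ingress-nginx
spec:
  # replicas is removed: a DaemonSet runs one pod per selected node
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true              # share the node's network namespace
      nodeSelector:
        test1: "true"                # only nodes carrying this label run the controller
      containers:
        - name: nginx-ingress-controller
          # assumed image reference; keep whatever your mandatory.yaml specifies
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
```

The nodeSelector value is quoted because label values are strings; an unquoted true would be parsed as a boolean and rejected.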

Load the nginx-ingress-controller image on every node host

tar -xf ingree.contro-0.30.0.tar.gz

docker load -i ingree.contro-0.30.0.tar

master01--

kubectl label nodes node02 test1=true

#the label must match the nodeSelector set in mandatory.yaml (test1: "true")

kubectl apply -f mandatory.yaml

kubectl get pod -n ingress-nginx

master01---

vim nginx.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-client-storageclass
  resources:
    requests:
      storage: 2Gi
---
#Define the pods
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
  labels:
    app: nginx1
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx1
  template:
    metadata:
      labels:
        app: nginx1
    spec:
      containers:
        - name: nginx
          image: nginx:1.22
          volumeMounts:
            - name: nfs-pvc
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nfs-pvc
          persistentVolumeClaim:
            claimName: nfs-pvc
---
#Define the Service
apiVersion: v1
kind: Service
metadata:
  name: nginx-app-svc
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  selector:
    app: nginx1
---
#Define the Ingress
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-app-ingress
spec:
  rules:
    - host: www.test1.com
      http:
        paths:
          - path: /
            #Match from the root path
            pathType: Prefix
            #Prefix matching: any path starting with / matches, e.g. www.test1.com/www1/www2/www3
            backend:
              #The backend Service
              service:
                name: nginx-app-svc
                port:
                  number: 80
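The pathType field used above also accepts Exact. The sketch below contrasts the two; the Ingress name, host, paths, and Service names are hypothetical and only serve to show the matching behaviour.

```yaml
# Sketch only: demo-pathtypes, www.example.com, demo-app-svc and demo-health-svc are placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-pathtypes
spec:
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /app
            pathType: Prefix    # matches /app, /app/ and /app/v1, but not /application
            backend:
              service:
                name: demo-app-svc
                port:
                  number: 80
          - path: /healthz
            pathType: Exact     # matches /healthz only, not /healthz/
            backend:
              service:
                name: demo-health-svc
                port:
                  number: 80
```

Prefix matching works on whole path elements split by /, which is why /application does not match the /app rule.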

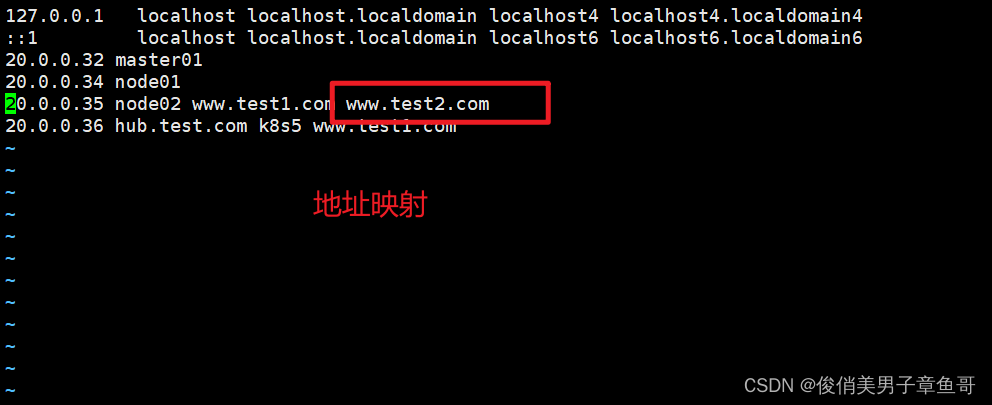

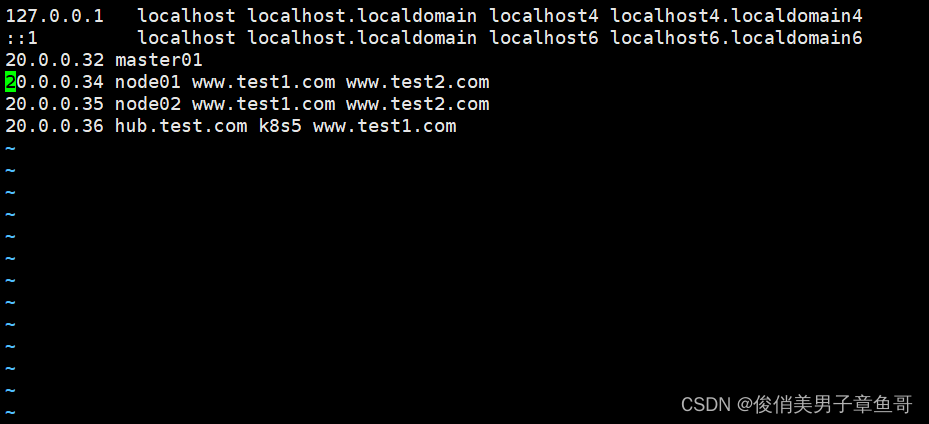

vim /etc/hosts

20.0.0.32 master01

20.0.0.34 node01

20.0.0.35 node02 www.test1.com

20.0.0.36 hub.test.com k8s5

#Map domain names to IP addresses

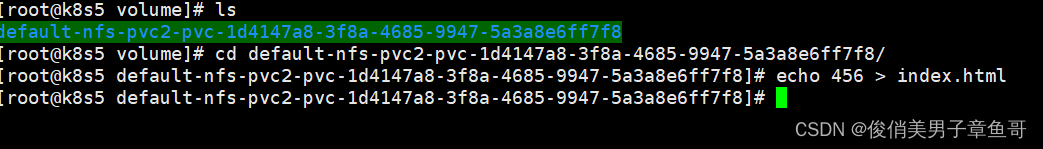

k8s5---

After entering the mounted volume directory:

echo 123 > index.html

master01---

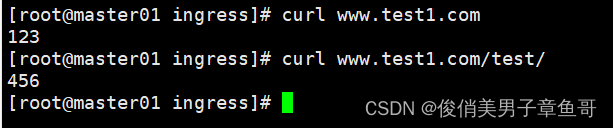

curl www.test1.com

#Test whether the page is reachable

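Experiment complete! The steps, in order: create the address mapping, go back to the k8s host and check that the shared directory was generated, then test that access succeeds.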

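Port 8181 is the default backend configured in nginx-ingress-controller. It is a reverse proxy port.

Any request that does not match the Ingress configuration is forwarded to port 8181.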

Deployment + NodePort mode

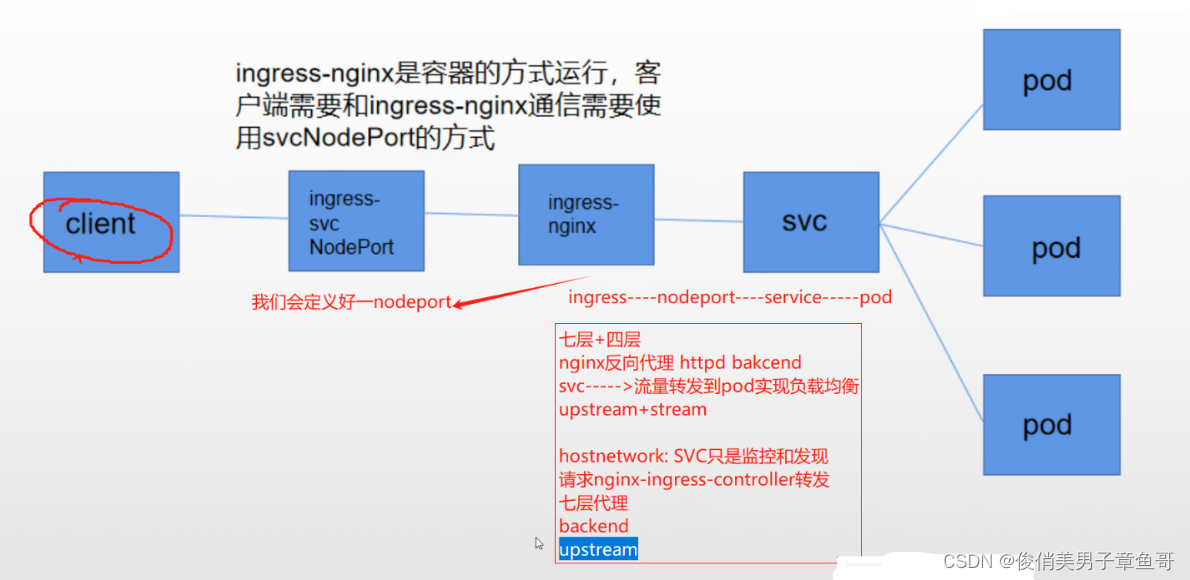

Data flow diagram for Deployment + NodePort:

Experiment setup:

master01---

vim mandatory.yaml

191 kind: Deployment

192 #kind: DaemonSet

200 replicas: 1

215 #hostNetwork: true

219 kubernetes.io/os: linux

220 #test1: "true"

kubectl apply -f mandatory.yaml

wget https://gitee.com/mirrors/ingress-nginx/raw/nginx-0.30.0/deploy/static/provider/baremetal/service-nodeport.yaml

#Download the service-nodeport.yaml file

vim service-nodeport.yaml

apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

#Applying this YAML file creates a Service in the ingress-nginx namespace.

#All requests to the controller enter through the nodePort of this Service.

#The controller then forwards them to the pods behind the user-defined Services.

kubectl apply -f service-nodeport.yaml
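Because this file sets no nodePort, Kubernetes assigns one from the NodePort range, so the port differs from cluster to cluster (the curl commands later in this article use 31456; the assigned value can be read from kubectl get svc -n ingress-nginx). A sketch of pinning the HTTP port explicitly, assuming 31456 is free on every node:

```yaml
# Sketch only: same Service as above with the HTTP nodePort pinned.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 31456      # assumed free; must be within 30000-32767 by default
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443      # nodePort left unset here, so it is auto-assigned
      protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
```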

vim nodeport.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc2
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-client-storageclass
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app2
  labels:
    app: nginx2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx2
  template:
    metadata:
      labels:
        app: nginx2
    spec:
      containers:
        - name: nginx
          image: nginx:1.22
          volumeMounts:
            - name: nfs-pvc2
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nfs-pvc2
          persistentVolumeClaim:
            claimName: nfs-pvc2
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-app-svc1
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  selector:
    app: nginx2
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-app-ingress
spec:
  rules:
    - host: www.test2.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-app-svc1
                port:
                  number: 80

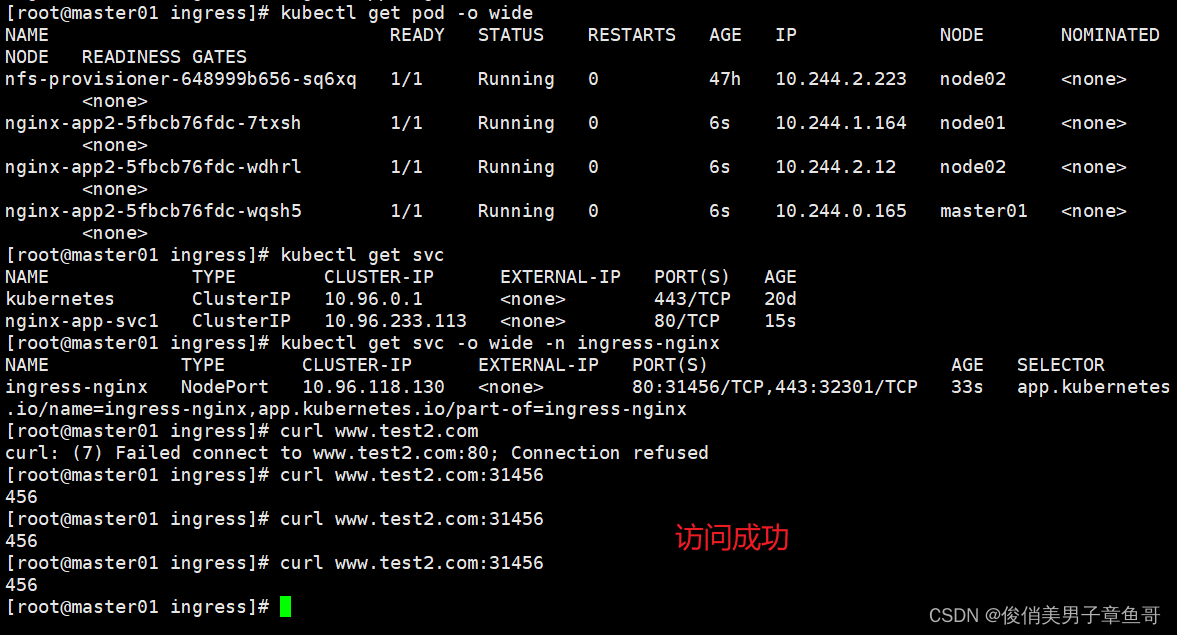

kubectl apply -f nodeport.yaml

k8s5---

Check the mounted directory

echo 123 > index.html

master01---

vim /etc/hosts

20.0.0.32 master01

20.0.0.34 node01

20.0.0.35 node02 www.test1.com www.test2.com

20.0.0.36 hub.test.com k8s5 www.test1.com

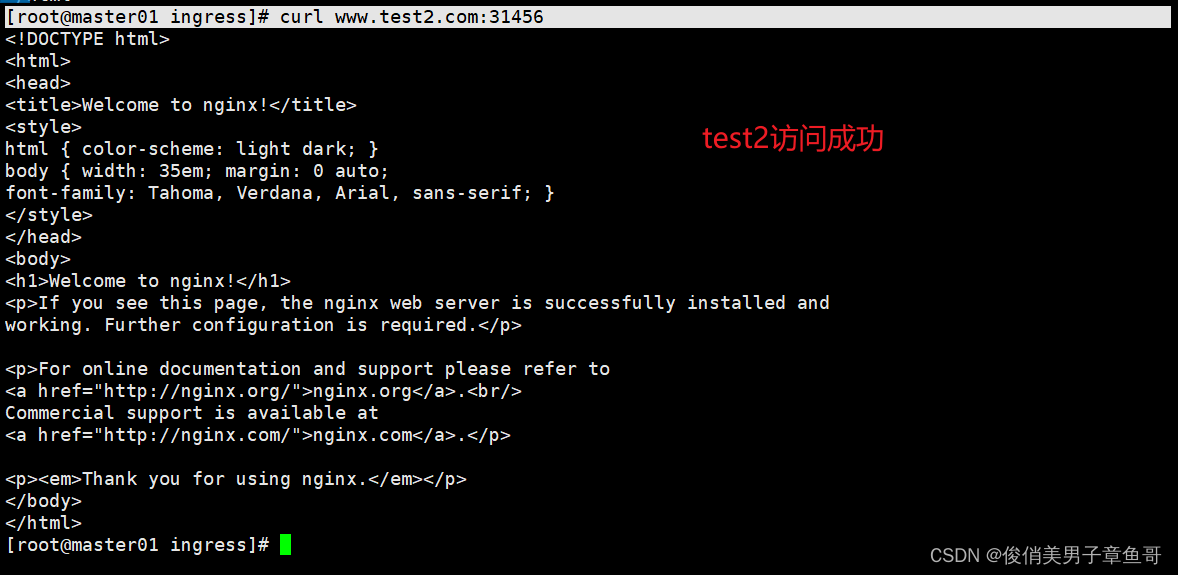

curl www.test2.com:31456

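Experiment complete!

The NodePort is no longer created by the Deployment's own Service.

It is created by the ingress Service (service-nodeport.yaml).

- The URL request first reaches ingress.

- The ingress NodePort Service matches the ingress-controller pods by label.

- The Ingress rule identifies the target Service.

- Finally, the Service locates the pods of the Deployment.

The core control component is nginx-ingress-controller.

host ---- Ingress configuration finds the pod ---- controller ---- request is sent to the pod

nodeport ---- controller ---- ingress ---- service ---- pod

Exposing ports through NodePort is the simplest approach, but NodePort adds a layer of NAT (network address translation).

Under high concurrency this has some impact on performance. NodePort is commonly used for internal environments.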

Implementing HTTP proxying with virtual hosts

With Ingress: one ingress entry point can serve different hosts
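The experiment in this section defines one Ingress object per host. An equivalent layout, sketched here as an alternative, puts both hosts into the rules list of a single Ingress. The name ingress-multi-host is hypothetical; svc-1 and svc-2 are the Services defined in pod1.yaml and pod2.yaml.

```yaml
# Sketch only: one Ingress with two host rules instead of two Ingress objects.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-multi-host
spec:
  rules:
    - host: www.test1.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: svc-1
                port:
                  number: 80
    - host: www.test2.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: svc-2
                port:
                  number: 80
```

Either layout gives the controller the same host-to-Service mapping; separate objects are easier to manage when different teams own different hosts.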

Example experiment:

vim pod1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment1
  labels:
    test: nginx1
spec:
  replicas: 1
  selector:
    matchLabels:
      test: nginx1
  template:
    metadata:
      labels:
        test: nginx1
    spec:
      containers:
        - name: nginx1
          image: nginx:1.22
---
apiVersion: v1
kind: Service
metadata:
  name: svc-1
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  selector:
    test: nginx1

vim pod2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  #renamed from deployment1 so it does not collide with the Deployment in pod1.yaml
  name: deployment2
  labels:
    test2: nginx2
spec:
  replicas: 1
  selector:
    matchLabels:
      test2: nginx2
  template:
    metadata:
      labels:
        test2: nginx2
    spec:
      containers:
        - name: nginx2
          image: nginx:1.22
---
apiVersion: v1
kind: Service
metadata:
  name: svc-2
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  selector:
    test2: nginx2

vim pod-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress1
spec:
  rules:
    - host: www.test1.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: svc-1
                port:
                  number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress2
spec:
  rules:
    - host: www.test2.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: svc-2
                port:
                  number: 80

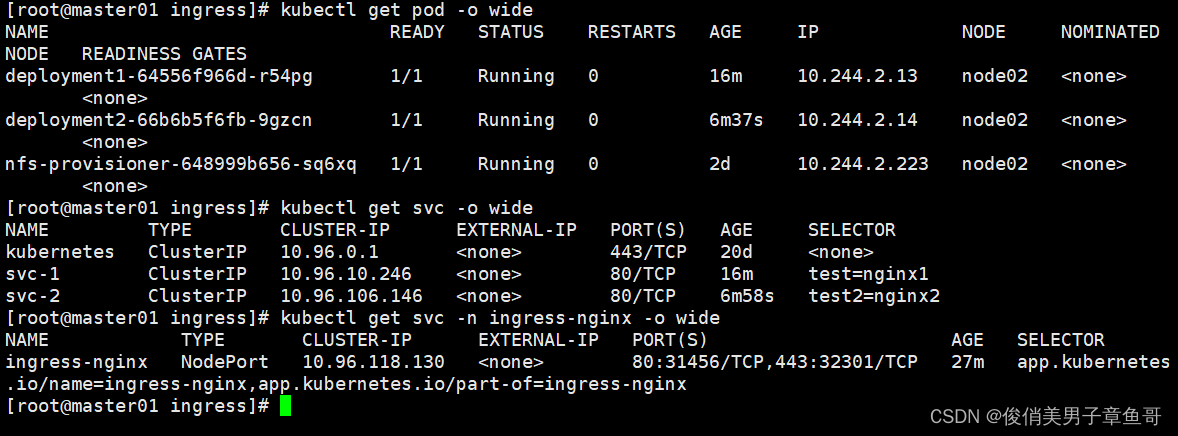

kubectl apply -f pod1.yaml

kubectl apply -f pod2.yaml

kubectl apply -f pod-ingress.yaml

vim /etc/hosts

20.0.0.32 master01

20.0.0.34 node01 www.test1.com www.test2.com

20.0.0.35 node02 www.test1.com www.test2.com

20.0.0.36 hub.test.com k8s5 www.test1.com

curl www.test1.com:31456

curl www.test2.com:31456

Access succeeded. Experiment complete!

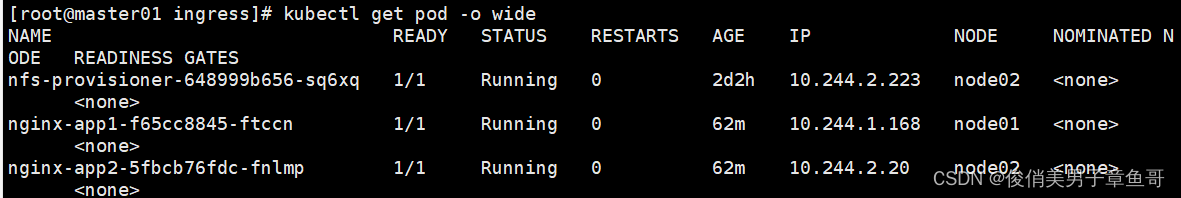

Accessing multiple hosts with DaemonSet + hostNetwork + nodeSelector

Example experiment:

vim daemoset-hostnetwork-nodeselector1.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc1
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-client-storageclass
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app1
  labels:
    app: nginx1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx1
  template:
    metadata:
      labels:
        app: nginx1
    spec:
      containers:
        - name: nginx
          image: nginx:1.22
          volumeMounts:
            - name: nfs-pvc1
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nfs-pvc1
          persistentVolumeClaim:
            claimName: nfs-pvc1
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-app-svc1
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  selector:
    app: nginx1
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-app-ingress1
spec:
  rules:
    - host: www.test1.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-app-svc1
                port:
                  number: 80

kubectl apply -f daemoset-hostnetwork-nodeselector1.yaml

vim daemoset-hostnetwork-nodeselector2.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc2
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-client-storageclass
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app2
  labels:
    app: nginx2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx2
  template:
    metadata:
      labels:
        app: nginx2
    spec:
      containers:
        - name: nginx
          image: nginx:1.22
          volumeMounts:
            - name: nfs-pvc2
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nfs-pvc2
          persistentVolumeClaim:
            claimName: nfs-pvc2
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-app-svc2
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  selector:
    app: nginx2
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-app-ingress2
spec:
  rules:
    - host: www.test2.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-app-svc2
                port:
                  number: 80

kubectl apply -f daemoset-hostnetwork-nodeselector2.yaml

vim mandatory.yaml

191 #kind: Deployment

192 kind: DaemonSet

200 #replicas: 1

215 hostNetwork: true

219 #kubernetes.io/os: linux

220 test1: "true"

kubectl apply -f mandatory.yaml

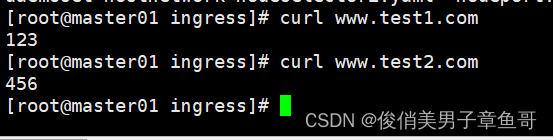

curl www.test1.com

curl www.test2.com

Experiment complete!


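Summary

Two ingress-controller implementations: nginx-ingress-controller and traefik.

Three working modes of the ingress-controller:

Deployment + LoadBalancer: requires the cloud platform to provide a public load balancer address. It is done on a public cloud and is billed.

DaemonSet + hostNetwork + nodeSelector: the controller is usually deployed on designated nodes. The drawback is that it shares the host's network, so there can be only one controller pod per node.

Because hostNetwork shares the network with the host, the target nodes need to be selected by label.

Deployment + NodePort: the most common and simplest way. It concentrates traffic on one NodePort: all Ingress requests go to the NodePort, and the Service then forwards the traffic to the pods.

A single ingress NodePort can serve multiple virtual hosts, similar to nginx: multiple domain names can share the same port.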
