FPN网络的实现原理详解

发布时间:2024年01月11日

1 前言

FPN网络是一种常见的特征融合模块,在很多模型中都有运用,今天我们就结合代码和论文详细的搞清楚它到底是怎么一回事。

2 原理

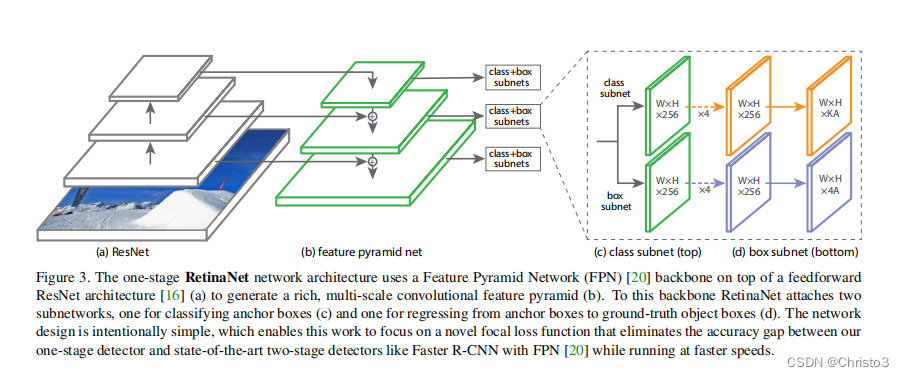

原理直接看这一张图就可以了,很直观主要就是把对不同层的特征进行融合,重点还是在于代码的理解。

3 代码详解

import torch.nn as nn

import torch

import math

import torch.utils.model_zoo as model_zoo

from torchvision.ops import nms

# from retinanet.utils import BasicBlock, Bottleneck, BBoxTransform, ClipBoxes

# from retinanet.anchors import Anchors

# from retinanet import losses

class PyramidFeatures(nn.Module):

def __init__(self, C3_size, C4_size, C5_size, feature_size=256):

super(PyramidFeatures, self).__init__()

# upsample C5 to get P5 from the FPN paper

self.P5_1 = nn.Conv2d(C5_size, feature_size, kernel_size=1, stride=1, padding=0)

self.P5_upsampled = nn.Upsample(scale_factor=2, mode='nearest')

#将C5的特征图尺寸放大2倍用于跟C4相加

self.P5_2 = nn.Conv2d(feature_size, feature_size, kernel_size=3, stride=1, padding=1)

# add P5 elementwise to C4

self.P4_1 = nn.Conv2d(C4_size, feature_size, kernel_size=1, stride=1, padding=0)

self.P4_upsampled = nn.Upsample(scale_factor=2, mode='nearest')

self.P4_2 = nn.Conv2d(feature_size, feature_size, kernel_size=3, stride=1, padding=1)

# add P4 elementwise to C3

self.P3_1 = nn.Conv2d(C3_size, feature_size, kernel_size=1, stride=1, padding=0)

self.P3_2 = nn.Conv2d(feature_size, feature_size, kernel_size=3, stride=1, padding=1)

# "P6 is obtained via a 3x3 stride-2 conv on C5"

self.P6 = nn.Conv2d(C5_size, feature_size, kernel_size=3, stride=2, padding=1)

# "P7 is computed by applying ReLU followed by a 3x3 stride-2 conv on P6"

self.P7_1 = nn.ReLU()

self.P7_2 = nn.Conv2d(feature_size, feature_size, kernel_size=3, stride=2, padding=1)

def forward(self, inputs):

#注意理解这里的inputs,其其表示的是一个列表

C3, C4, C5 = inputs

P5_x = self.P5_1(C5)

P5_upsampled_x = self.P5_upsampled(P5_x)

P5_x = self.P5_2(P5_x)

P4_x = self.P4_1(C4)

P4_x = P5_upsampled_x + P4_x

P4_upsampled_x = self.P4_upsampled(P4_x)

P4_x = self.P4_2(P4_x)

P3_x = self.P3_1(C3)

P3_x = P3_x + P4_upsampled_x

P3_x = self.P3_2(P3_x)

P6_x = self.P6(C5)

P7_x = self.P7_1(P6_x)

P7_x = self.P7_2(P7_x)

return [P3_x, P4_x, P5_x, P6_x, P7_x]

if __name__ == '__main__':

model = PyramidFeatures(32, 64, 96)

print(model)

##这里假设输入是三层不同尺寸的特征图,输入的形状是[batch_size, 256, height, width]

input = [torch.randn(1, 32, 640, 640), torch.randn(1, 64, 320, 320), torch.randn(1, 96, 160, 160)]

out = model(input)

print(out)

文章来源:https://blog.csdn.net/weixin_41552975/article/details/135530473

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

最新文章

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- 爆满!!TikTok Shop美区闭门研讨会完美收官,座无虚席!

- Minitab的单因子方差分析的结果

- 【Linux】shell命令以及运行原理

- 鸿蒙开发实战--DevEco Studio真机调试方法

- 关于uview-ui的u-tabs标签滑块不居中的问题

- 20240109-补 二叉树被感染的时间

- 解锁绝对安全:上海迅软DSE加密系统,让盗窃者措手不及

- 【温故而知新】HTML5新标签canvas、MathML

- PTA QQ帐户的申请与登陆

- 7 Series FPGAs Transceivers Wizard(3.6) ip核接收数据正确性判断