案例系列:便利店销售预测_基于Dart时间序列深度学习模型

文章目录

关键指标

📌 基准:

- 对每个系列应用指数平滑可以生成一个很好的基准预测 - RMSLE: 0.40578

📌 目标:

- 利用大量时间序列通过全局模型使用更复杂、灵活的方法**(Boosted Trees, Neural Networks)**

📌 深度学习模型:

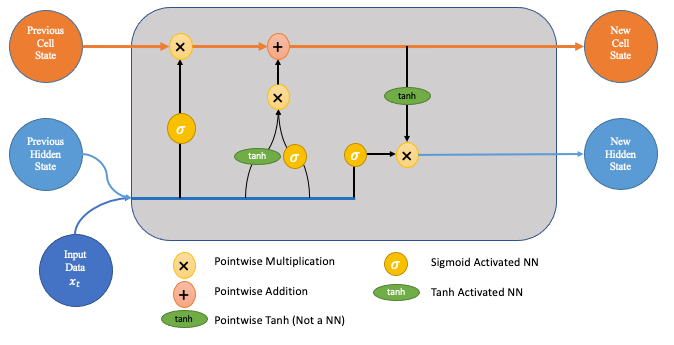

- 本笔记本使用Pytorch通过TS库Darts实现了神经网络模型N-HiTS, LSTM和TFT

📌 最佳模型:

- 33个全局LightGBM模型,每个产品族一个

- 每个模型训练了54个时间序列(对应54个商店)

🏆 -> RMSLE: 0.38558 -> #1 排行榜(2022年9月21日),本笔记本的V24版本

这里发生了什么?

在这个笔记本中,我尝试测试和学习使用机器学习进行时间序列预测的不同方法。我想呈现一个全面的预测工作流程。我的主要重点是探索神经网络模型(如LSTM、NBEATS、TCN、TFT、N-HiTS)。

我基本的理解是:这些复杂而灵活的方法需要大量的数据才能表现良好。对于单个、单变量的时间序列,通常情况下并不是这样,具有更多结构的统计方法往往表现更好。然而,在这里,我们有1782个并行和相关的时间序列 - 54个商店的33个产品类别的销售数据。

我想尝试的方法:

- 全局模型(训练多个时间序列)

- 层次预测(预测协调)

- 集成

- 以上所有方法的组合

我使用 Darts库 进行时间序列建模 - 对于像我这样的初级程序员来说,它简化了工作流程,并且实现了最新的深度学习预测方法。

然而到目前为止,简单的指数平滑基准模型证明优于我的(全局)神经网络模型。基于提升树的全局模型表现良好 - 到目前为止是最好的。但我相信还有很大的优化空间。

1. 库

我在这里使用Darts库进行所有的建模工作。它是一个在Python中进行预测的优秀且直观的选择,特别是对于神经网络模型。开发团队非常乐于助人,并在他们的公共交流渠道上回答问题,所以我强烈推荐这个库用于时间序列预测!

# DARTS预测库

# 安装所需依赖库

!pip install pyyaml==5.4.1

!pip install darts

# 导入所需库

import darts

print(darts.__version__)

# 安装optuna库

!pip install -U optuna==2.0.0

# 导入所需库

import numpy as np

import time

from darts import TimeSeries

from darts.utils.timeseries_generation import gaussian_timeseries, linear_timeseries, sine_timeseries

from darts.models import LightGBMModel, CatBoostModel, Prophet, RNNModel, TFTModel, NaiveSeasonal, ExponentialSmoothing, NHiTSModel

from darts.metrics import mape, smape, rmse, rmsle

from darts.dataprocessing import Pipeline

from darts.dataprocessing.transformers import Scaler, StaticCovariatesTransformer, MissingValuesFiller, InvertibleMapper

from darts.utils.timeseries_generation import datetime_attribute_timeseries

from darts.utils.statistics import check_seasonality, plot_acf, plot_residuals_analysis, plot_hist

from darts.utils.likelihood_models import QuantileRegression

from darts.utils.missing_values import fill_missing_values

from darts.models import MovingAverage

import optuna

from optuna.integration import PyTorchLightningPruningCallback

from optuna.visualization import (

plot_optimization_history,

plot_contour,

plot_param_importances,

)

from pytorch_lightning.callbacks.early_stopping import EarlyStopping

from tqdm import tqdm

import sklearn

from sklearn import preprocessing

import pandas as pd

import torch

import matplotlib.pyplot as plt

import gc

%matplotlib inline

torch.manual_seed(1); np.random.seed(1) # 设置随机种子以便复现结果

Collecting pyyaml==5.4.1

Downloading PyYAML-5.4.1-cp37-cp37m-manylinux1_x86_64.whl (636 kB)

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m636.6/636.6 kB[0m [31m5.5 MB/s[0m eta [36m0:00:00[0m

[?25hInstalling collected packages: pyyaml

Attempting uninstall: pyyaml

Found existing installation: PyYAML 6.0

Uninstalling PyYAML-6.0:

Successfully uninstalled PyYAML-6.0

[31mERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

dask-cudf 21.10.1 requires cupy-cuda114, which is not installed.

pandas-profiling 3.1.0 requires markupsafe~=2.0.1, but you have markupsafe 2.1.1 which is incompatible.

flax 0.6.0 requires rich~=11.1, but you have rich 12.1.0 which is incompatible.

dask-cudf 21.10.1 requires dask==2021.09.1, but you have dask 2022.2.0 which is incompatible.

dask-cudf 21.10.1 requires distributed==2021.09.1, but you have distributed 2022.2.0 which is incompatible.

allennlp 2.10.0 requires protobuf==3.20.0, but you have protobuf 3.19.4 which is incompatible.[0m[31m

[0mSuccessfully installed pyyaml-5.4.1

[33mWARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv[0m[33m

[0mCollecting darts

Downloading darts-0.22.0-py3-none-any.whl (451 kB)

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m451.9/451.9 kB[0m [31m4.6 MB/s[0m eta [36m0:00:00[0m

[?25hRequirement already satisfied: scipy>=1.3.2 in /opt/conda/lib/python3.7/site-packages (from darts) (1.7.3)

Requirement already satisfied: scikit-learn>=1.0.1 in /opt/conda/lib/python3.7/site-packages (from darts) (1.0.2)

Requirement already satisfied: numpy>=1.19.0 in /opt/conda/lib/python3.7/site-packages (from darts) (1.21.6)

Requirement already satisfied: catboost>=1.0.6 in /opt/conda/lib/python3.7/site-packages (from darts) (1.0.6)

Requirement already satisfied: requests>=2.22.0 in /opt/conda/lib/python3.7/site-packages (from darts) (2.28.1)

Requirement already satisfied: lightgbm>=2.2.3 in /opt/conda/lib/python3.7/site-packages (from darts) (3.3.2)

Requirement already satisfied: joblib>=0.16.0 in /opt/conda/lib/python3.7/site-packages (from darts) (1.0.1)

Collecting statsforecast>=1.0.0

Downloading statsforecast-1.3.0-py3-none-any.whl (85 kB)

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m85.2/85.2 kB[0m [31m8.9 MB/s[0m eta [36m0:00:00[0m

[?25hRequirement already satisfied: torch>=1.8.0 in /opt/conda/lib/python3.7/site-packages (from darts) (1.11.0)

Collecting nfoursid>=1.0.0

Downloading nfoursid-1.0.1-py3-none-any.whl (16 kB)

Requirement already satisfied: pandas>=1.0.5 in /opt/conda/lib/python3.7/site-packages (from darts) (1.3.5)

Requirement already satisfied: xarray>=0.17.0 in /opt/conda/lib/python3.7/site-packages (from darts) (0.20.2)

Collecting tbats>=1.1.0

Downloading tbats-1.1.1-py3-none-any.whl (43 kB)

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m43.8/43.8 kB[0m [31m3.5 MB/s[0m eta [36m0:00:00[0m

[?25hRequirement already satisfied: pytorch-lightning>=1.5.0 in /opt/conda/lib/python3.7/site-packages (from darts) (1.7.2)

Requirement already satisfied: tqdm>=4.60.0 in /opt/conda/lib/python3.7/site-packages (from darts) (4.64.0)

Collecting pmdarima>=1.8.0

Downloading pmdarima-2.0.1-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.manylinux_2_28_x86_64.whl (1.8 MB)

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m1.8/1.8 MB[0m [31m25.9 MB/s[0m eta [36m0:00:00[0m

[?25hCollecting prophet>=1.1.1

Downloading prophet-1.1.1-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (8.9 MB)

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m8.9/8.9 MB[0m [31m63.6 MB/s[0m eta [36m0:00:00[0m

[?25hRequirement already satisfied: shap>=0.40.0 in /opt/conda/lib/python3.7/site-packages (from darts) (0.41.0)

Requirement already satisfied: ipython>=5.0.0 in /opt/conda/lib/python3.7/site-packages (from darts) (7.33.0)

Requirement already satisfied: holidays>=0.11.1 in /opt/conda/lib/python3.7/site-packages (from darts) (0.15)

Requirement already satisfied: statsmodels>=0.13.0 in /opt/conda/lib/python3.7/site-packages (from darts) (0.13.2)

Requirement already satisfied: matplotlib>=3.3.0 in /opt/conda/lib/python3.7/site-packages (from darts) (3.5.3)

Requirement already satisfied: six in /opt/conda/lib/python3.7/site-packages (from catboost>=1.0.6->darts) (1.15.0)

Requirement already satisfied: plotly in /opt/conda/lib/python3.7/site-packages (from catboost>=1.0.6->darts) (5.10.0)

Requirement already satisfied: graphviz in /opt/conda/lib/python3.7/site-packages (from catboost>=1.0.6->darts) (0.8.4)

Requirement already satisfied: hijri-converter in /opt/conda/lib/python3.7/site-packages (from holidays>=0.11.1->darts) (2.2.4)

Requirement already satisfied: korean-lunar-calendar in /opt/conda/lib/python3.7/site-packages (from holidays>=0.11.1->darts) (0.2.1)

Requirement already satisfied: python-dateutil in /opt/conda/lib/python3.7/site-packages (from holidays>=0.11.1->darts) (2.8.2)

Requirement already satisfied: convertdate>=2.3.0 in /opt/conda/lib/python3.7/site-packages (from holidays>=0.11.1->darts) (2.4.0)

Requirement already satisfied: decorator in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (5.1.1)

Requirement already satisfied: jedi>=0.16 in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (0.18.1)

Requirement already satisfied: setuptools>=18.5 in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (59.8.0)

Requirement already satisfied: prompt-toolkit!=3.0.0,!=3.0.1,<3.1.0,>=2.0.0 in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (3.0.30)

Requirement already satisfied: matplotlib-inline in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (0.1.3)

Requirement already satisfied: pickleshare in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (0.7.5)

Requirement already satisfied: traitlets>=4.2 in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (5.3.0)

Requirement already satisfied: backcall in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (0.2.0)

Requirement already satisfied: pexpect>4.3 in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (4.8.0)

Requirement already satisfied: pygments in /opt/conda/lib/python3.7/site-packages (from ipython>=5.0.0->darts) (2.12.0)

Requirement already satisfied: wheel in /opt/conda/lib/python3.7/site-packages (from lightgbm>=2.2.3->darts) (0.37.1)

Requirement already satisfied: pyparsing>=2.2.1 in /opt/conda/lib/python3.7/site-packages (from matplotlib>=3.3.0->darts) (3.0.9)

Requirement already satisfied: fonttools>=4.22.0 in /opt/conda/lib/python3.7/site-packages (from matplotlib>=3.3.0->darts) (4.33.3)

Requirement already satisfied: packaging>=20.0 in /opt/conda/lib/python3.7/site-packages (from matplotlib>=3.3.0->darts) (21.3)

Requirement already satisfied: cycler>=0.10 in /opt/conda/lib/python3.7/site-packages (from matplotlib>=3.3.0->darts) (0.11.0)

Requirement already satisfied: pillow>=6.2.0 in /opt/conda/lib/python3.7/site-packages (from matplotlib>=3.3.0->darts) (9.1.1)

Requirement already satisfied: kiwisolver>=1.0.1 in /opt/conda/lib/python3.7/site-packages (from matplotlib>=3.3.0->darts) (1.4.3)

Requirement already satisfied: pytz>=2017.3 in /opt/conda/lib/python3.7/site-packages (from pandas>=1.0.5->darts) (2022.1)

Requirement already satisfied: urllib3 in /opt/conda/lib/python3.7/site-packages (from pmdarima>=1.8.0->darts) (1.26.12)

Requirement already satisfied: Cython!=0.29.18,!=0.29.31,>=0.29 in /opt/conda/lib/python3.7/site-packages (from pmdarima>=1.8.0->darts) (0.29.32)

Requirement already satisfied: setuptools-git>=1.2 in /opt/conda/lib/python3.7/site-packages (from prophet>=1.1.1->darts) (1.2)

Requirement already satisfied: cmdstanpy>=1.0.4 in /opt/conda/lib/python3.7/site-packages (from prophet>=1.1.1->darts) (1.0.4)

Requirement already satisfied: LunarCalendar>=0.0.9 in /opt/conda/lib/python3.7/site-packages (from prophet>=1.1.1->darts) (0.0.9)

Requirement already satisfied: fsspec[http]!=2021.06.0,>=2021.05.0 in /opt/conda/lib/python3.7/site-packages (from pytorch-lightning>=1.5.0->darts) (2022.7.1)

Requirement already satisfied: pyDeprecate>=0.3.1 in /opt/conda/lib/python3.7/site-packages (from pytorch-lightning>=1.5.0->darts) (0.3.2)

Requirement already satisfied: typing-extensions>=4.0.0 in /opt/conda/lib/python3.7/site-packages (from pytorch-lightning>=1.5.0->darts) (4.3.0)

Requirement already satisfied: PyYAML>=5.4 in /opt/conda/lib/python3.7/site-packages (from pytorch-lightning>=1.5.0->darts) (5.4.1)

Requirement already satisfied: torchmetrics>=0.7.0 in /opt/conda/lib/python3.7/site-packages (from pytorch-lightning>=1.5.0->darts) (0.9.3)

Requirement already satisfied: tensorboard>=2.9.1 in /opt/conda/lib/python3.7/site-packages (from pytorch-lightning>=1.5.0->darts) (2.10.0)

Requirement already satisfied: charset-normalizer<3,>=2 in /opt/conda/lib/python3.7/site-packages (from requests>=2.22.0->darts) (2.1.0)

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/lib/python3.7/site-packages (from requests>=2.22.0->darts) (2022.6.15)

Requirement already satisfied: idna<4,>=2.5 in /opt/conda/lib/python3.7/site-packages (from requests>=2.22.0->darts) (3.3)

Requirement already satisfied: threadpoolctl>=2.0.0 in /opt/conda/lib/python3.7/site-packages (from scikit-learn>=1.0.1->darts) (3.1.0)

Requirement already satisfied: slicer==0.0.7 in /opt/conda/lib/python3.7/site-packages (from shap>=0.40.0->darts) (0.0.7)

Requirement already satisfied: cloudpickle in /opt/conda/lib/python3.7/site-packages (from shap>=0.40.0->darts) (2.1.0)

Requirement already satisfied: numba in /opt/conda/lib/python3.7/site-packages (from shap>=0.40.0->darts) (0.55.2)

Requirement already satisfied: patsy>=0.5.2 in /opt/conda/lib/python3.7/site-packages (from statsmodels>=0.13.0->darts) (0.5.2)

Requirement already satisfied: importlib-metadata in /opt/conda/lib/python3.7/site-packages (from xarray>=0.17.0->darts) (4.12.0)

Requirement already satisfied: ujson in /opt/conda/lib/python3.7/site-packages (from cmdstanpy>=1.0.4->prophet>=1.1.1->darts) (5.3.0)

Requirement already satisfied: pymeeus<=1,>=0.3.13 in /opt/conda/lib/python3.7/site-packages (from convertdate>=2.3.0->holidays>=0.11.1->darts) (0.5.11)

Requirement already satisfied: aiohttp in /opt/conda/lib/python3.7/site-packages (from fsspec[http]!=2021.06.0,>=2021.05.0->pytorch-lightning>=1.5.0->darts) (3.8.1)

Requirement already satisfied: parso<0.9.0,>=0.8.0 in /opt/conda/lib/python3.7/site-packages (from jedi>=0.16->ipython>=5.0.0->darts) (0.8.3)

Requirement already satisfied: ephem>=3.7.5.3 in /opt/conda/lib/python3.7/site-packages (from LunarCalendar>=0.0.9->prophet>=1.1.1->darts) (4.1.3)

Requirement already satisfied: llvmlite<0.39,>=0.38.0rc1 in /opt/conda/lib/python3.7/site-packages (from numba->shap>=0.40.0->darts) (0.38.1)

Requirement already satisfied: ptyprocess>=0.5 in /opt/conda/lib/python3.7/site-packages (from pexpect>4.3->ipython>=5.0.0->darts) (0.7.0)

Requirement already satisfied: wcwidth in /opt/conda/lib/python3.7/site-packages (from prompt-toolkit!=3.0.0,!=3.0.1,<3.1.0,>=2.0.0->ipython>=5.0.0->darts) (0.2.5)

Requirement already satisfied: google-auth-oauthlib<0.5,>=0.4.1 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (0.4.6)

Requirement already satisfied: markdown>=2.6.8 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (3.3.7)

Requirement already satisfied: werkzeug>=1.0.1 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (2.2.2)

Requirement already satisfied: tensorboard-plugin-wit>=1.6.0 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (1.8.1)

Requirement already satisfied: grpcio>=1.24.3 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (1.43.0)

Requirement already satisfied: protobuf<3.20,>=3.9.2 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (3.19.4)

Requirement already satisfied: google-auth<3,>=1.6.3 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (1.35.0)

Requirement already satisfied: tensorboard-data-server<0.7.0,>=0.6.0 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (0.6.1)

Requirement already satisfied: absl-py>=0.4 in /opt/conda/lib/python3.7/site-packages (from tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (0.15.0)

Requirement already satisfied: zipp>=0.5 in /opt/conda/lib/python3.7/site-packages (from importlib-metadata->xarray>=0.17.0->darts) (3.8.0)

Requirement already satisfied: tenacity>=6.2.0 in /opt/conda/lib/python3.7/site-packages (from plotly->catboost>=1.0.6->darts) (8.0.1)

Requirement already satisfied: rsa<5,>=3.1.4 in /opt/conda/lib/python3.7/site-packages (from google-auth<3,>=1.6.3->tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (4.8)

Requirement already satisfied: cachetools<5.0,>=2.0.0 in /opt/conda/lib/python3.7/site-packages (from google-auth<3,>=1.6.3->tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (4.2.4)

Requirement already satisfied: pyasn1-modules>=0.2.1 in /opt/conda/lib/python3.7/site-packages (from google-auth<3,>=1.6.3->tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (0.2.7)

Requirement already satisfied: requests-oauthlib>=0.7.0 in /opt/conda/lib/python3.7/site-packages (from google-auth-oauthlib<0.5,>=0.4.1->tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (1.3.1)

Requirement already satisfied: MarkupSafe>=2.1.1 in /opt/conda/lib/python3.7/site-packages (from werkzeug>=1.0.1->tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (2.1.1)

Requirement already satisfied: multidict<7.0,>=4.5 in /opt/conda/lib/python3.7/site-packages (from aiohttp->fsspec[http]!=2021.06.0,>=2021.05.0->pytorch-lightning>=1.5.0->darts) (6.0.2)

Requirement already satisfied: frozenlist>=1.1.1 in /opt/conda/lib/python3.7/site-packages (from aiohttp->fsspec[http]!=2021.06.0,>=2021.05.0->pytorch-lightning>=1.5.0->darts) (1.3.0)

Requirement already satisfied: asynctest==0.13.0 in /opt/conda/lib/python3.7/site-packages (from aiohttp->fsspec[http]!=2021.06.0,>=2021.05.0->pytorch-lightning>=1.5.0->darts) (0.13.0)

Requirement already satisfied: yarl<2.0,>=1.0 in /opt/conda/lib/python3.7/site-packages (from aiohttp->fsspec[http]!=2021.06.0,>=2021.05.0->pytorch-lightning>=1.5.0->darts) (1.7.2)

Requirement already satisfied: async-timeout<5.0,>=4.0.0a3 in /opt/conda/lib/python3.7/site-packages (from aiohttp->fsspec[http]!=2021.06.0,>=2021.05.0->pytorch-lightning>=1.5.0->darts) (4.0.2)

Requirement already satisfied: aiosignal>=1.1.2 in /opt/conda/lib/python3.7/site-packages (from aiohttp->fsspec[http]!=2021.06.0,>=2021.05.0->pytorch-lightning>=1.5.0->darts) (1.2.0)

Requirement already satisfied: attrs>=17.3.0 in /opt/conda/lib/python3.7/site-packages (from aiohttp->fsspec[http]!=2021.06.0,>=2021.05.0->pytorch-lightning>=1.5.0->darts) (21.4.0)

Requirement already satisfied: pyasn1<0.5.0,>=0.4.6 in /opt/conda/lib/python3.7/site-packages (from pyasn1-modules>=0.2.1->google-auth<3,>=1.6.3->tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (0.4.8)

Requirement already satisfied: oauthlib>=3.0.0 in /opt/conda/lib/python3.7/site-packages (from requests-oauthlib>=0.7.0->google-auth-oauthlib<0.5,>=0.4.1->tensorboard>=2.9.1->pytorch-lightning>=1.5.0->darts) (3.2.0)

Installing collected packages: nfoursid, statsforecast, prophet, pmdarima, tbats, darts

Attempting uninstall: prophet

Found existing installation: prophet 1.1

Uninstalling prophet-1.1:

Successfully uninstalled prophet-1.1

Successfully installed darts-0.22.0 nfoursid-1.0.1 pmdarima-2.0.1 prophet-1.1.1 statsforecast-1.3.0 tbats-1.1.1

[33mWARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv[0m[33m

[0m0.22.0

Collecting optuna==2.0.0

Downloading optuna-2.0.0.tar.gz (226 kB)

[2K [90m━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━[0m [32m226.9/226.9 kB[0m [31m3.2 MB/s[0m eta [36m0:00:00[0m

[?25h Preparing metadata (setup.py) ... [?25l- done

[?25hRequirement already satisfied: alembic in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (1.8.1)

Requirement already satisfied: cliff in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (3.10.1)

Requirement already satisfied: cmaes>=0.5.1 in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (0.8.2)

Requirement already satisfied: colorlog in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (6.6.0)

Requirement already satisfied: joblib in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (1.0.1)

Requirement already satisfied: numpy in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (1.21.6)

Requirement already satisfied: packaging in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (21.3)

Requirement already satisfied: scipy!=1.4.0 in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (1.7.3)

Requirement already satisfied: sqlalchemy>=1.1.0 in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (1.4.39)

Requirement already satisfied: tqdm in /opt/conda/lib/python3.7/site-packages (from optuna==2.0.0) (4.64.0)

Requirement already satisfied: greenlet!=0.4.17 in /opt/conda/lib/python3.7/site-packages (from sqlalchemy>=1.1.0->optuna==2.0.0) (1.1.2)

Requirement already satisfied: importlib-metadata in /opt/conda/lib/python3.7/site-packages (from sqlalchemy>=1.1.0->optuna==2.0.0) (4.12.0)

Requirement already satisfied: Mako in /opt/conda/lib/python3.7/site-packages (from alembic->optuna==2.0.0) (1.2.1)

Requirement already satisfied: importlib-resources in /opt/conda/lib/python3.7/site-packages (from alembic->optuna==2.0.0) (5.8.0)

Requirement already satisfied: PyYAML>=3.12 in /opt/conda/lib/python3.7/site-packages (from cliff->optuna==2.0.0) (5.4.1)

Requirement already satisfied: stevedore>=2.0.1 in /opt/conda/lib/python3.7/site-packages (from cliff->optuna==2.0.0) (3.5.0)

Requirement already satisfied: cmd2>=1.0.0 in /opt/conda/lib/python3.7/site-packages (from cliff->optuna==2.0.0) (2.4.2)

Requirement already satisfied: pbr!=2.1.0,>=2.0.0 in /opt/conda/lib/python3.7/site-packages (from cliff->optuna==2.0.0) (5.10.0)

Requirement already satisfied: pyparsing>=2.1.0 in /opt/conda/lib/python3.7/site-packages (from cliff->optuna==2.0.0) (3.0.9)

Requirement already satisfied: PrettyTable>=0.7.2 in /opt/conda/lib/python3.7/site-packages (from cliff->optuna==2.0.0) (3.3.0)

Requirement already satisfied: autopage>=0.4.0 in /opt/conda/lib/python3.7/site-packages (from cliff->optuna==2.0.0) (0.5.1)

Requirement already satisfied: typing-extensions in /opt/conda/lib/python3.7/site-packages (from cmd2>=1.0.0->cliff->optuna==2.0.0) (4.3.0)

Requirement already satisfied: attrs>=16.3.0 in /opt/conda/lib/python3.7/site-packages (from cmd2>=1.0.0->cliff->optuna==2.0.0) (21.4.0)

Requirement already satisfied: wcwidth>=0.1.7 in /opt/conda/lib/python3.7/site-packages (from cmd2>=1.0.0->cliff->optuna==2.0.0) (0.2.5)

Requirement already satisfied: pyperclip>=1.6 in /opt/conda/lib/python3.7/site-packages (from cmd2>=1.0.0->cliff->optuna==2.0.0) (1.8.2)

Requirement already satisfied: zipp>=0.5 in /opt/conda/lib/python3.7/site-packages (from importlib-metadata->sqlalchemy>=1.1.0->optuna==2.0.0) (3.8.0)

Requirement already satisfied: MarkupSafe>=0.9.2 in /opt/conda/lib/python3.7/site-packages (from Mako->alembic->optuna==2.0.0) (2.1.1)

Building wheels for collected packages: optuna

Building wheel for optuna (setup.py) ... [?25l- \ | done

[?25h Created wheel for optuna: filename=optuna-2.0.0-py3-none-any.whl size=312977 sha256=5de6cd631001e876e38b60703e242c8e2eae27b51546131dd83783da6e81f976

Stored in directory: /root/.cache/pip/wheels/22/8b/08/d32553e8cd416e1974ae704d41102b5a691c9612ad982b7991

Successfully built optuna

Installing collected packages: optuna

Attempting uninstall: optuna

Found existing installation: optuna 2.10.1

Uninstalling optuna-2.10.1:

Successfully uninstalled optuna-2.10.1

Successfully installed optuna-2.0.0

[33mWARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv[0m[33m

[0m

2. 数据

2.1. 预处理

数据来源:https://www.kaggle.com/competitions/store-sales-time-series-forecasting

现取决于任务的需求和文本的特点。在进行自然语言处理任务之前,进行适当的预处理可以提高模型的性能和准确性。

在加载数据之后,我创建了Darts特定的TimeSeries对象。对于销售数据,我为每个系列生成了所谓的静态协变量(店铺编号、产品系列、城市、地区、类型和集群)。这些协变量可能会在Darts中的某些模型中使用。我还创建了一组基于时间的协变量,如星期几、月份和年份。所有协变量系列都被堆叠在一起。

此外,我将所有系列缩放到0和1之间,然后对它们应用对数变换。我从这个有用的笔记本中得到了这个想法。

# 加载所有数据集

df_train = pd.read_csv('../input/store-sales-time-series-forecasting/train.csv') # 加载训练集

df_test = pd.read_csv('../input/store-sales-time-series-forecasting/test.csv') # 加载测试集

df_holidays_events = pd.read_csv('../input/store-sales-time-series-forecasting/holidays_events.csv') # 加载节假日事件数据集

df_oil = pd.read_csv('../input/store-sales-time-series-forecasting/oil.csv') # 加载油价数据集

df_stores = pd.read_csv('../input/store-sales-time-series-forecasting/stores.csv') # 加载商店数据集

df_transactions = pd.read_csv('../input/store-sales-time-series-forecasting/transactions.csv') # 加载交易数据集

df_sample_submission = pd.read_csv('../input/store-sales-time-series-forecasting/sample_submission.csv') # 加载样本提交数据集

# 销售数据(目标)

family_list = df_train['family'].unique() # 获取唯一的家庭列表

family_list

store_list = df_stores['store_nbr'].unique() # 获取唯一的商店编号列表

store_list

train_merged = pd.merge(df_train, df_stores, on ='store_nbr') # 将训练集和商店数据集按商店编号合并

train_merged = train_merged.sort_values(["store_nbr","family","date"]) # 按商店编号、家庭和日期排序

train_merged = train_merged.astype({"store_nbr":'str', "family":'str', "city":'str',

"state":'str', "type":'str', "cluster":'str'}) # 将商店编号、家庭、城市、州、类型和集群转换为字符串类型

df_test_dropped = df_test.drop(['onpromotion'], axis=1) # 删除测试集中的'onpromotion'列

df_test_sorted = df_test_dropped.sort_values(by=['store_nbr','family']) # 按商店编号和家庭排序

# 创建时间序列对象(Darts)并按产品家族分组存储在字典中

family_TS_dict = {}

for family in family_list:

df_family = train_merged.loc[train_merged['family'] == family] # 选择特定家族的数据

list_of_TS_family = TimeSeries.from_group_dataframe(

df_family,

time_col="date",

group_cols=["store_nbr","family"], # 按商店编号和家庭分组提取单独的时间序列

static_cols=["city","state","type","cluster"], # 还提取这些附加列作为静态协变量

value_cols="sales", # 目标变量

fill_missing_dates=True, # 填充缺失的日期

freq='D') # 频率为每天

for ts in list_of_TS_family:

ts = ts.astype(np.float32) # 将时间序列转换为浮点型

list_of_TS_family = sorted(list_of_TS_family, key=lambda ts: int(ts.static_covariates_values()[0,0])) # 按商店编号排序

family_TS_dict[family] = list_of_TS_family # 将时间序列存储在字典中,键为家族

# 转换销售数据

family_pipeline_dict = {}

family_TS_transformed_dict = {}

for key in family_TS_dict:

train_filler = MissingValuesFiller(verbose=False, n_jobs=-1, name="Fill NAs") # 填充缺失值

static_cov_transformer = StaticCovariatesTransformer(verbose=False, transformer_cat = sklearn.preprocessing.OneHotEncoder(), name="Encoder") # 静态协变量转换为独热编码

log_transformer = InvertibleMapper(np.log1p, np.expm1, verbose=False, n_jobs=-1, name="Log-Transform") # 对数转换

train_scaler = Scaler(verbose=False, n_jobs=-1, name="Scaling") # 缩放

train_pipeline = Pipeline([train_filler,

static_cov_transformer,

log_transformer,

train_scaler]) # 创建数据处理流程

training_transformed = train_pipeline.fit_transform(family_TS_dict[key]) # 对时间序列进行转换

family_pipeline_dict[key] = train_pipeline # 存储数据处理流程

family_TS_transformed_dict[key] = training_transformed # 存储转换后的时间序列

# 创建时间序列对象(Darts)

list_of_TS = TimeSeries.from_group_dataframe(

train_merged,

time_col="date",

group_cols=["store_nbr","family"], # 按商店编号和家庭分组提取单独的时间序列

static_cols=["city","state","type","cluster"], # 还提取这些附加列作为静态协变量

value_cols="sales", # 目标变量

fill_missing_dates=True, # 填充缺失的日期

freq='D') # 频率为每天

for ts in list_of_TS:

ts = ts.astype(np.float32) # 将时间序列转换为浮点型

list_of_TS = sorted(list_of_TS, key=lambda ts: int(ts.static_covariates_values()[0,0])) # 按商店编号排序

# 转换销售数据

train_filler = MissingValuesFiller(verbose=False, n_jobs=-1, name="Fill NAs") # 填充缺失值

static_cov_transformer = StaticCovariatesTransformer(verbose=False, transformer_cat = sklearn.preprocessing.OneHotEncoder(), name="Encoder") # 静态协变量转换为独热编码

log_transformer = InvertibleMapper(np.log1p, np.expm1, verbose=False, n_jobs=-1, name="Log-Transform") # 对数转换

train_scaler = Scaler(verbose=False, n_jobs=-1, name="Scaling") # 缩放

train_pipeline = Pipeline([train_filler,

static_cov_transformer,

log_transformer,

train_scaler]) # 创建数据处理流程

training_transformed = train_pipeline.fit_transform(list_of_TS) # 对时间序列进行转换

# 创建7天和28天移动平均销售额

sales_moving_average_7 = MovingAverage(window=7) # 创建7天移动平均对象

sales_moving_average_28 = MovingAverage(window=28) # 创建28天移动平均对象

sales_moving_averages_dict = {}

for key in family_TS_transformed_dict:

sales_mas_family = []

for ts in family_TS_transformed_dict[key]:

ma_7 = sales_moving_average_7.filter(ts) # 对时间序列应用7天移动平均

ma_7 = TimeSeries.from_series(ma_7.pd_series()) # 将移动平均结果转换为时间序列

ma_7 = ma_7.astype(np.float32) # 将时间序列转换为浮点型

ma_7 = ma_7.with_columns_renamed(col_names=ma_7.components, col_names_new="sales_ma_7") # 重命名列

ma_28 = sales_moving_average_28.filter(ts) # 对时间序列应用28天移动平均

ma_28 = TimeSeries.from_series(ma_28.pd_series()) # 将移动平均结果转换为时间序列

ma_28 = ma_28.astype(np.float32) # 将时间序列转换为浮点型

ma_28 = ma_28.with_columns_renamed(col_names=ma_28.components, col_names_new="sales_ma_28") # 重命名列

mas = ma_7.stack(ma_28) # 将两个移动平均结果堆叠在一起

sales_mas_family.append(mas) # 存储堆叠后的时间序列

sales_moving_averages_dict[key] = sales_mas_family # 存储移动平均结果

# 一般协变量(基于时间和油价)

full_time_period = pd.date_range(start='2013-01-01', end='2017-08-31', freq='D') # 创建完整的时间范围

# 基于时间的协变量

year = datetime_attribute_timeseries(time_index = full_time_period, attribute="year") # 提取年份

month = datetime_attribute_timeseries(time_index = full_time_period, attribute="month") # 提取月份

day = datetime_attribute_timeseries(time_index = full_time_period, attribute="day") # 提取日期

dayofyear = datetime_attribute_timeseries(time_index = full_time_period, attribute="dayofyear") # 提取一年中的第几天

weekday = datetime_attribute_timeseries(time_index = full_time_period, attribute="dayofweek") # 提取星期几

weekofyear = datetime_attribute_timeseries(time_index = full_time_period, attribute="weekofyear") # 提取一年中的第几周

timesteps = TimeSeries.from_times_and_values(times=full_time_period,

values=np.arange(len(full_time_period)),

columns=["linear_increase"]) # 创建线性增长的时间序列

time_cov = year.stack(month).stack(day).stack(dayofyear).stack(weekday).stack(weekofyear).stack(timesteps) # 将所有时间协变量堆叠在一起

time_cov = time_cov.astype(np.float32) # 将时间序列转换为浮点型

# 转换

time_cov_scaler = Scaler(verbose=False, n_jobs=-1, name="Scaler") # 缩放

time_cov_train, time_cov_val = time_cov.split_before(pd.Timestamp('20170816')) # 将时间序列分割为训练集和验证集

time_cov_scaler.fit(time_cov_train) # 对训练集进行缩放

time_cov_transformed = time_cov_scaler.transform(time_cov) # 对时间序列进行转换

# 油价

oil = TimeSeries.from_dataframe(df_oil,

time_col = 'date',

value_cols = ['dcoilwtico'],

freq = 'D') # 创建油价时间序列

oil = oil.astype(np.float32) # 将时间序列转换为浮点型

# 转换

oil_filler = MissingValuesFiller(verbose=False, n_jobs=-1, name="Filler") # 填充缺失值

oil_scaler = Scaler(verbose=False, n_jobs=-1, name="Scaler") # 缩放

oil_pipeline = Pipeline([oil_filler, oil_scaler]) # 创建数据处理流程

oil_transformed = oil_pipeline.fit_transform(oil) # 对油价时间序列进行转换

# 油价的移动平均

oil_moving_average_7 = MovingAverage(window=7) # 创建7天移动平均对象

oil_moving_average_28 = MovingAverage(window=28) # 创建28天移动平均对象

oil_moving_averages = []

ma_7 = oil_moving_average_7.filter(oil_transformed).astype(np.float32) # 对油价时间序列应用7天移动平均

ma_7 = ma_7.with_columns_renamed(col_names=ma_7.components, col_names_new="oil_ma_7") # 重命名列

ma_28 = oil_moving_average_28.filter(oil_transformed).astype(np.float32) # 对油价时间序列应用28天移动平均

ma_28 = ma_28.with_columns_renamed(col_names=ma_28.components, col_names_new="oil_ma_28") # 重命名列

oil_moving_averages = ma_7.stack(ma_28) # 将两个移动平均结果堆叠在一起

# 将一般协变量堆叠在一起

general_covariates = time_cov_transformed.stack(oil_transformed).stack(oil_moving_averages) # 将时间协变量、油价和油价的移动平均堆叠在一起

# 商店特定协变量(交易和节假日)

# 交易

df_transactions.sort_values(["store_nbr","date"], inplace=True) # 按商店编号和日期排序

TS_transactions_list = TimeSeries.from_group_dataframe(

df_transactions,

time_col="date",

group_cols=["store_nbr"], # 按商店编号分组提取单独的时间序列

value_cols="transactions", # 协变量

fill_missing_dates=True, # 填充缺失的日期

freq='D') # 频率为每天

transactions_list = []

for ts in TS_transactions_list:

series = TimeSeries.from_series(ts.pd_series()) # 必要的处理以删除静态协变量(以便可以在后面堆叠协变量)

series = series.astype(np.float32) # 将时间序列转换为浮点型

transactions_list.append(series) # 存储时间序列

transactions_list[24] = transactions_list[24].slice(start_ts=pd.Timestamp('20130102'), end_ts=pd.Timestamp('20170815')) # 对时间序列进行切片

from datetime import datetime, timedelta

transactions_list_full = []

for ts in transactions_list:

if ts.start_time() > pd.Timestamp('20130101'):

end_time = (ts.start_time() - timedelta(days=1))

delta = end_time - pd.Timestamp('20130101')

zero_series = TimeSeries.from_times_and_values(

times=pd.date_range(start=pd.Timestamp('20130101'),

end=end_time, freq="D"),

values=np.zeros(delta.days+1))

ts = zero_series.append(ts)

transactions_list_full.append(ts)

transactions_filler = MissingValuesFiller(verbose=False, n_jobs=-1, name="Filler") # 填充缺失值

transactions_scaler = Scaler(verbose=False, n_jobs=-1, name="Scaler") # 缩放

transactions_pipeline = Pipeline([transactions_filler, transactions_scaler]) # 创建数据处理流程

transactions_transformed = transactions_pipeline.fit_transform(transactions_list_full) # 对交易时间序列进行转换

# 交易的移动平均

trans_moving_average_7 = MovingAverage(window=7) # 创建7天移动平均对象

trans_moving_average_28 = MovingAverage(window=28) # 创建28天移动平均对象

transactions_covs = []

for ts in transactions_transformed:

ma_7 = trans_moving_average_7.filter(ts).astype(np.float32) # 对交易时间序列应用7天移动平均

ma_7 = ma_7.with_columns_renamed(col_names=ma_7.components, col_names_new="transactions_ma_7") # 重命名列

ma_28 = trans_moving_average_28.filter(ts).astype(np.float32) # 对交易时间序列应用28天移动平均

ma_28 = ma_28.with_columns_renamed(col_names=ma_28.components, col_names_new="transactions_ma_28") # 重命名列

trans_and_mas = ts.with_columns_renamed(col_names=ts.components, col_names_new="transactions").stack(ma_7).stack(ma_28) # 将交易时间序列和移动平均结果堆叠在一起

transactions_covs.append(trans_and_mas) # 存储堆叠后的时间序列

# 重新定义节假日的类别

df_holidays_events['type'] = np.where(df_holidays_events['transferred'] == True,'Transferred',

df_holidays_events['type']) # 将转让的节假日类型更改为'Transferred'

df_holidays_events['type'] = np.where(df_holidays_events['type'] == 'Transfer','Holiday',

df_holidays_events['type']) # 将'Transfer'类型的节假日更改为'Holiday'

df_holidays_events['type'] = np.where(df_holidays_events['type'] == 'Additional','Holiday',

df_holidays_events['type']) # 将'Additional'类型的节假日更改为'Holiday'

df_holidays_events['type'] = np.where(df_holidays_events['type'] == 'Bridge','Holiday',

df_holidays_events['type']) # 将'Bridge'类型的节假日更改为'Holiday'

# 将节假日分配给所有时间序列并保存在字典中

def holiday_list(df_stores):

listofseries = []

for i in range(0,len(df_stores)):

df_holiday_dummies = pd.DataFrame(columns=['date'])

df_holiday_dummies["date"] = df_holidays_events["date"]

df_holiday_dummies["national_holiday"] = np.where(((df_holidays_events["type"] == "Holiday") & (df_holidays_events["locale"] == "National")), 1, 0) # 判断是否为国家级节假日

df_holiday_dummies["earthquake_relief"] = np.where(df_holidays_events['description'].str.contains('Terremoto Manabi'), 1, 0) # 判断是否为地震救灾日

df_holiday_dummies["christmas"] = np.where(df_holidays_events['description'].str.contains('Navidad'), 1, 0) # 判断是否为圣诞节

df_holiday_dummies["football_event"] = np.where(df_holidays_events['description'].str.contains('futbol'), 1, 0) # 判断是否为足球赛事

df_holiday_dummies["national_event"] = np.where(((df_holidays_events["type"] == "Event") & (df_holidays_events["locale"] == "National") & (~df_holidays_events['description'].str.contains('Terremoto Manabi')) & (~df_holidays_events['description'].str.contains('futbol'))), 1, 0) # 判断是否为国家级活动

df_holiday_dummies["work_day"] = np.where((df_holidays_events["type"] == "Work Day"), 1, 0) # 判断是否为工作日

df_holiday_dummies["local_holiday"] = np.where(((df_holidays_events["type"] == "Holiday") & ((df_holidays_events["locale_name"] == df_stores['state'][i]) | (df_holidays_events["locale_name"] == df_stores['city'][i]))), 1, 0) # 判断是否为地方级节假日

listofseries.append(df_holiday_dummies)

return listofseries

def remove_0_and_duplicates(holiday_list):

listofseries = []

for i in range(0,len(holiday_list)):

df_holiday_per_store = list_of_holidays_per_store[i].set_index('date')

df_holiday_per_store = df_holiday_per_store.loc[~(df_holiday_per_store==0).all(axis=1)] # 删除全为0的行

df_holiday_per_store = df_holiday_per_store.groupby('date').agg({'national_holiday':'max', 'earthquake_relief':'max',

'christmas':'max', 'football_event':'max',

'national_event':'max', 'work_day':'max',

'local_holiday':'max'}).reset_index() # 将相同日期的行合并

listofseries.append(df_holiday_per_store)

return listofseries

def holiday_TS_list_54(holiday_list):

listofseries = []

for i in range(0,54):

holidays_TS = TimeSeries.from_dataframe(list_of_holidays_per_store[i],

time_col = 'date',

fill_missing_dates=True,

fillna_value=0,

freq='D') # 创建节假日时间序列

holidays_TS = holidays_TS.slice(pd.Timestamp('20130101'),pd.Timestamp('20170831')) # 对时间序列进行切片

holidays_TS = holidays_TS.astype(np.float32) # 将时间序列转换为浮点型

listofseries.append(holidays_TS)

return listofseries

list_of_holidays_per_store = holiday_list(df_stores) # 获取每个商店的节假日列表

list_of_holidays_per_store = remove_0_and_duplicates(list_of_holidays_per_store) # 删除全为0的行并合并相同日期的行

list_of_holidays_store = holiday_TS_list_54(list_of_holidays_per_store) # 将节假日列表转换为时间序列

holidays_filler = MissingValuesFiller(verbose=False, n_jobs=-1, name="Filler") # 填充缺失值

holidays_scaler = Scaler(verbose=False, n_jobs=-1, name="Scaler") # 缩放

holidays_pipeline = Pipeline([holidays_filler, holidays_scaler]) # 创建数据处理流程

holidays_transformed = holidays_pipeline.fit_transform(list_of_holidays_store) # 对节假日时间序列进行转换

# 将商店特定协变量与一般协变量堆叠在一起

store_covariates_future = []

for store in range(0,len(store_list)):

stacked_covariates = holidays_transformed[store].stack(general_covariates) # 将节假日时间序列和一般协变量堆叠在一起

store_covariates_future.append(stacked_covariates) # 存储堆叠后的时间序列

store_covariates_past = []

holidays_transformed_sliced = holidays_transformed # 用于切片过去协变量

for store in range(0,len(store_list)):

holidays_transformed_sliced[store] = holidays_transformed[store].slice_intersect(transactions_covs[store]) # 对节假日时间序列进行切片

general_covariates_sliced = general_covariates.slice_intersect(transactions_covs[store]) # 对一般协变量进行切片

stacked_covariates = transactions_covs[store].stack(holidays_transformed_sliced[store]).stack(general_covariates_sliced) # 将交易时间序列、节假日时间序列和一般协变量堆叠在一起

store_covariates_past.append(stacked_covariates) # 存储堆叠后的时间序列

# 商店/家族特定协变量(促销)

df_promotion = pd.concat([df_train, df_test], axis=0) # 将训练集和测试集合并

df_promotion = df_promotion.sort_values(["store_nbr","family","date"]) # 按商店编号、家族和日期排序

df_promotion.tail()

family_promotion_dict = {}

for family in family_list:

df_family = df_promotion.loc[df_promotion['family'] == family] # 选择特定家族的数据

list_of_TS_promo = TimeSeries.from_group_dataframe(

df_family,

time_col="date",

group_cols=["store_nbr","family"], # 按商店编号和家族分组提取单独的时间序列

value_cols="onpromotion", # 协变量

fill_missing_dates=True, # 填充缺失的日期

freq='D') # 频率为每天

for ts in list_of_TS_promo:

ts = ts.astype(np.float32) # 将时间序列转换为浮点型

family_promotion_dict[family] = list_of_TS_promo # 存储时间序列

promotion_transformed_dict = {}

for key in tqdm(family_promotion_dict):

promo_filler = MissingValuesFiller(verbose=False, n_jobs=-1, name="Fill NAs") # 填充缺失值

promo_scaler = Scaler(verbose=False, n_jobs=-1, name="Scaling") # 缩放

promo_pipeline = Pipeline([promo_filler,

promo_scaler])

promotion_transformed = promo_pipeline.fit_transform(family_promotion_dict[key]) # 对时间序列进行转换

# 促销的移动平均

promo_moving_average_7 = MovingAverage(window=7) # 创建7天移动平均对象

promo_moving_average_28 = MovingAverage(window=28) # 创建28天移动平均对象

promotion_covs = []

for ts in promotion_transformed:

ma_7 = promo_moving_average_7.filter(ts) # 对时间序列应用7天移动平均

ma_7 = TimeSeries.from_series(ma_7.pd_series()) # 将移动平均结果转换为时间序列

ma_7 = ma_7.astype(np.float32) # 将时间序列转换为浮点型

ma_7 = ma_7.with_columns_renamed(col_names=ma_7.components, col_names_new="promotion_ma_7") # 重命名列

ma_28 = promo_moving_average_28.filter(ts) # 对时间序列应用28天移动平均

ma_28 = TimeSeries.from_series(ma_28.pd_series()) # 将移动平均结果转换为时间序列

ma_28 = ma_28.astype(np.float32) # 将时间序列转换为浮点型

ma_28 = ma_28.with_columns_renamed(col_names=ma_28.components, col_names_new="promotion_ma_28") # 重命名列

promo_and_mas = ts.stack(ma_7).stack(ma_28) # 将两个移动平均结果堆叠在一起

promotion_covs.append(promo_and_mas) # 存储堆叠后的时间序列

promotion_transformed_dict[key] = promotion_covs # 存储移动平均结果

# 2.5. 将所有协变量组装成字典

past_covariates_dict = {}

for key in tqdm(promotion_transformed_dict):

promotion_family = promotion_transformed_dict[key]

sales_mas = sales_moving_averages_dict[key]

covariates_past = [promotion_family[i].slice_intersect(store_covariates_past[i]).stack(store_covariates_past[i].stack(sales_mas[i])) for i in range(0,len(promotion_family))] # 将促销时间序列、过去协变量和移动平均结果堆叠在一起

past_covariates_dict[key] = covariates_past # 存储堆叠后的时间序列

future_covariates_dict = {}

for key in tqdm(promotion_transformed_dict):

promotion_family = promotion_transformed_dict[key]

covariates_future = [promotion_family[i].stack(store_covariates_future[i]) for i in range(0,len(promotion_family))] # 将促销时间序列和未来协变量堆叠在一起

future_covariates_dict[key] = covariates_future # 存储堆叠后的时间序列

only_past_covariates_dict = {}

for key in tqdm(sales_moving_averages_dict):

sales_moving_averages = sales_moving_averages_dict[key]

only_past_covariates = [sales_moving_averages[i].stack(transactions_covs[i]) for i in range(0,len(sales_moving_averages))] # 将移动平均结果和交易时间序列堆叠在一起

only_past_covariates_dict[key] = only_past_covariates # 存储堆叠后的时间序列

# 删除原始数据集以节省内存

del(df_train)

del(df_test)

del(df_stores)

del(df_holidays_events)

del(df_oil)

del(df_transactions)

gc.collect()

100%|██████████| 33/33 [01:27<00:00, 2.65s/it]

100%|██████████| 33/33 [00:20<00:00, 1.63it/s]

100%|██████████| 33/33 [00:06<00:00, 5.00it/s]

100%|██████████| 33/33 [00:04<00:00, 8.08it/s]

19

2.2. 探索性数据分析

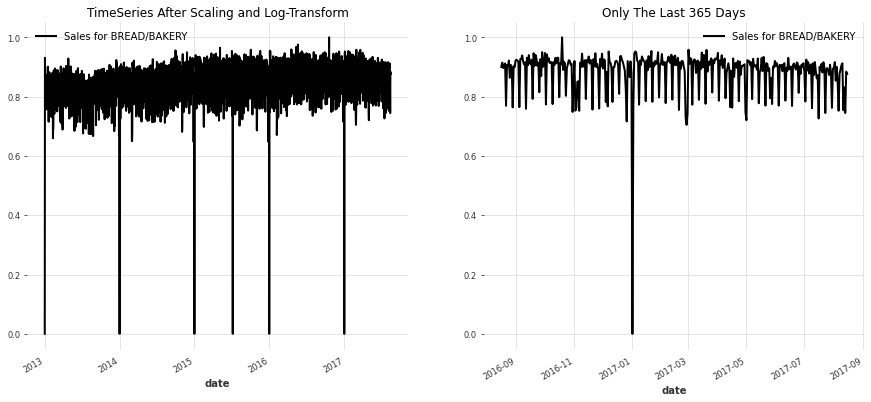

为了给出第一印象,让我们来看一下1782个(商店x产品系列)的时间序列中的一些:

# Some EDA

# 获取'BREAD/BAKERY'类别的第一个TimeSeries

bread_series = family_TS_dict['BREAD/BAKERY'][0]

# 获取'CELEBRATION'类别的第11个TimeSeries

celebration_series = family_TS_dict['CELEBRATION'][11]

# 打印出1782个TimeSeries中的两个

# 创建一个2x2的图表,大小为15x6

plt.subplots(2, 2, figsize=(15, 6))

# 在第1行第1列的位置绘制图像

plt.subplot(1, 2, 1)

# 绘制bread_series的图像,并添加标签,标签内容为bread_series的静态协变量值的一部分

bread_series.plot(label='Sales for {}'.format(bread_series.static_covariates_values()[0,1],

bread_series.static_covariates_values()[0,0],

bread_series.static_covariates_values()[0,2]))

# 绘制celebration_series的图像,并添加标签,标签内容为celebration_series的静态协变量值的一部分

celebration_series.plot(label='Sales for {}'.format(celebration_series.static_covariates_values()[0,1],

celebration_series.static_covariates_values()[0,0],

celebration_series.static_covariates_values()[0,2]))

# 设置标题为"Two Out Of 1782 TimeSeries"

plt.title("Two Out Of 1782 TimeSeries")

# 在第1行第2列的位置绘制图像

plt.subplot(1, 2, 2)

# 绘制bread_series最后365天的图像,并添加标签,标签内容为bread_series的静态协变量值的一部分

bread_series[-365:].plot(label='Sales for {}'.format(bread_series.static_covariates_values()[0,1],

bread_series.static_covariates_values()[0,0],

bread_series.static_covariates_values()[0,2]))

# 绘制celebration_series最后365天的图像,并添加标签,标签内容为celebration_series的静态协变量值的一部分

celebration_series[-365:].plot(label='Sales for {}'.format(celebration_series.static_covariates_values()[0,1],

celebration_series.static_covariates_values()[0,0],

celebration_series.static_covariates_values()[0,2]))

# 设置标题为"Only The Last 365 Days"

plt.title("Only The Last 365 Days")

# 显示图表

plt.show()

让我们还绘制两个系列的自相关图(ACF),并研究季节性模式:

# 绘制面包销量时间序列的自相关图

plot_acf(fill_missing_values(bread_series), m=7, alpha=0.05)

plt.title("{}, store {} in {}".format(bread_series.static_covariates_values()[0,1],

bread_series.static_covariates_values()[0,0],

bread_series.static_covariates_values()[0,2]))

# 绘制庆祝活动时间序列的自相关图

plot_acf(fill_missing_values(celebration_series), alpha=0.05)

plt.title("{}, store {} in {}".format(celebration_series.static_covariates_values()[0,1],

celebration_series.static_covariates_values()[0,0],

celebration_series.static_covariates_values()[0,2]))

如我们所见,BREAD/BAKERY系列显示出强烈的每周季节性,这是我们所预期的。然而,CELEBRATION系列的季节性模式不太明显。

我对静态协变量进行了编码,并对所有系列应用了0-1缩放+对数转换。静态协变量在时间上不变化 - 我们数据集中的示例是店铺编号或地区。缩放对于许多深度学习模型非常重要,而对训练数据进行对数转换将有助于避免我们的预测低估实际销售额。

# 展示差分后的时间序列

# 首先对示例序列进行转换

# 使用MissingValuesFiller类填充缺失值,不显示详细信息,使用所有可用的CPU核心,命名为"Fill NAs"

train_filler_bread = MissingValuesFiller(verbose=False, n_jobs=-1, name="Fill NAs")

# 使用StaticCovariatesTransformer类进行静态协变量转换,不显示详细信息,使用OneHotEncoder进行分类变量转换,命名为"Encoder"

static_cov_transformer_bread = StaticCovariatesTransformer(verbose=False, transformer_cat = sklearn.preprocessing.OneHotEncoder(), name="Encoder")

# 使用InvertibleMapper类进行对数转换,不显示详细信息,使用所有可用的CPU核心,命名为"Log-Transform"

log_transformer_bread = InvertibleMapper(np.log1p, np.expm1, verbose=False, n_jobs=-1, name="Log-Transform")

# 使用Scaler类进行缩放,不显示详细信息,使用所有可用的CPU核心,命名为"Scaling"

train_scaler_bread = Scaler(verbose=False, n_jobs=-1, name="Scaling")

# 使用MissingValuesFiller类填充缺失值,不显示详细信息,使用所有可用的CPU核心,命名为"Fill NAs"

train_filler_celebration = MissingValuesFiller(verbose=False, n_jobs=-1, name="Fill NAs")

# 使用StaticCovariatesTransformer类进行静态协变量转换,不显示详细信息,使用OneHotEncoder进行分类变量转换,命名为"Encoder"

static_cov_transformer_celebration = StaticCovariatesTransformer(verbose=False, transformer_cat = sklearn.preprocessing.OneHotEncoder(), name="Encoder")

# 使用InvertibleMapper类进行对数转换,不显示详细信息,使用所有可用的CPU核心,命名为"Log-Transform"

log_transformer_celebration = InvertibleMapper(np.log1p, np.expm1, verbose=False, n_jobs=-1, name="Log-Transform")

# 使用Scaler类进行缩放,不显示详细信息,使用所有可用的CPU核心,命名为"Scaling"

train_scaler_celebration = Scaler(verbose=False, n_jobs=-1, name="Scaling")

# 创建Pipeline对象,包含填充缺失值、静态协变量转换、对数转换和缩放操作

train_pipeline_bread = Pipeline([train_filler_bread,

static_cov_transformer_bread,

log_transformer_bread,

train_scaler_bread])

train_pipeline_celebration = Pipeline([train_filler_celebration,

static_cov_transformer_celebration,

log_transformer_celebration,

train_scaler_celebration])

# 对面包销售时间序列进行转换

bread_series_transformed = train_pipeline_bread.fit_transform(bread_series)

# 对庆祝活动销售时间序列进行转换

celebration_series_transformed = train_pipeline_celebration.fit_transform(celebration_series)

# 绘图

# 创建2x2的子图,图像大小为15x6

plt.subplots(2, 2, figsize=(15, 6))

# 第一个子图,行1,列2,索引1

plt.subplot(1, 2, 1)

# 绘制面包销售时间序列图,标签为"Sales for {静态协变量值1, 静态协变量值2, 静态协变量值3}"

bread_series_transformed.plot(label='Sales for {}'.format(bread_series.static_covariates_values()[0,1],

bread_series.static_covariates_values()[0,0],

bread_series.static_covariates_values()[0,2]))

plt.title("缩放和对数转换后的时间序列")

# 第二个子图,索引2

plt.subplot(1, 2, 2)

# 绘制最后365天的面包销售时间序列图,标签为"Sales for {静态协变量值1, 静态协变量值2, 静态协变量值3}"

bread_series_transformed[-365:].plot(label='Sales for {}'.format(bread_series.static_covariates_values()[0,1],

bread_series.static_covariates_values()[0,0],

bread_series.static_covariates_values()[0,2]))

plt.title("最后365天")

plt.show()

# 创建2x2的子图,图像大小为15x6

plt.subplots(2, 2, figsize=(15, 6))

# 第一个子图,行1,列2,索引1

plt.subplot(1, 2, 1)

# 绘制庆祝活动销售时间序列图,标签为"Sales for {静态协变量值1, 静态协变量值2, 静态协变量值3}"

celebration_series_transformed.plot(label='Sales for {}'.format(celebration_series.static_covariates_values()[0,1],

celebration_series.static_covariates_values()[0,0],

celebration_series.static_covariates_values()[0,2]))

plt.title("缩放和对数转换后的时间序列")

# 第二个子图,索引2

plt.subplot(1, 2, 2)

# 绘制最后365天的庆祝活动销售时间序列图,标签为"Sales for {静态协变量值1, 静态协变量值2, 静态协变量值3}"

celebration_series_transformed[-365:].plot(label='Sales for {}'.format(celebration_series.static_covariates_values()[0,1],

celebration_series.static_covariates_values()[0,0],

celebration_series.static_covariates_values()[0,2]))

plt.title("最后365天")

plt.show()

协变量

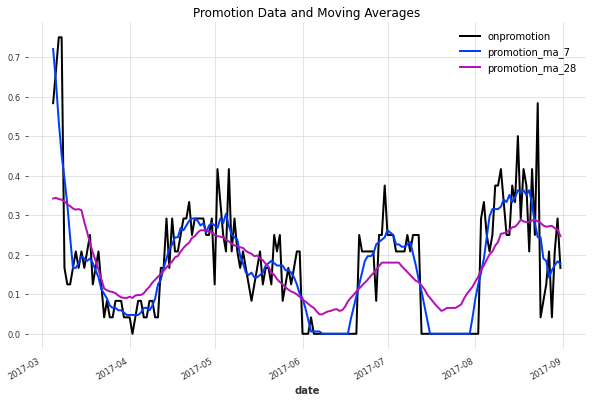

让我们来看看在1号店中BREAD/BAKERY系列过去180天的协变量。

销售移动平均项

# 设置图形大小为10x6

plt.figure(figsize=(10, 6))

# 绘制'BREAD/BAKERY'类别最近180天的销售数据的时间序列图

family_TS_transformed_dict['BREAD/BAKERY'][0][-180:].plot()

# 绘制'BREAD/BAKERY'类别最近180天的销售数据的7日移动平均线图

sales_moving_averages_dict['BREAD/BAKERY'][0][-180:].plot()

# 设置图形标题为"Sales 7- and 28-day Moving Averages"

plt.title("Sales 7- and 28-day Moving Averages")

促销数据

# 设置绘图大小

plt.figure(figsize=(10, 6))

# 绘制'BREAD/BAKERY'类别的促销数据的最近180天的折线图

promotion_transformed_dict['BREAD/BAKERY'][0][-180:].plot()

# 设置图表标题

plt.title("促销数据和移动平均值")

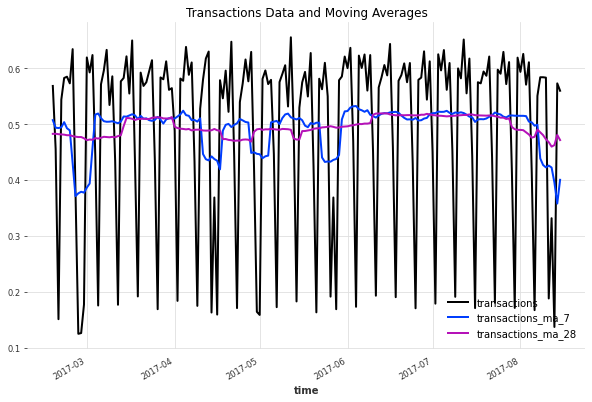

交易

# 设置画布大小为10*6

plt.figure(figsize=(10, 6))

# 取出transactions_covs中最后180个数据,并绘制折线图

transactions_covs[0][-180:].plot()

# 设置图表标题为"Transactions Data and Moving Averages"

plt.title("Transactions Data and Moving Averages")

油价

# 设置图形的大小为10x6

plt.figure(figsize=(10, 6))

# 绘制最近180天的原始油价数据

oil_transformed[-180:].plot()

# 绘制最近180天的移动平均线数据

oil_moving_averages[-180:].plot()

# 设置图形的标题为"Oil Price and Moving Averages"

plt.title("Oil Price and Moving Averages")

时间虚拟变量和协变量

# 设置图形的大小为10x6

plt.figure(figsize=(10, 6))

# 绘制时间相关的协变量的最后180个数据点的图形

time_cov_transformed[-180:].plot()

# 设置图形的标题为"时间相关的协变量"

plt.title("Time-Related Covariates")

假期和事件

我按照以下七个类别对可用的假期数据进行了排序。我认为进一步概括这些类别可能会更好。

# 创建一个图形窗口,设置大小为10x6

plt.figure(figsize=(10, 6))

# 获取第一个商店的节假日数据,并排除"date"列

holidays_per_store = list_of_holidays_per_store[0].loc[:, list_of_holidays_per_store[0].columns != "date"]

# 对每个节假日进行求和,并绘制条形图

holidays_per_store.sum().plot.bar(rot=0)

# 设置图表标题为"Holidays and Events"

plt.title("Holidays and Events")

3. 简单基准模型

在考虑神经网络之前,我首先使用传统和更简单的方法建立了一个基准。基准预测性能随后构成了机器学习模型预期性能的下限。我使用了三个简单易实现的模型:

- 朴素季节模型(重复最近的7天)

- 指数平滑

- Facebook Prophet

指数平滑给出了最好的结果。出于计算原因,我在后面注释掉了其他两个模型的训练/评估部分。

3.1. 一些快速回测

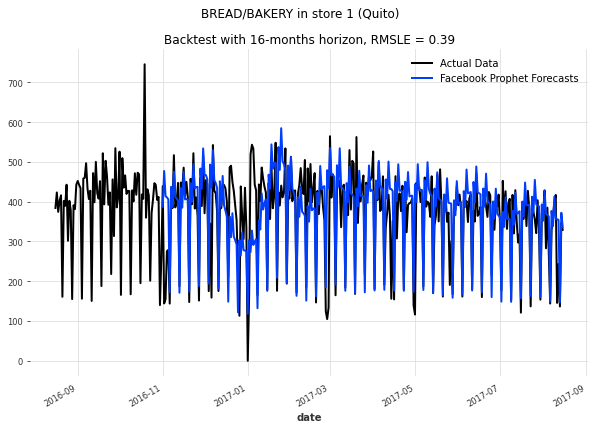

让我们快速查看我们大型数据集中两个个体系列的回测(历史预测)。我们从1号店的面包和糕点销售开始——这是我们数据集中更为一致和季节性的系列之一。

# 导入需要的模块和库

from darts.models import NaiveSeasonal, ExponentialSmoothing, Prophet

from darts.timeseries import concatenate

import logging

# 禁用cmdstanpy日志

cmdstanpy_logger = logging.getLogger("cmdstanpy")

cmdstanpy_logger.disabled = True

# 定义三个模型

Naive_Seasonal_Model = NaiveSeasonal(K=7)

Exponential_Smoothing_Model = ExponentialSmoothing()

Prophet_Model = Prophet()

# 定义评估函数,用于评估模型的预测效果

def eval_backtest(backtest_series, actual_series, horizon, transformer, model):

# 将预测结果和实际结果进行反转换,得到真实值

actualdata = transformer.inverse_transform(actual_series, partial=True)

forecasts = transformer.inverse_transform(backtest_series, partial=True)

# 绘制预测结果和实际结果的图像

plt.figure(figsize=(10, 6))

actualdata[-365:].plot(label="Actual Data")

forecasts.plot(label=model)

# 添加图像标题

plt.suptitle("{} in store {} ({})".format(static_cov_transformer_bread.inverse_transform(actual_series).static_covariates_values()[0,1],

static_cov_transformer_bread.inverse_transform(actual_series).static_covariates_values()[0,0],

static_cov_transformer_bread.inverse_transform(actual_series).static_covariates_values()[0,2]))

# 添加图例和标题

plt.legend()

plt.title("Backtest with {}-months horizon, RMSLE = {:.2f}".format(horizon,

rmsle(actual_series=actualdata, pred_series=forecasts)))

# 使用NaiveSeasonal模型进行历史预测

backtest_series_SN = Naive_Seasonal_Model.historical_forecasts(

bread_series_transformed,

start=pd.Timestamp('20161101'),

forecast_horizon=16,

stride=16,

last_points_only=False,

retrain=True,

verbose=False,

)

# 使用ExponentialSmoothing模型进行历史预测

backtest_series_ES = Exponential_Smoothing_Model.historical_forecasts(

bread_series_transformed,

start=pd.Timestamp('20161101'),

forecast_horizon=16,

stride=16,

last_points_only=False,

retrain=True,

verbose=False,

)

# 使用Prophet模型进行历史预测

backtest_series_Prophet = Prophet_Model.historical_forecasts(

bread_series_transformed,

start=pd.Timestamp('20161101'),

forecast_horizon=16,

stride=16,

last_points_only=False,

retrain=True,

verbose=False,

)

# 对三个模型的预测结果进行评估

eval_backtest(

backtest_series=concatenate(backtest_series_SN),

actual_series=bread_series_transformed,

horizon=16,

transformer=train_pipeline_bread,

model="Seasonal Naive (K=7) Forecasts"

)

eval_backtest(

backtest_series=concatenate(backtest_series_ES),

actual_series=bread_series_transformed,

horizon=16,

transformer=train_pipeline_bread,

model="Exponential Smoothing Forecasts"

)

eval_backtest(

backtest_series=concatenate(backtest_series_Prophet),

actual_series=bread_series_transformed,

horizon=16,

transformer=train_pipeline_bread,

model="Facebook Prophet Forecasts"

)

除了2017年圣诞/新年期间的零销售外,该系列遵循非常一致的模式-人们每周都吃面包。所有三个模型都能够相当好地预测这种模式。然而,季节性Naive模型在这个观察到的向下尖峰之后严重失败。

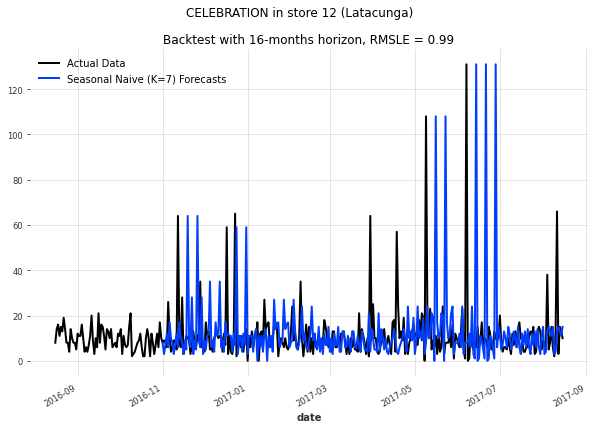

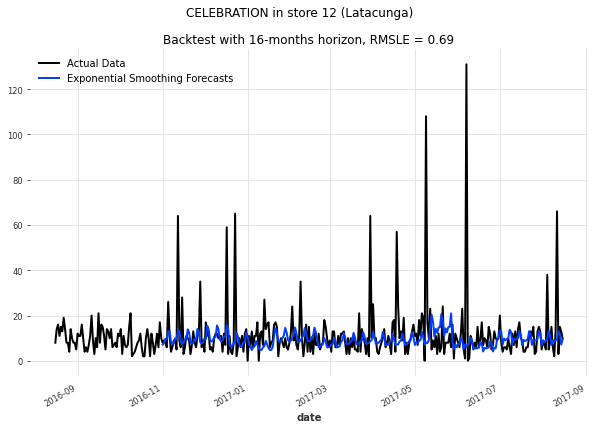

现在让我们看一个更复杂的系列-以19号店的CELEBRATION产品系列为例。尽管这个系列也有季节性模式,但它显示出更多的尖峰。我们的基准模型现在会怎样表现呢?

# 评估回测函数

def eval_backtest(backtest_series, actual_series, horizon, transformer, model):

# 将实际数据进行逆变换

actualdata = transformer.inverse_transform(actual_series, partial=True)

# 将回测数据进行逆变换

forecasts = transformer.inverse_transform(backtest_series, partial=True)

# 绘制图像

plt.figure(figsize=(10, 6))

# 绘制最近365天的实际数据

actualdata[-365:].plot(label="Actual Data")

# 绘制预测数据

forecasts.plot(label=model)

plt.legend()

# 设置图像标题

plt.suptitle("{} in store {} ({})".format(static_cov_transformer_celebration.inverse_transform(actual_series).static_covariates_values()[0,1],

static_cov_transformer_celebration.inverse_transform(actual_series).static_covariates_values()[0,0],

static_cov_transformer_celebration.inverse_transform(actual_series).static_covariates_values()[0,2]))

# 设置子标题

plt.title("Backtest with {}-months horizon, RMSLE = {:.2f}".format(horizon,

rmsle(actual_series=actualdata, pred_series=forecasts)))

# Naive Seasonal Model 回测

backtest_series_SN_2 = Naive_Seasonal_Model.historical_forecasts(

celebration_series_transformed,

start=pd.Timestamp('20161101'),

forecast_horizon=16,

stride=16,

last_points_only=False,

retrain=True,

verbose=False,

)

# Exponential Smoothing Model 回测

backtest_series_ES_2 = Exponential_Smoothing_Model.historical_forecasts(

celebration_series_transformed,

start=pd.Timestamp('20161101'),

forecast_horizon=16,

stride=16,

last_points_only=False,

retrain=True,

verbose=False,

)

# Prophet Model 回测

backtest_series_Prophet_2 = Prophet_Model.historical_forecasts(

celebration_series_transformed,

start=pd.Timestamp('20161101'),

forecast_horizon=16,

stride=16,

last_points_only=False,

retrain=True,

verbose=False,

)

调用评估回测函数进行评估

eval_backtest(

backtest_series=concatenate(backtest_series_SN_2),

actual_series=celebration_series_transformed,

horizon=16,

transformer=train_pipeline_celebration,

model=“Seasonal Naive (K=7) Forecasts”

)

eval_backtest(

backtest_series=concatenate(backtest_series_ES_2),

actual_series=celebration_series_transformed,

horizon=16,

transformer=train_pipeline_celebration,

model=“Exponential Smoothing Forecasts”

)

eval_backtest(

backtest_series=concatenate(backtest_series_Prophet_2),

actual_series=celebration_series_transformed,

horizon=16,

transformer=train_pipeline_celebration,

model=“Facebook Prophet Forecasts”

)

这些预测并不理想 - 预测销售并不那么容易。 "庆祝"产品的销售高峰很可能是由特殊事件引起的。 虽然季节性Naive模型会生成许多错误预测的高峰(并错过大部分实际高峰),指数平滑和Prophet模型可以捕捉到潜在的季节性模式和趋势,但却错过了所有高峰。 根据这些预测,当最需要时,我们的超市将不会提供足够的庆祝商品,从而错过了很多利润。

从这个小实验中,我们已经可以看出好的模型必须既捕捉到产品销售的一般季节性和趋势,又要理解可预测的高峰和其他特殊模式。 例如,由于我们有关于假期的数据,希望在某种程度上可能实现这一点。

<a id="3.2."></a> <br>

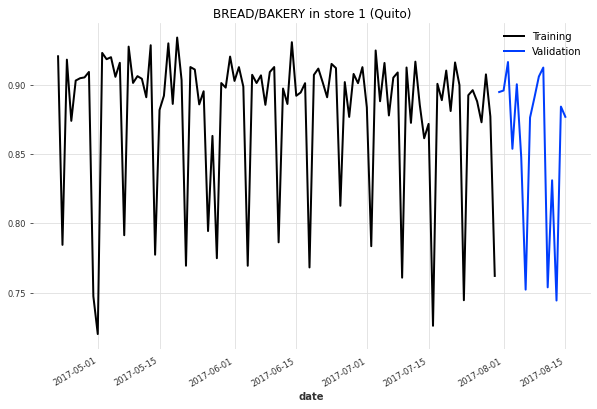

# 3.2. 训练/测试分割性能比较

从现在开始,我将使用**简单的训练/测试分割**来评估所有系列的模型 - 预测的时间范围将是**16天**,以模拟排行榜预测任务。虽然滚动窗口验证(如之前的回测)是一种更可靠的方法,但在预测项目的实验阶段中,它将需要太多的计算资源。一旦我们开始训练神经网络模型,这一点将变得明显。

```python

# 展示目标序列的训练集和验证集示例

# 将目标序列的前16个数据作为训练集

training_series_bread = bread_series_transformed[:-16]

# 将目标序列的后16个数据作为验证集

val_series_bread = bread_series_transformed[-16:]

# 创建一个大小为10x6的图像

plt.figure(figsize=(10, 6))

# 绘制训练集最后100个数据的折线图,并标注为"Training"

training_series_bread[-100:].plot(label='Training')

# 绘制验证集的折线图,并标注为"Validation"

val_series_bread.plot(label='Validation')

# 添加图例

plt.legend()

# 设置图像标题,使用目标序列的静态协变量值进行格式化

plt.title("{} in store {} ({})".format(bread_series.static_covariates_values()[0,1],

bread_series.static_covariates_values()[0,0],

bread_series.static_covariates_values()[0,2]))

对于我们的基准方法,我们现在训练1782个模型,每个(店铺x产品类别)时间序列一个模型。然后,这些模型生成的预测结果将被转换回原始比例。此外,我们对所有在过去两周内没有销售的系列预测纯零预测(这是一个相对随意的选择)。

# Exponential Smoothing Models and Forecasts

# 定义指数平滑模型构建函数

def ESModelBuilder(training_list):

# 创建一个空列表,用于存储指数平滑模型

listofESmodels = []

# 遍历训练数据列表

for i in range(0,len(training_list)):

# 创建一个指数平滑模型对象

ES_model = ExponentialSmoothing()

# 使用训练数据拟合模型

ES_model.fit(training_list[i])

# 将拟合好的模型添加到列表中

listofESmodels.append(ES_model)

# 返回指数平滑模型列表

return listofESmodels

# 定义指数平滑模型预测函数

def ESForecaster(model_list):

# 创建一个空列表,用于存储预测结果

listofESpreds = []

# 遍历模型列表

for i in range(0,len(model_list)):

# 使用模型进行预测,预测未来16个时间步的值

pred_ES = model_list[i].predict(n=16)

# 将预测结果添加到列表中

listofESpreds.append(pred_ES)

# 返回预测结果列表

return listofESpreds

# 创建指数平滑模型和预测结果的字典

ES_Models_Family_Dict = {}

ES_Forecasts_Family_Dict = {}

# 获取开始时间

st = time.time()

# 遍历家族列表

for family in tqdm(family_list):

# 获取家族的销售时间序列数据

sales_family = family_TS_transformed_dict[family]

# 提取训练数据,去除最后16个时间步

training_data = [ts[:-16] for ts in sales_family]

# 使用训练数据构建指数平滑模型

ES_Models_Family_Dict[family] = ESModelBuilder(training_data)

# 使用指数平滑模型进行预测

forecasts_ES = ESForecaster(ES_Models_Family_Dict[family])

# 反向转换

ES_Forecasts_Family_Dict[family] = family_pipeline_dict[family].inverse_transform(forecasts_ES, partial=True)

# 零预测

for i in range(0,len(ES_Forecasts_Family_Dict[family])):

# 如果训练数据的最后14个时间步的值都为0

if (training_data[i].univariate_values()[-14:] == 0).all():

# 将预测结果设置为0

ES_Forecasts_Family_Dict[family][i] = ES_Forecasts_Family_Dict[family][i].map(lambda x: x * 0)

# 获取结束时间

et = time.time()

# 计算执行时间

elapsed_time_exp = et - st

100%|██████████| 33/33 [10:53<00:00, 19.79s/it]

# Exponential Smoothing模型和预测的函数

# Naive Seasonal模型的构建函数

def NSModelBuilder(training_list):

# 用于存储Naive Seasonal模型的列表

listofNSmodels = []

# 遍历训练数据列表

for i in range(0,len(training_list)):

# 构建Naive Seasonal模型

NS_model = NaiveSeasonal(K=7)

# 对模型进行拟合

NS_model.fit(training_list[i])

# 将模型添加到列表中

listofNSmodels.append(NS_model)

# 返回模型列表

return listofNSmodels

# Naive Seasonal模型的预测函数

def NSForecaster(model_list):

# 用于存储预测结果的列表

listofNSpreds = []

# 遍历模型列表

for i in range(0,len(model_list)):

# 对模型进行预测

pred_NS = model_list[i].predict(n=16)

# 将预测结果添加到列表中

listofNSpreds.append(pred_NS)

# 返回预测结果列表

return listofNSpreds

# Exponential Smoothing模型的构建函数

def ESModelBuilder(training_list):

# 用于存储Exponential Smoothing模型的列表

listofESmodels = []

# 遍历训练数据列表

for i in range(0,len(training_list)):

# 构建Exponential Smoothing模型

ES_model = ExponentialSmoothing()

# 对模型进行拟合

ES_model.fit(training_list[i])

# 将模型添加到列表中

listofESmodels.append(ES_model)

# 返回模型列表

return listofESmodels

# Exponential Smoothing模型的预测函数

def ESForecaster(model_list):

# 用于存储预测结果的列表

listofESpreds = []

# 遍历模型列表

for i in range(0,len(model_list)):

# 对模型进行预测

pred_ES = model_list[i].predict(n=16)

# 将预测结果添加到列表中

listofESpreds.append(pred_ES)

# 返回预测结果列表

return listofESpreds

# Prophet模型的构建函数

def ProphetModelBuilder(training_list):

# 用于存储Prophet模型的列表

listofPmodels = []

# 遍历训练数据列表

for i in range(0,len(training_list)):

# 构建Prophet模型

P_model = Prophet()

# 对模型进行拟合

P_model.fit(training_list[i])

# 将模型添加到列表中

listofPmodels.append(P_model)

# 返回模型列表

return listofPmodels

# Prophet模型的预测函数

def ProphetForecaster(model_list):

# 用于存储预测结果的列表

listofPpreds = []

# 遍历模型列表

for i in range(0,len(model_list)):

# 对模型进行预测

pred_P = model_list[i].predict(n=16)

# 将预测结果添加到列表中

listofPpreds.append(pred_P)

# 返回预测结果列表

return listofPpreds

# Exponential Smoothing模型的训练和预测

# 用于存储Naive Seasonal模型的字典

NS_Models_Family_Dict = {}

# 用于存储Naive Seasonal模型预测结果的字典

NS_Forecasts_Family_Dict = {}

# 用于存储Exponential Smoothing模型的字典

ES_Models_Family_Dict = {}

# 用于存储Exponential Smoothing模型预测结果的字典

ES_Forecasts_Family_Dict = {}

# 用于存储Prophet模型的字典

Prophet_Models_Family_Dict = {}

# 用于存储Prophet模型预测结果的字典

Prophet_Forecasts_Family_Dict = {}

# 导入所需的库

import time

from multiprocessing import Pool

# 遍历family_list列表

for family in tqdm(family_list):

# 获取该family的销售时间序列数据

sales_family = family_TS_transformed_dict[family]

# 获取训练数据

training_data = [ts[:-16] for ts in sales_family]

# 构建Naive Seasonal模型

NS_Models_Family_Dict[family] = NSModelBuilder(training_data)

# 对模型进行预测

forecasts_NS = NSForecaster(NS_Models_Family_Dict[family])

# 将预测结果进行反转换

NS_Forecasts_Family_Dict[family] = family_pipeline_dict[family].inverse_transform(forecasts_NS, partial=True)

# 进行零预测

for i in range(0,len(NS_Forecasts_Family_Dict[family])):

if (training_data[i].univariate_values()[-21:] == 0).all():

NS_Forecasts_Family_Dict[family][i] = NS_Forecasts_Family_Dict[family][i].map(lambda x: x * 0)

for family in tqdm(family_list):

# 获取该family的销售时间序列数据

sales_family = family_TS_transformed_dict[family]

# 获取训练数据

training_data = [ts[:-16] for ts in sales_family]

# 构建Exponential Smoothing模型

ES_Models_Family_Dict[family] = ESModelBuilder(training_data)

# 对模型进行预测

forecasts_ES = ESForecaster(ES_Models_Family_Dict[family])

# 将预测结果进行反转换

ES_Forecasts_Family_Dict[family] = family_pipeline_dict[family].inverse_transform(forecasts_ES, partial=True)

# 进行零预测

for i in range(0,len(ES_Forecasts_Family_Dict[family])):

if (training_data[i].univariate_values()[-21:] == 0).all():

ES_Forecasts_Family_Dict[family][i] = ES_Forecasts_Family_Dict[family][i].map(lambda x: x * 0)

# 由于计算时间较长,此处注释掉

# for family in tqdm(family_list):

# # 获取该family的销售时间序列数据

# sales_family = family_TS_transformed_dict[family]

# # 获取训练数据

# training_data = [ts[:-16] for ts in sales_family]

# # 构建Prophet模型

# Prophet_Models_Family_Dict[family] = ProphetModelBuilder(training_data)

# # 对模型进行预测

# forecasts_Prophet = ProphetForecaster(Prophet_Models_Family_Dict[family])

# # 将预测结果进行反转换

# Prophet_Forecasts_Family_Dict[family] = family_pipeline_dict[family].inverse_transform(forecasts_Prophet, partial=True)

# # 进行零预测

# for i in range(0,len(Prophet_Forecasts_Family_Dict[family])):

# if (training_data[i].univariate_values()[-21:] == 0).all():

# Prophet_Forecasts_Family_Dict[family][i] = Prophet_Forecasts_Family_Dict[family][i].map(lambda x: x * 0)

'\n# Functions for Exponential Smoothing Models and Forecasts\n\ndef NSModelBuilder(training_list):\n\n listofNSmodels = []\n\n for i in range(0,len(training_list)):\n NS_model = NaiveSeasonal(K=7)\n NS_model.fit(training_list[i])\n listofNSmodels.append(NS_model)\n\n return listofNSmodels \n\ndef NSForecaster(model_list):\n\n listofNSpreds = []\n\n for i in range(0,len(model_list)):\n pred_NS = model_list[i].predict(n=16)\n listofNSpreds.append(pred_NS) \n\n return listofNSpreds \n\ndef ESModelBuilder(training_list):\n\n listofESmodels = []\n\n for i in range(0,len(training_list)):\n ES_model = ExponentialSmoothing()\n ES_model.fit(training_list[i])\n listofESmodels.append(ES_model)\n\n return listofESmodels \n\ndef ESForecaster(model_list):\n\n listofESpreds = []\n\n for i in range(0,len(model_list)):\n pred_ES = model_list[i].predict(n=16)\n listofESpreds.append(pred_ES) \n\n return listofESpreds \n\ndef ProphetModelBuilder(training_list):\n\n listofPmodels = []\n\n for i in range(0,len(training_list)):\n P_model = Prophet()\n P_model.fit(training_list[i])\n listofPmodels.append(P_model)\n\n return listofPmodels \n\ndef ProphetForecaster(model_list):\n\n listofPpreds = []\n\n for i in range(0,len(model_list)):\n pred_P = model_list[i].predict(n=16)\n listofPpreds.append(pred_P) \n\n return listofPpreds \n\n# Train and Forecast with Exponential Smoothing Models\n\nNS_Models_Family_Dict = {}\nNS_Forecasts_Family_Dict = {}\nES_Models_Family_Dict = {}\nES_Forecasts_Family_Dict = {}\nProphet_Models_Family_Dict = {}\nProphet_Forecasts_Family_Dict = {}\n\nimport time\nfrom multiprocessing import Pool\n\nfor family in tqdm(family_list):\n\n sales_family = family_TS_transformed_dict[family]\n training_data = [ts[:-16] for ts in sales_family]\n\n NS_Models_Family_Dict[family] = NSModelBuilder(training_data)\n forecasts_NS = NSForecaster(NS_Models_Family_Dict[family])\n \n # Transform Back\n NS_Forecasts_Family_Dict[family] = family_pipeline_dict[family].inverse_transform(forecasts_NS, partial=True)\n\n # Zero Forecasting\n for i in range(0,len(NS_Forecasts_Family_Dict[family])):\n if (training_data[i].univariate_values()[-21:] == 0).all():\n NS_Forecasts_Family_Dict[family][i] = NS_Forecasts_Family_Dict[family][i].map(lambda x: x * 0)\n \n \nfor family in tqdm(family_list):\n\n sales_family = family_TS_transformed_dict[family]\n training_data = [ts[:-16] for ts in sales_family]\n\n ES_Models_Family_Dict[family] = ESModelBuilder(training_data)\n forecasts_ES = ESForecaster(ES_Models_Family_Dict[family])\n \n # Transform Back\n ES_Forecasts_Family_Dict[family] = family_pipeline_dict[family].inverse_transform(forecasts_ES, partial=True)\n\n # Zero Forecasting\n for i in range(0,len(ES_Forecasts_Family_Dict[family])):\n if (training_data[i].univariate_values()[-21:] == 0).all():\n ES_Forecasts_Family_Dict[family][i] = ES_Forecasts_Family_Dict[family][i].map(lambda x: x * 0)\n \n # commented out due to long computation\n\nfor family in tqdm(family_list):\n\n sales_family = family_TS_transformed_dict[family]\n training_data = [ts[:-16] for ts in sales_family]\n\n Prophet_Models_Family_Dict[family] = ProphetModelBuilder(training_data)\n forecasts_Prophet = ProphetForecaster(Prophet_Models_Family_Dict[family])\n \n # Transform Back\n Prophet_Forecasts_Family_Dict[family] = family_pipeline_dict[family].inverse_transform(forecasts_Prophet, partial=True)\n\n # Zero Forecasting\n for i in range(0,len(Prophet_Forecasts_Family_Dict[family])):\n if (training_data[i].univariate_values()[-21:] == 0).all():\n Prophet_Forecasts_Family_Dict[family][i] = Prophet_Forecasts_Family_Dict[family][i].map(lambda x: x * 0)\n '

让我们来检查一下我们创建的16天验证集上的RMSLE得分:

# Re-Format Forecasts from Dictionaries to One List

# 创建一个空列表 forecast_list_ES

forecast_list_ES = []

# 遍历 family_list 中的每个元素 family

for family in family_list:

# 将 ES_Forecasts_Family_Dict 字典中 family 对应的值添加到 forecast_list_ES 列表中

forecast_list_ES.append(ES_Forecasts_Family_Dict[family])

# 创建一个空列表 sales_data

sales_data = []

# 遍历 family_list 中的每个元素 family

for family in family_list:

# 将 family_TS_dict 字典中 family 对应的值添加到 sales_data 列表中

sales_data.append(family_TS_dict[family])

# Function to Flatten Nested Lists

# 定义一个函数 flatten,用于将嵌套列表展开为一维列表

def flatten(l):

# 使用列表推导式将嵌套列表展开为一维列表

return [item for sublist in l for item in sublist]

# 将 sales_data 列表展开为一维列表,赋值给 actual_list

actual_list = flatten(sales_data)

# 将 forecast_list_ES 列表展开为一维列表,赋值给 pred_list_ES

pred_list_ES = flatten(forecast_list_ES)

# Mean RMSLE

# 使用 rmsle 函数计算 actual_list 和 pred_list_ES 的均值 RMSLE,将结果赋值给 ES_rmsle

ES_rmsle = rmsle(actual_series=actual_list,

pred_series=pred_list_ES,

n_jobs=-1,

inter_reduction=np.mean)

# 打印输出结果

print("\n")

print("The mean RMSLE for the Local Exponential Smoothing Models over 1782 series is {:.5f}.".format(ES_rmsle))

print('Training & Inference duration:', elapsed_time_exp, 'seconds')

print("\n")

The mean RMSLE for the Local Exponential Smoothing Models over 1782 series is 0.37411.

Training & Inference duration: 653.0645875930786 seconds

# Re-Format Forecasts from Dictionaries to One List

# 创建一个空列表 forecast_list_NS

forecast_list_NS = []

# 遍历 family_list 列表中的每一个元素

for family in tqdm(family_list):

# 将 NS_Forecasts_Family_Dict 字典中对应 family 键的值添加到 forecast_list_NS 列表中

forecast_list_NS.append(NS_Forecasts_Family_Dict[family])

# 创建一个空列表 forecast_list_ES

forecast_list_ES = []

# 遍历 family_list 列表中的每一个元素

for family in tqdm(family_list):

# 将 ES_Forecasts_Family_Dict 字典中对应 family 键的值添加到 forecast_list_ES 列表中

forecast_list_ES.append(ES_Forecasts_Family_Dict[family])

# 创建一个空列表 sales_data

sales_data = []

# 遍历 family_list 列表中的每一个元素

for family in tqdm(family_list):

# 将 family_TS_dict 字典中对应 family 键的值添加到 sales_data 列表中

sales_data.append(family_TS_dict[family])

# 定义一个函数 flatten,用于将嵌套列表展开为一维列表

def flatten(l):

# 使用列表推导式将嵌套列表展开为一维列表

return [item for sublist in l for item in sublist]

# 将 sales_data 列表展开为一维列表,并赋值给 actual_list

actual_list = flatten(sales_data)

# 将 forecast_list_NS 列表展开为一维列表,并赋值给 pred_list_NS

pred_list_NS = flatten(forecast_list_NS)

# 将 forecast_list_ES 列表展开为一维列表,并赋值给 pred_list_ES

pred_list_ES = flatten(forecast_list_ES)

# Mean RMSLE

# 调用 rmsle 函数计算 actual_list 和 pred_list_NS 的 RMSLE 值,使用 np.mean 进行内部归约,将结果赋值给 NS_rmsle

NS_rmsle = rmsle(actual_series = actual_list,

pred_series = pred_list_NS,

n_jobs = -1,

inter_reduction=np.mean)

# 调用 rmsle 函数计算 actual_list 和 pred_list_ES 的 RMSLE 值,使用 np.mean 进行内部归约,将结果赋值给 ES_rmsle

ES_rmsle = rmsle(actual_series = actual_list,

pred_series = pred_list_ES,

n_jobs = -1,

inter_reduction=np.mean)

# 打印 Naive Seasonal (K=7) Model 的平均 RMSLE 值

print("The mean RMSLE for the Naive Seasonal (K=7) Model over all 1782 series is {:.5f}.".format(NS_rmsle))

print("\n")

# 打印 Exponential Smoothing 的平均 RMSLE 值

print("The mean RMSLE for Exponential Smoothing over all 1782 series is {:.5f}.".format(ES_rmsle))

print("\n")

'\n# Mean RMSLE\n\nNS_rmsle = rmsle(actual_series = actual_list,\n pred_series = pred_list_NS,\n n_jobs = -1,\n inter_reduction=np.mean)\n\nES_rmsle = rmsle(actual_series = actual_list,\n pred_series = pred_list_ES,\n n_jobs = -1,\n inter_reduction=np.mean)\n\n#Prophet_rmsle = rmsle(actual_series = actual_list,\n# pred_series = pred_list_Prophet,\n# n_jobs = -1,\n# inter_reduction=np.mean)\n\nprint("The mean RMSLE for the Naive Seasonal (K=7) Model over all 1782 series is {:.5f}.".format(NS_rmsle))\nprint("\n")\nprint("The mean RMSLE for Exponential Smoothing over all 1782 series is {:.5f}.".format(ES_rmsle))\nprint("\n")\n#print("The mean RMSLE for Prophet over all 1782 series is {:.5f}.".format(Prophet_rmsle))\n'

指数平滑法在我们的验证数据中取得了最小的误差(RMSLE = 0.37411)!

为了进一步研究这些模型的性能,让我们打印出每个产品类别的平均RMSLE:

# Mean RMSLE for Families

# 创建一个空字典用于存储每个家庭的预测RMSLE值

family_forecast_rmsle_ES = {}

# 遍历家庭列表

for family in family_list:

# 计算家庭实际时间序列和预测时间序列的RMSLE值

ES_rmsle_family = rmsle(actual_series = family_TS_dict[family],

pred_series = ES_Forecasts_Family_Dict[family],

n_jobs = -1,

inter_reduction=np.mean)

# 将家庭和对应的RMSLE值添加到字典中

family_forecast_rmsle_ES[family] = ES_rmsle_family

# 按照RMSLE值对字典进行排序

family_forecast_rmsle_ES = dict(sorted(family_forecast_rmsle_ES.items(), key=lambda item: item[1]))

# 打印结果标题

print("\n")

print("Mean RMSLE for the 33 different product families, from worst to best:")

print("\n")

# 遍历字典中的键/值对并打印它们

for key, value in family_forecast_rmsle_ES.items():

print(key, ' : ', value)

Mean RMSLE for the 33 different product families, from worst to best:

BOOKS : 0.02651197503458817

PRODUCE : 0.16843766125266366

DAIRY : 0.17848655145201983

BABY CARE : 0.18114626716417453

BREAD/BAKERY : 0.18897676415105819

DELI : 0.2066933250933373

GROCERY I : 0.2127276035335266

POULTRY : 0.21482292812599496

MEATS : 0.21491541089879915

BEVERAGES : 0.2473325661277561

PREPARED FOODS : 0.264123423522481

PERSONAL CARE : 0.2664165041230439

HOME APPLIANCES : 0.26717466516377136

EGGS : 0.2776606798514665

FROZEN FOODS : 0.2784342567353302

HOME CARE : 0.3332755628285514

CLEANING : 0.3497135935068673

LAWN AND GARDEN : 0.3534249305533779

LIQUOR,WINE,BEER : 0.42864824751226854

LADIESWEAR : 0.44249147647648795

PLAYERS AND ELECTRONICS : 0.4470152310152792

SEAFOOD : 0.4648584971121303

PET SUPPLIES : 0.46642742684488553

HOME AND KITCHEN II : 0.47475863290616677

HOME AND KITCHEN I : 0.49530259318248976

AUTOMOTIVE : 0.5076609042415264

HARDWARE : 0.511367753289844

MAGAZINES : 0.5339185188412147

CELEBRATION : 0.5348196982287526

BEAUTY : 0.5420208134871055

GROCERY II : 0.6049866249277764

LINGERIE : 0.6661096176342214

SCHOOL AND OFFICE SUPPLIES : 0.9951124074306023

我还绘制了生成的三个最差的预测 - 也许我们可以从中学到一些东西:

# 绘制五个最差的预测结果

errorlist = [] # 创建一个空列表用于存储错误值

# 遍历actual_list中的每个元素

for i in range(0, len(actual_list)):

# 计算RMSLE错误值

error = rmsle(actual_series = actual_list[i],

pred_series = pred_list_ES[i])

# 获取当前actual_list元素的静态协变量值

errorfam = actual_list[i].static_covariates_values()[0,1]

# 将错误值和静态协变量值添加到errorlist列表中

errorlist.append([errorfam,error])

# 创建一个名为rmsle_series_ES的DataFrame,列名为'family'和'RMSLE',数据为errorlist中的值

rmsle_series_ES = pd.DataFrame(errorlist,columns=['family','RMSLE'])

# 对rmsle_series_ES按'RMSLE'列进行降序排序,并取前3个最大值

worst_3_ES = rmsle_series_ES.sort_values(by=['RMSLE'], ascending=False).head(3)

# 创建一个包含worst_3_ES个数个子图的图表,大小为(20, 5)

fig,axs = plt.subplots(1,len(worst_3_ES),figsize=(20, 5))

# 定义标签列表

labels = ["actual data", "ES forecast"]

# 遍历worst_3_ES中的每个元素

for i in range(0, len(worst_3_ES)):

# 获取ES预测结果和实际数据

plt_forecast = pred_list_ES[(worst_3_ES.index[i])]

plt_actual = actual_list[(worst_3_ES.index[i])]

# 计算当前预测结果和实际数据的RMSLE错误值

plt_err = rmsle(plt_actual, plt_forecast)

# 获取当前子图

axis = axs[i]

# 在当前子图上绘制实际数据的最后100个数据点

plt_actual[-100:].plot(ax=axis, label="actual data")

# 在当前子图上绘制ES预测结果

plt_forecast.plot(ax=axis, label="ES forecast")

# 在当前子图上添加图例,位置为左上角

axis.legend(loc="upper left")

# 设置当前子图的标题,包括静态协变量值、店铺编号和时间戳,以及RMSLE错误值

axis.title.set_text("{} in store {} ({}) \n RMSLE: {}".format(plt_forecast.static_covariates_values()[0,1],

plt_forecast.static_covariates_values()[0,0],

plt_forecast.static_covariates_values()[0,2],

plt_err))

显然,学校终于开始了;)那些预测非常糟糕,但是指数平滑模型无法预测像这样的销售突然飙升。我的希望是使用更具信息的模型,如神经网络模型,使用协变量捕捉这种(在这种情况下可能是月/周特定的)模式。

4. 全局建模

现在我们已经建立了一个坚实的基准,我们能改进我们的预测吗?我对时间序列预测的当前理解是这样的:

当处理小数据集和少量维度时,花哨/复杂的模型(我在说你,神经网络)不会带来太多好处,甚至可能没有好处。我经常发现简单的统计模型表现更好。

直观地说,这是有道理的:神经网络是高度复杂的非线性模型,不对手头的数据施加任何结构。另一方面,ARIMA、指数平滑或Prophet等统计方法涉及大量固定结构,并且不像神经网络或提升树模型那样灵活。但是,当建模一个只有几百个数据点的单变量时间序列时,我们不需要这么多的灵活性-我们的数据中没有足够的信号来建模高度复杂的关系。当涉及捕捉季节性和趋势等基本模式时,统计方法做得很好。