【AI】Swin Transformer源码解析

0. Swin Transformer简介

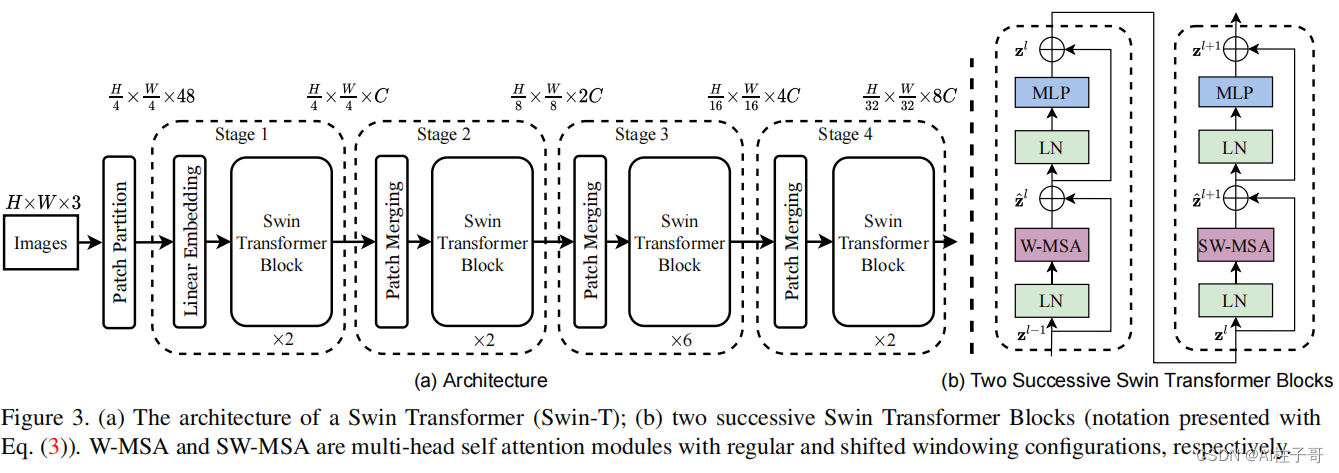

Swin Transformer指出,CV中的Token(处理单元)的大小不固定,并且数量相较于NLP要多。为解决这两个问题,Swin Transformer不仅使用了分层结构(金字塔结构),同时还提出了一种线性复杂度的Attention计算,最终得到了几种不同大小的结构。

看图说话:

(1)使用了类似卷积神经网络中的层次化构建方法,通过金字塔分层结构和下采样拓宽了感受野,这样的backbone有助于在此基础上构建目标检测,实例分割等任务

(2)在Swin Transformer中使用了Windows Multi-Head Self-Attention(W-MSA)的概念,将特征图划分成了多个不相交的区域(Window),Multi-Head Self-Attention只在每个窗口(Window)内进行。这样的结构可以减少计算量,但也会导致不同window中的结果缺少关联性,所以又添加了滑动窗口的Windows Multi-Head Self-Attention(W-MSA)

所以Swin Transform 主要的要点就是Patch Merging, W-MSA, SW-MSA, 相对位置编码这四个方面。

Source code:

https://github.com/microsoft/Swin-Transformer

1.源码解析

由Swin Tranformer的网络结构图我们可以看出来数据处理流程:Patch Embeding -> Basic Layer (SwinTransBlock * n -> Patch Merging) -> avgpool -> mlp head

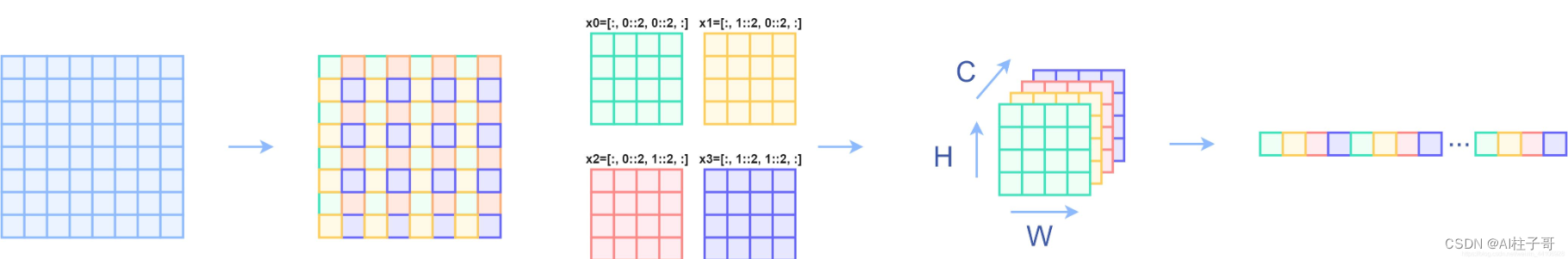

PatchEmbedding

一张图展示数据处理的方式:

class PatchEmbed(nn.Module):

r""" Image to Patch Embedding

Args:

img_size (int): Image size. Default: 224.

patch_size (int): Patch token size. Default: 4.

in_chans (int): Number of input image channels. Default: 3.

embed_dim (int): Number of linear projection output channels. Default: 96.

norm_layer (nn.Module, optional): Normalization layer. Default: None

"""

def __init__(self, img_size=224, patch_size=4, in_chans=3, embed_dim=96, norm_layer=None):

super().__init__()

img_size = to_2tuple(img_size)

patch_size = to_2tuple(patch_size)

patches_resolution = [img_size[0] // patch_size[0], img_size[1] // patch_size[1]]

self.img_size = img_size

self.patch_size = patch_size

self.patches_resolution = patches_resolution

self.num_patches = patches_resolution[0] * patches_resolution[1]

self.in_chans = in_chans

self.embed_dim = embed_dim

self.proj = nn.Conv2d(in_chans, embed_dim, kernel_size=patch_size, stride=patch_size)

if norm_layer is not None:

self.norm = norm_layer(embed_dim)

else:

self.norm = None

def forward(self, x):

B, C, H, W = x.shape

# FIXME look at relaxing size constraints

assert H == self.img_size[0] and W == self.img_size[1], \

f"Input image size ({H}*{W}) doesn't match model ({self.img_size[0]}*{self.img_size[1]})."

x = self.proj(x).flatten(2).transpose(1, 2) # B Ph*Pw C

if self.norm is not None:

x = self.norm(x)

return x

def flops(self):

Ho, Wo = self.patches_resolution

flops = Ho * Wo * self.embed_dim * self.in_chans * (self.patch_size[0] * self.patch_size[1])

if self.norm is not None:

flops += Ho * Wo * self.embed_dim

return flops

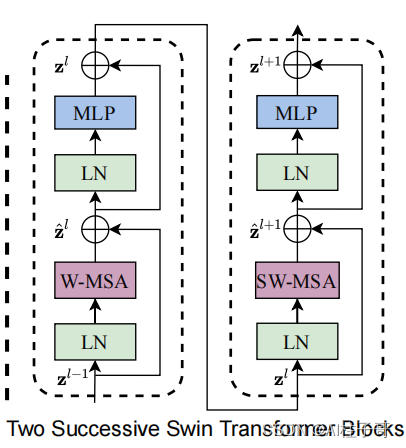

Swin Transformer Block

这里的LN和MLP层都是比较熟悉的处理方式,不同于VIT的是,这里将Multi-head Attention换成了W-MSA和SW-MSA两种结构,这里的W就是Swin中的window,SW就是shift-window,是我们需要着重了解的。

class SwinTransformerBlock(nn.Module):

r""" Swin Transformer Block.

Args:

dim (int): Number of input channels.

input_resolution (tuple[int]): Input resulotion.

num_heads (int): Number of attention heads.

window_size (int): Window size.

shift_size (int): Shift size for SW-MSA.

mlp_ratio (float): Ratio of mlp hidden dim to embedding dim.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set.

drop (float, optional): Dropout rate. Default: 0.0

attn_drop (float, optional): Attention dropout rate. Default: 0.0

drop_path (float, optional): Stochastic depth rate. Default: 0.0

act_layer (nn.Module, optional): Activation layer. Default: nn.GELU

norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm

"""

def __init__(self, dim, input_resolution, num_heads, window_size=7, shift_size=0,

mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0., drop_path=0.,

act_layer=nn.GELU, norm_layer=nn.LayerNorm):

super().__init__()

self.dim = dim

self.input_resolution = input_resolution

self.num_heads = num_heads

self.window_size = window_size

self.shift_size = shift_size

self.mlp_ratio = mlp_ratio

#首先确定shift_size是多少,如果输入的分辨率小于窗口尺寸,wmsa和msa等价,因此shift设为0,窗口调整为input大小。

if min(self.input_resolution) <= self.window_size:

# if window size is larger than input resolution, we don't partition windows

self.shift_size = 0

self.window_size = min(self.input_resolution)

assert 0 <= self.shift_size < self.window_size, "shift_size must in 0-window_size"

self.norm1 = norm_layer(dim)

self.attn = WindowAttention(

dim, window_size=to_2tuple(self.window_size), num_heads=num_heads,

qkv_bias=qkv_bias, qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)

self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

self.norm2 = norm_layer(dim)

mlp_hidden_dim = int(dim * mlp_ratio)

self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, act_layer=act_layer, drop=drop)

if self.shift_size > 0:

# calculate attention mask for SW-MSA

H, W = self.input_resolution

img_mask = torch.zeros((1, H, W, 1)) # 1 H W 1

#slice 截取固定数量的元素

h_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

w_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

#给每个window编号

cnt = 0

for h in h_slices:

for w in w_slices:

img_mask[:, h, w, :] = cnt

cnt += 1

#mask window也进window切分部分,执行roll等操作。

mask_windows = window_partition(img_mask, self.window_size) # nW, window_size, window_size, 1

mask_windows = mask_windows.view(-1, self.window_size * self.window_size)

#通过广播操作相减,使得index相同的部分attn_mask上为0,后续根据attn_mask上的值处理

attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)

attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))

else:

attn_mask = None

#定义一个不可学习的变量,attn_mask为属性名。

self.register_buffer("attn_mask", attn_mask)

def forward(self, x):

H, W = self.input_resolution

B, L, C = x.shape

assert L == H * W, "input feature has wrong size"

shortcut = x

x = self.norm1(x)

x = x.view(B, H, W, C)

# cyclic shift 这里使用shift_size就是区分W-MSA和SW-MSA的结构

if self.shift_size > 0:

shifted_x = torch.roll(x, shifts=(-self.shift_size, -self.shift_size), dims=(1, 2))

else:

shifted_x = x

# partition windows

x_windows = window_partition(shifted_x, self.window_size) # nW*B, window_size, window_size, C

x_windows = x_windows.view(-1, self.window_size * self.window_size, C) # nW*B, window_size*window_size, C

# W-MSA/SW-MSA

attn_windows = self.attn(x_windows, mask=self.attn_mask) # nW*B, window_size*window_size, C

# merge windows

attn_windows = attn_windows.view(-1, self.window_size, self.window_size, C)

shifted_x = window_reverse(attn_windows, self.window_size, H, W) # B H' W' C

# reverse cyclic shift

if self.shift_size > 0:

x = torch.roll(shifted_x, shifts=(self.shift_size, self.shift_size), dims=(1, 2))

else:

x = shifted_x

x = x.view(B, H * W, C)

# FFN

x = shortcut + self.drop_path(x)

x = x + self.drop_path(self.mlp(self.norm2(x)))

return x

def extra_repr(self) -> str:

return f"dim={self.dim}, input_resolution={self.input_resolution}, num_heads={self.num_heads}, " \

f"window_size={self.window_size}, shift_size={self.shift_size}, mlp_ratio={self.mlp_ratio}"

def flops(self):

flops = 0

H, W = self.input_resolution

# norm1

flops += self.dim * H * W

# W-MSA/SW-MSA

nW = H * W / self.window_size / self.window_size

flops += nW * self.attn.flops(self.window_size * self.window_size)

# mlp

flops += 2 * H * W * self.dim * self.dim * self.mlp_ratio

# norm2

flops += self.dim * H * W

return flops

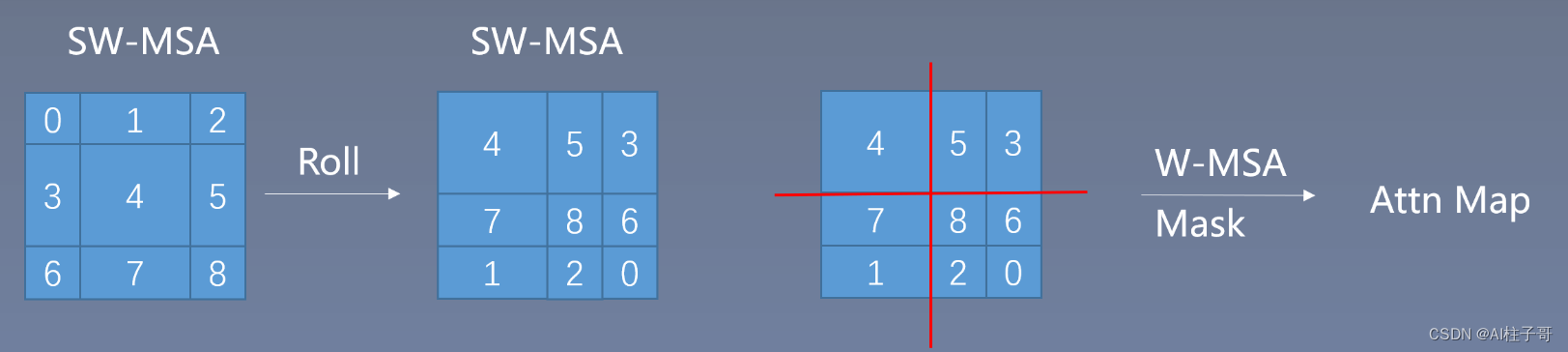

SW-MSA处理方式

可以通过roll的方式将切碎的小window从左上角挪动到有右下角,拼一起,计算时候把不同window间的值mask掉,计算完成后再roll回来。

这里的操作源码写的比较精炼,这里的shift是负数,但roll回来的时候,就变为正值了

shifted_x = torch.roll(x, shifts=(-self.shift_size, -self.shift_size), dims=(1, 2))

相对位置编码实现

coords_h = torch.arange(self.window_size[0])

coords_w = torch.arange(self.window_size[1])

#meshgrid,生成网格,沿着x,y轴的方向复制coords_h和coords_w

coords = torch.stack(torch.meshgrid([coords_h, coords_w])) # 2, Wh, Ww

coords_flatten = torch.flatten(coords, 1) # 2, Wh*Ww

relative_coords = coords_flatten[:, :, None] - coords_flatten[:, None, :] # 2, Wh*Ww, Wh*Ww

relative_coords = relative_coords.permute(1, 2, 0).contiguous() # Wh*Ww, Wh*Ww, 2

relative_coords[:, :, 0] += self.window_size[0] - 1 # shift to start from 0

relative_coords[:, :, 1] += self.window_size[1] - 1

relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1

relative_position_index = relative_coords.sum(-1) # Wh*Ww, Wh*Ww

self.register_buffer("relative_position_index", relative_position_index)

mask的实现形式

if self.shift_size > 0:

# calculate attention mask for SW-MSA

H, W = self.input_resolution

img_mask = torch.zeros((1, H, W, 1)) # 1 H W 1

#slice 截取固定数量的元素

h_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

w_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

#给每个window编号

cnt = 0

for h in h_slices:

for w in w_slices:

img_mask[:, h, w, :] = cnt

cnt += 1

#mask window也进window切分部分,执行roll等操作。

mask_windows = window_partition(img_mask, self.window_size) # nW, window_size, window_size, 1

mask_windows = mask_windows.view(-1, self.window_size * self.window_size)

#通过广播操作相减,使得index相同的部分attn_mask上为0,后续根据attn_mask上的值处理

attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)

attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))

else:

attn_mask = None

参考文章:

Swin-Transformer网络结构详解

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:chenni525@qq.com进行投诉反馈,一经查实,立即删除!

- Python教程

- 深入理解 MySQL 中的 HAVING 关键字和聚合函数

- Qt之QChar编码(1)

- MyBatis入门基础篇

- 用Python脚本实现FFmpeg批量转换

- 豆瓣小组爬虫

- 第二篇【传奇开心果短博文系列】Python的OpenCV库技术点案例示例:图像处理

- 【SpringBoot篇】解决缓存击穿问题① — 基于互斥锁方式

- 深入理解依赖反转原则(DIP)

- Linux查找命令(which命令、find命令)

- 解决MySQL数据库IN查询不根据用的ID顺序进行返回数据

- 力扣精选算法100题——在排序数组中查找元素的第一个和最后一个位置(二分查找专题)

- 快速排序非递归实现

- 推荐系统中 排序策略 CTR 预估加权平均法

- linux 安装 pg 数据库(亲测有效)