Risk modeling: an end-to-end workflow overview

Published: 2024-01-22

1. Load and explore the data

1. Data exploration

import math

import numpy as np
import pandas as pd  # needed for pd.read_csv and pd.concat below
import toad
import xgboost as xgb
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score, roc_curve, auc
from sklearn.model_selection import train_test_split

df = pd.read_csv("scorecard.txt")

# Exploratory data analysis
toad.detector.detect(df)

2. Sample splitting

ex_lis = ['uid', 'samp_type', 'label']  # columns excluded from training
ft_lis = list(df.columns)  # columns used for training
for i in ex_lis:
    ft_lis.remove(i)

# Development, validation, and out-of-time samples
dev = df[df['samp_type'] == 'dev']
val = df[df['samp_type'] == 'val']
off = df[df['samp_type'] == 'off']

2. Feature selection

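Features can be screened by missing rate, IV, and correlation coefficient. However, the later modeling steps bin the variables, which lowers each variable's IV and raises the correlation between variables. The IV and correlation thresholds can therefore be relaxed somewhat at this stage, or left unrestricted.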
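To make the IV criterion concrete, here is a minimal sketch in plain Python (no toad required). It assumes the common definition IV = Σ (bad% − good%) · ln(bad% / good%) with label 1 meaning "bad"; the function name `information_value`, the `eps` clipping, and the toy data are illustrative assumptions, not part of the original workflow. It also shows the point made above: merging bins lowers IV.

```python
import math
from collections import Counter


def information_value(bins, labels, eps=1e-6):
    """IV of one binned feature; labels use 1 = bad, 0 = good."""
    bad = Counter(b for b, y in zip(bins, labels) if y == 1)
    good = Counter(b for b, y in zip(bins, labels) if y == 0)
    n_bad, n_good = sum(bad.values()), sum(good.values())
    iv = 0.0
    for b in set(bins):
        # Share of all bad / good samples that fall into this bin,
        # clipped at eps so that an empty cell does not produce log(0).
        p_bad = max(bad[b] / n_bad, eps)
        p_good = max(good[b] / n_good, eps)
        iv += (p_bad - p_good) * math.log(p_bad / p_good)
    return iv


# Toy data (hypothetical): 4 fine bins with 4 samples each.
fine_bins = [0] * 4 + [1] * 4 + [2] * 4 + [3] * 4
labels = [1, 1, 1, 0,  1, 1, 0, 0,  1, 0, 0, 0,  1, 0, 0, 0]

# Merge bins pairwise: {0, 1} -> 0 and {2, 3} -> 1.
coarse_bins = [b // 2 for b in fine_bins]

iv_fine = information_value(fine_bins, labels)
iv_coarse = information_value(coarse_bins, labels)
print(f"IV with 4 bins: {iv_fine:.3f}, IV with 2 bins: {iv_coarse:.3f}")
```

Because coarser bins can only lose information about the label, a threshold such as `iv=0.03` applied before binning is stricter than it looks once the variables have been binned.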

dev_slct1, drop_lst = toad.selection.select(
    dev, dev['label'],
    empty=0.7, iv=0.03, corr=0.7,
    return_drop=True, exclude=ex_lis)

print("keep:", dev_slct1.shape[1],
      "drop empty:", len(drop_lst['empty']),
      "drop iv:", len(drop_lst['iv']),
      "drop corr:", len(drop_lst['corr']))
# keep: 12; drop empty: 0; drop iv: 1; drop corr: 0

3. Chi-square binning

combiner = toad.transform.Combiner()  # learns the split points
combiner.fit(dev_slct1, dev_slct1['label'], method='chi', min_samples=0.05, exclude=ex_lis)
bins = combiner.export()  # export the split points of each feature
print(bins)

Output:

{
    'td_score': [0.7989831262724624],
    'jxl_score': [0.4197048501965005],
    'mj_score': [0.3615303943747963],
    'zzc_score': [0.4469861520889339],
    'zcx_score': [0.7007847486465795],
    'person_info': [-0.2610139784946237, -0.1286774193548387, -0.05371756272401434, 0.013863440860215051, 0.06266021505376344, 0.07885304659498207],
    'finance_info': [0.047619047619047616],
    'credit_info': [0.02, 0.04, 0.11],
    'act_info': [0.1153846153846154, 0.14102564102564102, 0.16666666666666666, 0.20512820512820512, 0.2692307692307692, 0.35897435897435903, 0.3974358974358974, 0.5256410256410257]
}

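4. Bivar plots and bin adjustment

Plot the Bivar chart of each variable on the development and out-of-time samples and compare them. The single variable act_info is used as the example here.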

dev_slct2 = combiner.transform(dev_slct1)  # apply the binning using the learned split points
val2 = combiner.transform(val[dev_slct1.columns])
off2 = combiner.transform(off[dev_slct1.columns])

# Inspect the binned variable visually
from toad.plot import bin_plot, badrate_plot

bin_plot(dev_slct2, x='act_info', target='label')
bin_plot(val2, x='act_info', target='label')
bin_plot(off2, x='act_info', target='label')

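The trend across the first 3 bins does not follow the overall trend, which is decreasing, so these bins need to be merged. Bins 4 to 6 are merged as well, and so are the last 3 bins, which yields a strictly decreasing trend.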

print(bins['act_info'])
# [0.115,0.141,...,0.525]

# Keep only two split points, so that the 9 original bins collapse into 3
adj_bin = {'act_info': [0.166, 0.3589]}
combiner.set_rules(adj_bin)

dev_slct3 = combiner.transform(dev_slct1)
val3 = combiner.transform(val[dev_slct1.columns])
off3 = combiner.transform(off[dev_slct1.columns])

# Draw the Bivar plots again
bin_plot(dev_slct3, x='act_info', target='label')
bin_plot(val3, x='act_info', target='label')
bin_plot(off3, x='act_info', target='label')

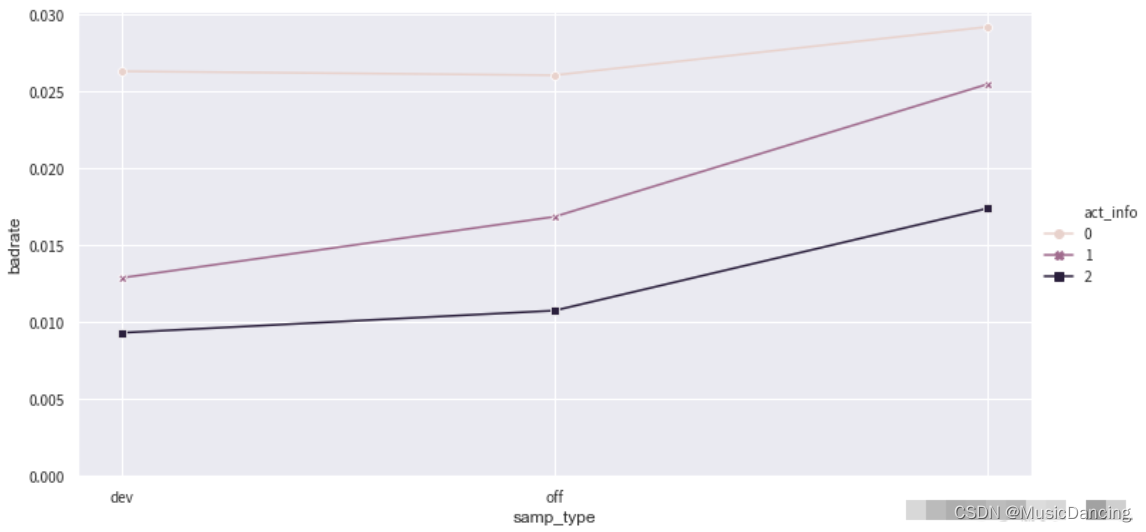
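The check that the Bivar plot supports by eye can also be sketched numerically: compute the bad rate per bin and test whether it is strictly decreasing. The sketch below is plain Python and does not call toad. The helper names, the toy values and labels, and the extra fine split point 0.11 are hypothetical; only the adjusted split points `[0.166, 0.3589]` come from the text. It assumes a value equal to a split point falls into the upper bin.

```python
from bisect import bisect_right


def bad_rate_by_bin(values, labels, splits):
    """Bad rate (mean of the 0/1 label) in each bin defined by sorted split points."""
    n_bins = len(splits) + 1
    total = [0] * n_bins
    bad = [0] * n_bins
    for x, y in zip(values, labels):
        k = bisect_right(splits, x)  # index of the bin that contains x
        total[k] += 1
        bad[k] += y
    return [b / t if t else float("nan") for b, t in zip(bad, total)]


def is_strictly_decreasing(rates):
    """True when every bin has a lower bad rate than the bin before it."""
    return all(a > b for a, b in zip(rates, rates[1:]))


# Toy data (hypothetical), 12 samples sorted by feature value.
values = [0.05, 0.10, 0.12, 0.15, 0.20, 0.25, 0.30, 0.33, 0.40, 0.50, 0.60, 0.70]
labels = [0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

fine_rates = bad_rate_by_bin(values, labels, [0.11, 0.166, 0.3589])
merged_rates = bad_rate_by_bin(values, labels, [0.166, 0.3589])

print("fine bins:  ", fine_rates, is_strictly_decreasing(fine_rates))
print("merged bins:", merged_rates, is_strictly_decreasing(merged_rates))
```

In this toy data the first two fine bins break the decreasing trend, and merging them restores it, which is the same kind of adjustment applied to act_info above.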

5. Plot the bad rate across sample types

data = pd.concat([dev_slct3, val3, off3], join='inner')
badrate_plot(data, x='samp_type', target='label', by='act_info')

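The lines in the plot do not cross, so the bins of this feature do not need to be merged. Even a small amount of crossing would not noticeably affect the result; adjust the bins only when the misordering is severe.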
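As a rough numeric counterpart to reading the plot, the sketch below computes the bad rate for every (sample type, bin) pair and reports whether the ranking of the bins changes from one sample type to another, which is what crossing lines mean. It is plain Python with hypothetical helper names and toy labels, and it is only one possible way to formalize "crossing"; it says nothing about how severe a crossing is.

```python
from collections import defaultdict


def bad_rate_table(rows):
    """rows: iterable of (samp_type, bin_id, label) with label 1 = bad, 0 = good."""
    total, bad = defaultdict(int), defaultdict(int)
    for samp_type, bin_id, label in rows:
        total[(samp_type, bin_id)] += 1
        bad[(samp_type, bin_id)] += label
    return {key: bad[key] / total[key] for key in total}


def lines_cross(table, samples, bins):
    """True when the bins are not ranked identically by bad rate in every sample."""
    orders = {tuple(sorted(bins, key=lambda b: table[(s, b)])) for s in samples}
    return len(orders) > 1


def make_rows(spec):
    """Expand {(samp_type, bin_id): [labels]} into a flat list of rows."""
    return [(s, b, y) for (s, b), ys in spec.items() for y in ys]


samples, bins = ["dev", "val", "off"], [0, 1]

# Toy data (hypothetical): bin 0 is always riskier than bin 1.
stable = make_rows({
    ("dev", 0): [1, 1, 1, 0], ("dev", 1): [1, 0, 0, 0],
    ("val", 0): [1, 1, 0, 0], ("val", 1): [1, 0, 0, 0],
    ("off", 0): [1, 1, 1, 1], ("off", 1): [1, 1, 0, 0],
})
# Same data, except that the ranking flips on the out-of-time sample.
crossed = make_rows({
    ("dev", 0): [1, 1, 1, 0], ("dev", 1): [1, 0, 0, 0],
    ("val", 0): [1, 1, 0, 0], ("val", 1): [1, 0, 0, 0],
    ("off", 0): [0, 0, 0, 0], ("off", 1): [1, 1, 0, 0],
})

stable_cross = lines_cross(bad_rate_table(stable), samples, bins)
crossed_cross = lines_cross(bad_rate_table(crossed), samples, bins)
print("stable data crosses: ", stable_cross)
print("crossed data crosses:", crossed_cross)
```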

6. WOE encoding and IV check

Compute the PSI between the training sample and the test sample.

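t = toad.transform.WOETransformer()
dev_slct3_woe = t.fit_transform(dev_slct3, dev_slct3['label'], exclude=ex_lis)
val_woe = t.transform(val3[dev_slct3.columns])
off_woe = t.transform(off3[dev_slct3.columns])
data = pd.concat([dev_slct3_woe, val_woe, off_woe])

# PSI per column between the development and validation samples, sorted ascending.
# Series.sort_values() takes no positional axis argument in pandas 2.x.
psi_df = toad.metrics.PSI(dev_slct3_woe, val_woe).sort_values()
psi_df = psi_df.reset_index()
psi_df = psi_df.rename(columns={'index': 'feature', 0: 'psi'})
print(psi_df)

As a rule of thumb, the PSI of a single feature should stay below 0.1; the threshold can be adjusted to the situation at hand.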

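- Drop the features whose PSI is greater than 0.13.
- The data in this case is demo data, and the PSI of its variables is generally large, so 0.13 is chosen as the threshold.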
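For reference, the PSI of one binned (or WOE-encoded) feature can be sketched in plain Python as Σ (actual% − expected%) · ln(actual% / expected%) over the distinct values. This is a minimal illustration, not toad's implementation: the function name `psi`, the `eps` clipping, the feature names, and the toy distributions are assumptions, and only the 0.13 threshold comes from the text.

```python
import math
from collections import Counter


def psi(expected, actual, eps=1e-6):
    """Population stability index between two samples of one discrete feature."""
    e_cnt, a_cnt = Counter(expected), Counter(actual)
    value = 0.0
    for k in set(e_cnt) | set(a_cnt):
        # Share of each sample that falls into category k, clipped at eps
        # so that a category missing from one sample does not produce log(0).
        e = max(e_cnt[k] / len(expected), eps)
        a = max(a_cnt[k] / len(actual), eps)
        value += (a - e) * math.log(a / e)
    return value


# Toy data (hypothetical): bin indices of two features on dev and val.
dev_sample = [0] * 50 + [1] * 50
psi_by_feature = {
    "stable_feature": psi(dev_sample, [0] * 60 + [1] * 40),
    "shifted_feature": psi(dev_sample, [0] * 80 + [1] * 20),
}

# Keep only the features whose PSI does not exceed the chosen threshold.
threshold = 0.13
kept = [name for name, value in psi_by_feature.items() if value <= threshold]
print(psi_by_feature)
print("kept:", kept)
```

A mild 50/50 to 60/40 shift stays well under the threshold, while a 50/50 to 80/20 shift exceeds it and the feature is dropped.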

Source: https://blog.csdn.net/MusicDancing/article/details/135749773
